AlphaGo is the deep learning program that can beat humans at the game of Go. You can read Google’s highly technical paper on it, but you’ll have to wade through some very academic language. [Aman Agarwal] has done us a favor. He took the original paper and dissected the important parts of it in plain English. If the title doesn’t make sense to you, you need to read more XKCD.

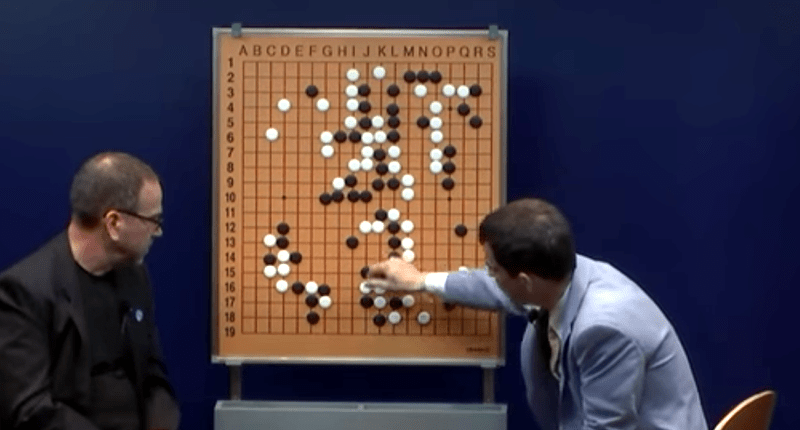

[Aman] says his treatment will be useful for anyone who doesn’t want to become an expert on neural networks but still wants to understand this important breakthrough. He also thinks people who don’t have English as a first language may find his analysis useful. By the way, the actual Go matches where AlphaGo beat [Lee Sedol] were streamed and you can watch all the replays on YouTube (the first match appears below).

Interestingly, the explanation doesn’t assume you know how to play Go, but it does presuppose you have an understanding of some kind of two-player board game. As an example of the kind of language you’ll find in the original paper (which is linked in the post), you might see:

The policy network is trained on randomly sampled state-action pairs (s,a) using stochastic gradient ascent to maximize the likelihood of the human move a selected in state s.

This is followed by some math equations. The post explains stochastic gradient ascent and even contrasts it with stochastic gradient descent, the more common optimization technique used alongside backpropagation.
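To make that quoted sentence a little more concrete, here is a minimal Python sketch of stochastic gradient ascent on a softmax policy. It is not the paper's network: the three-move position, the `expert_moves` sample, the learning rate, and the function names are all assumptions made up for illustration. Each step picks one expert move at random and nudges the scores so that move becomes more likely.

```python
import math
import random

def softmax(logits):
    """Turn raw scores into a probability distribution over moves."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sga_step(logits, expert_move, learning_rate=0.05):
    """One stochastic gradient ascent step on log p(expert_move).

    For a softmax policy, the gradient of log p(a) with respect to
    score i is (1 if i == a else 0) - p_i. Ascent ADDS that gradient.
    """
    probs = softmax(logits)
    return [
        x + learning_rate * ((1.0 if i == expert_move else 0.0) - p)
        for i, (x, p) in enumerate(zip(logits, probs))
    ]

def train(expert_moves, num_moves, steps=2000, seed=0):
    """Repeatedly sample one expert move and take one ascent step."""
    rng = random.Random(seed)
    logits = [0.0] * num_moves
    for _ in range(steps):
        move = rng.choice(expert_moves)  # the random sample is the "stochastic" part
        logits = sga_step(logits, move)
    return softmax(logits)

# Hypothetical data: in one fixed position, experts mostly chose move 2.
expert_moves = [2] * 7 + [0] * 2 + [1] * 1
policy = train(expert_moves, num_moves=3)
print([round(p, 2) for p in policy])
```

Gradient descent on the negative log-likelihood would produce the same update. Ascent climbs the quantity you want to be large, while descent walks down the quantity you want to be small.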

We have to say, we’d like to see more academic papers taken apart like this for people who are interested but not experts in that field. We covered the AlphaGo match at the time. Personally, we are always suckers for a good chess computer.

I’m working on a robot and neural network that can beat humans at Calvinball.

It didn’t go very well. I think humanity’s future is secured with Calvinball.

Was it just beating humans?

Sometimes Calvinball involves “beating” humans… senseless.

B^)

Coincidentally, Humble Bundle has an A.I. bundle that might interest article readers.

TL;DR

There are two neural networks.

One network is shown a million games and told to remember expert moves.

Another network is looking at the first network and told to remember whether any of those moves lead to victory.

AlphaGo first looks at the board, then asks network #1 what are the expert moves. It selects some of them at random, plays each case forwards a couple moves, and then asks network #2 which branch has a better chance for victory. Then it selects the strongest candidate.
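As a rough sketch of that loop, here is a toy version in Python. It uses a small subtraction game instead of Go (take 1 to 3 stones, whoever takes the last stone wins), and both "networks" are hand-written stand-ins. The game, `policy_network`, `value_network`, and every parameter are assumptions for illustration only, not AlphaGo's actual search.

```python
import random

def legal_moves(stones):
    return [m for m in (1, 2, 3) if m <= stones]

def policy_network(stones):
    """Stand-in for network #1: a probability for each candidate move."""
    moves = legal_moves(stones)
    return {m: 1.0 / len(moves) for m in moves}

def value_network(stones):
    """Stand-in for network #2: win chance for the player about to move."""
    # In this toy game a pile divisible by 4 is lost for the player to move.
    return 0.1 if stones % 4 == 0 else 0.9

def evaluate_branch(stones, move, depth, rng):
    """Play `move`, follow the policy for up to `depth` more moves, then
    score the resulting position from the original player's point of view."""
    stones -= move
    our_turn = False  # we just moved, so the opponent is next
    for _ in range(depth):
        if stones == 0:
            break
        priors = policy_network(stones)
        reply = rng.choices(list(priors), weights=list(priors.values()))[0]
        stones -= reply
        our_turn = not our_turn
    if stones == 0:
        # Whoever moved last took the final stone and won.
        return 0.0 if our_turn else 1.0
    estimate = value_network(stones)
    return estimate if our_turn else 1.0 - estimate

def choose_move(stones, num_candidates=2, depth=2, rng=None):
    rng = rng or random.Random()
    priors = policy_network(stones)
    moves = list(priors)
    # Ask network #1 for moves and sample a few of them at random.
    sampled = set(rng.choices(moves, weights=[priors[m] for m in moves],
                              k=num_candidates))
    # Play each one forward, ask network #2 to score it, keep the best.
    scores = {m: evaluate_branch(stones, m, depth, rng) for m in sampled}
    return max(scores, key=scores.get)

print(choose_move(10, rng=random.Random(1)))
```

With `depth=0` the choice depends only on the value stand-in, which makes the behavior easy to check by hand: from 5 stones, taking 1 leaves the opponent on a pile of 4.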

To make AlphaGo superior to everyone else, the researchers take neural network #1, copy it multiple times over, apply random variation, and then make the copies play against each other millions and millions of times. This way the networks discover novel strategies by random walk and artificial selection.
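Taken literally, that description (copy, vary at random, play, keep the winners) can be sketched in a few lines of Python. This follows the summary's framing rather than the paper, which as far as I can tell improves the network with policy-gradient updates from self-play instead of random mutation. The toy subtraction game, the weight-table "policy", and every name and parameter below are assumptions for illustration.

```python
import random

PILE = 10  # hypothetical starting pile: take 1 to 3 stones, last stone wins

def new_policy():
    # weights[r][m - 1]: preference for taking m stones when pile % 4 == r
    return [[1.0, 1.0, 1.0] for _ in range(4)]

def mutate(policy, rng, scale=0.5):
    """Copy a policy and apply random variation to every weight."""
    return [[max(0.01, w + rng.uniform(-scale, scale)) for w in row]
            for row in policy]

def pick(policy, stones, rng):
    moves = [m for m in (1, 2, 3) if m <= stones]
    weights = [policy[stones % 4][m - 1] for m in moves]
    return rng.choices(moves, weights=weights)[0]

def play(first, second, rng):
    """Return 0 if `first` takes the last stone, otherwise 1."""
    players, stones, turn = (first, second), PILE, 0
    while True:
        stones -= pick(players[turn], stones, rng)
        if stones == 0:
            return turn
        turn = 1 - turn

def a_beats_b(a, b, game_index, rng):
    # Alternate who moves first so neither copy gets a built-in edge.
    if game_index % 2 == 0:
        return play(a, b, rng) == 0
    return play(b, a, rng) == 1

def evolve(generations=200, population=8, games=10, seed=0):
    """`population` must be even so the copies can be paired off."""
    rng = random.Random(seed)
    pool = [mutate(new_policy(), rng) for _ in range(population)]
    for _ in range(generations):
        rng.shuffle(pool)
        survivors = []
        for a, b in zip(pool[::2], pool[1::2]):
            wins_a = sum(a_beats_b(a, b, g, rng) for g in range(games))
            survivors.append(a if wins_a * 2 >= games else b)
        # Winners stay, and each one also contributes a mutated copy.
        pool = survivors + [mutate(p, rng) for p in survivors]
    return pool

pool = evolve(generations=20)
```

Nothing here tells a copy which moves are good. Whatever improvement appears comes only from which copies survive their matches.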

This is where they beat human players, because by training the network for the real-time equivalent of 800 human lifetimes, they randomly discover moves that human players have never seen.

Hi Luke, this is Aman (I wrote the AlphaGo explanation on Medium).

I love, love, love your tl;dr. Would you like to get in touch with me and collaborate with me on a future essay similar to the one I wrote on AlphaGo?

As with all “computer beats human at [game]” stories, there is now so much computing power to put behind a simple set of rules that I’m surprised it still makes news.

But can a single one of these computer driven ‘expert gamers’ do a SINGLE other thing? Nope. Let alone do the myriad of things a human can and does do every single day.

Still, I suppose AI has to start somewhere.

Kind of like…expert systems?

Hey, this is Aman – thanks for writing this article. Appreciate the commentary.