If you’ve ever written any Python at all, the chances are you’ve used iterators without even realising it. Writing your own and using them in your programs can provide significant performance improvements, particularly when handling large datasets or running in an environment with limited resources. They can also make your code more elegant and give you “Pythonic” bragging rights.

Here we’ll walk through the details and show you how to roll your own, illustrating along the way just why they’re useful.

You’re probably familiar with looping over objects in Python using English-style syntax like this:

people = [['Sam', 19], ['Laura', 34], ['Jona', 23]]

for name, age in people:

...

info_file = open('info.txt')

for line in info_file:

...

hundred_squares = [x**2 for x in range(100)]

", ".join(["Punctuated", "by", "commas"])

These kind of statements are possible due to the magic of iterators. To explain the benefits of being able to write your own iterators, we first need to dive into some details and de-mystify what’s actually going on.

Iterators and Iterables

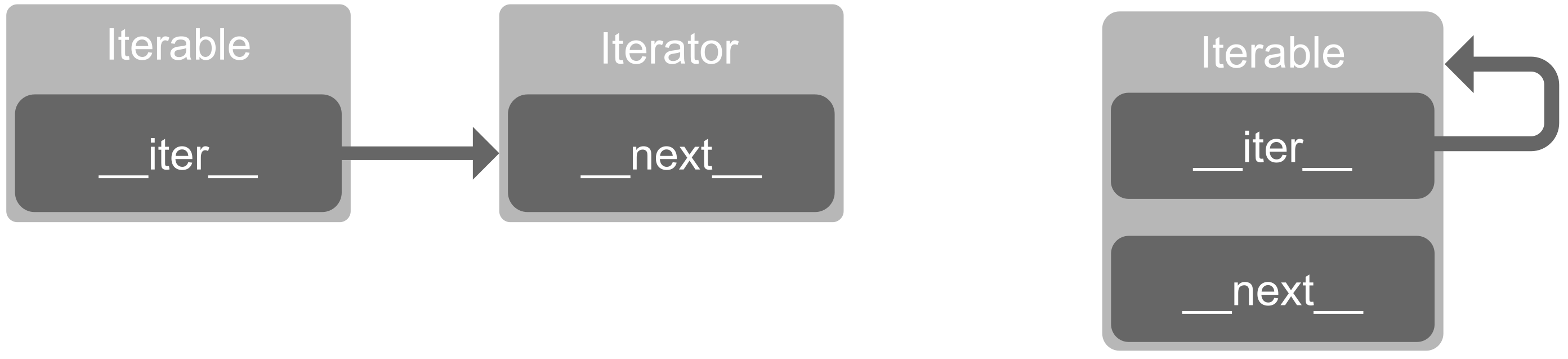

Iterators and iterables are two different concepts. The definitions seem finickity, but they’re well worth understanding as they will make everything else much easier, particularly when we get to the fun of generators. Stay with us!

Iterators

An iterator is an object which represents a stream of data. More precisely, an object that has a __next__ method. When you use a for-loop, list comprehension or anything else that iterates over an object, in the background the __next__ method is being called on an iterator.

Ok, so let’s make an example. All we have to do is create a class which implements __next__. Our iterator will just spit out multiples of a specified number.

class Multiple:

def __init__(self, number):

self.number = number

self.counter = 0

def __next__(self):

self.counter += 1

return self.number * self.counter

if __name__ == '__main__':

m = Multiple(463)

print(next(m))

print(next(m))

print(next(m))

print(next(m))

When this code is run, it produces the following output:

$ python iterator_test.py 463 926 1389 1852

Let’s take a look at what’s going on. We made our own class and defined a __next__ method, which returns a new iteration every time it’s called. An iterator always has to keep a record of where it is in the sequence, which we do using self.counter. Instead of calling the object’s __next__ method, we called next on the object. This is the recommended way of doing things since it’s nicer to read as well as being more flexible.

Cool. But if we try to use this in a for-loop instead of calling next manually, we’ll discover something’s amiss.

if __name__ == '__main__':

for number in Multiple(463):

print(number)

$ python iterator_test.py

Traceback (most recent call last):

File "iterator_test.py", line 11, in <module>

for number in Multiple(463):

TypeError: 'Multiple' object is not iterable

What? Not iterable? But it’s an iterator!

This is where the difference between iterators and iterables becomes apparent. The for loop we wrote above expected an iterable.

Iterables

An iterable is something which is able to iterate. In practice, an iterable is an object which has an __iter__ method, which returns an iterator. This seems like a bit of a strange idea, but it does make for a lot of flexibility; let us explain why.

When __iter__ is called on an object, it must return an iterator. That iterator can be an external object which can be re-used between different iterables, or the iterator could be self. That’s right: an iterable can simply return itself as the iterator! This makes for an easy way to write a compact jack-of-all-trades class which does everything we need it to.

To clarify: strings, lists, files, and dictionaries are all examples of iterables. They are datatypes in their own right, but will all automatically play nicely if you try and loop over them in any way because they return an iterator on themselves.

With this in mind, let’s patch up our Multiple example, by simply adding an __iter__ method that returns self.

class Multiple:

def __init__(self, number):

self.number = number

self.counter = 0

def __iter__(self):

return self

def __next__(self):

self.counter += 1

return self.number * self.counter

if __name__ == '__main__':

for number in Multiple(463):

print(number)

It now runs as we would expect it to. It also goes on forever! We created an infinite iterator, since we didn’t specify any kind of maximum condition. This kind of behaviour is sometimes useful, but often our iterator will need to provide a finite amount of items before becoming exhausted. Here’s how we would implement a maximum limit:

class Multiple:

def __init__(self, number, maximum):

self.number = number

self.maximum = maximum

self.counter = 0

def __iter__(self):

return self

def __next__(self):

self.counter += 1

value = self.number * self.counter

if value > self.maximum:

raise StopIteration

else:

return value

if __name__ == '__main__':

for number in Multiple(463, 3000):

print(number)

To signal that our iterator has been exhausted, the defined protocol is to raise StopIteration. Any construct which deals with iterators will be prepared for this, like the for loop in our example. When this is run, it correctly stops at the appropriate point.

$ python iterator_test.py 463 926 1389 1852 2315 2778

It’s good to be lazy

So why is it worthwhile to be able to write our own iterators?

Many programs have a need to iterate over a large list of generated data. The conventional way to do this would be to calculate the values for the list and populate it, then loop over the whole thing. However, if you’re dealing with big datasets, this can tie up a pretty sizeable chunk of memory.

As we’ve already seen, iterators can work on the principle of lazy evaluation: as you loop over an iterator, values are generated as required. In many situations, the simple choice to use an iterator or generator can markedly improve performance, and ensure that your program doesn’t bottleneck when used in the wild with bigger datasets or smaller memory than it was tested on.

Now that we’ve had a quick poke around under the hood and understand what’s going on, we can move onto a much cleaner and more abstracted way to work: generators.

Generators

You may have noticed that there’s a fair amount of boilerplate code in the example above. Generators make it far easier to build your own iterators. There’s no fussing around with __iter__ and __next__, and we don’t have to keep track of an internal state or worry about raising exceptions.

Let’s re-write our multiple-machine as a generator.

def multiple_gen(number, maximum):

counter = 1

value = number * counter

while value <= maximum:

yield value

counter += 1

value = number * counter

if __name__ == '__main__':

for number in multiple_gen(463, 3000):

print(number)

Wow, that’s a lot shorter than our iterator example. The main thing to note is a new keyword: yield. yield is similar to return, but instead of terminating the function, it simply pauses execution until another value is required. Pretty neat.

In most cases where you generate values, append them to a list and then return the whole list, you can simply yield each value instead! It’s more readable, there’s less code, and it performs better in most cases.

With all this talk about performance, it’s time we put iterators to the test!

Here’s a really simple program comparing our multiple-machine from above with a ‘traditional’ list approach. We generate multiples of 463 up to 100,000,000,000 and time how long each strategy takes.

import time

def multiple(number, maximum):

counter = 1

multiple_list = []

value = number * counter

while value <= maximum:

multiple_list.append(value)

value = number * counter

counter += 1

return multiple_list

def multiple_gen(number, maximum):

counter = 1

value = number * counter

while value <= maximum:

yield value

counter += 1

value = number * counter

if __name__ == '__main__':

MULTIPLE = 463

MAX = 100_000_000_000

start_time = time.time()

for number in multiple_gen(MULTIPLE, MAX):

pass

print(f"Generator took {time.time() - start_time :.2f}s")

start_time = time.time()

for number in multiple(MULTIPLE, MAX):

pass

print(f"Normal list took {time.time() - start_time :.2f}s")

We ran this on a few different Linux and Windows boxes with various specs. On average, the generator approach was about three times faster, using barely any memory, whilst the normal list method quickly gobbled all the RAM and a decent chunk of swap as well. A few times we got a MemoryError when the normal list approach was running on Windows.

Generator comprehensions

You might be familiar with list comprehensions: concise syntax for creating a list from an iterable. Here’s an example where we compute the cube of each number in a list.

nums = [2512, 37, 946, 522, 7984] cubes = [number**3 for number in nums]

It just so happens that we have a similar construct to create generators (officially called “generator expressions”, but they’re nearly identical to list comprehensions). It’s as easy as swapping [] for (). A quick session at a Python prompt confirms this.

>>> nums = [2512, 37, 946, 522, 7984] >>> cubes = [number**3 for number in nums] >>> type(cubes) <class 'list'> >>> cubes_gen = (number**3 for number in nums) >>> type(cubes_gen) <class 'generator'> >>>

Again, not likely to make much difference in the example above, but it’s a two-second change which does come in handy.

Summary

When you’re dealing with lots of data, it’s essential to be smart about how you use resources, and if you can process the data one item at a time, iterators and generators are just the ticket. A lot of the techniques we’ve talked about above are just common sense, but the fact that they are built into Python in a defined way is great. Once you dip your toe into iterators and generators, you’ll find yourself using them surprisingly often.

Looks like there is something wrong with these lines:

33: print(f”Generator took {time.time() – start_time :.2f}s”)

38: print(f”Normal list took {time.time() – start_time :.2f}s”)

Can’t find such syntax for print.

Those are f strings, introduced in Python 3.6

Thanks! Found out I have 3.5.

It’s called “format strings”, introduced in Python 3.6 IIRC.

Is there a reason you used the old-style class decleration in the examples?

For Athena’s sake, stop using Python 2 already. It’ll be EOL in less than a year and a half.

Hehe! I even did some QBasic programming today …

I like python, but I swear to god that half the problems with it stem from really crappy terminology.

Yes. Why use old terminology, when we can invent a new one and confuse people with jargon?

Because using common technolgy terms like ” master-slave” is considered hatespeech nowadays, so you have to find something that doesn’t offend the snowflake generation.

Boss-employee, with just the right amount of kneeling.

Cyk, the irony of you being easily offended about other people being easily offended…

Stop harassing me for harassing you. ;-)

What exactly do you mean? Itterators are such an old mechinsm even c++ make massive use of them.

Not a ha –

Oh wait… I’m still learning Python and this was interesting and useful!

Never mind… do please carry on.

This is only scratching the surface of generators. They also have two other properties, the send and return values. Generators can also receive values during their operation, this is the basis of asyncio.

If anyone wants a generator comprehension function for comparison in this example. It’s faster still.

def multiple_gen2(number, maximum):

”’Uses generator comprehension.”’

max_count = (maximum//number) + 1

return (counter*number for counter in range(1, max_count))