There is a widely derided quote attributed to [Bill Gates], that “640k should be enough for anyone”, meaning of course that the 640 KB memory limit of the original IBM PC of the early 1980s would be plenty for the software of the day, with no need at the time for memory expansions or upgrades. Coming from the man whose company then spent the next few decades dominating the software industry with ever more demanding products that required successive generations of ever more powerful PCs, it was the source of much 1990s-era dark IT humour.

In 2018 we have unimaginably powerful computers, but to a large extent most of us do surprisingly similar work with them to what we did ten, twenty, or even thirty years ago. Web browsers may have morphed from hypertext layout formatting to complete virtual computing environments, but a word processor, a text editor, or an image editor would be very recognisable to our former selves. If we arrived in a time machine from 1987, though, we’d be shocked at how bloated and slow those equivalent applications are on what would seem to us like supercomputers.

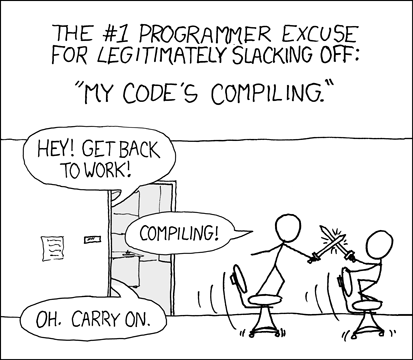

[Nikita Prokopov] has written an extremely pithy essay on this subject in which he asks why, if a 286 running DOS could run a fast and nimble text editor, the 2018 text editor requires hundreds of megabytes to run and is noticeably slow. Smug vi-on-hand-rolled-GNU/Linux users will be queuing up to rub their hands in glee in the comments, but though Windows may spring to mind for most examples, no mainstream platform is immune. Web applications come under particular scorn, with single pages having more bloat than the entirety of Windows 95, and flagship applications that routinely throw continuous JavaScript errors being the norm. He ends with a manifesto, urging developers to do better, and engineers to call it out where necessary.

If you’ve ever railed at bloatware, or simply at poor-quality software in general, then [Nikita]’s rant is for you. We suspect he will be preaching to the converted.

Windows error screen: Oops4321 [CC BY-SA 4.0]

January 2020: Windows 7 stops updating, and there are rumors of an annual payment to keep the system alive.

The future seems to be a massive switch to Linux.

Windows stops updating? Best day ever.

Yes, it truly was a magnificent day when they stopped support for XP and made SP1, 2 and 3 available as a burn-to-USB download for air-gapped computers.

Windows actually updating? Must be April Fools’

> The future seems to be a massive switch to Linux.

The day that happens, most of the *real* friends I have (including the girl in the mirror) will stop using computers for everyday work.

And why would that be? Why do you expect our workforce/economy to grind to a halt and go back to the Stone Age? As I sincerely doubt that, I honestly ask: why?

I have a suggestion: maybe because Linux is a wonderful OS for programmers, but not for users?

Every beginner’s book on Linux I read was divided into three parts, with the third taking up two thirds of the book:

1. How to get your computer infected with a particular strain of Linux.

2. How to install and manage your apps and get a beautiful GUI to work smoothly.

3. Everything you can do with the GUI, you can do with the command-line interface, and this is how to do it.

Seriously, the only reason Linux has a GUI is to have multiple terminals open at once.

Microsoft won’t screw up Windows so badly that it becomes unusable, and they would rather make it free for home users (and keep charging companies) than force everyone to pay subscription fees; the backlash would be too big. This is also the main reason why it’s so easy to get a pirated copy of any Windows version with a working update system. Microsoft could have made an update that would crash or lock every pirated copy in use. They didn’t, because they want people to have a working OS that companies pay to use. Windows is the standard, it’s common, and everyone uses it.

Paraphrasing Richard Dawkins:

Windows – it works! Bitches!

Agree with points one through three, though not with your conclusion. If you insist on a GUI, why not simply stop reading after point two?

My 78-year-old mother switched to Ubuntu from Windows 7 without any issues, and she is from a non-technical background. If she can do it, then you can. Sure, it is different, but for most things that people do with computers it is very simple and intuitive. BTW, she never needs to use the command line.

Come on… Those are misconceptions immortalised by people who talk about Linux without actually using it. And I mean using it, not trying it out for one lousy afternoon and walking away.

Linux GUIs have been quite capable for a long time, and you’d have the same feeling about Windows’ GUIs if you had been raised on Linux. Now, granted, there are better and worse distributions of Linux, each reflecting the intent of its creators/maintainers. On the other hand, there are enough options that I’m sure one would fit each and every computer user, regardless of what they were raised on.

People need to form a mental model of how to interact with a machine, and sometimes they just have the wrong model… Think of a person who has written with pen and paper all his/her life and has never touched a computer. This person will easily dismiss the computer as a whole, but we (computer users) all know how useful computers are and would rightfully call this person stupid for not trying harder to exploit them.

There may be different interaction models between different GUIs and different terminal applications (vi vs Emacs, anyone? =P); judging any of them without understanding the respective model will lead you to dismiss them, like the poor old guy who didn’t know what a computer is.

Anecdotal evidence is the fight over Windows and Mac, with users lauding their respective interfaces while most think the one they don’t use is crap. Many Mac users never touch a terminal. And by the way, Windows forced a bit of a model change with Windows 8/10. People were up in arms about that, and yet there was no terminal involved… just a new GUI which many didn’t understand and later came to prefer (note I’m trying *very* hard not to pass any judgement yet =P).

And now to spoil the air of neutrality: if you stick with cattle feed, you’re never getting out of the barn. Learn what’s beyond Windows and you won’t be coming back.

–8<–

And FYI, the only 'proper' way of having multiple terminals open is to use tmux- or screen-like programs. These are terminal multiplexers, purpose-built for that job. Compared to them, using the windowing system and multiple terminal emulators is a cheap substitute. And the punchline is that you can run them straight on Linux's console, no GUI required. So if Linux were relying on that to have GUIs, we probably wouldn't have them. Ever.

The last time you attempted a fresh start on Linux was 15 years ago, I guess…

Even Debian “strain” is easier and faster to install than Windows nowadays.

My advice: if you hate it, don’t try it. Better keep away; it’s not for you, since you’ve made your choice.

What ridiculous arguments! Are you stuck in 1990 with GNU/Linux 1.x?

Linux is as easy to install and use as Windows, no command line required. I use both of them.

Unless you are using an iPhone, you are using a Linux-based OS. Does it hurt?

@random guy:

Nah, the windowing/“region” systems in screen and tmux suck compared to a good tiling window manager, if only because the window manager can use one-press (mod+key) commands using the Super key, whereas screen, being limited by ASCII, can’t use higher modifiers, and thus has to use two-press (mod+key1, key2) commands to limit interference with the programs inside it. It’s not the extra key-press when executing a command that’s the problem; it’s that repeating Super+J is as simple as holding down Super and tapping J till you’ve focused the right window, while repeating Ctrl+A,Tab is an incredibly awkward finger dance.

I generally have at least two or three terminal emulators on one wm tag (#8), connected to different windows in the same screen session (screen -x). That session generally has 10 windows open (most of them with nothing happening), but I only ever display one window per terminal. If I’m doing something where I want to see more windows at once, I just start more terminal emulators, on the same or different tag.

> “My 78 year old mother switched to Ubuntu from Windows 7 without any issues and she is from a non technical background.”

That last part is the key. For people with low demands, just getting a web browser working is enough. Granny probably wouldn’t care that her monitor is not working at its native resolution (“OOh, this is so much better because the picture is big!”) and doesn’t mind that her graphics card cannot use hardware acceleration on youtube videos because her laptop is always plugged in.

It’s the intermediate users who use computers for varying tasks from word processing to playing games and using different productivity software, who get the short end of the stick because they are inconvenienced by the lack of consistency and patchy software/hardware support.

Q4OS works well even on old hardware and has a version for the Pi; well worth a try.

Because Linux still doesn’t just work.

I installed a Debian-based distribution (BunsenLabs) on an Eee PC the other day. During installation it quickly became obvious that it wasn’t possible to set up English as the language with the keyboard and other settings in another locale; that’s perhaps acceptable, as this is a cut-down distribution. So I installed using the keyboard’s language, fearing I’d have to set up a custom language/locale combination later (as the system wasn’t translated, most things reverted to English anyway, so that turned out not to be necessary).

During installation I connected to my wireless router with ease; with a common Broadcom WLAN card that was to be expected (though it would have been harder some years ago, when the “non-free” firmware created problems for some distributions). When installed, however, there was no way to connect: the list of available networks just didn’t include my network, the one that was used during installation.

Having technical experience, I quickly deduced that the missing network used a frequency band that was somehow enabled during installation but not afterwards. Knowing something about radio, I guessed that the regulatory “locale” of the card was set to a country where the band wasn’t available, and after some digging found the command to set it (“sudo iw reg set GB”, for instance), but it just didn’t work. After spending a lot of time on this, I finally ran an Ethernet cable to the router and reconfigured it to use another band, so now it works fine.

This is the reality of how things work in 2018 with Linux. Carefully selecting hardware can work around this but can’t eliminate the nature of the beast. How would my mother have handled this problem, or even a reasonably technical person capable of reading manuals and searching on the Internet?

“When installed, however, there was no way to connect: the list of available networks just didn’t include my network”

Sounds like you should have clicked “show more networks”; it can’t show you 90 networks to choose from at once.

You and everyone who is childishly defending Windblows are either too stupid to be worth arguing with or have never actually used Linux for a couple of days in the past few years. Prove me wrong.

Why do you people *always* go searching for the most obscure and unmaintained Linux distribution available? I’ve never even heard of BunsenLabs, and I have over 20 years of Linux experience.

You should have installed a regular Ubuntu, not some crazy exotic distro…

“Use the distro your ‘guru’/tech person knows.” That was the mantra when I started learning Linux (early 2000s), and it still is. It’s actually the same with Windows: you WILL get problems with software/hardware. But if you choose something reasonably popular, the probability that the problem is already known and fixed, or at least that you can find someone who can investigate and fix it, is much higher.

I already use Linux for most tasks.

1999 2000 2001 2002 2003 2004 2005 2006 2007 2008 2009 2010 2011 2012 2013 2014 2015 2016 2017 2018: THE YEAR OF THE LINUX DESKTOP

Well, at my workplace, since about a year ago, Linux has been one of the fully officially supported software setups. Our team uses either dual-boot or Linux (primarily Linux).

“1999 2000 2001 2002 2003 2004 2005 2006 2007 2008 2009 2010 2011 2012 2013 2014 2015 2016 2017 2018: THE YEAR OF THE LINUX DESKTOP”

Yup, indeed they were, and I was using it even before then. Does that make me a trendsetter?

“January 2020, Windows 7 stops updating, rumors exist about annual payment to keep the system alive.

The future seems to be a massive switch to Linux..”

I’m pleased we all agree.

As to Win10, the beta I loaded erased the entire hard drive trying to recover from sleep mode.

I was not pleased. AFAIK, Win10 is still “Not Ready for Prime Time”.

The debate on “real-time OS” and multitasking may continue now.

I thought that was an old discussion, but it keeps being brought up. Languages and libraries are more abstracted so that it’s easier to write software, RAM is cheap, the barrier to entry is lower, and optimizing software is hard with diminishing returns (for unskilled people, at least). But, more importantly, it’s still easy to write software that runs quickly, and such software still exists in droves; you might need to look for it, but it’s there. Plus some tips:

– don’t use Electron-based apps, native is the way to go (or keep a browser tab open, in case of SaaSS)

– install ad, tracking and JavaScript blockers in your browser

– learn to triage programs/plugins that slow your system down, remove or replace those

– consider replacing some GUI apps you use with command-line apps

– try switching browsers – maybe there’s one that’s less of a hog but you don’t know about it

– Linux: try switching DE for something less hungry

something else I missed?
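For the “learn to triage programs that slow your system down” tip, here is a minimal Python sketch, assuming a Linux system: it reads each process’s `/proc/<pid>/status` file and lists the processes with the largest resident memory (`VmRSS`). The function name `top_memory_processes` is hypothetical; `top` or a task manager does the same job interactively, and this only shows where that number comes from.

```python
import os


def top_memory_processes(limit=10):
    """Return up to `limit` (rss_kib, pid, name) tuples, largest resident set first.

    Linux-only assumption: reads /proc/<pid>/status, so no third-party packages are needed.
    """
    results = []
    for entry in os.listdir("/proc"):
        if not entry.isdigit():  # skip non-process entries such as /proc/cpuinfo
            continue
        try:
            with open(f"/proc/{entry}/status") as status:
                # Each line looks like "Key:\tvalue"; split on the first colon only.
                fields = dict(line.split(":", 1) for line in status if ":" in line)
        except OSError:  # the process exited meanwhile, or is unreadable; skip it
            continue
        rss = fields.get("VmRSS")
        if rss is None:  # kernel threads have no user-space resident memory
            continue
        rss_kib = int(rss.split()[0])  # value looks like "   12345 kB"
        name = fields.get("Name", "?").strip()
        results.append((rss_kib, int(entry), name))
    results.sort(reverse=True)  # biggest memory users first
    return results[:limit]


if __name__ == "__main__":
    for rss_kib, pid, name in top_memory_processes():
        print(f"{rss_kib / 1024:8.1f} MiB  {pid:>7}  {name}")
```

Resident memory is only one signal; a program that is small in RAM can still hog the CPU or the disk.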

I don’t know why people blame GUIs for slowness. Granted, I’ve never written GUI code that wasn’t equivalent to a 15-minute stroll through hell, but when done right it can be just as fast. I never want to go back to the command line for managing my file system, for example. I always hate it when I forget to sudo the file manager in Linux so I can move things without the OS giving me an access denied on files that should be mine. Any part of the system you interact with on a daily basis should have a GUI, and Linux even to this day fails in that regard. What good is it to make a program fast if you have to spend an hour reading the man pages just to remember how the thing works, when you could present a self-explanatory GUI?

What can die in a fire are convoluted GUI libraries that are a bloody nightmare (anything wx), whereas a simple, straightforward library like IUP never gets used. I think a lot of the problems with software bloat are probably related to overdependence on the #include statement to solve problems.

Never heard about IUP before. Link for the lazy: http://webserver2.tecgraf.puc-rio.br/iup/

The GUI libraries don’t just have to be simple and efficient, they also have to perform every graphics operation as efficiently as possible in all cases. That is hard enough if you only have to support a single platform, but we expect Qt, GTK etc. to do this on every platform (Windows, Linux, Mac etc.) with every graphics subsystem (X11, framebuffer, Wayland, whatever Windows and macOS use nowadays, etc.) and for every possible combination of GPU hardware and driver version/features.

This is why gaming consoles and many mobile devices perform so much better in this area.

They don’t perform graphics operations anywhere close to optimally. Very little software is optimized to be as efficient as possible, first because it’s unnecessary and second because it’s impossible for almost everything.

But then I don’t expect you to understand this given your response to my other post above – where you missed the point and went barking in a completely wrong direction instead. Seems that is your standard MO.

IUP is missing from every search for an alternative; I would get wx and the other paid-for APIs with the most bloated runtimes on offer. Tinkering with wx gets to the point of shrinking it down to only what it needs, but even then it’s still 12 MB too much. Who can be bothered with the Win32 templates?

I think the author’s point is that, while you certainly CAN trim around the edges and jump through hoops to save a bit of RAM using your tips… the REAL problem is that all those tips should be completely unnecessary to begin with. The industry as a whole seems uninterested in making quality software, instead settling for “throw more hardware at it”, and most users don’t want to constantly have to figure out “great how do I disable the latest stupid thing Google did this week”.

Electron is a problem, but it’s more than that: it’s indicative of a complete failure of the industry as a whole. The only way to reliably ship a cross-platform application that can draw text and images on the screen is, apparently, to package an entire web browser with it (including junk like an Xbox 360 controller driver?!). How did it happen that people would rather do this than use native widgets? How could Qt be around for years and years and still not be the automatic first choice for this situation?

These are the kind of deep-seated undercurrents that I think the author is trying to get at. “Throw hardware at it” is not a solution, at least not any more, since Moore’s Law is dead and buried. Something more profound has got to change in the philosophy of software design.

Electron is probably the answer to “we have a web version, so we just package it and ship it, because writing native is hard” and “we already wrote a tablet version using a webview”.

It’s not “because native is hard”, it’s because “we don’t want to do the same thing twice”.

That’s why I firmly believe that limitations bring genuine creativity. Industrial controllers are a good example. We’re still working with Pentium/Celeron-grade processors and a few MB at best on new hardware. It does help that lower-level languages don’t need as much memory.

It still requires us to be efficient with the resources we’re given.

OS in FORTH.

http://www.forthos.org/

Limitations always force creative solutions to problems.

Any of the early consoles is a perfect example of code optimized for the hardware to an absolute extreme, but this is entirely impractical for today’s computers.

The only things that made this possible are not present anymore.

(fixed hardware, and generations where that fixed development target would be available for several years)

Now, these are broad comments, and x86 compatibility could go a long way, but I think the sentiment is accurate.

Never mind desktops. If I want to look at crappy software, I look at my Android phone. I am amazed at how often it is busy “doing something” when its sole purpose in the universe should be to respond to me.

Android is a mess, but back when I had an iOS device it wasn’t much better. Maybe they’re different now, I don’t know.

While that’s still somewhat true today, I find running LineageOS on my almost 7(?)-year-old phone (Note 2) without Google apps to be quite alright.

Yeah, in my experience most performance sluggishness and resource hogging is, funnily enough, related to Google’s framework.

Meanwhile almost all apps not relying on Google’s bloated framework are light and nimble.

Might be because they don’t track my every movement and force ads in my face.

I’ve never encountered a phone OS that I would prefer to use over any Linux distro, and that’s from a Windows user.

If you are talking Android, you are still using Linux. And if you compile it yourself without any parts of Google’s fork, it is as fast as any small Linux distro and has 16 days of standby power.

Technically yes, but it’s so locked down it might as well not be Linux.

It’s Linux in the same way that a pile of feces is a three-course meal. Maybe it started that way, but it’s lacking several important Linux-like qualities now.

Response to the user should be the absolute number one priority for software. My stupid phone can take several seconds to respond to my attempts to answer an incoming call. When the user pokes a text input field in anything, the keyboard should INSTANTLY appear. But it doesn’t, except sometimes it does. It’s highly variable.

For any operating system there are a set of functions for user interaction that should be given top attention, with ONE acceptable way for software to access. User taps a text input field, all software, no matter what it is, should do exactly the same thing to pop up the keyboard.

Operating systems have code to do various things so the application author(s) don’t have to do those in their program. On Windows those are the standard dialogs. To open/save a file, use the standard dialog, and that’s a big chunk of code you don’t have to include in your program. So what does Microsoft do? They don’t use their own standard dialogs in Office and other programs. Adobe doesn’t use them either.

Why do so many programmers still write software like they’re writing it for MS-DOS?! They make a lot more work for themselves redoing stuff that’s provided by the OS so they don’t have to write those tedious bits of code.

Remember Firefox 4.0? For some reason they decided not to use Windows’ API for mouse input, and broke scrolling so badly that the browser ignored ALL scroll input devices. A bit later they got scroll wheels working (and only wheels; drag zones on trackpads stayed broken much longer). There was ZERO REASON for the Firefox team to do any of that, but they did it anyway.

Right now, if I put a disc in my desktop’s burner and started writing to it, Firefox would slow to a crawl. It’s been that way through many updates, across many computers. The burn isn’t even reading data from the C: drive where Firefox caches data, and it doesn’t involve the bus/controller that C: or the burner is attached to, but for some reason that process, which Firefox *shouldn’t* have any contact with at all, makes that browser, and only that browser, slow down. What the bleep are they doing?

My smartphone never has these issues because I don’t use it for desktop things; I never use it to browse the Internet and keep data transfer disabled unless I need it for specific tasks. For example, I use two free apps, one to read ebooks and one to listen to audiobooks, both of which show annoying ads, or would if I were connected to the internet. My phone is fast despite being 7 years old already, and the Android app store doesn’t work on it at all due to forced obsolescence.

I also think that all software developers have problems with using standard system calls and functions. They like to have extra work. Part of the cause for this is the way people learn to program. For example, Python has standard functions to read and write files, but there is nothing in the books/tutorials/documentation about calling open/save dialogs. At least there wasn’t when I last attempted to learn Python. It’s probably because Python is presented in those tutorials and books as a tool for creating CLI apps.
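For what it’s worth, Python’s standard library does ship a way to call open/save dialogs: the `tkinter.filedialog` module (with `askopenfilename` and `asksaveasfilename`). A minimal sketch follows, assuming Tk is installed alongside Python (usually the case on Windows and macOS; some Linux distributions package it separately). On Windows and macOS Tk hands off to the system’s native dialog, while on Linux/X11 it draws its own. The helper name `pick_file_to_open` is hypothetical.

```python
def pick_file_to_open(title="Open file"):
    """Show an open-file dialog and return the chosen path, or None if cancelled."""
    # Imported lazily so merely importing this module works on headless machines.
    import tkinter as tk
    from tkinter import filedialog

    root = tk.Tk()
    root.withdraw()  # hide the empty main window; only the dialog should appear
    try:
        path = filedialog.askopenfilename(
            title=title,
            filetypes=[("Text files", "*.txt"), ("All files", "*.*")],
        )
    finally:
        root.destroy()  # clean up the hidden Tk root even if the dialog fails
    return path or None  # an empty result means the user cancelled
```

Calling `pick_file_to_open()` from a CLI script pops up the dialog and returns the selected path.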

The other problem, especially with open software, is that developers don’t think about user experience when creating their GUIs, or even the CLI parts of the software. For example, bCNC is an awesome piece of software, but the UI is not very intuitive. I used KiCad once and it was horrible, a prime example of bad UI design done by engineers for engineers. Eagle was garbage too, with the same problems, and it taught people that it’s okay to make and use crappy tools as long as they are free or cheap. Compare it with DipTrace, which is very easy to use and master. The GIMP folk for some reason use their own file open/save dialogs that are just plain bad. Maybe that’s because it started as a Linux app, and Linux/Unix has a GUI only because people wanted more than one terminal to look at at the same time.

Firefox gets bigger and bigger and adds more and more useless functions, like some cloud BS for syncing browsers or some other crap. Why can’t they add a built-in ad blocker, script blocker, or some other useful functions that would enhance the user experience? Why does it hog 1/4 of my system memory just to display 4-5 tabs? Firefox is crap, but it’s the best crap we have…

You seriously just said “wah wah software is too bloated. wah wah why doesn’t my software include this”. That was very amusing.

As for your criticisms of user interfaces, it’s true developers think a certain way and tend to put UIs together a certain way. UX is a field of study all on its own, if you want to improve open source software by improving its UX you should study and contribute.

Trying to diagnose such an old problem is nearly impossible, of course, but it seems your OS scheduler may be setting a lower priority for all user applications not involved with disc burning, to avoid creating a coaster…

Programmer time is expensive, user time is free.

Programmer time is expensive, user hardware is free.

Using Java, programmers can bang out apps in record time. All the app requires for good performance is a few cores, a few GB of RAM, and a few GB of disk space, all at no cost to the developer.

People don’t want to pay for software. You want $4.99 apps, you get $0.01 performance.

C++ is hard.

C is hard.

Pointers are hard.

Performance analysis is hard.

Java can do all these things, at a small cost of user time, which is 100% free.

Yeah, I’ve noticed that a lot of software is built to fit into the target system, not built to use the least resources to accomplish its tasks.

…which is true for almost everything, not just software.

```
while(1){
    java.update();
}
```

So? Updating software packages only seems to be a painful thing on OSes without a proper package manager: a bunch of self-updaters competing for bandwidth, disk thrashing due to multiple layers of self-extracting EXEs and MSIs, and the need for a lot of them to pop up GUIs while doing whatever instead of staying out of the way.

Nah man, elsewhere you just get a handful of files extracted to specific places, quickly and in the background.

Updates aren’t a reason to hate on Java for. Their memory (mis)management is.

I hate Java. Java sucks.

Java is responsible for maybe 90% of that software bloat. Java is awful for anything other than making software cross platform.

The only thing written in Java is enterprise software; that’s the only use it has in the software industry (excluding Android applications). The kind where users press buttons, enter text into text boxes and watch the data get entered into a database. Hell, I just described 99% of enterprise software.

Java is still really slow. It has vastly improved its performance compared to the early 2000s, but it’s still slow. The people who favor Java often point to benchmarks on how fast Java is compared to C or C++, but honestly these superior benchmarks never translate into faster applications for some reason; in fact, Java applications are slow as hell, even if you ignore the VM startup time (which is still, in fact, time the user has to wait).

The sooner the industry accepts that Java is slow and bloated, the sooner we can move to something better.

https://www.quora.com/Why-does-Minecraft-run-on-Java

Hmmmn.

Java itself is not slow, unless you are stuck in the 90s.

I used to develop Java SW for embedded automotive platforms and could fit a fast GUI on a 100 MHz CPU with 16 MB of RAM. I also developed some OpenGL stuff; it ran very smoothly, comparable to non-optimized (no SSE) C++ code.

The problem with Java is that it allows incompetent programmers to do very bad things very often, and the product still kinda “works”, so it gets shipped and sold. The crappy performance is blamed on the Java VM, not the programmer.

Not even close. Somebody obviously hasn’t run anything close to modern Java.

Running on the same old i7 970 hex core, I was amazed at how fast Java became with 7.

And then astounded with the speed of 8, and the additional speed from using functional syntax for listeners. And it’s so easy to multi-thread, I use extensive multi-threading.

I stream data transactions for 20 stocks, assembling quarter-second snapshots, and push them out for four securities, processing around 24 indicators for each of those four, including multiple cascading indicators. These drive a strategy for each security that determines a position size and passes it to an order management module, which builds and transmits orders and receives order executions, status, etc. back from the broker, monitoring for required price improvements eight times a second. From the moment the final data for a snapshot arrives and triggers that whole process until my orders are out the door typically takes under 3 ms, occasionally as much as 6 ms, and rarely 9 ms. In the background, the process is monitored through multiple Swing GUIs with some FX charts, all while the broker’s software runs its GUIs and multiple charts displaying multiple indicators. My GUIs update so fast that I had to add sound cues and background colour shifts, as without them I was missing updated displays.

Modern Java is not your high-school teacher’s Java.

Take Etcher. All it does is ‘dd if=x.img of=/dev/sdbx bs=1M’. Using stdin and stdout, I could write that in 20 lines of assembly. The Electron-based app is 84 MB!… (And using such bloatware is an act of faith. Click and pray!)
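For comparison, the core of what the commenter describes (copying an image to a device in 1 MiB blocks, like dd with bs=1M) fits in a few lines of Python. This is a minimal sketch, not a replacement for Etcher: it assumes you already know the correct target path and have permission to write to it (usually root for a block device), it does no validation or read-back verification, and pointing it at the wrong device will destroy that device’s data. `write_image` is a hypothetical name; the final `fsync` is roughly what `dd conv=fsync` adds.

```python
import os

BLOCK_SIZE = 1024 * 1024  # 1 MiB, mirroring dd's bs=1M


def write_image(image_path, device_path, block_size=BLOCK_SIZE):
    """Copy image_path to device_path block by block; return the number of bytes written."""
    written = 0
    with open(image_path, "rb") as src, open(device_path, "wb") as dst:
        while True:
            chunk = src.read(block_size)
            if not chunk:  # end of the image file
                break
            dst.write(chunk)
            written += len(chunk)
        dst.flush()  # push Python's buffer to the OS...
        os.fsync(dst.fileno())  # ...and ask the OS to put it on the medium
    return written
```

Usage would look like `write_image("x.img", "/dev/sdX")`, where `/dev/sdX` stands for whatever device you have triple-checked is the right one.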

True, or you could write it in the same number of Python lines, or even a few lines of bash.

you mean ‘single line of bash’ – i.e. the one above

I mean something with a select menu which you can do in bash.

But it makes the space where bugs could be hiding, and the attack footprint where hackers can look for exploits, the size of Snoke’s ship.

Idea for all people running QA and user acceptance tests: Take your typical developer’s machine’s specs, then run those tests on something half as powerful.

(Bonus for the beancounters: Once the devs learn to cope with the backlash from that and write better code, you could skip upgrading their boxes for a few years.)
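The “half as powerful” test machine can be roughly approximated on a single Linux box. This is a minimal sketch under stated assumptions: it pins the command under test to half of the CPUs it may use (`os.sched_setaffinity`, Linux-only) and caps its address space at half the physical RAM with `resource.setrlimit(resource.RLIMIT_AS, ...)`. Both are crude stand-ins: `RLIMIT_AS` limits virtual address space rather than resident memory, so runtimes that reserve large virtual ranges up front may fail even though they would fit, and nothing here slows down individual cores, disks or the GPU. The function names are hypothetical.

```python
import os
import resource
import subprocess


def half_machine_limits():
    """Pick roughly half of this machine: half the usable CPUs and half the physical RAM."""
    cpus = sorted(os.sched_getaffinity(0))
    half_cpus = set(cpus[: max(1, len(cpus) // 2)])
    total_ram = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    mem_limit = total_ram // 2
    # An unprivileged process cannot raise its hard limit, so never ask for more than it.
    _soft, hard = resource.getrlimit(resource.RLIMIT_AS)
    if hard != resource.RLIM_INFINITY:
        mem_limit = min(mem_limit, hard)
    return half_cpus, mem_limit


def run_on_half_machine(cmd):
    """Run `cmd` pinned to half the CPUs with its address space capped at half the RAM."""
    cpus, mem_limit = half_machine_limits()

    def restrict():
        # Runs in the child after fork() and before exec(), so only the child is limited.
        os.sched_setaffinity(0, cpus)
        resource.setrlimit(resource.RLIMIT_AS, (mem_limit, mem_limit))

    return subprocess.run(cmd, preexec_fn=restrict, capture_output=True, text=True)
```

For example, `run_on_half_machine(["pytest", "-q"])` would run a (hypothetical) test suite under those limits and return the completed process with its output.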

> Take your typical developer’s machine’s specs, then run those tests on something half as powerful.

Sadly, this problem isn’t new – one of the computer magazines in the early 1990s got caught on this one after they reviewed a new version of a word processor and wrote about how good the performance was – they’d tested on the latest and greatest machine from {whomever}, which was significantly faster than the typical PC of the day.

“Programmer time is expensive, etc”…

“Java blah blah blah”…

The whole philosophy is a crock of shit. If you respect your users, your users will be loyal to you!!! If you try to sell them a gold-plated Java turd, they might buy from you once because they don’t know any better, but word gets around.

“Web applications come under particular scorn, with single pages having more bloat than the entirety of Windows 95, and flagship applications that routinely throw continuous JavaScript errors being the norm. He ends with a manifesto, urging developers to do better, and engineers to call it out where necessary.”

All hail the mighty web browser, for without it we’d still be on dial-up.

Not sure what happened with web applications. Yes, they always had some bloat, but they were usually still quick and to the point.

But all I can say about what happened to some of them between 2013 and 2015 is this: https://memegenerator.net/img/instances/67563158/you-just-went-full-retard-never-go-full-retard.jpg

I couldn’t agree more. I have two machines on my desk at work:

1) A company-issued workstation that cost $5000+ last year, filled with a high-end 6-core Core i7, 48 GB of DDR4 RAM, a 4-disk array of NVMe drives in RAID-10 and a Radeon Pro W9100 video card. It runs the latest stable build of Windows 10.

2) A crappy old USFF Dell with a 3.2 GHz Pentium 4, about 1.5 GB of mDDR2 RAM, a scavenged 80 GB 5.4k RPM laptop HDD, and the GMA-950 graphics built into the chipset. This machine runs OpenBSD with the Xfce environment.

I found that for like 95% of the tasks I do on a daily basis, the Dell box runs circles around the monstrous workstation I was assigned. The remaining 5% are those shitty web applications that will suck up every scrap of RAM and every processor core they get near. The little thing also runs very cool and will run for months, only going down due to power drops (usually from testing the backup power systems in the data center, which causes a bit of a spike and dip during the testing).

There is software out there that is built correctly, but there seems to be an over-obsessive need to add new features like voice assistants, fancy graphics, cloud integration, and other such stuff into things that should never have it in the first place. It also bothers me how many layers of frameworks and obfuscation are involved in modern software. Like, why does a text editor need a .NET runtime? Why are there 4 different JS platforms involved in processing my comment on a web forum?

I still think Windows 2000 was the best one in terms of its GUI. It got the job done without being a bloated mess. You could get the same thing in Windows XP if you turned themes off, which I always did. Now you can’t turn off all the bells and whistles without completely crippling the OS. My rule of thumb is to use as little of the OS as possible and just use third-party applications for everything you do.

This turns off the bells and whistles without crippling the OS

https://www.oo-software.com/en/shutup10

I was all for Windows 2000 too; I used it for way too long.

I remember doing a comparison between a 286 machine running Windows 3.1 and a new Pentium running Windows 95. The test was simple: turn each machine on, open Word, type a few lines and print it out.

No prizes for guessing which one completed the task first.

Back in the day I had a Windows 95 box that was fully booted in 45 seconds. Haven’t timed my much more powerful, faster, FX 6100 box with Windows 10, but it takes quite a lot longer to be fully booted and ready to go.

Progress?

Boot SSDs do make a difference.

But the old computer didn’t have an SSD. So we need SSDs just to keep up with machines that had slow, spinning mechanical disks?

I fired up my old IIfx and it opened apps faster than my Core i3 laptop.

Windows 10 needs to put 1.5GB of something into RAM to log in.

Windows 95 can boot in 4MB of system RAM.

One thing that really slows down programs is doing unnecessary tasks. For instance, on startup Windows 3.1 catalogs all available fonts. Not knowing this, I installed about 500 fonts on my Win 3.1 machine, resulting in boot times in excess of 5 minutes.

…and when would have been a better time to do this? If it only did this on demand, your applications would either not have offered the font, or your text editor would have lagged for five minutes the first time you clicked on the Fonts dropdown after installing all those fonts. Using a font cache would most likely have made it faster, but filled up your hard drive.
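The font cache mentioned above can be sketched in Python. This illustrates the trade-off under stated assumptions and is not how Windows 3.1 or any real font subsystem works. Fonts are assumed to be plain files in one directory, a file's modification time is used to detect changes, and `read_font_name` is a hypothetical placeholder for the expensive parsing step. Only new or changed files pay the parsing cost; unchanged ones are read back from a cache file on disk:

```python
import json
import os
from typing import Dict


def read_font_name(path: str) -> str:
    # Hypothetical stand-in for the expensive step: actually parsing the font file.
    return os.path.splitext(os.path.basename(path))[0]


def scan_fonts(font_dir: str, cache_path: str) -> Dict[str, str]:
    """Map font file path -> font name, re-parsing only files whose mtime changed."""
    try:
        with open(cache_path) as f:
            cache = json.load(f)  # {path: [mtime, name]}
    except (FileNotFoundError, json.JSONDecodeError):
        cache = {}
    fresh = {}
    for entry in os.scandir(font_dir):
        if not entry.is_file():
            continue
        mtime = entry.stat().st_mtime
        hit = cache.get(entry.path)
        if hit is not None and hit[0] == mtime:
            fresh[entry.path] = hit  # unchanged: reuse cached metadata, no parsing
        else:
            fresh[entry.path] = [mtime, read_font_name(entry.path)]  # new or changed
    with open(cache_path, "w") as f:
        json.dump(fresh, f)  # entries for deleted fonts are dropped here
    return {path: name for path, (_mtime, name) in fresh.items()}
```

The cost described in the comment shows up as the cache file, which holds one entry per installed font, in exchange for skipping the parse on every boot.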

Some things just can’t be done in a satisfactory way if the user doesn’t want to see the value.

The problem is rather the user installing 500 fonts for no good reason. The system should at least give a warning that you did something silly.

Never underestimate the ability of people to do unexpectedly silly things. My dad, who programmed for 30 years, called computers glorified toasters and explained that they are only as smart as the person who programmed them. So then, to build a system as complicated as an OS and anticipate every silly thing that anybody might do to it is truly a monumental task, and must be done by the people who programmed it. Show me someone who thinks they can do it, and I will show you someone who is overconfident in the extreme.

…and then that tiny minority who does such a thing goes ranting about how stupid the OS is and why it keeps showing these stupid warnings. If they don’t just click away the warning from the start.

I agree entirely with Nikita’s comments – and he has only been programming 15 years.. Wait till he has been doing it longer and he will be more frustrated…

I handle it by using old software – this PC I’m on has Windows 7 64-bit, running Office 2002 (I’ve added the converter to read .docx and .xlsx) etc. Yet I’m using reasonably modern hardware – state of the art from about 2 years ago – which means it boots fully into Windows in under 5 seconds… Word opens in under a quarter of a second, and everything runs pretty fast. The current project I’m working on – not very big, maybe 20k source code lines – compiles (full) and links in under 2 seconds…

The other thing is that you can still write reasonable programs – just ignore all the layers of shit. I’ve recently written something for a small company that did quite a lot – and the .exe was less than 2 MB. And it didn’t need any .NET libraries or special DLLs installed – it just ran on Windows XP through to Windows 10… They couldn’t believe it; they also can’t believe it hasn’t crashed once yet.

I think the problems really started in the late 80s, when the idea of ‘don’t worry about the speed, just get more hardware’ started.

Which led (by now) to situations like one firm I’ve done work for – they had their daily reports taking over 24 hours to run (they even knew they had a problem at that point!)… Using the SAME database, I rewrote the same reports (output) to take less than 15 minutes….

And I agree with the above: the size of OSs is ridiculous. Why is my phone OS about 1000 times the size of my old Amiga 1000’s OS?

> I think the problems really started in the late 80’s when, when the idea of ‘don’t worry about the speed, just get more hardware started’

As with so many things, it’s in the interpretation of what that means, and in the intelligence and skills a coder/programmer/designer/etc. has invested in and can bring to the table. Due to the cost of maintaining complex code, the emphasis was meant to be on building straightforward, robust, reliable code, without fancy pieces of crap inserted throughout in attempts to make it run faster. Make it run correctly first, identify bottlenecks and THEN optimize. And if you can get some faster hardware to hit your performance goals while keeping the easy-to-maintain code easy to maintain, then your total lifetime cost for that code will be significantly lower than if it had been crapped up with fancy wtf-did-they-do code that attempts to run 4% faster and becomes difficult to maintain. Hardware is not a substitute for someone who is in over their head writing poor code.
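The "make it correct, find the bottleneck, then optimize" order can be illustrated with Python's standard `cProfile` and `pstats` modules. The workload (`parse`, `total`, `workload`) and the `profile` helper are made-up examples. The idea is that the profile report, not intuition, decides what gets optimized:

```python
import cProfile
import io
import pstats


def parse(lines):
    return [line.split(",") for line in lines]


def total(rows):
    return sum(float(r[1]) for r in rows)


def workload(n=100_000):
    # Hypothetical job: build CSV-like lines, parse them, sum a column.
    lines = [f"item{i},{i * 0.5}" for i in range(n)]
    return total(parse(lines))


def profile(fn, top=5):
    """Run fn under cProfile; return (result, text of the `top` costliest entries)."""
    profiler = cProfile.Profile()
    result = profiler.runcall(fn)
    buf = io.StringIO()
    pstats.Stats(profiler, stream=buf).sort_stats("cumulative").print_stats(top)
    return result, buf.getvalue()


if __name__ == "__main__":
    result, report = profile(workload)
    print(result)
    print(report)  # read this before deciding what, if anything, to optimize
```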

As an example, the fast-turnaround stock trading software in Java that I mention elsewhere above wasn’t written to be fast, nor was it optimized, other than my choosing arrays over lists for speed. I simply wrote robust code, and the result turned out to be fast. With a typical turnaround under 3 ms, when the target was 40 ms (hoping for 20 ms), I’m not going to waste any time on optimization. If I expand the number of stocks I want it to handle, the simple solution is to upgrade to current hardware instead of 10-year-old hardware. And if crazy loads are wanted, I can go for the crazy 12-, 16- or 32-core CPUs that are available. This would be a classic case where throwing hardware at performance makes sense, rather than poking fingers into robust code to optimize it, code whose execution the Java engine is obviously already doing an excellent job of optimizing.

At Oregon State in the early 90s I was trying to make the point that my 28MHz Amiga 600 seemed as fast as machines that should have been much faster (HP Apollos and new Pentiums, I think, at the time), and I think I still have the note on my paper. The professor was Tim Budd, who I think was involved with Smalltalk, and his point was essentially that processor speed and computer resources would so swamp software complexity that things like the Amiga would be forgotten.

And he’s right, but not completely. I think people would gasp if they saw Amigas 25 years later. And not just at the flickering scan lines. And the old QNX full-GUI-system-on-a-floppy promotion.

It is sort of cool though that vintage computers have their uses still, sort of like old cars. Adds some romance instead of everything just being better on all fronts.

Javascript as the holy grail of cross-platform GUI toolkits is one of those trends that needs to be stopped by forcing its proponents to work on netbooks.

Better still make them work on a raspberry pi.

Only the first gen rPi A.

Exaggeration is the only way they learn.

Good code is an art. That suggests that good coders are artists, at least in part. When there were fewer computer-like devices, and fewer programs needing written, maybe there were enough of us artists around to meet the demand. But nowadays, there are so many computers, so many programs needing written. We haven’t (yet) figured out how to *make* artists. So, we make coding tools that let poor coders make programs, to meet the demand. It’s not unlike the tech support game: they have to hire inexperienced people to talk the callers through the troubleshooting script because the really good techs, the tier 3 people, are too few to take *all the calls* that come in now.

Nobody today wants to pay for good tech support so it’s all outsourced to India where some Bombay torpedo gives you the wrong answer.

Back before the off-shoring frenzy you could get American tech support even at small companies and they could help you. Sometimes you’d even get one of the developers on the phone.

So today it’s not even worth calling technical support unless you’re some corporation with a platinum level support package.

No, good code is and should be engineering. Analyze the problem, find a solution, implement the solution.

“No, good code is and should be engineering. Analyze the problem, find a solution, implement the solution.”…

And that’s a big part of the problem right there. You are trying to turn a very complex problem into a painting-by-numbers system for a chimp. At some point you’ve got to accept that making more and more complex tools for the chimps to use is making it harder for them to build good simple programs, not easier to build complex programs.

No, I’m not pushing “paint[ing] by numbers” as a solution, and I find your suggestion that engineering doesn’t contain creative thought insulting to real engineers. Engineering requires skill and experience to see what the correct solution is, but also the time, input, and resources to make that correct solution workable. Guess what programmers aren’t given?

I did not imply that “engineering doesn’t contain creative thought” you inferred it.

The problem is that software engineering is a joke. It doesn’t compare to real engineering; it’s just a paint job designed to elevate the status of programmers to that of real engineers. Software engineers can’t analyse a real problem the way a hardware engineer or chemical engineer or civil engineer or any other engineer can. They have lots of fancy terminology for fluff which just baffles most computer people who aren’t highly technical. It’s great for generating work and for justifying huge costs, but it doesn’t really simplify coding. If software engineering were a real engineering discipline we would, by now, have tools that could very simply generate complicated systems from simple, no-nonsense descriptions. The fact is that most of these tools generate tons of code, and it is then left to a magician to actually make it work. Yes, “Engineering requires skill and experience”, but software engineers can hack and patch a ton of software in ways other engineers would never be able to get away with. If you want to understand why systems are so bloated these days, take a programmer and ask him to write a simple program, then ask a team of software engineers to write the same program without letting them see what the other guy did.

@ospr3y you do realize there are as many ways to build a bridge as there are to code a user interface?

Take an engineer and ask him/her to design a bridge, then ask a room full of engineers to design the bridge with an unlimited choice of materials, and you will probably get as many different designs as engineers you ask. Uniformity of design comes from restrictions: if you ask them to design the cheapest-to-build bridge using only reinforced concrete that will support 200,000 tons of car traffic, they will come up with designs that look more alike.

People who get paid to write software have the limitation that their most expensive resource is their time; given the choice of good code in a week or working code today, employers generally prefer the working code.

If, instead of being fast to produce and working, the economic incentive were for being smaller, using fewer resources, and still working, the results would be “better code”.

Seems like most software is Tacoma Narrows Bridges in the making.

Sadly, yes. There are people out there doing what I’d call software engineering, but the standard is to make something that looks right and then try to glue the pieces back on as they fall off.

Not that software is an easy thing to do right, especially with changing demands during development and the expectation that a bicycle should be able to be worked into an oil tanker.

It’s easy to blame the software, but a surprising amount of the problem is just the displays. Back in the day, we had 80×24 character screens = 1920 bytes. Today, we have 3840×2160 pixels at 24-bits each = 24883200 bytes. That’s 12960x the amount of data we need to move just to draw the screen. It adds up.

Yes, but now we have graphics cards that can do screens that size in the blink of an eye…

Yes, it does, but some games manage the trick, so why can’t Windows?

One of the prettiest with a small footprint.

https://www.enlightenment.org/

Even without a beast of a card it does pretty well.

What? Games? Are you talking about games running on Windows?

Why even mention games here?

No, it isn’t the problem. We have higher-bandwidth buses, higher-performing processors, and even the slowest GPU is capable of accelerating text rendering. You are in the area of one of the problems: rendering text nowadays requires font rendering with variable-size characters in a vector format with anti-aliasing, kerning and a lot more, which all adds up. But that is only a small part of things; text rendering isn’t one of the main problems. To illustrate: decrease your resolution to 1024×768 with 32 bits per pixel. Is your computer suddenly 10 times faster?

Your numbers aren’t correct BTW, the text screen requires 80×25 = 2k characters encoded as two bytes each, the graphics screen uses 32 bit per pixel => a ratio of about 8300.
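The two sets of numbers in this exchange are easy to check. The sketch below assumes PC-style text mode (one character byte plus one attribute byte per cell) for the 80×25 case and 24- or 32-bit colour for a 3840×2160 framebuffer. It reproduces both the 12960× and roughly 8300× ratios, and the roughly 10× difference behind the 1024×768 question above:

```python
def text_mode_bytes(cols: int = 80, rows: int = 25, bytes_per_cell: int = 2) -> int:
    # PC-style text mode: one character byte plus one attribute byte per cell.
    return cols * rows * bytes_per_cell


def framebuffer_bytes(width: int = 3840, height: int = 2160, bits_per_pixel: int = 32) -> int:
    # Uncompressed framebuffer size in bytes.
    return width * height * bits_per_pixel // 8


if __name__ == "__main__":
    one_byte_cells = text_mode_bytes(80, 24, bytes_per_cell=1)
    print(one_byte_cells)                                          # 1920
    print(framebuffer_bytes(bits_per_pixel=24))                    # 24883200
    print(framebuffer_bytes(bits_per_pixel=24) / one_byte_cells)   # 12960.0
    print(framebuffer_bytes() / text_mode_bytes())                 # 8294.4
    print(framebuffer_bytes() / framebuffer_bytes(1024, 768))      # 10.546875
```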

Time to read the article, friend. He mentions that the hardware is there, and games do it (a million textured shiny polygons 120 times a second? No problem!), so why the hell does MS Word have latency on pressing a key?

I’d like to add: why is MS Office the only program that has problems with the clipboard? Why does MS Office share the undo buffer between documents? Because MS Office is just one complete piece of guano.

Niklaus Wirth observed in the early ’70s that software is getting slower faster than hardware is getting faster…

90’s – Wirth’s Law.

I come from the perspective of writing software since the mid-80’s, and something that hasn’t been mentioned here, is that there is an ever-growing list of items to be checked off in order to get your software graded ‘plays nicely with others’. Back in DOS days–except for TSR’s–the entire computer was owned by one program–yours–and it didn’t have to worry about anything except getting the job done. To my mind, (besides coding inefficiencies) THAT is the primary reason for modern programs being bloated and slow; instead of being able to stride confidently in blessed solitude through their own castle, master of their domain, now they exist with a thousand others in a tightly packed ballroom that they can’t escape, and they have to follow all of the rules of the dance just to get anywhere. (…and like the others that they have to try not to step on or be stepped on by, they have packed on more than a few pounds over the years, victims of laziness, poor habits, and ignorance of what they are ingesting.)

They managed to do multitasking and protected memory on early Unix machines.

Partly true.

And yet machines like the Amiga had no problems multitasking. The same goes for real-time OSs like OS-9 and QNX. And Unix workstations like those from Sun or SGI, with their tiny memories and disk storage (compared to today’s bloat machines and bloated software), looked like models of efficiency.

And if you go back further. There was the HP-1000 series. Used in aerospace instrumentation, industrial control, testing, etc. You know real world stuff, not silly web pages. Lots of work done within 32k of RAM. Yeah it was the size of a refrigerator but it was rock-solid and the compilers were top notch and easy to use.

Or the Gould-Modicon systems.

And in the industrial sector, where real work and reliability are important, we still have 30-year-old PLCs chugging along keeping manufacturing systems, mines and quarries running smoothly. And they all have tiny memories and are programmed in ladder logic.

Point is, programmers have been doing serious work with limited systems before you were born. They also knew their stuff and the hardware.

Yep, I started programming in the 70s on an HP 3000. I think it’s a bit silly to say that programming is a ‘new’ thing that we haven’t worked out yet…

From the 70’s to 90’s we mainly did proper designs that DID include a focus on performance and maintenance.. It all seemed to disappear in the 2000’s though…

Many programmers now a) don’t seem to want to learn how to do things well, b) don’t understand how any of it actually works, c) don’t seem to care about bugs (and certainly won’t spend weeks tracking them down), d) etc. etc.

The real thing that annoys me is that it’s actually FASTER to write a well-structured, testable program that is also efficient than much of the crap that is being written now. So I do not accept that it takes longer to do. I do accept that places may not have the staff who can do it, as I’ve seen some of the idiots they hire…

Yeah, and in 1967 some of us were doing some fairly elegant cryptology on IBM 1401s with 4K core memories. (Anyone ever heard of Abraham Sinkov?) Unfortunately, these methods have been classified and come up for review at 60 years… 2028, maybe. However, the techniques I learned about buffering and replacement came in handy when I worked for a while for a company called Automated Medical Systems, which developed one of the first (if not THE first) bedside physiological monitoring systems, based on Honeywell 515s. The principal designer was a brilliant guy named Stan Leonard, M.D., who also held Ph.D.s in Physiology and Computer Science.

It seems to me that the key to success is to accept the limitations and constraints as they appear, and still maintain your belief that the problem is solvable anyway.

Also, the Amiga had a very responsive GUI. The one I used for years had a 68030-25 as its CPU. When you clicked on something, you instantly got a reaction (even if it was only inverting the button colors) which told you that the OS had registered that you wanted something. That little detail made a large difference in how the system felt. When switching to Windows and Linux, it took quite a bit of time to get used to the fact that on those OSes there are times when the reaction is delayed, sometimes by quite a bit.

I blame it on the fact that the Amiga didn’t have paging. When starting a program, it loaded it into memory in its entirety in one sequential load.

I still have an Amiga 1200, it uses a CompactFlash Card hooked up to the rather slow IDE port (no DMA, CPU has to copy the data) as an SSD. Power on to desktop ready in less than 10 seconds. CPU is an 68030 @ 28 MHz.

I also agree with the article. We need to stop the ‘I’ll just add another framework’ approach to programming and get back to where you can run a system for months if not years without reboot.

Then again the Amiga delegated great parts of the GUI functionality to the special chips, so the processor was just hanging at the background doing whatever whenever.

It was a system that was designed as an arcade/console machine and then they bolted a bigger CPU on the side to make it a desktop computer. The chipset could run simple programs and respond to user input instantly and independently of the CPU because it was running in lock-step with the screen refresh.

User input was not handled by the chipset though; that was still done by the CPU, and compared to a moderately modern graphics card, the Amiga chipset is not really that powerful. Any graphics card made after 2000 (or even earlier) should be able to run circles around the Amiga chipset. The OS just needs to make use of that power… On a modern OS on modern hardware, there should be no input lag, ever.

A lot can be attributed to the AmigaOS design and the programmers knowing how to get performance from a limited resource (68000 @ about 7 MHz) so when you went to a faster CPU, things really took off. Instant reaction to a click (even if the requested action then takes a while) also plays a big role in how a GUI is perceived.
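The "instant reaction to a click, even if the requested action then takes a while" idea is a general pattern. It is sketched here in Python with a plain thread and a queue. This is not how AmigaOS implemented it, and no real GUI toolkit is involved: `on_click`, `slow_work`, `run_ui` and the `ui_log` list standing in for the screen are all hypothetical. The handler draws its acknowledgement first and hands the slow job to a worker. The worker then posts its result back to the UI thread instead of touching the UI itself:

```python
import queue
import threading
import time

# Hypothetical event queue that the UI thread drains.
events = queue.Queue()


def slow_work() -> None:
    time.sleep(0.2)                       # stand-in for the real job (loading, computing...)
    events.put(("done", "file loaded"))   # post the result; don't touch the UI from this thread


def on_click(ui_log: list) -> None:
    ui_log.append("button inverted")      # 1. acknowledge immediately, before any real work
    threading.Thread(target=slow_work, daemon=True).start()  # 2. do the work elsewhere


def run_ui(ui_log: list, timeout: float = 1.0) -> list:
    on_click(ui_log)
    # A real event loop would keep handling input here; this sketch just waits.
    kind, payload = events.get(timeout=timeout)
    ui_log.append(f"{kind}: {payload}")   # 3. the UI thread applies the result
    return ui_log


if __name__ == "__main__":
    print(run_ui([]))
```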

Remote ‘updates’, easily accessible shared libraries and Moore’s law have simply made programming easy to do poorly.

99% of programmers I meet didn’t write or review 1% of the code they use. And less than 10% need to maintain their code.

Hell, even in the ‘embedded’ world I regularly see programmers spec an OS with Wi-Fi for something that could be done with a battery, a PIC10, assembly and one day of work.

I’m amazed programmers still make as much as they do in the USA. This coming from someone whose primary income was programming for nearly two decades. India (on average) might produce the same low quality code but at least they work for fractions as much. If it wasn’t for stupid software laws (copyright and patent), USA programmers would earn a wage more fitting to the quality of work they spew out.

To be completely honest, it’s getting that way in hardware. So much is getting shoved into the ICs that most 20-something electronics engineers I get to interview have ‘designed’ FPGAs and motherboards, but couldn’t bias a transistor properly to save their lives. It’s Legos now.

Hence the electronic hobbyist movement I guess.

I couldn’t agree more. It seems like, on code bloat and stability, they took the bar and threw it down the nearest oceanic trench.

Developers always optimize software until it runs fast enough for them, and then stop (why invest more time? It’s fast enough).

Developers also tend to use high-end hardware.

This means software runs slow on most other people’s systems.

I will say that it is pretty unfair to compare current software to software from 20 or 30 years ago. I mean, a game boots up faster on a VCS 2600 than on a PS4, but that’s because a VCS 2600 puts a few huge pixels on a screen, while a PS4 produces pictures that are sometimes indistinguishable from a photograph. This needs a lot of programmers, who produce a lot of different layers of abstraction, which slows things down. Similarly, in a PC text editor, you have things like Unicode, a clipboard, a windowing system, automatic software updates, complex syntax highlighting, and so on, all things an editor didn’t have when it just showed a bunch of ASCII characters to you. If you really want to go back to that, you can, but most don’t want to, and are happy to pay the price in performance.

BTW, let me just add this: 15 years ago, when this guy started programming, software was already slow. In fact, back in 95, software was probably at its lowest point. Windows 95 ran terribly on most common hardware, and people (actually including myself) were complaining about the fact that applications like Photoshop were now tens of megabytes, instead of the slender single-megabyte apps they used to be.

It’s not just Windows. Software like the Atom IDE is an Electron app, meaning it runs its own instance of the Chromium browser, because programmers want to develop shitty desktop apps in HTML, CSS and Javascript using a shitty Javascript engine that takes up 300MB of RAM on startup.

Gnome is bloated as hell and gnome-shell runs in its own wasteful javascript engine. Heck even Qt with its QML language developed to make app development quick, needs to run in a javascript interpreter as well. Qt apps built in QML are significantly slower than those built in C++.

Embedded developers are expected to leverage buggy hardware vendor libraries and higher-level abstraction APIs to speed up development and reduce time to market. Gone are the days when embedded developers put in the time to develop their own carefully crafted drivers for a particular microcontroller. Hey, why even use the hardware vendor libraries when we can use Arduino libraries!!! They work, right? Who cares what’s in the source code…. Let’s just put code developed by hobbyists into our next major product sight unseen. Or how about that Mbed OS thingy? It’ll cut our dev time, but a simple blinky program will eat 55KB of Flash. So what if we’re wasteful? We’ve got 512KB of Flash to burn!

Oh want to divide and multiply a number by a power of two? Oh why bother using bit shifts? You got a FPU on board bro, just do the divides and multiplies the traditional way, no need to look for short cuts…This isn’t the f**kin 80s.
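Here is a small Python check of what the bit-shift shortcut actually computes. For Python integers, `x << k` equals `x * 2**k` and `x >> k` equals `x // 2**k`, negative values included, because both `>>` and `//` round toward negative infinity. C behaves differently for negative signed values. There, `/` truncates toward zero, while `>>` on a negative value is implementation-defined (commonly an arithmetic shift, which floors). So `-7 / 2` is `-3` but `-7 >> 1` is usually `-4`. `c_style_div` is a hypothetical helper that emulates C's truncating division. Optimizing compilers generally perform this strength reduction themselves for constant powers of two, so the sketch says nothing either way about whether writing the shift by hand is worth it:

```python
def shift_matches_arithmetic(x: int, k: int) -> bool:
    """Check that shifting by k is multiplication / floor division by 2**k (Python semantics)."""
    return (x << k) == x * 2**k and (x >> k) == x // 2**k


def c_style_div(x: int, d: int) -> int:
    # Emulates C's signed integer division, which truncates toward zero.
    q = abs(x) // abs(d)
    return q if (x >= 0) == (d > 0) else -q


if __name__ == "__main__":
    print(all(shift_matches_arithmetic(x, k) for x in range(-100, 101) for k in range(8)))  # True
    print(-7 >> 1, c_style_div(-7, 2))  # -4 -3
```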

This attitude kills me. And mark my words, It’ll single-handedly end civilization as we know it.

– How would you implement the GNOME Shell plugin system otherwise? The JS engine it runs is a completely self-contained runtime and the plugins are guaranteed to just run on every machine without any compilation or anything else.

– You would be the last person to accept that a car costs $1000 more just because the entertainment system was built from scratch instead of just using Android.

I’d rather have a basic infotainment system using QNX or ITRON, or even something modular and easily replaced in a 2DIN form factor, than some disaster that controls my AC etc. and won’t be maintained 5 years down the road.

Really controlling the HVAC in a car with something running android or windows should be a crime.

That said I’d rather have the car come with no infotainment system other than a radio capable of playing MP3s and add one later if I feel like it needs one.

…no one is implementing critical car functionality using Android; it’s always just the infotainment and navigation systems, which are isolated from the rest. Air conditioning still had hardware controls in every car I drove in the last ten years, and those weren’t connected to the infotainment systems.

Pretty sure you know that, though

GNOME seems to be Red Hat’s Muppet these days, so I’m no longer surprised.

I hate rot the most. Back in the day, you’d be able to write a program, archive it somewhere, and years later it’d still run all the same. Nowadays, everything has to be connected over some API that’ll be declared deprecated after two years, so without constantly reimplementing those, your program will become dysfunctional in that time.

I’m still running things I wrote in 1993, so it depends how you write them and what they do… And I’m still using the classes I wrote for multithreading stuff (thread-safe stuff, interprocess communications etc.) 20 years after writing them – they worked then, and they work well now… :-) The trick is not to use any of those short-term APIs etc. if you can avoid it, and to stay away from ‘standards’ that change so much every year or two.

Code bloat, inefficiency and programming languages that encourage lazy programming is why I absolutely despise Javascript. I really hope Golang and Rust change this.

I’d also love to see more effort put into making the CPython interpreter faster. Python is the only language that I use nowadays because I can do anything with it that I could possibly want, thanks to its plentiful and varied libraries, e.g. numerical computation, scientific computation, symbolic math, GUI, AI, machine vision, web dev, graphing, data visualization, static site generating, web scraping, database, cloud, networking and much more! Too bad it’s so slow.

I’m with you there. So much effort has been put into libraries and frameworks that try to make Javascript not awful, with incredible webpage bloat as the result… it’s long past time to design a better language and framework for stuff that executes in the browser.

Regarding some of the other points in the rant, I think that part of the problem is well meaning attempts to create do-anything libraries and frameworks with some consistency (Java, dot-NET, db systems etc), but again the final result is bloatware. I don’t have an answer there but I note that there are still some libraries that combine efficiency with capability (JUCE C++ library is a commercial example)

Finally, I’ve only picked up Python in the last couple of years, and I share your enthusiasm for it; it seems to be easy to quickly construct working solutions. But it’s interpreted. Does that make our use of it part of the whole problem?

Curl:

http://www.curl.com/products/prod/language/

Quite possibly, though I must say Python is no more part of the problem than C++. Yes, C++ (and C) offers a very high degree of performance. But the problem with C++ is what an intractable monstrosity it has become. It’s simply too big, too complex and all-encompassing. I know developers who have used C++ professionally for 20 years and are still finding things about the language that they did not already know. Sure, C++ is very expressive, but too complicated, at least in my mind; and I’ve been using it on and off for well over a decade now.

Python prioritized simplicity and readability over performance and has mightily succeeded. But keep in mind that C is almost 50 years old, C++ is almost 40 years old, and even Python is about 25. These are not languages developed to tackle the programming challenges being faced today. We need languages that have C++-like performance with the ease of use of Python. Development of such a language is the first step to correcting the bloat-filled, dependency-dependent path that programmers are on today.

Go and Rust are able to achieve a better balance between performance, ease of use, readability, multicore processing, etc. Go leans a bit more towards ease of use but is still quite performant, whereas Rust leans towards high performance while still being relatively easy to read and use.

I think bad software is like a bad trip; it’s so hard to get off of it.

And there are so many things wrong that we’d need a miracle to clean it all up….

I don’t mind the GUI, it allows me to see the ten open command windows all at once. What really winds me up is the way the writers fix things that aren’t broken then call it an improvement and the way words in the menus are replaced by little pictures that mean absolutely nothing until you learn them.

I think it is Google that has an ad on TV at the moment telling you that you need your computer to be more like your phone. No thanks; having one device splattered in meaningless pictures is already too many.

Thing is that back in the day the programmer had to be frugal with all resources and check everything. The CPU was never fast enough and you could easily run out of memory so you learned how to make do and program within those restrictions. Kids today with their flashy debuggers, profilers and optimizers just have it too easy. Now get off my lawn!

Maybe we need a new architecture. Rather than scaling up the complexity of the PC, as has been done since the 8-bit era and before, split it up into many small cores, and assign a program to each core, or to multiple cores as needed. Something like a GPU, but with a dedicated hardware kernel to allocate resources and provide a physical barrier to speculative execution.

Oh come on, just stop with the “new architecture” nonsense. Stuff we have today is nothing like the stuff back then and the hardware is mostly not even at fault in the context of this problem.

Also, at least make sensible suggestions. Having dedicated cores per application would solve literally nothing; it would just make the chips much more complicated, larger and more expensive.

Stuff we have today is exactly like the stuff we had back then, just with a turbo (caches), nitrous injection (out of order execution) and tuned air inlets and exhaust (speculative execution).

I hope you realize we could have over a thousand capable cores on a chip without problem if the systems were designed for that? We still can’t develop parallel programs efficiently on normal computers, as the number of cores is too low to use proper techniques; the work that is done is for specialized systems like supercomputers (superclusters), which target a completely different workload than a standard desktop computer.

And yes, we desperately need a new architecture, and we need it yesterday. As frequency scaling has stalled and current types of architectures can’t extract more IPC out of existing code, we need new architectures that allow for more performance in a more efficient package, combined with new software that is more efficient. In a modern computer the energy needed to do a computation is dwarfed by overhead costs, many of which aren’t theoretically needed but are an artifact of the von Neumann/stored-program design.

“And yes, we desperately need a new architecture, and we need it yesterday. As frequency scaling has stalled”…

What gibberish are you spouting now, man? Can’t you see what is right in front of you? If we had PCs running at a hundred million GHz, the programmer wannabes would still manage to make them crawl. Pay decent programmers a good wage, incentivize the wannabes to be good, and you won’t need supercomputers just to run a text editor any more. Speaking of which, somebody put us out of our misery and kill emacs already.

“I hope you realize we could have over a thousand capable cores on a chip without problem if the systems were designed for that?”

No, we couldn’t. You want those thousand cores to be fast and you need lots of caches and memory to feed them. The thing which comes closest to what you’re claiming is the Sunway SW26010 with 256+4 cores, and that’s very far from a general purpose system which can run a separate process on each CPU. Very, very far.

“We still can’t develop parallel programs efficiently on normal computers, as the number of cores is too low to use proper techniques”

I happen to work in Supercomputing. The vast majority of things people run on desktops doesn’t have enough parallelism to warrant all the overhead, complexity, wasted power etc. introduced by all that isolation you’re proposing. Not to speak of all the inter-core communication latencies.

Take a look at the Mill architecture.

Well, duh. It’s got nothing to do with how many cores the architecture has. We could run multi-user programs (I’ve done ones that had many thousands of people using them at the same time), and a fair few of them at once on the same hardware, on what is now ancient hardware not as powerful as the PC I write this on…

It’s not a hardware problem…

We seem to have lost what we learnt in the 1960s to ’90s, and a whole generation of programmers is going to have to learn it again… somehow…

Using a multitude of cores opens up a different way of computing by eliminating the need for abstraction layers. It may be possible to treat core permissions the same way we treat programs today. For example you could set a core permission for a web browser to read/write its own files and run binaries from the net without security fears. Then run multiple operating systems simultaneously and have them interact without emulation layers or virtual machines.

“Using a multitude of cores opens up a different way of computing by eliminating the need for abstraction layers.”

That turns out not to be the case…

Going multithreaded certainly changed the way we designed software (many decades ago) but having those threads run on different cores – or doing super coarse grain as you suggest – makes virtually no difference.

In fact, what you suggested is just about security, and you have just swapped one set of problems for another: as soon as those programs have to talk to something else, you have a different but equally worrying set of security problems.

You can do all of that today; there are multiple chips with 32, 48 or 64 cores per socket on the market. But they’re huge, expensive and draw a lot of power. How are you going to explain to people that they have to buy a $1,000 Xeon instead of a $100 Ryzen just because of some potential security gains?

Maybe referring to this?

https://spectrum.ieee.org/view-from-the-valley/computing/hardware/david-patterson-says-its-time-for-new-computer-architectures-and-software-languages

Quote: “David Patterson Says It’s Time for New Computer Architectures and Software Languages

Moore’s Law is over, ushering in a golden age for computer architecture, says RISC pioneer

David Patterson—University of California professor, Google engineer, and RISC pioneer—says there’s no better time than now to be a computer architect.”

Parkinson’s law – work expands so as to fill the time available for its completion.

Corollary – software expands so as to fill available memory.

This made me laugh so hard and yet, it makes me incredibly sad at the same time.

I think most of the problem is profit and marketing. Companies don’t care about the product being good, just different enough to get people to buy it. There are just so many options and features packed in, which will most likely never get used or really don’t do anything useful. When it’s new and bought, it takes a lot of drive space and runs slowly, so the user just thinks he needs a new/better computer. Companies can address bugs and performance issues in future versions, and sell those as well. Back in the old days, companies were self-owned: the people who started them owned and operated them. The big money is in selling stock, then selling the company to a competitor who either likes your product or wants to squash it.

It used to be that the operating system was bare-minimum and did little in the background once you loaded your application. Now our operating systems take up a good portion of the resources and do all kinds of stuff on the side, some of it kind of sneaky and unwanted, for development and marketing purposes. How much of Windows is really there to improve the system, and how much is just to share your usage habits?

After spending my morning reading all the comments, my 2 cents worth is:

Write your program like a device driver:

Init the thing, set up a table-driven handler (this avoids chains of “does this = that” comparisons)

[input is converted to a jump address]

Yield to the OS, and handle your events when they happen.

Assembly/machine language does all the above, no sweat.
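The comment above describes a classic table-driven event loop written in assembly. As a rough illustration only, here is the same structure sketched in Python rather than machine code: a dict plays the role of the jump table, and a blocking `queue.Queue.get()` stands in for yielding to the OS until an event arrives. The event kinds (`"key"`, `"backspace"`, `"quit"`) and the handler names are hypothetical, chosen just for this sketch.

```python
import queue

# Hypothetical handlers; each stands in for the routine at one "jump address".
def on_key(payload, state):
    state["text"] += payload

def on_backspace(payload, state):
    state["text"] = state["text"][:-1]

def on_quit(payload, state):
    state["running"] = False

# The table: event kind -> handler. One dict lookup replaces a chain of
# if/elif comparisons ("does this = that").
DISPATCH = {
    "key": on_key,
    "backspace": on_backspace,
    "quit": on_quit,
}

def run(events: queue.Queue) -> str:
    # Init the thing.
    state = {"text": "", "running": True}
    while state["running"]:
        # Blocking get: the thread sleeps until an event is available,
        # which is this sketch's stand-in for "yield to the OS".
        kind, payload = events.get()
        handler = DISPATCH.get(kind)
        if handler is not None:  # unknown event kinds are simply ignored
            handler(payload, state)
    return state["text"]

if __name__ == "__main__":
    q = queue.Queue()
    for ev in [("key", "h"), ("key", "i"), ("key", "x"), ("backspace", ""), ("quit", "")]:
        q.put(ev)
    print(run(q))  # prints "hi"
```

Adding a new event type means adding one table entry rather than another branch, and the average cost of a dict lookup stays roughly constant as the table grows.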

Developers are 1% of software problems; publishers are the other 99%.

“We can’t use it: licensing.” “My kid was telling me we should use this, so we’re going to use this; it’s the future…”

WHEELS REINVENTED

> there was no need at the time for memory expansions or upgrades

Are you kidding? At the time, most PCs shipped with 256k. I only knew one person in the user group who could afford 640k, but memory expansions of smaller sizes were commonplace. It’s what the “expandability” of the PC vs. Mac was all about.

If you could race the beam and pull off a simple game in 1978 on an Atari 2600 with one hundred twenty-eight BYTES of active memory and either 2k or 4k of cartridge ROM… you should be dang well able to produce a decent game for my smartphone or PC in ten megs — maybe a little more for a slideshow game like MYST.

Do not tell me you need a 4k monitor to throw blood around in some stupid-*** shootemup. DOOM can do that, and DOOM can run on my flippin’ printer FFS. Do not tell me that I need a six terahertz processor and however many petabytes of RAM and storage, when all I want is an office suite, a reasonably professional graphics program (think CorelDRAW — Inkscape and Adobe both can stuff it until they learn to design reasonable interfaces), maybe some simple games, and a decent Web browser. If you must have a website that’s so freaking flashy that it won’t load on an Atom netbook in 15sec or less, you need to reevaluate your values and goals in life.

One of my two best friends runs a tech shop, and he tells me most of his customers fit their files neatly into a forty-gig space. So why the **** does anyone have a three terabyte freaking SSD? Bragging rights, is all I can figure. “Whoever has the most toys…” which of course is a complete load of crap. Don Henley put it quite nicely, in a little ditty he wrote (and sang) called “Gimme What You Got”:

You spend your whole life

just pilin’ it up there

You got stacks and stacks and stacks

Then, Gabriel calls an’ taps you on the shoulder

But you don’t see no hearses with luggage racks

I’m typing this post on an Atom Z8300-based system with 4 GB of RAM and a 32 GB SSD. Don’t you try and tell me that there’s any reason other than software bloat that I couldn’t do the same dang crap I do all day on this computer on my mother’s old Dell Latitude CPi with a 300 MHz PII and 256 MB of RAM. Or, if you take the stupid flashy ads off the ‘Net (which I would love to see anyway; that stuff is nasty) and exclude YouTube for a brief moment, on my Toshiba Portege T3400CT, which rocks a 33 MHz 486SX and 20 MB of RAM. (Before you ask, the motherboard has 4 MB built in, and it takes proprietary-ish 72-pin SODIMMs up to 16 MB in size. Yes, you read that right: they are double-sided. You only get one RAM slot, though.)

…heck, I ought to be able to do *that* on a Commodore 64. So why must I have gigs of RAM and a processor running at Ludicrous Speed — and have that considered “low end” to boot?! Riddle me this. At what point does progress become pointless if not outright wasteful, and at what point do we, as a global civilization, realize that we’ve completely overblown the plumbing to the point of being completely bonkers, and reel things in a bit?

A Commodore 64 can even do this: https://www.youtube.com/watch?v=yW5v93P-gBw

It’s all the fault of Arduino.

Everyone is a programmer now. (As long as there are libraries that do it all)

Even when you program an Arm it is hard to steer clear of a bloated HAL – usually that resembles Arduino libraries to make it easy for non programmers.

Just take a look at the questions asked on most programming forums and you will see what I mean.

So much wisdom has already been shared, but I agreed with the article too much to not comment. One of my “pet peeves” is that different OSs have unnecessarily different interfaces. To write an app that works on most devices, a developer must learn several interfaces that accomplish the same tasks in completely different ways. This requires months of careful study. Alternatively, the developer can spend a week studying an inefficient cross-platform tool. If I wanted to publish an app quickly (to beat competition, impress a VC, etc.), I know which option I would choose.

Now you know why VMs and the like are popular. Why write to a moving target when you can write to a virtual machine that’s the same regardless of the hardware?

+1… VMs have definitely made my life easier; their existence offers a glimmer of hope regarding these issues! The contrast between the predictable, rational world of a well-configured virtual machine and the chaos of trying to develop for multiple OSes’ APIs has helped me realize which areas of software development I enjoy!

I agree that software bloat is a problem and all, but is nobody here concerned about the sheer number of questionable claims in the article? Here are just three examples of what I mean:

1) Comparing text editors and other programs between now and then. Despite claims otherwise, modern text editors do much, much more than they used to. If you want to compare apples to apples, look at Notepad in Windows 7 (it just got new features in Windows 10) and in Windows 3.1. But everyone hates Notepad. It has no features. Lightning fast and lightweight, but no undo history, no Unix/Mac line ending support, barely even any encoding support. All other text editors have changed with user expectations. Some are faster and more lightweight than others; some have more features.

2) Compilers are slow and could be faster. I’m thinking he’s never tried to write his own compiler. They get complicated really fast. It’s hard enough to do a simple one for a more or less worthless made-up language for demonstration. Making a halfway decent compiler for a language such as C++11 or later would be a MAJOR undertaking. And of course no compiler these days can be considered decent unless it takes on the task of OPTIMIZING its generated code, and that alone is a very deep rabbit hole. All things considered, I’m amazed at how fast current compilers are. We won’t discuss just-in-time compilers.

3) “My Dell monitor needs a hard reboot from time to time because there’s software in it.” Did he really verify that there was a software bug in his monitor, or did he just assume it? It seems just as likely to me that his monitor has a hardware problem that forces a re-initialization through a hard reboot. It is well documented that memory errors occur on a regular basis, even in “good” DRAM modules, due to cosmic rays and other phenomena: https://superuser.com/questions/521086/i-have-been-told-to-accept-one-error-with-memtest86/521093#comment627249_521093