When it comes to seeing in strange spectrums, David Prutchi is the guy you want to talk to. He’s taken pictures of rocks under long-, medium- and short-wave UV light, he’s added thermal imaging to consumer cameras, and he’s made cameras see polarization. There’s a lot more to the world than what the rods and cones on your retina can see, and David is one of the best at revealing it. For this year’s talk at the Hackaday Superconference, David is talking about DIY Ultraviolet Photography. It’s how bees see, and it’s the bee’s knees.

Visible light is just a tiny portion of the spectrum; right now, there are kilometers-long radio waves flying around you, and hopefully not too many gamma rays blasting through your skull. Closer to the visible portion of the electromagnetic spectrum, we have infrared and ultraviolet. Infrared visualization is well-studied, and even more so since the Raspberry Pi Foundation started shipping a camera without an IR filter. Ultraviolet, on the other hand, is a bit stranger. That doesn’t mean it’s not used in nature; bees can see deep into the ultraviolet, and flowers have evolved to be visible to bees.

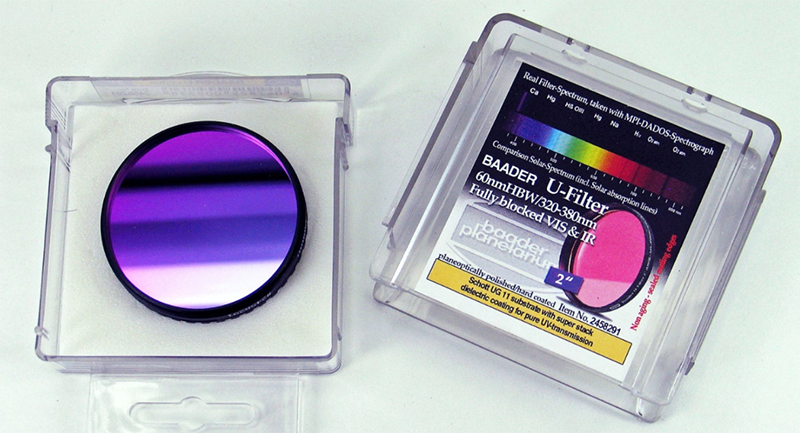

But this is a talk about UV photography, and this means modifying a camera: taking it apart and removing the IR and UV filter. Yes, camera sensors are sensitive to IR and UV, which is why most cameras have a filter that only passes the visible spectrum of light. Of course, once you get rid of that IR and UV filter, you’ll need to block out the IR and visible light yourself. This can be done by simply stacking UV band-pass filters and IR-blocking short-pass filters. Dedicated UV lenses are extremely expensive, but photo enlarger lenses are nearly transparent to UV, and some old Soviet and East German prime lenses don’t have a UV-blocking coating.
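Why stacking works can be sketched numerically: to a first approximation, the transmission of a filter stack at each wavelength is the product of the individual transmissions, so a leak in one filter is suppressed by the other. The sketch below is only an illustration. The transmission values are invented placeholders rather than measurements of any real filter, the names `UV_BANDPASS`, `IR_SHORTPASS` and `stack` are hypothetical, and reflections between the filters are ignored.

```python
# Hypothetical transmission fractions (0..1) keyed by wavelength in nm.
# These numbers are made up for illustration, NOT measured from real filters.
# The band-pass filter is given a near-IR leak around 720 nm on purpose.
UV_BANDPASS = {350: 0.80, 550: 0.001, 720: 0.30, 850: 0.05}
IR_SHORTPASS = {350: 0.85, 550: 0.90, 720: 0.01, 850: 0.001}


def stack(*filters):
    """Multiply transmissions wavelength by wavelength.

    Simplifying assumption: reflections between the filters are ignored.
    Only wavelengths present in every filter table are kept.
    """
    wavelengths = set(filters[0])
    for f in filters[1:]:
        wavelengths &= set(f)

    combined = {}
    for wl in sorted(wavelengths):
        t = 1.0
        for f in filters:
            t *= f[wl]
        combined[wl] = t
    return combined


combined = stack(UV_BANDPASS, IR_SHORTPASS)
for wl, t in combined.items():
    print(f"{wl} nm: {t:.4%} transmitted")
```

With these placeholder values the stack still passes most of the 350 nm light, while the assumed 720 nm leak drops from 30% to 0.3%. With real filters, the published transmission curves would take the place of the tables.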

This is an amazing talk that goes into the depths of UV photography, in the end producing UV images simply from stacking filters, no Photoshop involved. Of course, this is just a teaser; David literally wrote the book on UV imaging with a DSLR, and a quick perusal of this volume shows there’s much more than a thirty-minute talk can cover.

Update: David Prutchi has made the slides for this talk available as a PDF.

Could we please get the slide deck somewhere? The video doesn’t show all the slides full-screen: sometimes it just shows the washed out video of the projector.

I pinged David to see if we could get a hold of the slides and he published them on his website (PDF). Thanks David!

thank you very much, both of you!

“There’s a lot more to the world than what the rods and cones on your retina can see, and David is one of the best at revealing it.”

How about bats, or dolphins?

You mean something like this? https://actu.epfl.ch/news/mapping-a-room-in-a-snap/

The link to the PDF on that page is broken; for some odd reason they have added a +html at the end of the correct URL. https://www.pnas.org/content/pnas/early/2013/06/12/1221464110.full.pdf works.

Also budgies – they are tetrachromatic – having a fourth color receptor in their eyes tuned to UV. Many of their markings are indistinct under white light, but influence their mating choices and other social interactions.

https://www.reed.edu/biology/professors/srenn/pages/teaching/web_2010/RMBsitefinal/index.html

https://www.reed.edu/biology/professors/srenn/pages/teaching/web_2010/RMBsitefinal/index_0clip_image004.png

Taking real UV photos like this is a little difficult because most digital cameras have lowpass (UV cut) filters on the front of the sensor. Even if you have a high quality UV highpass filter, you’re going to get very poor UV sensitivity from the camera.

However, you can pretty easily take UV-fluorescent photos with an unmodified camera – the UV excites the subject so that it re-radiates in the visible spectrum, which any old camera can capture. Flowers are usually pretty cool – a few types of chlorophyll come out bright red – and pollen comes out super-bright blue/white. Expect some trouble if you have optical brighteners in your laundry powder though because there will be super-bright fibres all through your house.

You’ll want to buy a powerful (I used 25W) UV LED of around 365nm, plus a “U340” highpass filter from eBay. That highpass filter – placed over the LED – removes most of the visible blue light that the LED puts out, preventing the fluorescing colours from being swamped by blue. The images are still fairly blue though, so you’ll want to set your camera’s colour temp for 6000K or maybe use a skylight filter on the camera. Also: polycarbonate safety glasses will block the UV and prevent eye damage; without the glasses you can expect conjunctivitis within a few minutes.

My attempts, including photos of the DIY UV source: https://www.flickr.com/photos/24125157@N00/albums/72157651227310381

where is the UV diode array from?

Absolutely, positively must have safety glasses with this. Source – I got UV burns in my eyes from playing with a quartz tube UV lamp without them years ago, when I was a kid – scary stuff.

Ah, that’s nothing. For a real hack, don’t hack a camera, hack your eyes!

https://petapixel.com/2012/04/17/the-human-eye-can-see-in-ultraviolet-when-the-lens-is-removed/

Almost all silicon-based CMOS sensors are insensitive to the UV band. What you’d observe is due to UV photons being converted to electrons through thermal conversion of the (more energetic) UV photons; it’s a very, very small proportion, and they don’t come from quantum bandgap conversion. This means you need a very, very long exposure to expect to collect even a few electrons of data. Note that you could observe any photon this way (X-ray or gamma), but it’s so impractical that it’s not used anywhere.

I know videos suck for conveying information, but he does address the sensitivity function of CMOS sensors. Additionally, I’ve had a bunch of early digital cameras that produced conspicuous blurry fringes around patches of daylight due to the near-UV not being adequately blocked. This isn’t a thermal effect.
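As a quick sanity check on the photon energies being argued about here, E = hc/λ can be evaluated for a few wavelengths and compared with silicon’s band gap. This is only a back-of-the-envelope sketch: the band gap of about 1.12 eV is an approximate room-temperature figure, the function name `photon_energy_ev` is made up for this example, and the comparison says nothing about how much UV actually reaches the silicon through a camera’s filters and optics.

```python
# Physical constants (exact values in the 2019 SI definition).
H = 6.62607015e-34      # Planck constant, J*s
C = 299_792_458.0       # speed of light in vacuum, m/s
EV = 1.602176634e-19    # joules per electronvolt

# Approximate room-temperature band gap of silicon (assumed round figure).
SILICON_BANDGAP_EV = 1.12


def photon_energy_ev(wavelength_nm):
    """Return the photon energy in eV for a wavelength given in nm."""
    return H * C / (wavelength_nm * 1e-9) / EV


for label, nm in [("365 nm UV LED", 365), ("green", 550), ("near-IR", 850)]:
    energy = photon_energy_ev(nm)
    relation = "above" if energy > SILICON_BANDGAP_EV else "below"
    print(f"{label}: {energy:.2f} eV ({relation} the ~{SILICON_BANDGAP_EV} eV band gap)")
```

A 365 nm photon comes out at roughly 3.4 eV, well above the band gap, so photon energy alone does not rule out ordinary photoelectric detection of near-UV in silicon.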

Hacker conferences are just as good at video and sound recording, cutting, etc. as average users are at securing their data.