In the 1980s there was, for the first time, an impetus to equip young people with computer literacy. A variety of educational programmes were launched, typically involving a collaboration between a computer manufacturer and a broadcaster, and featuring BASIC programming on one of the 8-bit home computers of the day. One such educational scheme was a bit different though, as the German broadcaster WDR produced an educational series using a modular computer built around an unusual 1-bit processor and programmed in hexadecimal machine code. [Jens Christian Restemeier] has produced a replica of this machine which is as close to the original as he can make it. (Video, in German, embedded below.)

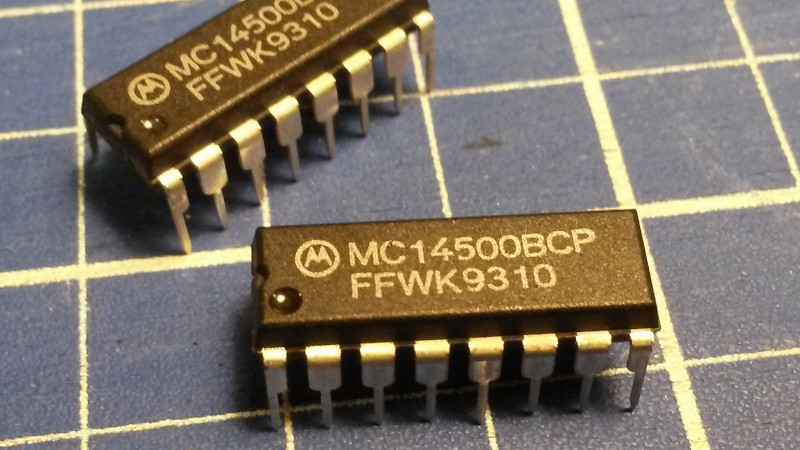

The computer is called the WDR-1; it started life as a kit machine before it was taken up by the broadcaster. The unusual 1-bit processor is a Motorola MC14500, which was produced from 1977 onwards for industrial control applications. In the video he takes the viewer through the machine’s parts, explaining the purpose of each daughter card and of the motherboard. Lacking an original to copy, he instead worked from photographs to replicate the chip placements of the original, substituting pin headers for the unusual sockets used on the 1980s machines. Take a look at his video, below the break.

More information on the WDR-1 can be found online in German (Google translate link). Meanwhile we’ve featured the MC14500 before, in a small embedded computer.

MC14500 image: JPL [CC BY-SA 4.0]

Jenny, my Physics teacher introduced me to the MC14500 in 1982 – when I did an electronics option in my Physics A-level. And the rest is history – thanks to Dave Gell for setting me out on my future career.

I don’t get it. What makes it 1-bit? He says there is a 4-bit instruction. There is talk of words and bytes in the video, and a memory of 256 command words, which sounds like there were 8 bits of address space.

It’s true this is really tiny, especially for 1986, when 32-bit processors were state of the art.

The datapath is 1 bit. The MC14500 doesn’t contain any address logic or registers – these must be implemented in other logic on the board, and can be any size.

Here’s the datasheet for the chip. The data “bus” is pin 3 (and not a bus), and there are no address pins.

It has a 4-bit instruction register and gives direct access to that on pins 4-7.

All other registers are 1-bit and wired to one pin each, including the conditional flags.

[PDF Link] https://en.wikichip.org/w/images/5/5b/MC14500B_datasheet.pdf

Think of driving a shift register from one clocked IO pin of an MCU. From the MCU’s point of view it is still a 1-bit wire, no matter how many 595s you chain together on it.
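To make the shift-register analogy concrete, here is a small behavioural model of a chain of 74HC595s. It is a sketch rather than a timing-accurate simulation: the class and function names are made up for illustration, and a real chain also needs the shared shift clock (SRCLK) and latch clock (RCLK) lines alongside the single data line. Each chip’s serial output QH′ feeds the next chip’s serial input, so the MCU only ever drives one data bit per clock however long the chain gets.

```python
class ShiftRegister595:
    """Behavioural sketch of one 74HC595: an 8-stage shift register plus an output latch."""

    def __init__(self):
        self.stages = [0] * 8   # QA..QH inside the shift register
        self.outputs = [0] * 8  # what actually appears on the output pins

    def clock(self, ser):
        """SRCLK rising edge: shift `ser` into QA and return the old QH (the QH' serial out)."""
        spill = self.stages[7]
        self.stages = [ser] + self.stages[:7]
        return spill

    def latch(self):
        """RCLK rising edge: copy the shift register onto the outputs."""
        self.outputs = list(self.stages)


def shift_out(chain, bits):
    """Clock `bits` into the first chip of `chain`; each chip's QH' feeds the next chip's SER."""
    for bit in bits:
        carried = bit
        for chip in chain:          # all chips share SRCLK, so they shift on the same edge
            carried = chip.clock(carried)
    for chip in chain:              # shared RCLK: every output updates at once
        chip.latch()


chain = [ShiftRegister595() for _ in range(3)]   # 24 outputs from one data line
pattern = [1] + [0] * 22 + [1]                    # first and last bits set
shift_out(chain, pattern)
print(chain[0].outputs, chain[2].outputs)
```

With three chips, the first bit clocked in ends up on the last chip’s QH and the last bit on the first chip’s QA, which is why 595 driver code usually shifts out the data for the far end of the chain first.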

My dad actually designed a display panel using the chips in figure 2. His panels were big backlit diagrams of every valve, pump and pipe in a factory. A typical light output was: light this pipe if valve A is lit and pump B is lit. There were many, many such equations. I was working at UNIVAC, and it was a fun project for me.

‘The data bus is pin 3’ is the joke, the punchline, and the explanation all wrapped up in one!

For some retro ’80s German TV fun, you can find the original series if you search for “Bit und Byte Der 1-bit Computer”.

As Allan-H says, the 1 bit refers to the data path: the CPU can read and write a single bit, and has a 1-bit ALU. There is no program counter; that has to be provided by an external circuit. The 3-bit argument of each instruction is also handled by external components; in this case it goes to an 8-to-1 input selector and a 1-to-8 latch.

Jump instructions have to be implemented externally as well, and there are even control lines for driving an external stack. In this case the jump signal is connected to the reset input of the program counter, so the program can only be a single loop. Some limited flow control is possible via a conditional “skip next instruction” instruction.
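The architecture described above can be sketched as a tiny interpreter. This is an assumption-laden model, not the WDR-1’s actual logic: each program word is a (mnemonic, 3-bit address) pair, the address drives both the 8-to-1 input selector and the 1-to-8 output latch, JMP simply resets the external counter to zero, and IEN/OEN are assumed to be already set to 1 at start (a real program would normally set them first). The mnemonics follow the MC14500B datasheet; my reading of it is that input data is gated by IEN and that stores only take effect when OEN is 1. RTN and the stack-control flags are not modelled. The example program is the display-panel equation from earlier in the thread: light lamp 0 if valve A (input 0) and pump B (input 1) are both on.

```python
def run_icu(program, inputs, passes=1):
    """Run an MC14500-style program on a hypothetical WDR-1-like board.

    program: list of (mnemonic, address) pairs; the 3-bit address drives both the
             8-to-1 input selector and the 1-to-8 output latch. Must contain a JMP.
    inputs:  list of 8 input bits.
    Returns the 8 output-latch bits after `passes` trips round the loop.
    """
    rr, ien, oen = 0, 1, 1             # assumption: enables already set to 1
    outputs = [0] * 8
    pc, completed, skip = 0, 0, False
    while completed < passes:
        op, addr = program[pc]
        pc = (pc + 1) % len(program)   # external program counter, wraps like a real counter
        if skip:                       # after SKZ: the word still takes a clock but is ignored
            skip = False
            continue
        data = inputs[addr] & ien      # input selector output, gated by IEN
        if op == "LD":
            rr = data
        elif op == "LDC":
            rr = 1 - data
        elif op == "AND":
            rr &= data
        elif op == "ANDC":
            rr &= 1 - data
        elif op == "OR":
            rr |= data
        elif op == "ORC":
            rr |= 1 - data
        elif op == "XNOR":
            rr = 1 if rr == data else 0
        elif op == "STO":
            if oen:
                outputs[addr] = rr     # 1-to-8 addressable latch
        elif op == "STOC":
            if oen:
                outputs[addr] = 1 - rr
        elif op == "IEN":
            ien = inputs[addr]
        elif op == "OEN":
            oen = inputs[addr]
        elif op == "SKZ":
            skip = rr == 0             # skip the next word if the result register is 0
        elif op == "JMP":
            pc = 0                     # JMP flag wired to the counter's reset input
            completed += 1
        # NOPO, NOPF: no change in this model
    return outputs


# Lamp 0 = valve A (input 0) AND pump B (input 1)
PIPE_LAMP = [("LD", 0), ("AND", 1), ("STO", 0), ("JMP", 0)]
print(run_icu(PIPE_LAMP, [1, 1, 0, 0, 0, 0, 0, 0]))
```

Every extra panel equation is just a few more LD/AND/OR/STO words before the JMP, which is roughly what “many, many such equations” would have looked like on this chip.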

Not so long ago I was working at an international company that still had production equipment running these devices. It was quite a complex process, and the controllers were designed, built and programmed by one man (Edward) whom I worked with. It was all built in the ’70s/’80s on protoboard using wire wrap. As far as I know the machines are still running; why change if they’re still running perfectly? I saw this device way back when it first came out but dismissed it and started messing about with the SC/MP, 8080 and 9900.

Not being able to locate spare parts would be one reason to upgrade.

No rotate instructions needed. Cool!

It seems the main chip, Motorola’s Industrial Control Unit, is no longer available, but here you can find a discrete clone of the MC14500: https://www.youtube.com/watch?v=5KeHVW0NO6M

I got mine from eBay; they’re recycled from old equipment. The first one I got didn’t work properly, and the second failed just as I was about to record everything…

As early as 1956, the Royal McBee LGP-30, a desk-sized “personal computer”, used this idea. It had a 1-bit accumulator and processed instructions in serial form. A tiny little scope let you watch the data streaming into the accumulator. I count myself blessed to have been able to use it.

Ca. 1965, when I was trying to build a computer of my own, I entertained the same idea. Noting the vast difference between the cost of static 8-bit RAM chips and that of shift-register chips, I envisioned a pair of shift registers: One for “RAM” data, one for the stored program.

Towards the end of WWII, Navy fire-control used mercury-based “mumble tanks.” The data goes whizzing around in serial form, and the logic decides which bit to grab and process.
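A recirculating memory (mercury delay line, coil of wire, or a loop of shift-register chips) only lets you touch the bit that happens to be passing the read/write point, so access time depends on where the data currently is in its circuit. The model below is a hypothetical sketch of that behaviour, not of any particular machine: `DelayLine` rotates its bits one place per clock and counts the clocks spent waiting.

```python
from collections import deque


class DelayLine:
    """Hypothetical model of a recirculating serial memory."""

    def __init__(self, nbits):
        self.line = deque([0] * nbits)  # line[0] is the bit currently at the tap
        self.position = 0               # address of the bit currently at the tap
        self.ticks = 0                  # clocks spent waiting for data to come round

    def _tick(self):
        self.line.rotate(-1)            # everything moves one place along the loop
        self.position = (self.position + 1) % len(self.line)
        self.ticks += 1

    def _seek(self, addr):
        while self.position != addr:    # nothing to do but wait
            self._tick()

    def read(self, addr):
        self._seek(addr)
        return self.line[0]

    def write(self, addr, bit):
        self._seek(addr)
        self.line[0] = bit              # replace the bit as it passes the tap


memory = DelayLine(16)
memory.write(5, 1)                      # waits 5 clocks for address 5 to come round
print(memory.read(5), memory.read(4), memory.ticks)
```

Reading address 4 straight after touching address 5 costs 15 clocks on a 16-bit loop, almost a full revolution; on average a random access waits about half the loop length.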

Having never heard the delightful phrase ‘mumble tank’ before, this led to some roaming around teh webz, where I found this ..

http://www.rfcafe.com/references/popular-electronics/electronic-mind-remembers-popular-electronics-august-1956.htm

.. which calls them “mumble tubs”, but hey. Fun stuff.

But you know, looking at how they had to struggle to achieve even a few hundred bits, I got wondering how smug and superior we really ought to feel here in 2020. By which I mean, sure, you can easily, for not very much money, get a quarter of a trillion bits with access way down in the nanos – but consider what a very highly specialized artifact that memory represents. Some frighteningly expensive machinery turns that stuff out in factories by the truckloads. And it’s arranged in a very particular way. When you buy your DDR4, you’re really buying a PC part. A major subsystem, already integrated just so.

This is miles from constructing a mnemonic memory circuit using stone knives and bear skins, which is just about what they were doing in the 1940s. Think about it, wire and crystals and liquid metal, that you could hear work? The medium was primitive but the choice of what to build from it was far less constrained. Ok, sure, 8 decades on we kind of know which patterns are useful, but we’re kind of locked in now, is all I’m saying.

Quote: Think about it, wire and crystals and liquid metal,

It sounds like voodoo black magic today.

One of my favorites, which I can’t find on the net today, went like this.

It had two coils of wire for memory, yes just ordinary wire.

A transducer pulsed binary into the wires and a receiver detected these pulses at the other end. The ALU would either pass the original bit back in or invert it depending on the last instruction.

The ALU had only one flip-flop: a one-bit register.

It was Turing complete and you could run complex code on it.

So yeah, two pieces of wire for RAM and registers and instructions. Voodoo black magic.

Early ingenuity was incredible.

Do you mean this one? https://youtu.be/2BIx2x-Q2fE

Also see this one about the EDSAC with a mercury delay line: https://youtu.be/Yc945sNB0uA

Understanding base principles thoroughly really helps.

Some of us are still happy to tinker electronically. Remember the Commodore Amiga? It still has a massive following, with lots of new hardware having been designed at board level by fellow hobbyists.

that is beautiful!

Growing up with cheap RAM, I was surprised to hear of that sort of system, but over the years I’ve come to appreciate the cleverness. I’d like to make a minimal serial computer someday, a bit like a WireWorld computer.

WireWorld. Sigh. New time waster.

Would love a link for wireworld. Search turned up a bunch of cable vendor hits, but also this

https://en.m.wikipedia.org/wiki/Wireworld

Is that the thing you referred to?

I’m sure it was. I was able to find multiple Android apps to play with that support this. It’s like Conway’s Game of Life, but the cells are constrained to wires, so you can implement logic circuits.

Here’s a fun way to think about the wonders of today’s RAM: imagine making some out of nothing but plain old through-hole TO-92 NPN transistors.

So what is one bit of static RAM? A D-type flip-flop will do the trick. You can trivially get two in a 14-pin IC; a 7474 or a CD4013 are good staples. But how is that made?

A D-type flip-flop is 4 NAND gates and 1 NOT (inverter). A NAND gate is two transistors, and an inverter only takes one.

So a flip-flop takes 9 transistors (8 for the NANDs + 1 for the NOT).

9 transistors for one bit. 9*8=72 transistors for one byte.

A BB830 breadboard should have 63 columns, which fits exactly 21 TO-92 transistors side by side, or 42 using both the top and bottom halves. That one byte will need two boards, although it won’t completely fill the second one.

Now multiply that by 1024 for one kilobyte of RAM, and envision the physical space those boards would take.
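Running the numbers with the comment’s own assumptions (2 transistors per NAND, which presumably implies resistor-loaded gates whose resistors are ignored here, 1 per inverter, 4 NANDs per flip-flop, and 42 TO-92s per BB830 board with no room left for wiring) gives the following. The constant and function names are illustrative only.

```python
import math

TRANSISTORS_PER_NAND = 2    # the comment's figure; resistors not counted
TRANSISTORS_PER_NOT = 1
NANDS_PER_FLIP_FLOP = 4
TRANSISTORS_PER_BOARD = 42  # 21 TO-92s per half of a BB830, both halves used


def transistors_per_bit():
    return NANDS_PER_FLIP_FLOP * TRANSISTORS_PER_NAND + TRANSISTORS_PER_NOT


def breadboard_ram(nbytes):
    """Return (transistor count, breadboards needed) for `nbytes` of flip-flop RAM."""
    transistors = transistors_per_bit() * 8 * nbytes
    return transistors, math.ceil(transistors / TRANSISTORS_PER_BOARD)


print(breadboard_ram(1))     # (72, 2)
print(breadboard_ram(1024))  # (73728, 1756)
```

So a single kilobyte comes to over 1,700 breadboards before a single resistor or jumper wire is placed.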

It really gives one an appreciation of all the tricks of efficiency combined with the scale of miniaturization and organization of transistors within an IC.

Especially when looking at the IC packages on a single 32 GB DDR4 DIMM, which, for just a bit over a hundred bucks, contains 256 billion of those bits.

Well, three things:

1) You teased the “what if you tried doing this with old-style transistors?” lead, so I was expecting at LEAST a calculation of how many square miles of circuit board it would take to implement a 16 GB RAM array, but you totally dropped the ball.

2) You compared DRAM cells, which consist of ONE transistor and ONE capacitor per bit, with static RAM, which takes six transistors (not the nine you claim) per bit. So it’s apples vs. mutant oranges by the time you’re done.

3) Just so I’m not being just as lame, if we assume that you make those DRAM cells using about 5mm x 5mm per bit, which is still pretty dang tight (e.g., putting the capacitors on the back side, maybe?), that’s 25 mm^2 * 16 billion * 8 = 3,200,000,000,000 (= 3.2 trillion mm^2) = 320 hectares, or if you like, about 1.2 square miles. If you want to scale that up to your nine-transistor SRAM cell, feel free.

Whoops. I calculated for 16 GB, not 32 GB. So for 32 GB, 640 hectares, or 2.5 square miles. And even at $0.01/transistor + $0.01/capacitor, that’s $.02 * 32 billion * 8, comes to a little over $5 billion, without even considering the cost of the PCB.
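The estimate above can be checked with a few lines of Python. This just restates the comment’s assumptions (5 mm × 5 mm per DRAM cell, decimal gigabytes, $0.01 per transistor plus $0.01 per capacitor) and converts with 1 ha = 10,000 m² and 1 mile = 1,609.344 m; the function name is made up.

```python
CELL_AREA_MM2 = 5 * 5                 # 5 mm x 5 mm per DRAM cell, as assumed above
MM2_PER_HECTARE = 10_000 * 1_000_000  # 10,000 m^2 per ha, 1,000,000 mm^2 per m^2
MM2_PER_SQ_MILE = 1_609_344 ** 2      # 1 mile = 1,609,344 mm
COST_PER_CELL = 0.01 + 0.01           # one transistor + one capacitor


def dram_estimate(gigabytes):
    """Return (hectares, square miles, dollars) for a board-level DRAM of this size."""
    bits = gigabytes * 10**9 * 8      # decimal gigabytes, as in the comment
    area_mm2 = bits * CELL_AREA_MM2
    return area_mm2 / MM2_PER_HECTARE, area_mm2 / MM2_PER_SQ_MILE, bits * COST_PER_CELL


for size in (16, 32):
    hectares, sq_miles, dollars = dram_estimate(size)
    print(f"{size} GB: {hectares:.0f} ha, {sq_miles:.2f} sq mi, ${dollars / 1e9:.2f} billion")
```

This gives about 320 ha (1.24 sq mi) for 16 GB, and about 640 ha (2.47 sq mi) and $5.12 billion for 32 GB, in line with the figures quoted.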

Wayyyyy back when it was just out-of-date junk surplus, I had a card from one of the early-’60s transistor computers, I think. It held 1 machine word: 12 transistors, 12 bits, though I don’t know if there was parity per nybble or something strange. That was laid out on a lot of board; it was about 8″ × 5″ with an edge connector. If they only managed that density, probably near 50 mm × 50 mm per circuit, plus the edge connector and bus, it’d be a large city per gigabyte.
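Working the density estimate through, assuming an 8″ × 5″ card holding 12 bits and a decimal gigabyte of 8 × 10⁹ bits, with edge connectors and bus wiring ignored:

$$
\frac{203.2\,\text{mm} \times 127\,\text{mm}}{12\ \text{bits}} \approx 2150\ \text{mm}^2/\text{bit} \approx (46\,\text{mm})^2
$$

$$
A \approx 8\times10^{9}\ \text{bits} \times (50\,\text{mm})^2 = 2\times10^{13}\,\text{mm}^2 = 2\times10^{7}\,\text{m}^2 = 20\,\text{km}^2\ \text{per gigabyte}
$$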

“One such educational scheme was a bit different though, …”

Funny. :-)

And now, there is a 1-bit RISC-V core: https://github.com/olofk/serv

Calculate a Mandelbrot set image on the MC14500?

Anybody?

;-)

Impossible?

1. Hitchhiker 25:

(41) Deep Thought paused for a moment’s reflection.

(42) “Tricky,” he said finally.

ॐ.

Don’t force it!

ॐ looonnn…nnnger.

Well, the slowest 1bpp Mandelbrot render I’ve ever seen was on an Amstrad PCW 9512+; it took some 30 hours, I think (*written in BASIC???), so there’s the record to beat LOL

*There was definitely something a bit weird about it, because I’d also seen it done on a Spectrum, with the same speed CPU, and although it took ages, it wasn’t that terrible, I think 2 or 3 hours.

There’s a great article about the MC14500, with the instruction set and a detailed explanation over 8 pages, in Elektor from 1979.

Now available at https://www.americanradiohistory.com/UK/Elektor/70s/Elektor-1979-03.pdf

The Guide Book for this amazing chip is available on Bitsavers.

any chance of getting the schematics posted?

The original manual contains the schematics on page 38:

http://wdr-1-bit-computer.talentraspel.de/documents/wdr_1-40.pdf

While I don’t mind sharing the KiCad project I don’t know the current copyright status, and want to avoid a Cease and Desist letter from the company that owns the production license.

If you recreated the schematic in KiCad, you own the copyright for that schematic… thanks for the info anyway!

This is absurd on its face. It’s like saying, “If you copy a book by typing it into your computer, then you own the copyright to that version of the book.” Absolute nonsense.

That is slightly different. It is well established (at least in the US) that a schematic is considered a work of art and is unique to the creator. As long as it is not a one-to-one duplication of the original content, which it isn’t in this case since it was created from scratch in KiCad, it is the copyright of the creator.

The logical electrical connections are not under copyright while the actual layout artwork may qualify. It is a messed up system protecting *art* not science.

Indeed, @tekkieneet. Here is a link with some good reading about the subject, just in case someone wants to learn more…

http://patentonomy.blogspot.com/2012/06/copyright-protection-for-mechanical.html

1-bit processing turned out to be very popular in the digital-to-analog converters of CD players. Process the data stream from the disc one bit at a time, but do it really fast: at least 8 times faster than a 16-bit parallel DAC, which would also need a sixteen-bit buffer to assemble the bits as they come off the disc.

It was a cheap way to work around the problems with Sony’s first consumer CD player, where they’d cheaped out by using a single 16-bit DAC and interleaving the words from the left and right channels, causing a very tiny yet not completely imperceptible phase shift. A second DAC would’ve bumped the cost up some.

Years later we’d see another big rise of 1-bitness with USB, then SATA: 1 bit at a time, faster and faster and faster.

I think I get your point – you can potentially save a bunch of money by handling multi-bit tasks serially, one bit at a time. I used to work on Cipher streaming tape drives, where they used a serial data path to do de-skewing on 9 tracks of parallel data, saving a couple dozen chips and a good piece of PCB space. Quite clever, actually. But 1-bit DACs are a completely different thing. They still have to treat the digital side of the conversion in parallel; they just essentially pulse-width modulate the analog side in a closed loop that brings its integral *close* to the digital value coming in, and that is then low-pass filtered to actually realize that integral. I studied these in depth in the 90s, and found that they had an inherent deficiency: their noise level increased linearly with frequency. It just worked out for the consumer electronics industry, that human hearing has a similar drop-off of sensitivity with frequency, so at least in not-very-demanding listening situations, the noise wasn’t audible.
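The closed-loop idea can be illustrated with a textbook first-order delta-sigma modulator. This is a swapped-in example rather than any particular CD player’s design: it produces a pulse-density rather than a strictly pulse-width output, and real bitstream converters used higher-order loops and oversampling filters not shown here. Note that the integrator works on multi-bit values even though the output is one bit, which is the point made above about the digital side staying parallel.

```python
def delta_sigma(samples):
    """First-order delta-sigma modulator: samples in [-1, 1] -> bitstream of +1/-1."""
    integrator = 0.0   # multi-bit accumulator: the "parallel" digital side
    feedback = 0.0     # previous output bit, fed back through the loop
    bits = []
    for x in samples:
        integrator += x - feedback              # accumulate input minus what was already emitted
        bit = 1.0 if integrator >= 0 else -1.0  # the 1-bit quantiser
        bits.append(bit)
        feedback = bit
    return bits


stream = delta_sigma([0.5] * 1000)
print(stream[:8], sum(stream) / len(stream))
```

Averaging the bitstream (the job of the analog low-pass filter) recovers the input value; for a constant input of 0.5 the stream settles into a repeating 1, 1, 1, -1 pattern.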

But this is very different from the concept of 1-bit when it comes to computing. In computing, 1 bit means you don’t even acknowledge the existence of more than two numbers – zero and one. The MC14500 was intended to replace discrete logic gates in industrial control applications where feedback came from (for the most part) binary sensors. Yes, you COULD do actual arithmetic of multiple bits, just as you can do 256-bit arithmetic with an 8-bit processor by breaking it into smaller chunks, but this is an even more arduous task when the CPU doesn’t even have a carry mechanism. To add two 1 bit numbers and get sum and carry, you have to do one operation to get the sum, and another operation to get the carry. For each BIT. It’s almost literally like simulating the logic gates it takes to implement an adder in hardware.
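To make “one operation for the sum, another for the carry” concrete, here is a half adder written with MC14500 mnemonics and run on a deliberately tiny interpreter. The symbolic addresses A, B, SUM and CARRY are hypothetical (real code would use numbered I/O lines), only the five instructions used are implemented, and IEN/OEN are ignored. XOR isn’t in the instruction set, so the sum comes from XNOR followed by a complemented store.

```python
def execute(program, bits):
    """Run (mnemonic, name) pairs against a dict of named 1-bit I/O lines."""
    rr = 0  # the 1-bit result register
    for op, name in program:
        if op == "LD":
            rr = bits[name]
        elif op == "AND":
            rr &= bits[name]
        elif op == "XNOR":
            rr = 1 if rr == bits[name] else 0
        elif op == "STO":
            bits[name] = rr
        elif op == "STOC":
            bits[name] = 1 - rr
        else:
            raise ValueError(f"not modelled: {op}")
    return bits


HALF_ADDER = [
    ("LD", "A"), ("XNOR", "B"), ("STOC", "SUM"),  # sum = NOT(A XNOR B) = A XOR B
    ("LD", "A"), ("AND", "B"), ("STO", "CARRY"),  # carry = A AND B
]

for a in (0, 1):
    for b in (0, 1):
        result = execute(HALF_ADDER, {"A": a, "B": b})
        print(a, b, "->", result["SUM"], result["CARRY"])
```

That is six instructions for one bit of a half adder; a full adder with carry-in needs more, and the whole sequence repeats for every bit position.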

1-bit DACs share this much with USB, eSATA, PCIe, SDI, and other modern serial interfaces: they aren’t essentially binary; they just take advantage of the fact that if you multiplex many parallel bits into a single signal path, you eliminate all of the problems with bit-to-bit skew that come with parallel interfaces at high speeds, and as a bonus, use fewer components. You’re basically trading speed, which you have more of than you need, for precision, which costs money.

Let’s be clear about this: this chip was made to be fundamentally binary, not as a cheaper and sometimes better way of serializing processing of multi-bit data, like all of the other examples you mention.

Based on what you said, maybe the minimum is 2 bits.

But one can still return 2 bits as two 1-bit values in sequence, if the receiving side’s clock sync knows that the incoming data is 2 bits wide. A simple FPGA gate can handle that.

Or, for that matter, multiple 1-bit units interconnected.

Still, even with 2 bits, some parallelism is needed for adding, say, 16-bit numbers. That is what current CPU designs do, or GPUs for that matter.

Many interfaces have changed to serial, but not CPUs or GPUs so far. Could there be a use case? Anyway, it’s fascinating, given the microcontroller world view we have here.

If you want to do actual arithmetic in a 1-bit CPU, like adding multi-bit numbers, the standard approach would be the same as it is in hardware: for each bit you need a full adder with three inputs, A, B, and carry, and two outputs, sum and carry. You can do the logic any way your ALU can manage it, but you have to do it twice: once to get the sum and a different operation to get the carry. A 1-bit ALU optimized for this would have an internal carry flag that holds the carry between bits, and a special instruction that does all of the logic for sum = A + B + carry, with the carry from the operation being stored in the same carry flip-flop, all in one clock, but the MC14500 was not so optimized, since that’s not what it was made for. So it would take a number of clock cycles for each bit.
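The carry-flip-flop ALU described above is essentially a bit-serial adder. The Python sketch below models one, with one loop iteration per clock, least significant bit first; the function name and the use of unsigned integers are just for illustration, and it is not MC14500 code.

```python
def serial_add(a, b, width):
    """Add two unsigned integers one bit per clock, LSB first, using a single carry flip-flop."""
    carry = 0                                  # the carry flip-flop, held between bits
    result = 0
    for i in range(width):                     # one clock per bit position
        x = (a >> i) & 1
        y = (b >> i) & 1
        s = x ^ y ^ carry                      # full-adder sum, using the old carry
        carry = (x & y) | (carry & (x ^ y))    # full-adder carry-out, kept for the next bit
        result |= s << i
    return result, carry


print(serial_add(200, 100, 8))  # (44, 1)
```

Adding 200 and 100 in 8 bits gives the 8-bit result 44 plus a carry out of 1, since 300 = 256 + 44. A 12-bit machine would spend 12 clocks in this loop per addition, plus its instruction-fetch overhead.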

I believe there were some larger small machines, like the PDP-8/s (which used a 12-bit word), that did their arithmetic in serial fashion as described above, so that adding two numbers would take around 14 cycles (including instruction fetch). But again, this was not a 1-bit computer, even if the ALU itself was 1-bit.

I’ve got a sneaking suspicion that I might have thrown one or two of these out a few years back, found in random component selection, looked up specs, went “Ewww” and cast them aside or away. I think I also might have come across them in the wild before, in a coffee maker or something, again, less than impressed, thinking something like “They’ve got a damn cheek calling this thing microprocessor controlled!”. Must have mellowed in my old age a bit, developed an appreciation for the way we got here, as I’m thinking, “Weird, but sorta cool” now.

That’s why you should never throw anything away, my friend who’s a hoarder used to say.

Tell me about it.

My desk has five inches of piled up tech on it.

If I dig down four inches then I get to 1980s tech.

At the desk surface, which I haven’t seen for a long time, there’s probably 1970s tech.

The 14500 had an interesting characteristic that made it nice for some sorts of industrial control: a pass through the loop took the same number of clock cycles (and so the same amount of time) no matter which branches the code took. Very handy for things like traffic lights.
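One way to see why the timing is fixed: on a board like the WDR-1 the external counter advances on every clock, and SKZ only makes the chip ignore the next instruction rather than jump over it, so a pass always costs one clock per program word up to the JMP. The sketch below counts clocks for a small hypothetical program under every input combination; the program and function names are made up, and only the instructions it uses are modelled.

```python
from itertools import product


def clocks_per_pass(program, inputs):
    """Run one pass of `program` up to and including JMP; return (clocks, outputs)."""
    rr, skip, clocks = 0, False, 0
    outputs = {}
    for op, addr in program:
        clocks += 1                 # the counter steps every clock, skipped or not
        if skip:
            skip = False            # fetched but ignored, as after SKZ
            continue
        if op == "LD":
            rr = inputs[addr]
        elif op == "AND":
            rr &= inputs[addr]
        elif op == "STO":
            outputs[addr] = rr
        elif op == "SKZ":
            skip = rr == 0
        elif op == "JMP":
            break                   # end of pass: counter reset
    return clocks, outputs


# Hypothetical: write output 4 only when inputs 0 and 1 are both on; copy input 2 to output 5.
PROGRAM = [("LD", 0), ("AND", 1), ("SKZ", 0), ("STO", 4), ("LD", 2), ("STO", 5), ("JMP", 0)]

timings = {clocks_per_pass(PROGRAM, bits)[0] for bits in product((0, 1), repeat=3)}
print(timings)
```

Every one of the eight input combinations takes the same seven clocks, whereas a CPU with real conditional jumps would run a different number of instructions depending on which path was taken.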

The early HP handheld calculators (HP-35 family) used a 1-wire bus. The CPU, RAM, ROM, and display all communicated via 56-bit data words on a single signal.

indeed. :D

My favorite site is https://www.quinapalus.com/wi-index.html

Answer: Not Wasting Their Lives On Phones