Phones used to be phones. Then we got cordless phones, which were part phone and part radio. Then we got cell phones. But with smartphones, we have a phone that is both a radio and a computer. Tiny battery-operated computers are typically a bit anemic, but as technology has marched forward, those tiny computers have grown to the point that they outpace desktop machines from a few years ago. That means more and more phones are incorporating technology we used to reserve for desktop computers and servers. Case in point: Xiaomi now has a smartphone that sports a RAM drive. Is this really necessary?

While people like to say you can never be too rich or too thin, memory can never be too big or too fast. Unfortunately, that’s always been a zero-sum game: fast memory tends to be lower-density, while large-capacity memory tends to be slower. The fastest common memory is static RAM, but that requires a lot of area on a chip per bit and also consumes a lot of power. That’s why most computers and devices use dynamic RAM for main storage. Since each bit is little more than a capacitor, the density is good and power requirements are reasonable. The downside? Internally, each bit has to be rewritten after it is read, and refreshed periodically before its tiny capacitor discharges.

Although dynamic RAM density is high, flash memory still serves as the “disk drive” for most phones. It is dense, cheap, and, unlike RAM, holds data without power. The downside is that its interface is cumbersome and relatively slow, despite new standards that improve throughput. There’s virtually no way the type of flash memory used in a typical phone will ever match the access speeds you can get with RAM.

So, are our phones held back by the speed of the flash? Are they calling out for a new paradigm that taps the speed of RAM whenever possible? Let’s unpack this issue.

Yes, But…

If your goal is speed, then one answer has always been to make a RAM disk. These were staples in the old days when you had very slow disk drives. Linux often mounts transient data using tmpfs, which is effectively a RAM drive. A disk that refers to RAM instead of flash memory (or anything slower) is going to be super fast compared to a normal drive.
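A quick way to see which directories on a Linux system are already RAM-backed is to look for tmpfs entries in /proc/mounts. The sketch below assumes the standard whitespace-separated /proc/mounts layout (device, mount point, filesystem type, options, dump, pass); the function name is hypothetical.

```python
import os
from typing import List


def tmpfs_mounts(mounts_text: str) -> List[str]:
    """Return the mount points whose filesystem type is tmpfs.

    Each line of /proc/mounts holds: device, mount point, fstype,
    options, dump, pass. Spaces inside a mount point appear as the
    escape sequence \\040; this sketch does not decode them.
    """
    points = []
    for line in mounts_text.splitlines():
        fields = line.split()
        # Skip blank or malformed lines; field 2 is the filesystem type.
        if len(fields) >= 3 and fields[2] == "tmpfs":
            points.append(fields[1])
    return points


if __name__ == "__main__" and os.path.exists("/proc/mounts"):
    # On a Linux system, list the RAM-backed mounts currently in use.
    with open("/proc/mounts") as f:
        for point in tmpfs_mounts(f.read()):
            print(point)
```

On a typical desktop distribution this usually turns up entries such as /run or /dev/shm, which is the "transient data" case described above.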

But does that really matter on these phones? I’m not saying you don’t want your phone to run fast, especially if you are trying to do something like gaming or augmented reality rendering. What I’m saying is this: modern operating systems don’t make a major distinction between disk and memory. They can load frequently used data from disk into RAM caches or buffers and manage that quite well. So what advantage is there in storing stuff in RAM all the time? If you just copy a flash drive to RAM and then write it back before you shut down, that will certainly improve speed, but you will also waste a lot of time grabbing stuff you never need.

Implementation

According to reports, the DRAM in Xiaomi’s phone can reach up to 44 GB/s, compared to the flash memory’s 1.7 GB/s reads and 0.75 GB/s writes. Those are all theoretical maximums, of course, so take them with a grain of salt, but the ratio should be similar even with real-world measurements.
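To put those peak figures in perspective, here is a back-of-the-envelope calculation. It assumes a hypothetical 3 GB game and treats the quoted numbers as sustained rates, which real hardware will not achieve; it ignores latency, decompression, and CPU work entirely.

```python
# Peak bandwidth figures reported for Xiaomi's phone, in GB/s.
# These are theoretical maximums, not measurements.
DRAM_GBPS = 44.0
FLASH_READ_GBPS = 1.7
FLASH_WRITE_GBPS = 0.75


def transfer_seconds(size_gb: float, gbps: float) -> float:
    """Idealized time to move size_gb at a sustained gbps; ignores latency,
    decompression, and any CPU work done on the data."""
    return size_gb / gbps


if __name__ == "__main__":
    game_gb = 3.0  # hypothetical game size, not from the report
    print(f"read from flash: {transfer_seconds(game_gb, FLASH_READ_GBPS):.2f} s")
    print(f"write to flash:  {transfer_seconds(game_gb, FLASH_WRITE_GBPS):.2f} s")
    print(f"read from DRAM:  {transfer_seconds(game_gb, DRAM_GBPS):.3f} s")
    print(f"DRAM/flash read ratio: {DRAM_GBPS / FLASH_READ_GBPS:.1f}x")
```

For the hypothetical 3 GB game this works out to roughly 1.8 s to read from flash, about 4 s to write it there at install time, and about 0.07 s to read it from DRAM, a raw ratio of about 26x. That ratio is far larger than the 40% to 60% improvement Xiaomi cites, which hints (though a sketch like this cannot prove it) that load times are dominated by work other than raw storage reads.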

The argument (according to Xiaomi) is that games could install and load 40% to 60% faster. But this raises the question: how did the game get into RAM to start with? At first we thought the idea was to copy the entire flash to RAM, but that does not appear to be the case. Instead, the concept is to load games directly into the RAM drive from the network and then mark them so the user can see that they will disappear on a reboot. The launcher will show a special icon on the home screen to warn you that the game is only temporary.

So it seems that unless your phone is never turned off, you are trading a few seconds of load time for repeatedly installing the game over the network. I don’t think that’s much of a use case. I’d rather have the device intelligently pin data in a cache. In other words, add a flag to game files that tells the system to keep them in cache until there is simply no choice but to evict them, and you’d have a better system: one comparatively fast load from flash memory, followed by very fast startups on subsequent executions until the phone powers down. The difference is that you won’t have to reinstall every time you reset the phone.
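The pinning idea can be sketched as an LRU cache in which flagged entries are evicted only when nothing unpinned is left. This is a hypothetical model: the class and the `pinned` flag are illustrative names, and this is not how the Linux page cache or Android actually manage memory.

```python
from collections import OrderedDict


class PinnedLRUCache:
    """LRU cache in which pinned entries are evicted only as a last resort."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        # key -> (value, pinned); iteration order is least recently used first.
        self.entries = OrderedDict()

    def get(self, key):
        if key not in self.entries:
            return None  # miss: the caller would have to read from flash
        self.entries.move_to_end(key)  # mark as most recently used
        return self.entries[key][0]

    def put(self, key, value, pinned=False):
        if key in self.entries:
            self.entries.move_to_end(key)
        self.entries[key] = (value, pinned)
        while len(self.entries) > self.capacity:
            self._evict_one()

    def _evict_one(self):
        # Prefer the least recently used entry that is not pinned.
        victim = None
        for key, (_, pinned) in self.entries.items():
            if not pinned:
                victim = key
                break
        if victim is None:
            # Everything is pinned: no choice but to drop the oldest entry.
            self.entries.popitem(last=False)
        else:
            del self.entries[victim]
```

A pinned game stays resident while ordinary files churn through the remaining space, and nothing has to be re-downloaded after a reboot: the first launch simply reads from flash again.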

This is Not a Hardware RAM Drive

There have been hardware RAM drives, but that’s really a different animal. Software RAM drives, which take part of main memory and make it look like a disk, appear to have originated in the UK around 1980 in the form of the Silicon Disk System for CP/M and, later, MS-DOS. Other computers of that era were known to support the technique, including Apple, Commodore, and Atari, among others.

In 1984, IBM differentiated PC DOS from MS-DOS by adding a RAM disk driver, something Microsoft would duplicate in 1986. However, all of these machines had relatively small amounts of memory and couldn’t spare much for general-purpose buffering. Letting a human decide that it made sense to keep a specific set of files in RAM was a better solution back then.

On the other hand, the Xiaomi design does have one important feature: it is good press. We wouldn’t be talking about this phone if they hadn’t incorporated a RAM drive. I’m just not sure it matters much in real-life use.

We’ve seen RAM disks cache browser files that are not important to store across reboots and that usually works well. It is also a pretty common trick in Linux. Even then, the real advantage isn’t the faster memory as much as removing the need to write cached data to slow disks when it doesn’t need to persist anyway.

Sounds like just good marketing to me. Android phones have Linux inside. Linux has always used available RAM as a disk cache, so you already have that benefit on a dynamic basis, which seems like the best approach to me. I don’t really see an advantage to having a filesystem locked into RAM in some way. Just give me more RAM.

XIP (execute in place) is a huge performance win for Java programs that load many libraries at startup, for example Just About Every Android App. Maybe you are happy to wait for apps to start up but the rest of us place value on our time.

Android phones suck and have absurd startup times for apps, no question about it. This may trace back to stupid requirements of the Java language. Most Linux programs use shared libraries that will end up resident in the disk cache. I start my Android phone maybe once a month, so I should only be paying the price to bump things into cache after startup. My Linux desktop behaves like that, but my Android phone is a consistent dog. I’ll blame Java because I hate it already anyway.

That’s funny, my Android phone works just fine; browsing is no slower than on my laptop. And my phone cost $175 three years ago, so it’s not a “fast” phone by any stretch. Maybe you are infected?

How old is this phone? Most apps on my Pixel don’t even have a noticeable startup time; I just tap the icon and it’s there.

The Pixel is a comparatively higher-end device than most Androids.

You are assuming that loading one app is more important than loading other apps. Plus, what about the time to reload the app from the network after each restart? I am all for saving time — I do crazy things to tweak an extra second or two out of my workflow. But this isn’t it except maybe for some highly specialized workflows.

But…it’s hardwired!!

Part of Apple’s profit margin is charging outlandish incremental prices for increased memory in its phones. With this marketing fillip, they can not only sell you marginally useful stuff, but charge even more for a larger chunk of marginally useful stuff.

Apparently Apple is to blame for adding useless features to android phones, who knew?

The universal human platform of greed will be swiftly adapted to any hardware.

Whenever you make a stupid practice popular, you’re responsible for others taking up the stupid practice.

No, we don’t need RAM drives. What we need is properly written software. Software that is actually efficient with the resources that it is given.

When my phone gets progressively slower over time, and the hardware hasn’t changed, it’s a software problem.

“What we need is properly written software. Software that is actually efficient with the resources that it is given.”

Do you have a plan to import an alien species from another planet to do the work, or do you just not see that humans are not capable of writing “efficient” software, because it has never actually been done?

I think I disagree. Not so much that “humans are not capable of writing efficient software,” but rather “what is the intent of the software?”

Most apps seem (to me, at least) to spend more time loading / displaying ads and tracking the user than they do processing what the user asked for. The best client-side software in the universe is still sluggish while waiting for a server on the other side of the planet.

Wow, ad supported software has ads! Who knew? I’m sure you think that software developers make $5 an hour and they grow on trees!

Either you’ve confused my point with someone else’s, or….

I said nothing about the quality, availability, or compensation of the developers.

I’m suggesting that the business model of the corporations behind phones and tablets is centered on monetizing advertising. Even “ad-free” apps and the OS itself have a great many features that serve the goal of collecting data about the user.

Those features – even if written with divine quality by a well-paid developer – don’t make the device feel any faster.

You missed the bulk of the argument. Ads being present isn’t the problem in this instance (though many of us find them to be a problem); the problem is that they take forever to load because they are the primary aim of the app, and they are always downloaded from somewhere non-local. Why not cache the ads and update them once a month or so, instead of every single time you open the app? Because you’re the product, not the app. The app is the carrot forcing you to work for the company that “grew” it.

Indeed, efficient software is something some people have historically been pretty good at, because hardware limitations forced every programmer to deal with memory limits and handle memory themselves if they wished to achieve much; there wasn’t the headroom for bloat. Now, with all the engines and IDE libraries doing lots of work and abstracting the hardware, the programmer isn’t likely to think about resource use at all, just the easiest way to write the desired function. Add in the big data gathering inherent in most phone applications, which wastes even more resources.

If memory suddenly became stupendously expensive again, programmers and libraries would be focusing on streamlining more than function, as it does you no good to make a program that works if it takes longer than getting out pen and paper and doing it yourself! But cheap memory and ‘portable’ code mean that level of efficiency is rarely considered worth the effort; you have RAM to burn, and you don’t want to optimise for the nth hardware setup manually.

Software started bloating in the 1990s. Back in 1970 you could have a few simultaneous users on a PDP-8 with core memory and discrete transistors. Your CPU and solid state drive are orders of magnitude faster than hardware from 20 years ago. Does software run any faster? Not really. Big programs like Adobe or Solidworks still have loading times. The internet feels no faster than it did back then because of megabytes of JavaScript and browsers that need gigabytes of memory just to load.

Software written 20 years ago loads instantly on modern computers, so just force all developers to use Pentium IIs. Problem solved.

Don’t they already import Third World aliens with little training to write software for pennies on the dollar? Would extraterrestrials really be that much cheaper?

We can dream about people who can long jump 300 feet or throw a football 200 yards or write perfect code but these are all just fairy tales.

There is quite a difference between hot garbage and perfect code.

One doesn’t need to be flawless to be better than bad.

And a lot of current phone applications tend to be a bit silly when it comes to preparing everything before actually starting.

Does an application really need to prepare everything it could possibly need before it wants to show you its first menus?

No, not really. It can progressively load additional resources in the background while it lets you fiddle with the first things it presents. File loading order is a rather large part of software optimization, and handling it dynamically is not all that hard either.

An application knows where within it the user currently is, and where the user can get to from that location, so the application knows what to prepare. By optimizing this alone, most if not all applications can largely eliminate their excessive loading screens during startup.
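One way to act on "where the user can get from that location" is to treat the app’s screens as a graph and queue background loads in breadth-first order from the current screen. The screen names and the `NAV` table below are hypothetical; a real app would derive them from its own navigation structure.

```python
from collections import deque

# Hypothetical navigation graph: screen -> screens reachable in one tap.
NAV = {
    "main_menu": ["settings", "level_select"],
    "level_select": ["level_1", "level_2"],
    "settings": ["audio", "video"],
    "level_1": [],
    "level_2": [],
    "audio": [],
    "video": [],
}


def prefetch_order(graph, current, depth):
    """Screens reachable from `current` within `depth` taps, nearest first.

    Breadth-first order means the screens the user can reach soonest are
    queued for background loading first.
    """
    seen = {current}
    order = []
    queue = deque([(current, 0)])
    while queue:
        screen, d = queue.popleft()
        if d == depth:
            continue  # do not look further than `depth` taps ahead
        for nxt in graph.get(screen, []):
            if nxt not in seen:
                seen.add(nxt)
                order.append(nxt)
                queue.append((nxt, d + 1))
    return order
```

From the main menu with a one-tap horizon this queues only the settings and level-select screens; the levels themselves wait until the user moves closer to them.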

Nor does such a system need to be perfect for it to make a noticeable difference.

You don’t seem to be aware of the economics of software development where quality developers make big $$$ and you seem to think that some race of aliens is going to show up to write code that humans cannot.

And you don’t seem to be a developer, either software or hardware, to be fair.

Though, who knows, maybe I am just an alien who knows a thing or two about really basic software optimization.

But I can see why a lot of programs have excessive loading on startup.

Relying on a literal library of libraries tends to make any application into a drag. And if those libraries are part of one’s main loop, one can’t trivially just “not load them”, since then one might either hard crash or freeze when one eventually needs them… The solution here is to take out the hedge trimmers and trim down that reliance on libraries. One rarely actually needs a ton of them.

And if one actually does need a ton of libraries, one can look into multithreading and split one’s main loop so that only part of the application loads on startup, getting the UI running while the rest is handled in the background. This does, though, risk a second loading screen if the user is fast and/or the device is slow.
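The split described above can be sketched with a background thread: load what the first screen needs synchronously, hand everything else to a worker, and make any code that needs a deferred resource wait for it. The resource names and the `Loader` class are hypothetical; `load` just records a name where a real app would read and decode data.

```python
import threading

# Hypothetical resource names.
ESSENTIAL = ["ui_core", "main_menu_textures"]
DEFERRED = ["level_data", "audio_banks", "cutscenes"]


class Loader:
    def __init__(self):
        self.loaded = []
        self._lock = threading.Lock()
        self.ready = threading.Event()  # set once deferred loading finishes

    def load(self, name):
        # Stand-in for reading and decoding a resource from storage.
        with self._lock:
            self.loaded.append(name)

    def start(self):
        # Load only what the first screen needs, on the calling thread...
        for name in ESSENTIAL:
            self.load(name)
        # ...then hand the rest to a background thread so the UI can run.
        worker = threading.Thread(target=self._load_deferred, daemon=True)
        worker.start()
        return worker

    def _load_deferred(self):
        for name in DEFERRED:
            self.load(name)
        self.ready.set()

    def require(self, name, timeout=None):
        # Needing a deferred resource before the worker finishes blocks here:
        # this is the "second loading screen" case mentioned above.
        if name not in ESSENTIAL:
            self.ready.wait(timeout)
        with self._lock:
            return name in self.loaded
```

The UI can start as soon as `start()` returns; only a user who races ahead to content the worker hasn’t reached yet ends up waiting.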

“The solution here is to take out the hedge trimmers and trim down that reliance on libraries. One rarely actually needs a ton of them.”

Sure, write every line yourself and you might just finish in 100 years or so. And of course all your code is totally bug free.

“Sure, write every line yourself and you might just finish in 100 years or so. And of course all your code is totally bug free.”

Why jump to such an extreme? (It is kind of silly and honestly makes the “discussion” largely pointless, since you aren’t adding anything to it other than being childish.)

Trimming down on an excessive use of libraries isn’t requiring that one writes everything from scratch. Far from it to be fair.

It’s mostly about looking for libraries that are redundant. A lot of projects worked on by many developers tend to have a few redundant libraries.

Then there is the handling of assets. Some programs, for example, load the low-res texture of object A, then the mid-res texture of the same object, then its high-res texture, before loading the low-res texture of the next object. They could instead load the low-res textures for all objects first, so that a scene can be presented to the user instead of keeping them waiting.
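The difference between the two orders is easy to count. In this sketch the scene, the three detail levels, and the function names are all hypothetical; it simply measures how many texture loads happen before every object has at least its low-res texture.

```python
# Hypothetical scene: each object has textures at three levels of detail.
LEVELS = ["low", "mid", "high"]


def naive_order(objects):
    """Depth-first: all detail levels of one object before the next object."""
    return [(obj, level) for obj in objects for level in LEVELS]


def load_order(objects):
    """Breadth-first over detail levels: every object's low-res texture
    first (so the scene can be shown), then all mid-res, then all high-res."""
    return [(obj, level) for level in LEVELS for obj in objects]


def loads_before_scene_shown(order, objects):
    """Number of loads until every object has at least its low-res texture."""
    missing = set(objects)
    for count, (obj, level) in enumerate(order, start=1):
        if level == "low":
            missing.discard(obj)
        if not missing:
            return count
    return len(order)
```

With three objects the naive order needs 7 loads before the scene can be drawn, while the level-first order needs 3; the gap grows with the number of objects and detail levels.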

Optimizing software is mostly about making small, subtle changes that make a large difference to the end result. It is mainly about keeping an eye out for poorly optimized workflows and amending them.

It is obviously not a free fix nor a silver bullet.

Nor does it have to be perfectly optimized either.

One can follow the generalization that 30% of the effort will give 70% of the result. (Some say 20% for 80%, or 40 for 60, but it all kind of depends on the exact scenario and one seldom goes far enough into optimization to plot that curve due to diminishing returns in one’s efforts.)

“Sure, write every line yourself and you might just finish in 100 years or so. And of course all your code is totally bug free.”

You did it again, you completely missed the bulk of the argument and went off on a strawman tangent. I’m starting to think you don’t know anything about coding. That’s really bad considering how bad of a coder I am.

How much of that is feature creep? Usually users and programmers want MORE over time.

This is the real problem here. The free market has no mechanism for telling idiots “no”.

That’s sadly true, but it’s less of a problem when the feature creep includes hardware. Feature creep of the type employed by major OS revisions based solely in software are responsible for devices slowing needlessly and fully usable hardware being made prematurely obsolete. My wife’s iMac she bought before I met her is basically useless even though I have Linux boxes FAR older that can do more with less.

Yeah, but 8,000,000-gigapixel cameras, 120,000 Hz foldable screens, and RAM drives sound like sexy new features (ME WANTIE!!), and well-written software doesn’t (Software? What’s that, like apps?), so you know what we get.

The statement:

“The fastest common memory is static RAM, but that requires a lot of area on a chip per bit and also consumes a lot of power.”

Is a bit incorrect in practice, and on paper.

Static RAM tends to have lower power consumption compared to DRAM.

This is because DRAM needs to be refreshed a fair few times every second, and each refresh cycles all its bit lines through all the data currently stored in the array. That is a rather power-hungry task, to be fair.

SRAM, on the other hand, can just sit idle, keeping all of its cells in check. That task needs no cycling of long bit lines, nor any comparators running to read the contents of the memory cells. Yes, SRAM does have 4x more transistors than DRAM, but power consumption isn’t purely defined by transistor count.

In regards to RAM drives in phones.

Software should instead just be better implemented. Even 0.5 GB/s is plenty of bandwidth for exceptionally demanding applications. Not that a phone would have all that much need for shuffling such great amounts of content regardless. It is not like we are live rendering video on it, but even that would only need a fraction of the current bandwidth.

And even with such a drive, it is highly unlikely that the CPU in the device can actually make use of that bandwidth.

It is a bit like installing 10 Gb/s internet in one’s home while running 10BASE-T from one’s router to one’s computers…

Not to mention that the extra RAM is going to decrease overall battery life, since DRAM consumes a fair bit of power just sitting there doing nothing but refreshing…. (Typical DDR4 consumes around 0.2-0.4 Watts per GB. This also includes a varying degree of IO power consumption, so not just refreshing. But the 0.2 W/GB figure is for a very low memory bus speed.)
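Taking the quoted 0.2 to 0.4 W per GB at face value, the standby cost of a dedicated RAM drive is simple multiplication. The 4 GB drive and 15 Wh battery below are hypothetical values chosen for illustration, and phone LPDDR parts may draw considerably less than the desktop DDR4 figure the comment cites.

```python
# Figures quoted in the comment: roughly 0.2-0.4 W per GB for DDR4,
# including some I/O power. Phone LPDDR parts may differ considerably.
WATTS_PER_GB_LOW = 0.2
WATTS_PER_GB_HIGH = 0.4


def dram_power_range(gigabytes):
    """(low, high) estimate in watts for keeping `gigabytes` of DRAM powered."""
    return gigabytes * WATTS_PER_GB_LOW, gigabytes * WATTS_PER_GB_HIGH


def battery_hours(battery_wh, watts):
    """Hours a battery of battery_wh watt-hours could supply a constant load."""
    return battery_wh / watts
```

Under those assumptions a 4 GB RAM drive alone would draw 0.8 to 1.6 W, and a hypothetical 15 Wh battery could sustain even the low end of that for under 19 hours before doing anything else.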

So your answer is “let’s all have $2000 phones stuffed with SRAM” and you moan about wasted bandwidth! Priceless! Joke of the day!

You totally didn’t get my point.

DRAM is cost effective. But it is also a bit on the power hungry side of things.

Stuffing in excessive amounts of it is generally not beneficial when combating a storage bandwidth issue that largely comes from poorly implemented software.

SRAM is more power efficient, but it is too costly to be practical as a main memory, it is however the bulk of a CPU’s cache.

FLASH is cheap, and also rather power efficient, especially for data storage, but it has a fair bit of latency, so some applications can get into a bit of a waiting game working with it.

But applications can also see improvements in the way they handle their resources, and the order they load them in.

In the end.

These four work together to bring forth their respective strengths for where it makes sense.

Again you are expecting super human capacity from mere mortals, and the software features you want would push development costs and time out into the realm of “unfeasable”. Yes, there is fancy enterprise software that manages resources effectively, but you must pay about $10000/year in subscription costs to defray the insane development cost.

I think you have forgotten the fact that a RAM drive faces the same challenges as a caching solution.

But instead of nice, neat program loops that the CPU has to handle, where cache can be less than 1% of total system memory and still work efficiently, we are instead looking at whole programs, and we need enough of each program to get it to work, except that it could be any program on the device.

A RAM drive would need to be fairly huge to work effectively in this case.

A faster FLASH drive is honestly the better solution if software optimization is “unfeasable”.

Since FLASH is cheaper, it is also the main storage in the device. And it is also more power efficient than a bunch of DRAM.

So as long as the application doesn’t hang up due to FLASH access latency, then it should be the overall better solution.

“Again you are expecting super human capacity from mere mortals”

Who pissed in your cornflakes this morning?

The cache in modern processors is really really fast SRAM and it hits over 95% of memory accesses so it is not really clear that using SRAM for main memory is going to give much of a performance boost.

Nor have I stated that SRAM should be used as main memory.

And yet you talked it up as an alternative to DRAM.

I simply explained why it tends to have lower power consumption than DRAM.

Mainly due to the article stating:

“The fastest common memory is static RAM, but that requires a lot of area on a chip per bit and also consumes a lot of power.” a mostly factually incorrect statement from the author.

That SRAM is much more expensive per bit compared to DRAM is enough to make it impractical as main memory, unless excessively low power consumption is required. But a smart phone isn’t that tight on its power budget.

This entire comment section is Alex pwning an overly-caffeinated X.

I guess I can take that as a compliment.

Straw-men make for great action sequences.

Yes. Static RAM dominated until there was a better understanding of how to refresh dynamic RAM. So instead of the 8K in my OSI Superboard, the Apple II could max out at 48K, and DRAM was the path forward.

But then CMOS 2K ram arrived in a 24 pin package (compatible pinout with ROM). Still not dense, but there were S-100 boards loaded with 2K ram, and a tiny battery to keep it alive when the computer was turned off. It got better with denser cmos ram.

People did build ram drives using that CMOS ram.

But, leapfrog fashion, just as density improved, ram need increased. So the path forward was still dram.

Dynamic ram’s only advantage is density/cost.

Maybe 20 years ago someone pointed out that the best source of RAM was the cache memory on a motherboard. One or two chips could fill a 16-bit address space. If that stuff had been around when 8-bit CPUs were king, things would have been different.

Yes. DRAM has the huge advantage of being really cheap and high density.

Its downside is its constant need for refreshing, something that has gotten worse over time.

Like back in the day, DRAM could idle about for a few seconds before losing data. Now it goes much faster.

Partly due to DRAM arrays getting way bigger, so there is a higher risk of failure there.

But also due to the capacitance in each cell getting smaller.

Back in the day, one could read a DRAM cell multiple times.

But if I recall correctly, modern DRAM cells need to be refreshed when read. (This is typically done automatically.)

Back in the day, the capacitors were built in the interconnect layer, providing nice large capacitors for each cell, so one could read each cell a few times before it faded.

And these days, if the cell uses the parasitic capacitance in the CMOS transistor as the storage capacitor, then one doesn’t have much to drive the bit line with. (And the bit line is huge in comparison, so its voltage likely doesn’t change by much when the cell connects to it.)
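The "bit line barely moves" point follows from simple charge sharing. Assuming the bit line is precharged to half the supply voltage and the cell holds a full "1", connecting them gives a swing of (V_DD / 2) · C_cell / (C_cell + C_bitline). This is an idealized model that ignores leakage and noise, and the capacitance values in the note below are hypothetical.

```python
def bitline_swing(vdd, c_cell, c_bitline):
    """Voltage change on a bit line precharged to vdd/2 when a cell holding
    a full '1' (vdd) is connected to it, by ideal charge sharing:

        delta_v = (vdd / 2) * c_cell / (c_cell + c_bitline)
    """
    return (vdd / 2) * c_cell / (c_cell + c_bitline)
```

With a hypothetical 1.2 V supply, a 20 fF cell against a 200 fF bit line gives only about 55 mV of signal, and halving the cell to 10 fF drops it to roughly 29 mV, which is why shrinking cell capacitance makes reads so delicate.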

Though, some people think that SRAM is magic.

But if we make a many GB large SRAM array, it will likely be even more sluggish than DRAM….

The reason is due to bit and address line capacitance and propagation delay.

This might seem like it would affect DRAM too, and it does. But commercial DRAM arrays aren’t actually all that large, only a few tens of MB; a DRAM chip has many arrays in it. Each array has its own little controller running it, connected to a bus running between all the controllers and out to the chip’s IO section.

This also helps with refreshing the chip, since we can just let each controller refresh its own array without us needing to read out all the contents and write it back “manually”. Though, some older DRAM chips didn’t have that feature… (But if modern DRAM modules didn’t support that feature, then a modern DDR4 memory bus running at 24 GB/s would only be able to have about 200 MB of RAM and then it would spend 100% of its time refreshing it, so on chip refreshing is a wonderful feature.)
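The scale of that last point can be checked with rough arithmetic. The sketch assumes a 64 ms refresh window (the usual DDR4 figure at normal operating temperature, halved to 32 ms at high temperature), counts one read plus one write-back per byte over the external bus, and ignores all command and row-activation overhead, so it gives an optimistic ceiling rather than a precise figure.

```python
def max_capacity_if_refresh_used_bus(bus_bytes_per_s, refresh_window_s):
    """Largest memory (bytes) whose contents could be read out and written
    back over the external bus once per refresh window, using 100% of it.

    Each byte costs two bus transfers: one read and one write-back.
    """
    return bus_bytes_per_s * refresh_window_s / 2
```

For a 24 GB/s bus this comes to about 768 MB with a 64 ms window, or about 384 MB with 32 ms. That is the same order of magnitude as the ~200 MB quoted; real command and activation overheads would pull the number down further.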

Intel, on the other hand, is looking at Optane memory, since it’s non-volatile, doesn’t really wear out, has decent throughput and very low latency, and doesn’t have any crazy power consumption. It is, though, slower than DRAM from what I have gathered, but still interesting.

Though, for phones, I guess a more parallel Flash drive is a better option since Optane isn’t super cheap…

(But wasn’t 5G supposed to offload all of this into the fluffy clouds above? Just kidding….)

Main memory bandwidth is really not so much an issue with modern processors because almost all fetches are cache hits. If you want faster performance, increasing the cache size is the cheapest and easiest. Way better than using faster RAM which will really push out the power and cost budgets. Take a look at the Intel processor catalog and you can see how performance and price go way up with the big Xeon caches. If you look at the die pictures of these processors you will see that half of the chip is cache.

Caches aren’t a silver bullet, far from it to be fair.

Yes, L1 cache can reach a hit rate of up to about 90% rather easily.

But it can still miss a lot of the time, and most of the time it isn’t up at 90%. It all depends on the application one is working with, and its dataset. L1’s main job is to handle a program’s shorter loops, common control variables, and frequently called functions.

Then we have L2, which tends to have a lower hit rate than L1, mainly since it has a somewhat arbitrary size due to how that size is chosen. Its size depends on how much time prefetching offers: when fetching from L2, the core should be on the verge of stalling, i.e., it is “just in time delivery”. L2’s main job is to handle overflow from L1.

L3 sizing is practically down to how much money one wants to toss onto the chip. Its main job is simply to handle cache evictions from L2 and to keep as much data as possible local to the CPU itself: if the data is on the chip, then we shouldn’t leave the chip to get it.

Then we have our main memory buses.

These are a lot slower than even L3. They also tend to have fairly arbitrary wait times, since the memory controller isn’t going to change row address out of the blue just because someone wants something on another row. Changing row address on DRAM takes a fair few memory clock cycles, and during that time we can’t use the memory…. (Now there are multi-rank memory configurations that can talk to each rank on a memory channel independently, so that if one is refreshing or changing row address, another rank can still be worked with. Some server platforms can have 10+ ranks per channel, meaning that you don’t get bus downtime, while consumer systems might have 1-4 ranks per channel.)

The memory controller won’t just switch row address due to that, it will instead wait for a few calls from that row address to come in, or that a call gets to a “time out” and the memory controller is forced to get it, it can’t wait forever since that would lead to the system freezing. The memory controller goes through the queue in a somewhat arbitrary order due to prioritizing rows with lots of calls, or rows that have timed out calls. (I say “time out”, but it’s more like, “We can’t wait forever…” so it’s much more prioritized.) The reason for doing this is to ensure that the memory bus is utilized as efficiently as possible.
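The queueing policy described above can be sketched as a small function. It is a simplified, hypothetical model loosely in the spirit of row-hit-first schedulers such as FR-FCFS: real controllers also juggle banks, ranks, refresh, and dozens of timing constraints, and `Request`, `pick_row`, and `max_wait` are illustrative names.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Request:
    row: int
    arrival: int  # cycle at which the request entered the queue


def pick_row(queue: List[Request], open_row: Optional[int],
             now: int, max_wait: int) -> Optional[int]:
    """Choose which DRAM row to serve next.

    1. A request that has waited max_wait cycles or more forces its row
       (oldest such request first), so nothing waits forever.
    2. Otherwise keep the currently open row if it has pending requests,
       avoiding a costly row change.
    3. Otherwise open the row with the most pending requests.
    """
    if not queue:
        return None
    overdue = [r for r in queue if now - r.arrival >= max_wait]
    if overdue:
        return min(overdue, key=lambda r: r.arrival).row
    counts = Counter(r.row for r in queue)
    if open_row in counts:
        return open_row
    return max(counts, key=lambda row: counts[row])
```

The trade-off is visible in the three rules: batching requests per row keeps the bus busy, while the overdue rule bounds how long any single request can be starved.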

This means that when L3 gets a cache miss, the memory call enters the queue; it can either be lucky and be fetched in just a couple of cycles, or it can sit there waiting for literally hundreds of cycles…. (Some caching/memory systems will put the call into the queue ahead of time, and then evict it if L3 gets a hit. This gives the memory controller some more time to plan its moves. Moves that then might change, so whether this is better or not is a debatable question whose answer varies with the application and system…)
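The standard average memory access time (AMAT) formula shows why a small fraction of slow misses still matters. The latencies and hit rates in the note below are hypothetical round numbers, not measurements of any particular CPU.

```python
def amat(levels, memory_latency):
    """Average memory access time in cycles.

    `levels` is a list of (hit_latency_cycles, hit_rate) from L1 outward.
    Every access that reaches a level pays that level's latency; the
    fraction that misses continues to the next level, and whatever misses
    the last cache goes to main memory.
    """
    time = 0.0
    reach = 1.0  # fraction of all accesses that get this far
    for latency, hit_rate in levels:
        time += reach * latency
        reach *= 1.0 - hit_rate
    return time + reach * memory_latency
```

With L1 at 4 cycles and 90% hits, L2 at 12 cycles and 60%, L3 at 40 cycles and 50%, and 200 cycles to DRAM, the average is 10.8 cycles, and more than a third of that comes from the 2% of accesses that reach memory. If queueing pushes the memory wait to 500 cycles, the average jumps to 16.8 cycles.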

But in some applications, cache size is largely irrelevant. Take 3D rendering for example, or image processing, or video rendering (that is just image processing on steroids), or file hashing, or anything else that operates on datasets that dwarf the size of the CPU cache. (Not that any application has all of the CPU cache to itself, for that matter; a given application gets a small portion of it, and a fair bit is set aside for the next few threads waiting to run, so that when your thread inevitably stalls, another thread can come in and run for at least a few tens of cycles without stalling.)

Also, most modern CPUs, and historic ones for that matter, tend to be largely limited by memory bandwidth if you actually push them. Some computing systems get a bit more interesting in how they fix this issue. One solution is local processing outside of the CPU itself, as can be seen in a lot of mainframes, which tend to have dedicated processors for IO tasks. (Even some computers/servers do this: anyone who has used a RAID card has had a dedicated processor for disk management, and the same goes for some network cards handling one’s network stack. Or the obvious GPU that nearly everyone has for graphics….)

Cache isn’t actually a solution when one needs to shuffle/work with large quantities of data. Cache is mainly there to ensure that one doesn’t need to reload common resources all that often. And a lot of applications tend to be fairly poorly implemented in how they handle cache. Most CPUs have dedicated instructions for cache management, so that one can force prefetch and force flush resources that one knows one will either use soon or won’t use in “forever”.

Now, for cache to even work efficiently, our hardware developers need to have nearly arcane knowledge about program behavior. Or rather, they need to build a system that can understand and adapt to program behavior on the fly, for hundreds or even thousands of threads that can all change behavior over time as well… Optimizing a caching system is far from a trivial task, so it should fall within your “super human capacity from mere mortals”, and so caching systems obviously shouldn’t even be possible to implement as far as you should be concerned.

Not that increasing CPU cache sizes would make your phone apps start any faster anyway, since the bottleneck isn’t main memory, the cache, or even the CPU.

It’s storage bandwidth. And frankly, that can only be fixed by increasing the bandwidth the storage provides. Besides, caching a database has very different requirements than the caching system in a processor.

Just my humble opinion, as someone who has studied and developed computer architectures as a hobby for nearly 15 years.

“It is not like we are live rendering video on it”

Do I understand correctly that you’re saying people don’t use their phones for FaceTime or Zoom?

“It is not like we are live rendering video on it, but even that would only need a fraction of the current bandwidth.”

My statement clearly indicates that the storage bandwidth is already plenty for the application.

Besides, neither FaceTime nor Zoom processes local video from storage.

It is rather just live streaming from the camera to the internet via the CPU/RAM (overly simplified).

So storage bandwidth doesn’t play much part in that.

“While people like to say you can never be too rich or too thin…”

1. They do? I’ve never heard anyone say this

2. There are people who are too rich, it’s a big problem

3. Try telling someone who’s starving that you can never be too thin

I’ve also heard it said Internet commenters can never be too pedantic.

I thought that was a dumb saying I’d never heard before too. I wonder where they say that.

Ethiopian millionaires?

I think the author got it wrong.

We had phones, then cell phones, which were phones with a radio. Early-generation smartphones added a processor.

Today, a smartphone is an ultra-portable computer that also has cell phone capability; that is the difference between it and a tablet. For many people, the smartphone is their primary computing device, and in some cases it may be their only one.

Is a RAM drive a good thing? Yes. Is this currently just marketing hype? Also yes.

The world and the industry need a breakthrough in RAM technology so that we stop relying on refresh and stop losing what we’re doing on power loss.

Power loss is not a problem with battery backup; buggy software that forces a reset is a much bigger problem. Refresh is not a problem on modern processors with tightly integrated on-chip DRAM controllers.

What we need is a different kind of intelligent storage that forbids buffer overruns and doesn’t require explicit lifetime management in software. Most software security issues come from bungled attempts at storage management that end in double frees, use-after-free, overruns, or underruns. We can solve these problems, and much more, by rethinking how we manage memory in hardware. We have been doing it the same way since core memory, ignoring the possibilities newer hardware provides.

A RAM drive is not a new thing; I used one on the Nokia N900 (best phone ever).

With a 64-bit address space and fast flash, the whole concept of a “disk drive” is obsolete. Map it into RAM, for goodness’ sake. No more reading and writing from the drive, just normal memory access for everything. Imagine how much cleaner your code is when open() returns a pointer and you don’t have to call read() or write(). You still have to close it to flush the cache back to the drive in case of power failure.
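Something close to this already exists as memory-mapped files. Here is a minimal sketch using Python’s standard `mmap` module, which wraps the POSIX `mmap()`/`msync()` calls: the file is opened once, mapped, and then read and modified with plain slicing instead of `read()`/`write()`. In this sketch the explicit `flush()` (an `msync()` under the hood) plays the role the comment assigns to close(); the file name `demo.bin` and the helper `demo_mapped_file` are hypothetical.

```python
import mmap
import os
import tempfile


def demo_mapped_file(path):
    """Map a file into memory, read and patch it like a byte array,
    and return the original first five bytes."""
    # mmap cannot map an empty file, so give it some content first.
    with open(path, "wb") as f:
        f.write(b"hello, flash!")

    with open(path, "r+b") as f:
        # Length 0 maps the whole file; the mapping behaves like a mutable buffer.
        with mmap.mmap(f.fileno(), 0) as mm:
            first_word = bytes(mm[:5])  # a plain slice instead of read()
            mm[0:5] = b"HELLO"          # a plain assignment instead of write()
            mm.flush()                  # msync(): push dirty pages back to the file
    return first_word


path = os.path.join(tempfile.mkdtemp(), "demo.bin")
print(demo_mapped_file(path))  # b'hello'
with open(path, "rb") as f:
    print(f.read())            # b'HELLO, flash!'
```

Note that slice assignment into a mapping must keep the same length; growing a file still needs an explicit resize, which is one place where the “just memory” illusion leaks.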

“So, are our phones held back by the speed of the flash?”

No.

They’re held back by the bandwidth of the bus that carries data between Flash and the ALU.

It’s a fundamental limit of sequential processing, known since before cell phones or even integrated circuits were invented, called the Von Neumann bottleneck. (The fundamental limits on parallel processing are the ability to split information into independent, non-sequential paths, and the bandwidth of your parallel processing paths.)

The read/write/refresh speed of a block of memory is only one term in the sum of delays in the signal path between a given memory location and the circuit that operates on the bits. If you reduce that term to zero, a system with a single 300-baud serial bus between the memory and the ALU will have a maximum bandwidth of 300 symbols per second. A system with two such buses in parallel will have twice the bandwidth.

That’s the entire reason for thinking 32-bit microcontrollers are better than 8-bit ones: they can process four times as many bits per tick as an 8-bit microcontroller operating at the same clock speed.
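The arithmetic in the last two paragraphs boils down to peak bandwidth = number of buses × bits per transfer × transfers per second. A minimal sketch, assuming one baud symbol carries one bit and assuming a hypothetical 16 MHz clock for both microcontrollers, each processing one data word per tick:

```python
def peak_bits_per_second(bus_width_bits, transfers_per_second, buses=1):
    """Peak raw bandwidth: every bus moves `bus_width_bits` per transfer."""
    return buses * bus_width_bits * transfers_per_second


# The 300-baud serial example, treating one symbol as one bit for simplicity.
one_bus = peak_bits_per_second(1, 300)
two_buses = peak_bits_per_second(1, 300, buses=2)

# 8-bit vs 32-bit microcontroller at the same hypothetical 16 MHz clock.
mcu8 = peak_bits_per_second(8, 16_000_000)
mcu32 = peak_bits_per_second(32, 16_000_000)

print(one_bus, two_buses)  # 300 600
print(mcu32 // mcu8)       # 4
```

Doubling the buses or quadrupling the word width scales the result linearly, which is the whole argument; real systems then lose some of that peak to protocol overhead and stalls.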

It’s also why we use memory caches: the CPU has a bus that can pass single words between the ALU and the L1 cache at the CPU clock speed. RISC processors generally fetch one data value and decode one instruction per clock cycle. Then there’s a bus between the L1 cache and the L2 cache, which is N times as wide and runs at a rate of at least 1/Nth of the CPU clock. The actual speed is determined by the value of N and the expected number of memory hits per chunk, so the bandwidth of data that actually reaches the ALU equals the width of the ALU bus times the clock speed. The bus between the L2 cache and the L3 cache is even wider, and even slower. By the time you get to the bus between RAM and flash, you’re moving pages a megabyte at a time, at a clock rate of maybe 10% of the CPU clock.

Then it goes down to the external store, the network, and so on. The ultimate expression of the principle is the old saying, “never underestimate the bandwidth of a station wagon full of tapes.”

The goal of memory design is to make the read/write/refresh time as irrelevant as possible.

Modern processors have really enormous caches, and about 99 percent of all fetches are served from cache, so cache size and speed matter far more to performance than external bus speed. For example, the RAM on my laptop is faster than the RAM on my server, but the server runs rings around the laptop with its enormous and speedy cache. The modern saying is “never underestimate the bandwidth of your L0 cache”; it is quite awesome on a high-end Xeon or POWER8 processor.
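Why a 99 percent hit rate matters so much can be seen with the textbook average memory access time (AMAT) formula: AMAT = hit time + miss rate × miss penalty. This is a single-level sketch, and the 1 ns hit time and 100 ns miss penalty are hypothetical round numbers, not measurements of any particular Xeon or POWER8 part.

```python
def amat(hit_time_ns, miss_rate, miss_penalty_ns):
    """Average memory access time = hit time + miss rate * miss penalty."""
    return hit_time_ns + miss_rate * miss_penalty_ns


# Hypothetical latencies: 1 ns for a cache hit, 100 ns extra for a trip to DRAM.
for miss_rate in (0.01, 0.03, 0.10):
    hit_pct = 1 - miss_rate
    print(f"{hit_pct:.0%} hits -> {amat(1.0, miss_rate, 100.0):.1f} ns")
```

This prints 2.0 ns, 4.0 ns, and 11.0 ns respectively: dropping from 99% to 97% hits doubles the effective latency, even though the DRAM itself got no slower.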

To a degree, I see where your argument is coming from.

But it is not remotely accurate to how things work in practice.

First of all, the ALU is only a fraction of a CPU. The ALU is simply the part that performs the calculations and bitwise logic operations on our data. But data processing can and does happen outside the ALU itself, sometimes even outside the core. So there’s no reason to bring up the ALU when talking about flash bus bandwidth, and the ALU isn’t directly connected to main memory either.

You also describe the Von Neumann bottleneck as “The fundamental limits on parallel processing are the ability to split information into independent, non-sequential paths, and the bandwidth of your parallel processing paths”, which is factually incorrect. To be fair, you are describing Amdahl’s law more than anything.
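For reference, Amdahl’s law gives the speedup from spreading a workload over n workers when only a fraction p of it can run in parallel: speedup = 1 / ((1 − p) + p / n). A minimal sketch, with p = 0.9 chosen arbitrarily for illustration:

```python
def amdahl_speedup(parallel_fraction, workers):
    """Amdahl's law: speedup = 1 / ((1 - p) + p / n)."""
    p = parallel_fraction
    return 1.0 / ((1.0 - p) + p / workers)


# With 90% of the work parallelizable, the serial 10% caps the speedup at 10x.
for n in (2, 8, 64, 1_000_000):
    print(n, round(amdahl_speedup(0.9, n), 2))
```

The speedup approaches 1 / (1 − p) no matter how many workers are added, which is a limit on splitting work into independent paths, not a limit on the memory bus.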

The Von Neumann bottleneck refers to the limitation of a system that separates main memory from its processor, where the processor needs more bandwidth than the memory bus can provide. That said, the vast majority of processing systems work this way. Even systems-on-chip are nearly always Von Neumann in their implementation.

Also, the statement “RISC processors generally fetch one data value and decode one instruction per clock cycle.” is largely antiquated. Nearly all modern processors decode multiple instructions nearly every cycle (and this has been the case for the past 20+ years…).

And your description of ever-widening buses is not really how things work. (One could say it works that way for datasets, but then we are technically on a different topic: not bus bandwidth, but protocol encoding schemes.)

If anything, it’s the other way around, except that speed tends to depend on bus length as far as electrical signals are concerned (unless we start driving strip lines, but that opens another can of worms).

For example, a memory bus like DDR3 is 64 bits wide. That is rather pathetic compared to the bus between L1 and the core itself, which tends to be a multiple of the typical instruction size. Now, most instructions do not fiddle with memory directly but only operate on registers, so a 16-bit instruction is sufficient most of the time.
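To put numbers on “rather pathetic”: DDR memory moves one bus-width word per transfer, so peak bandwidth is width × transfer rate. DDR3-1600 works out to the 12.8 GB/s that its PC3-12800 module label advertises. The L1 figure below assumes a hypothetical core that moves 64 bytes per cycle at 4 GHz; real load widths vary by microarchitecture.

```python
def peak_bytes_per_second(bus_width_bits, transfers_per_second):
    """Peak bandwidth of a bus that moves one full bus-width word per transfer."""
    return bus_width_bits // 8 * transfers_per_second


# DDR3-1600: a 64-bit channel at 1600 million transfers per second.
ddr3 = peak_bytes_per_second(64, 1_600_000_000)

# Hypothetical L1 path: 512 bits (64 bytes) per cycle at an assumed 4 GHz.
l1 = peak_bytes_per_second(512, 4_000_000_000)

print(ddr3 / 1e9, "GB/s")  # 12.8 GB/s
print(l1 // ddr3)          # 20
```

Under these assumptions a single core’s L1 path is about twenty times wider than one DDR3 channel, which is why keeping data in cache matters so much.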

But the L1 bus also needs to handle the occasional variable or two. And with 512-bit AVX instructions, that can get a bit heavy… Though some SSE instructions with 128-bit operands can be crazy enough as is.

Buses tend to have a fairly constant width within the CPU itself. However, with multi-chip designs, which modern CPUs are venturing towards, the bus between the chips tends to be narrower. And given its longer length, conventional signaling schemes need to drop the speed a bit, but not all that much; to be fair, the serial clock speed of such a bus can even exceed the core clock speed.

Then we have cache. I have already written about caching and memory systems in another comment above. (It starts with the line “Caches isn’t a silver bullet, far from it to be fair.”)

“Never underestimate the bandwidth of a station wagon full of tapes” is true, but it isn’t relevant to this topic.

What I dislike most about this Hackaday comment system is that when a comment branches off into a long, tangential discussion in which I am not interested, I have to laboriously scroll through the whole lot in an attempt to discover any subsequent, directly relevant comments in which I am interested. Often I just give up exasperated.

That could be remedied by a browser extension made for hackaday.com which would allow all nested comments to be collapsed with a little plus and minus sign. It’s a good idea, and I’d do it but I’ve never made an extension before and as I mentioned above, I’m pretty bad at coding. Feel free to run with the idea.

How do you tell if a comment is interesting and relevant unless you expand it and read it? Sometimes there is an interesting answer to a dumb question.

Something something reputation system* something.

*The people you like, up up. People you don’t, well let’s not speak about them ever again.

De gustibus non est disputandum (there’s no accounting for taste), or

Stuff that you find tangential is totally mainstream to others.

Enjoy the spice and variety of life and let everyone else do the same.

Expecting order from chaos is insane.

There is a reason the editors go light on censorship. Appreciate and embrace it.

“Expecting order from chaos is insane.”

Which is why the fact that there’s life on this dirtball is a miracle unto itself.

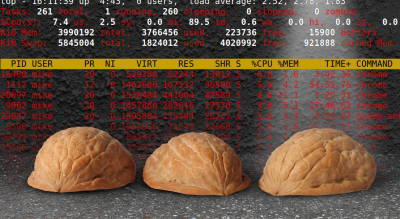

Can someone please explain the walnut shells?

I would say it is a subtle reference to the “where’s the ball” street trick (or “scam”); see: https://antalainen.wordpress.com/2011/10/17/wheres-the-ball-the-scam-called-shell-game-on-the-streets-of-london/ In Spanish it is called “El juego de la mosqueta”. It is illegal, but sometimes you can see it being played in the street on a small folding table (with the main player ready to run if the cops arrive).

Basically, the guy who “manages” the game hides a little ball under one of the shells and starts moving them around. He stops, and the spectators place their bets. Somebody wins a little money; an easy game, you may think… It goes on and on until there is considerable money on the table, then… ta-da… everybody loses. You can never win: it is a scam, and the first ones who “won” are the guy’s sidekicks.

The three shells are a metaphor for the different types of memory, and “the ball” (hidden under one of them) could be the data the program needs “now”…

Best regards,

A/P Daniel F. Larrosa

Montevideo – Uruguay

All I could think of was a pun about “shell game” with the Linux shell… amazing theory with the three different memories! Thank you :)

Looks like any of the popular file-system caching layers of Linux would have done the trick here.

Especially with some clever prefetching that the user could influence (preload my games, browser, whatever).
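On Linux, an application can already ask the page cache to prefetch a file ahead of time with `posix_fadvise(..., POSIX_FADV_WILLNEED)`, which Python exposes as `os.posix_fadvise`. A minimal sketch, assuming a Unix platform that provides the call (it is advisory, so the kernel may ignore it); the helper name `hint_preload` and the `assets.pak` file are hypothetical stand-ins for “preload my games”.

```python
import os
import tempfile


def hint_preload(path):
    """Ask the kernel to start reading `path` into the page cache.

    Returns False if this platform lacks posix_fadvise (e.g. macOS, Windows).
    The hint is advisory: the kernel is free to ignore it."""
    if not hasattr(os, "posix_fadvise"):
        return False
    fd = os.open(path, os.O_RDONLY)
    try:
        # offset=0, len=0 means "from the start to the end of the file".
        os.posix_fadvise(fd, 0, 0, os.POSIX_FADV_WILLNEED)
    finally:
        os.close(fd)
    return True


# Usage: pre-warm a (hypothetical) 1 MiB game asset file before it is needed.
demo = os.path.join(tempfile.mkdtemp(), "assets.pak")
with open(demo, "wb") as f:
    f.write(os.urandom(1 << 20))
print(hint_preload(demo))
```

Unlike a dedicated RAM drive, this leaves the memory available to the kernel for other uses when the prefetched pages aren’t needed.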

This is not what innovation looks like. It’s just marketeering.

The point of MOST of the arguments on this page is that a RAM drive only DIVERTS the time-costs on a device like a phone.

This isn’t a server that runs 24/7. It gets rebooted constantly. It goes into low-power states. It will need to load that data from flash at SOME point.

How long does it take to load everything from a “reasonably” sized phone’s flash (16 GB? 32 GB?) into DRAM?

Is it better to take 1-2 seconds for an app to load? Or have to wait 15 more minutes every time my phone reboots for a patch?
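The question can be answered with simple division: preload time = capacity / sustained read rate. The read rates below are assumptions chosen to bracket the question, not measured figures for any phone; plug in the real sustained rate of a given device’s flash.

```python
def preload_seconds(capacity_gb, read_gb_per_s):
    """Time to copy `capacity_gb` of flash into DRAM at a sustained read rate."""
    return capacity_gb / read_gb_per_s


# Hypothetical sustained read rates, in GB/s.
for capacity in (16, 32):
    for rate in (0.1, 0.5, 1.0):
        print(f"{capacity} GB at {rate} GB/s: {preload_seconds(capacity, rate):.0f} s")
```

The result scales linearly with capacity and inversely with read rate, so whether a full preload costs seconds or many minutes depends entirely on how fast the flash can sustain reads.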