You might think of interpreters as only good for writing programs. Many people learned programming on some kind of interpreter — like BASIC — because you get immediate feedback and don’t have to deal with the complexities of a compiler. But interpreters can have other uses like parsing configuration files, for example. [Sakib] has a very complete tutorial about writing an interpreter in Scala, but even if you use another language, you might find the tutorial useful.

We were impressed because the tutorial uses formal parsing using a lexer and a parser. This is how you’d be taught to do it in a computer science class, but not how everyone does it.

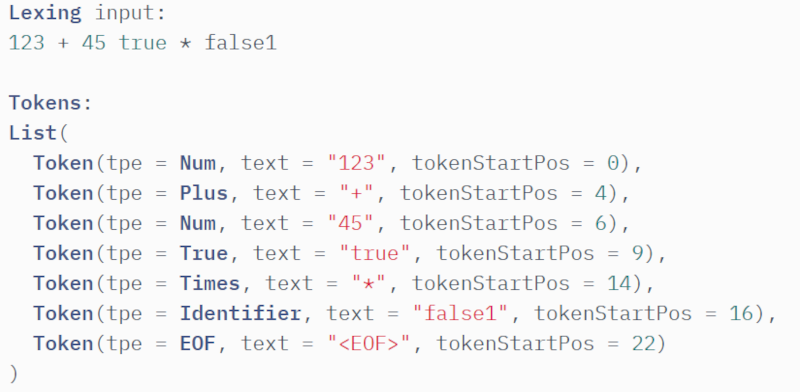

For example, if you wanted to parse commands of HELP, PRINT, and EXIT you could compare each string, but it is nicer to break the input into tokens (lexing) and then examine the tokens for combinations.

Doing it this way lets you identify types of tokens like “floating point number” or “integer” or “operator” and that makes interpreting things like math expressions easier. You can also more easily deal with issues like handling binding so that multiplication, for example, happens before addition.

Tiny computers can benefit from tiny interpreters. Of course, we like Forth, but that’s a different style of interpreter.

Using a lexer and parser is NOT how you are supposed to do it. I know someone who did it that way and made a miserably slow and almost unusable industrial device that way, which nobody could figure out why its performance was so miserable for ten years because of closed source. For an interpreter you need keyword interpretation that is FAST, because it has to happen every time you encounter a new keyword. This is why even the earliest BASIC interpreters pre-compiled keywords to tokens easily identified by ASCII bit 8 being set that could be quickly processed. Lexers and parsers are compiler tools meant to be used when you are building a tool like a compiler compiler that has to handle any language syntax you might ever throw at it, and must be versatile and doesn’t have to be fast because it will only ever see each of those keywords once, before it writes the machine code that becomes the final program.

My fundamental complaint about that device was met wtih bafflement. “What do you want,” the programmer who built it asked, “it’s a 20 MHz 16-bit embedded controller.”

“I want it to be faster than the 4 MHz 8-bit device I learned on in 1980, and it’s not.”

“Well there’s a lot of interrupt overhead and all you know.”

“If there was that much interrupt overhead it wouldn’t work at all. There is something wrong with your interpreter.”

And there was. He had used the lexer from his compiler textbook when he was taught compiler design in college, in the early 1990’s when you were also taught to “never optimize” your code.

Well, for simple things like parsing a few commands or a really well bounded grammar with a handful of tokens, yes you are probably right, but there are many examples of where a lexer and parser end up being a better choice. A formal grammar and a lexer will catch all badly formed tokens, not just the ones you coded for in a bunch of switch/case statements, which quickly become an ad hoc mess as you add more and more corner cases. And yes, I have written lexers and parsers for embedded telecoms systems using the classic tools and in one case my replacement implementation was an order of magnitude faster than the original rats nest of code that was causing problems.

To be honest, I fail to understand why anyone would use a separate lexing pass in 21st century. We have PEGs, we have GLR, we don’t need no lexing any more.

These sort of comments show why a formal grounding in compiler design and implementation is a good idea. If nothing else, it helps you make an informed choice on which approach and/or tools to use.

However, the issue cited by the O/P is the classic “The only tool you need is a hammer” problem.

The problem with such education is exactly its fixation on parsing. Take a look at any compiler curriculum – most likely it’s a copy of the Dragon Book, i.e., neck-deep in the 20th century. Most of the modern tools are yet to reach the undergrad courses. I’ve seen some postdocs in compiler-related research who never heard of PEGs.

“I’ve seen some postdocs in compiler-related research who never heard of PEGs.”

Even if that were true, it’s their fault, not that of the course.

There are far too many idiots who learn one approach and never bothered to look at another for whatever reason. Too lazy, Too complicated, That’s the way professor stodgy did it back in the 60’s, etc.

Lexer/parser (DFA based or otherwise), PEG’s, Combinators, etc. are just tools. Which one you use should depend on the task at hand.

If you’re lexing the program repeatedly during interpretation, you’re doing it wrong and the lexer isn’t at fault. You’re supposed to lex and parse the input once, create an abstract, efficient representation of the program in memory, and use that for interpreting.

+1

What if it’s a command or a protocol language and every statement is executed only once anyway? There’s a lot of cases with such an execution profile, and these languages implementations must be really thin and fast. Parsing takes most of the runtime.

i totally disagree with you, localroger, but i think the comment is a good starting point.

lexing (lexicographical analysis) is just breaking the input sequence into words. lexing almost always involves recognizing keywords, literals, and things like two character operators. like discerning whether +1.0 is an operator followed by a literal, or just a single atomic literal, is typically a lexing problem. lexing doesn’t have to be slow, it doesn’t have to be fast, and it doesn’t have to use a tool like “lex” (though if you do, it is likely to be very fast). you can lex the whole file at once before any other passes, or you can lex as-you-go.

the one thing that matters about lexing is that it is a logically separated part of your compiler/interpretter. i mean, this is just good factorization. if you write “if (curr_token() == TOK_FOR) { … }” you’re in a much better situation than if you write “scanf(“%s”,&tok); if (!strcmp(tok, “for”)) { … } else { ungetstr(tok); }”. even (or especially) if your curr_token() function is that naive! it is just important that your lexer is discernably separate from however you generate the syntax tree.

one of the things i have enjoyed over the years of working on different compilers / interpretters / translators, is that there are a diversity of approaches to these things. your lexer can be driven by a state machine that only consumes one character at a time…or it can be more like a recursive-descent parser, and once it recognizes, say, the start of a literal, it can consume all of the characters at once. and it’s functionally equivalent, and if you’re careful about it, you can get comparable performance either way. the same with syntax trees…most of the time, i prefer to parse to an abstract syntax tree and then generate a lower-level intermediate language as a subsequent pass. but you can do all of those steps interleaved as well, and if you do a good job of factoring then you don’t even have to pay a tax for it.

as for “never optimize”, it’s a complicated question, but i’m on the side against stupid optimizations and for smart optimizations. i care about performance and i often change things from O(n^2) to O(n) or O(n * lg n), which is a huge win. a lot of code winds up being n*(n/1024) where the fact that n is always smaller than 1024 obscures the fact that it’s actually O(n^2), so recognizing that n is big today leads to some obvious opportunities there as well. i also like to eliminate redundant computations, or to refactor a loop to get a kind of strength reduction. sometimes just approaching the thing from a different direction can provide a huge opportunity.

but i think a lot of people look at basically code generator optimizations, like agonizing over the difference between “x%1024” and “x&1023”. these are the kind of optimizations that even old, simple compilers are already pretty good at, and the thing is, it doesn’t change O(…), it just changes a constant multiplier in there, so even if your compiler misses the opportunity, it’s not a big deal. sometimes it’s worth doing that sort of stuff but 99% of the time you can get a better bang for a lesser buck by consciously ignoring these opportunities and focusing somewhere else.

to sum, optimize algorithms, not implementations.

There is one major argument against having a dedicated lexing pass: it will mean your language must have the same tokens everywhere, in all contexts, and it means you cannot mix languages together. And this separation does not really add anything useful, neither in performance nor in code readability. I hope one day everyone will figure it out and stop lexing for good.

first, it’s still lexing even if it is interleaved with parsing. second, if there are markers between token dialects then lexing is perfectly capable of handling this even if the passes are not interleaved.

This defeats the very definition of lexing. If your “lexing” is done by the same set of rules as “parsing”, there’s no more difference between them. And no, it’s stupid to separate languages by special tokens.

Parsing is the least interesting and the least important part of an interpreter or a compiler. It is really sad that till this day most of the tutorials pay the most attention to the least important side of things, I blame the Dragon Book for setting this trend.

Of course you can write pretty decent custom (or existing) languages in Scala. I’m using https://github.com/com-lihaoyi/fastparse as the parser library for a custom transpiler – 10kloc+ and no major pain in the head so far.