There are millions of tiny bugs all around us, in everything from our desktop applications to the appliances in the kitchen. Hidden, arbitrary conditions that cause unintended outputs and behaviors. There are many ways to find these bugs, but one way we don’t hear about very often is finding a bug in your own code, only to realize someone else made the same mistake. For example, [David Buchanan] found a bug in his multi-threaded PNG decoder and realized that the Apple PNG decoder had the same bug.

PNG (Portable Network Graphics) is an image format just like JPEG, WEBP, or TIFF designed to replace GIFs. After a header, the rest of the file is entirely chunks. Each chunk is prepended by a four-letter identifier, with a few chunks being critical chunks. The essential sections are IHDR (the header), IDAT (actual image data), PLTE (the palette information), and IEND (the last chunk in the file). Compression is via the DEFLATE method used in zlib, which is inherently serial. If you’re interested, there’s a convenient poster about the format from a great resource we covered a while back.

Given that DEFLATE is inherently serial, it’s tricky to format the data apropriately. [David] added special sections called pLLD sections (the lowercase first letter means that it can be safely ignored by decoders that don’t support it). These sections let the decoder know that a given IDAT chunk can safely be deserialized concurrently into x pieces. Apple uses a similar trick with its iDOT chunks. However, there is an issue here. It is possible for decompress(a+b) != decompress(a) + decompress(b) if a ends halfway through a non-compressed block. Since the DEFLATE method uses a window, concating two sections together can produce different results.

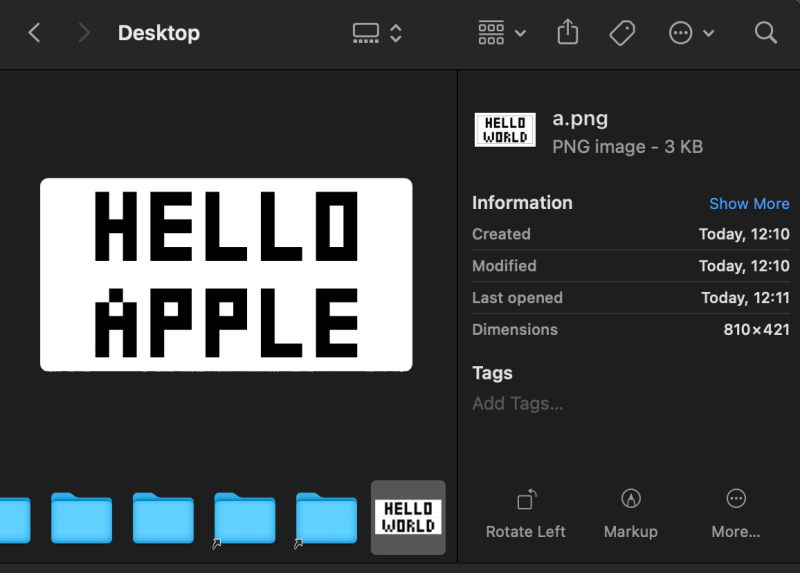

Since there are now two possible interpretations of a given PNG, you can craft a PNG so that when decoded serially, it shows one image, and when decoded in parallel, it shows another. Additionally, [David] found a race condition in desktop safari that results in a slightly different image decoded every time. Here’s the PNG being decoded every frame:

Since there are now two possible interpretations of a given PNG, you can craft a PNG so that when decoded serially, it shows one image, and when decoded in parallel, it shows another. Additionally, [David] found a race condition in desktop safari that results in a slightly different image decoded every time. Here’s the PNG being decoded every frame:

[David] wrote a little tool on GitHub to pack two images into one PNG. It reminds us of the old trick Steganography, where secret data was stashed inside an image.

(Header image courtesy of [PawelDecowski]).

Netscape Navigator’s PNG support had a bug where if transparency wasn’t specified, it would select one color to make transparent and set a non-white background color, usually a ‘cream’ or off-white color. If the PNG was 1-bit it would invert black and white with all the formerly white areas outlined with a 1 pixel wide, white line. But specify transparency, even if it wasn’t used, and the PNGs would render fine.

As usual, the Netscape/Mozilla people let reports and complaints fall on deaf ears. IIRC the bug remained to the final version of Netscape.

I seem to recall windows icons had a similar weirdness.

I think with windows icons it was the preview size being rendered at a different size or something like that.

I ported a game “Die Gemäuer von Kalawaum”, a Rogue-like, from Atari ST to Commodore PET (I was bored).

If your health is below your experience, you will gain 1 health for each step you move (but so do enemies).

Only one enemy can be active at a time.

If you are not at full health and stuck between two enemies that are one step apart, you should be able to get your health back up without them attacking you.

I found a bug in my version of the game, where this never happens. The enemies don’t attack you, but also your health never increases.

I tried to reproduce this situation on the original game and lo and behold, it has the same bug!

i thought the point of the non-standard pLLD/iDOT segment is to assert to the decoder that the blocks *can* be concatenated, that there are no misaligned block breaks or whatever. so this hardly seems like a bug, the bad file goes in and bad graphics get displayed. so long as it doesn’t crash or DoS or whatever, what’s the bug? a bug in this context is when a good file goes in and bad graphics come out.

i’m intrigued by the idea of a parallel decode. honestly, i think it’s a strike against PNG that it serializes the data before compressing it, though i’m sure that i couldn’t come up with better. it really gives me the feeling that they aren’t meant to be decoded in parallel. i do have 9MB PNGs handy that take wall time to render (0.3s on a modernish x86). i would have just expected the opportunity for parallelism to happen somewhere higher level. like if you’re making thumbnails, you’re probably making thumbnails for several images at once. if you’re rendering a webpage, there’s probably a lot of other slow processes going on, etc.

it’s definitely a hack to be able to decode a PNG in parallel!