These days we are blessed with multicore 64-bit monster CPUs that can calculate an entire moon mission’s worth of instructions in the blink of an eye. Once upon a time, though, the state of the art was much less capable; Intel’s first microprocessor, the 4004, was built on a humble 4-bit architecture with limited instructions. [Mark] decided calculating pi on this platform would be a good challenge.

It’s not the easiest thing to do; a 4-bit processor can’t easily store long numbers, and the 4004 doesn’t have any native floating-point capability. AND and XOR instructions aren’t available either, and there are only 10,240 bits of RAM to play with. These limitations guided [Mark]’s choice of algorithm for calculating the only truly round number.

[Mark] chose to use a spigot algorithm from [Stan Wagon] and [Stanley Rabinowitz], also referred to as the “Double-Stan Method.” This algorithm works entirely in integer arithmetic, with integer division as its core operation, which makes it a good fit for the limitations of the 4004 chip.
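For readers who want to see the idea in action, here is a minimal Python sketch of the Rabinowitz-Wagon spigot. It is not [Mark]’s 4004 code; the function name `pi_digits` and the `guard` parameter (extra digits computed and then thrown away, to sidestep edge cases at the end of the run) are assumptions made for this illustration. Note that the inner loop uses nothing but integer multiply, add, divide and remainder.

```python
def pi_digits(n, guard=10):
    """Return the first n decimal digits of pi (starting with 3) as a list of ints.

    Sketch of the Rabinowitz-Wagon spigot. `guard` is an assumption of this
    example: we compute a few extra digits and discard them.
    """
    total = n + guard
    length = 10 * total // 3 + 1      # size of the mixed-radix digit array
    a = [2] * length                  # pi = 2 + 1/3*(2 + 2/5*(2 + 3/7*(2 + ...)))
    out = []
    predigit = 0                      # digit held back until we know it is final
    nines = 0                         # run of 9s held back behind predigit

    for _ in range(total):
        q = 0
        # Multiply the whole array by 10 and renormalise, right to left.
        for i in range(length, 0, -1):
            x = 10 * a[i - 1] + q * i
            a[i - 1] = x % (2 * i - 1)
            q = x // (2 * i - 1)
        a[0] = q % 10
        q //= 10

        if q == 9:
            nines += 1                # might still be bumped by a later carry
        elif q == 10:
            # A carry ripples through: held digit goes up, held 9s become 0s.
            out.append(predigit + 1)
            out.extend([0] * nines)
            predigit, nines = 0, 0
        else:
            out.append(predigit)
            out.extend([9] * nines)
            predigit, nines = q, 0

    # Flush whatever is still held back, then drop the leading placeholder 0.
    out.append(predigit)
    out.extend([9] * nines)
    return out[1:n + 1]


if __name__ == "__main__":
    print("".join(map(str, pi_digits(30))))
```

The digits fall out one at a time, like drips from a spigot, and the working array only ever holds small integers, which is why the method maps well onto a chip with no floating point.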

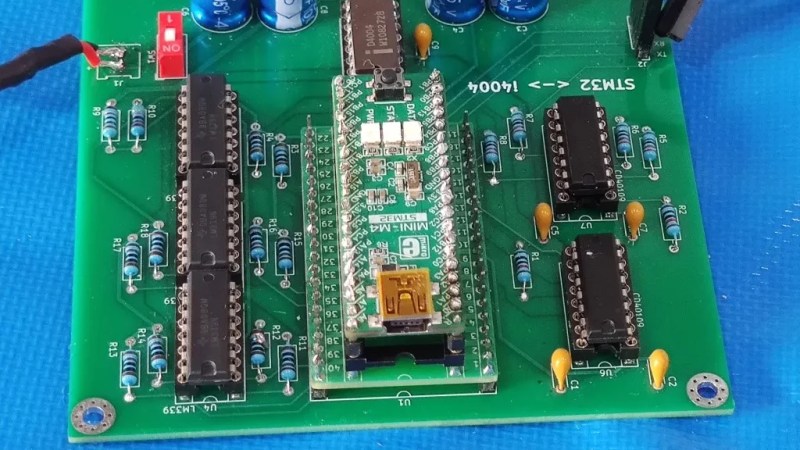

Running on a real 4004 with peripheral hardware simulated inside an STM32, it spent 3 hours, 31 minutes and 13 seconds calculating 255 digits of pi, and correctly, too. By contrast, an experiment [Mark] ran on a first-generation Xeon processor calculated 25 million digits of pi in under a second. Oh, how far we’ve come.

We’ve seen other resource-limited pi calculators before, too. If you’ve been running your own mathematical experiments, don’t hesitate to drop us a line. Just be sure to explain in clear terms if your work is full of the more obscure letters in the Greek alphabet!

I was looking at building some 4004-based educational boards to help my work team understand the operation of a simple computer, but good lord, I didn’t realize how expensive they are at this point. So props to this guy for spending the money and then actually USING the thing.

Did he actually run it on a real 4004, or just on his STM32 emulation of it?

Look carefully at the chip between the big capacitors.

If you RTFA then you’ll see that the STM32 is only pretending to be the 4004’s peripheral chipset. There’s a real 4004 doing the actual work.

“These days we are blessed with multicore 64-bit monster CPUs that can calculate an entire moon mission’s worth of instructions in the blink of an eye.”

True, but it’s not all sunshine and rainbows.

Today’s technology isn’t always superior to 1970s tech. And speed/throughput isn’t everything. That’s why it’s so refreshing to see projects like this. 🙂👍

Btw, here’s an interesting article about 70s tech.

https://www.eejournal.com/article/why-your-computer-is-slower-than-a-1970s-pc/

In the early 1990s I used several 22V10 PLDs in a project I designed for a cellular phone company, programmed with PALASM. The compiler running on a Compaq 286 transportable PC (you’d need a big lap for it to be a laptop) took my entire 2+ hour commute home to run – with a battery change midway. Even my Atom-based netbook completes this task today in around 3 seconds – progress!

https://www.youtube.com/watch?v=pW-SOdj4Kkk

There is far more CPU time being wasted drawing pixels on your screen than is ever going to that compiler.

Please explain that to the kids that try to explain to me that a text terminal is vastly more inefficient than their GPU driven GUIs.

✶Sigh!✶

“64-bit monster CPUs that can calculate an entire moon mission’s worth of instructions in the blink of an eye.”

Is this true? The AGC executed about 83,000 instructions per second. A blink of an eye is possibly 1/10th of a second. Apollo 17’s mission duration was about 12 days. So, that’s 83K × 86,400 × 12 ≈ 86 billion instructions. To get through those in a 0.1-second blink, we’d need a 64-bit CPU running at about 860,000 MIPS. I think this is unlikely.
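The back-of-the-envelope sum above can be checked in a few lines of Python. The constants are the commenter’s own round figures (83,000 instructions per second, 12 days, a 0.1-second blink); the variable names are just for this sketch.

```python
AGC_INSTRUCTIONS_PER_SECOND = 83_000   # commenter's rough figure for the AGC
SECONDS_PER_DAY = 86_400
MISSION_DAYS = 12                      # Apollo 17, rounded
BLINKS_PER_SECOND = 10                 # i.e. one blink = 0.1 s (an assumption)

# Total instructions if the AGC ran flat out for the whole mission.
mission_instructions = AGC_INSTRUCTIONS_PER_SECOND * SECONDS_PER_DAY * MISSION_DAYS

# Rate needed to execute all of them within one blink, in MIPS.
required_mips = mission_instructions * BLINKS_PER_SECOND // 1_000_000

if __name__ == "__main__":
    print(f"{mission_instructions:,} instructions")   # 86,054,400,000
    print(f"{required_mips:,} MIPS needed")           # 860,544
```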

But it is in the classic computing myth of: “Your Casio digital watch has more CPU power than the computers that sent astronauts to the moon” ;-) Which isn’t true, but it keeps alive the idea that everything just gets better and better, even though I’ve just been wrestling with getting a Raspberry Pi to generate a MIDI baud rate, something any UART since the venerable ACIA 6850 from the 1970s could do. Even a Mac Plus or Atari ST could do it out of the box, but it turns out to be beyond the wit of Linux to provide generic baud rate settings, even though Linux appeared a full decade after the MIDI standard was established and has then had another 30 years to incorporate it as a standard.

So, instead we have to fool the UART clock at boot time, so that 38400 baud becomes 31250.
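The arithmetic behind that trick is simple enough to sketch. For a fixed divisor, a UART’s baud rate is proportional to its input clock, so if the driver programs the divisor for 38400 baud while the real clock is scaled by 31250/38400, the wire runs at 31250. The 3 MHz nominal clock below is purely an assumed example value, and `scaled_clock` is a hypothetical helper, not part of any Raspberry Pi tool.

```python
from fractions import Fraction


def scaled_clock(assumed_clock_hz, requested_baud=38_400, wanted_baud=31_250):
    """Clock the UART must really run at so that a driver which believes the
    clock is `assumed_clock_hz` and asks for `requested_baud` actually
    produces `wanted_baud` on the wire."""
    return Fraction(assumed_clock_hz) * wanted_baud / requested_baud


if __name__ == "__main__":
    # 3 MHz is an illustrative nominal clock, not a claim about any board.
    print(float(scaled_clock(3_000_000)))  # 2441406.25
```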

‘Progress’ ;-)

The blink of an eye is apparently about 0.1 to 0.4 seconds.

A Ryzen 5950x has 16 cores at ~4.5GHz, and executes more than one instruction per cycle. Supposing an IPC of 3 (pessimistic?), that comes out to 216 billion instructions per second.

0.4 seconds at 216 billion instructions per second = 86.4 billion instructions. Weird coincidence but okay. Given that my IPC number was a guess and there’s even more going on (hyperthreading, etc.), the 0.1-second eye-blink AGC calculation probably isn’t far off from modern CPU performance.
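Here is the same estimate as a small Python sketch, using the commenter’s guesses (16 cores, roughly 4.5 GHz, an IPC of 3). The helper name `instructions_per_blink` is made up for this example, and real throughput depends heavily on the workload.

```python
CORES = 16
CLOCK_HZ = 4_500_000_000       # ~4.5 GHz, commenter's round figure
IPC = 3                        # instructions per cycle: a guess, as stated above

instructions_per_second = CORES * CLOCK_HZ * IPC


def instructions_per_blink(blink_ms):
    """Instructions executed during a blink lasting `blink_ms` milliseconds."""
    return instructions_per_second * blink_ms // 1000


if __name__ == "__main__":
    print(f"{instructions_per_second:,} per second")      # 216,000,000,000
    print(f"{instructions_per_blink(400):,} in 0.4 s")    # 86,400,000,000
    print(f"{instructions_per_blink(100):,} in 0.1 s")    # 21,600,000,000
```

On these numbers a slow 0.4-second blink covers the roughly 86 billion instructions estimated for the mission, while a quick 0.1-second blink covers about a quarter of them.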

A 2-year-old AMD Threadripper is capable of well over 2 million MIPS. That’s more than double the 860,000 MIPS figure.

Yes, but it’s busy running 100 tasks in parallel with uncertain timing, instead of 1 with certain timing. Some things require absolutely certain timing. Bare metal direct access.

I’m sure QNX can run on a threadripper, because it’s not the CPU that defines timing accuracy. The spec doesn’t say “executes in 3 clock cycles…ish”

Some people blink real slow.

“Computers may be twice as fast as they were in 1973, but your average voter is as drunk and stupid as ever!”

— Richard Nixon’s Head, the year 3000 [A Head in the Polls] 😁

I have always found the 31,250 bit/second data rate for MIDI curious. I am assuming someone wanted to relate MIDI bit rate to the NTSC-D line rate of ~15,734 lines/second, but it isn’t quite twice the line rate. My guess is Roland, Yamaha, Korg or some combination found that was the maximum data rate some late 1970s / early 1980s era processor could handle without flow control being available. I assume MIDI must drive drum machine users crazy because it doesn’t take much time skew for percussion to sound wrong and the MIDI data rate is slow. I haven’t really played around with synthesizers much since the 1990s. I had apparently falsely assumed everything would be USB controlled or some other higher data rate standard would be de rigueur by now.

And then there is MIDI time clock vs. MIDI time code vs. SMPTE time code vs (reality?). And then there is frame drop time correction.

The thing I do love about MIDI is the cables are cheap, rugged and always the same DIN connector pin-out no matter what. Imagine that: a standard that stuck and that did not subdivide.

31250 fits nicely into 1, 2, 4, 8MHz etc.
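A quick Python check of that claim: dividing the common power-of-two megahertz clocks by 31250 leaves no remainder, whereas a “standard” serial rate like 38400 does not divide them evenly. The helper name `clock_divisor` is just for this sketch.

```python
MIDI_BAUD = 31_250


def clock_divisor(clock_hz, baud):
    """Return (divisor, remainder) when deriving `baud` from `clock_hz`."""
    return divmod(clock_hz, baud)


if __name__ == "__main__":
    for mhz in (1, 2, 4, 8):
        divisor, remainder = clock_divisor(mhz * 1_000_000, MIDI_BAUD)
        print(f"{mhz} MHz / {MIDI_BAUD} = {divisor} remainder {remainder}")
    # For comparison, 38400 baud from a 1 MHz clock does not divide cleanly.
    print(clock_divisor(1_000_000, 38_400))
```

The divisors come out as 32, 64, 128 and 256, all powers of two, so a simple binary counter is enough to derive the bit clock.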

Sometimes a metaphor takes a while; early CS folk were geniuses, and the folk that followed them built our modern world, in the blink of an eye – in historical terms.

Ahhh, but the 38400->anybaud “hack” was due to backwards-compatibility with software designed for RS-232; which was *supposed* to allow old and new programs alike to *just work* with anybaud, when using standard OS-supplied functions to access any arbitrary UART chip, on nearly any arbitrary operating-system.

The idea, /I think/ is worthy…

But now the “that’s too hard, we know better” mentality of the kiddos taking over means they have, oft-willingly, forgotten all that… and stopped implementing it and many other standards in their drivers, to such an extent that those decades-old standards and nearly every resource on the matter are no longer relevant.

So now once-great Opensource software-packages (and the slew of once-great documentation/tutorials aimed at many varying learning styles) that’ve been stable and essentially untouched for decades have to be recoded to handle special IOCTLs which now vary more than ever by OS, kernel-version, UART-chip, driver-version, and much more, throwing coders down a huge rabbit-hole of conflicting information, replacing long-stable programs with new ones that barely get the job done, are prone to feature-creep and the associated never-ending supply of bugs and again conflicting documentation, requiring coders to essentially rewrite nearly the entirety of stty into their own programs, and so much more, which is *exactly* what those standards were standardized to prevent.

Consider yourself lucky the RPi folk even bothered to implement it!

And then there is Java..

Pi is exactly 3

π++;

I’m not sure which is more unobtainium: the 4004 or the STM32.

“These days we are blessed with multicore 64-bit monster CPUs that can calculate an entire moon mission’s worth of instructions in the blink of an eye.”

Maybe… but the irony is that if your life depended on it, the 4004 might be the chip you actually want to *take* to the moon.

Modern chips are immensely powerful, but as their feature size gets down to a few hundred atoms across, both the register elements and the EEPROM cells holding the program and configurations become increasingly vulnerable to “single event upsets”, where, say, a high energy particle comes zipping through the chip and leaves a bunch of dead cells and flipped bits in its wake.

The space environment is replete with high energy thingies, and this is apparently a real PIA to engineer around because it’s so damn random. By contrast, the registers in an old 4004 were the size of continents, and the chip was as delicate as a brick (also, roughly the same speed).

Bah! pi is NOT the roundest number! That honor goes to tau, the ratio of a circle’s circumference to its radius.

Although, to be fair, all it takes to get tau from pi in binary is a bit-shift.
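As a small illustration in Python: with pi held as a fixed-point integer, doubling really is a one-bit left shift, and for IEEE floats the equivalent is bumping the exponent by one. The 32 fractional bits below are an arbitrary choice for this sketch.

```python
import math

FRACTION_BITS = 32                                # arbitrary fixed-point precision

pi_fixed = int(math.pi * (1 << FRACTION_BITS))    # pi as a scaled integer
tau_fixed = pi_fixed << 1                         # one left shift doubles it
tau_from_shift = tau_fixed / (1 << FRACTION_BITS)

# For floating point, doubling means adding 1 to the binary exponent.
tau_from_exponent = math.ldexp(math.pi, 1)

if __name__ == "__main__":
    print(tau_from_shift, tau_from_exponent, math.tau)
```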

Just curious – how long would pi to 255 digits take on, say, a 6502 @ 1.79 MHz (Atari 8-bit speed) or a 68000 @ 7.14-8.0 MHz (Atari ST/Amiga)?