[Alyssa Rosenzweig] has been tirelessly working on reverse engineering the GPU built into Apple’s M1 architecture as part of the Asahi Linux effort. If you’re not familiar, that’s the project adding support to the Linux kernel and userspace for the Apple M1 line of products. She has made great progress, and even got primitive rendering working with her own open source code, just over a year ago.

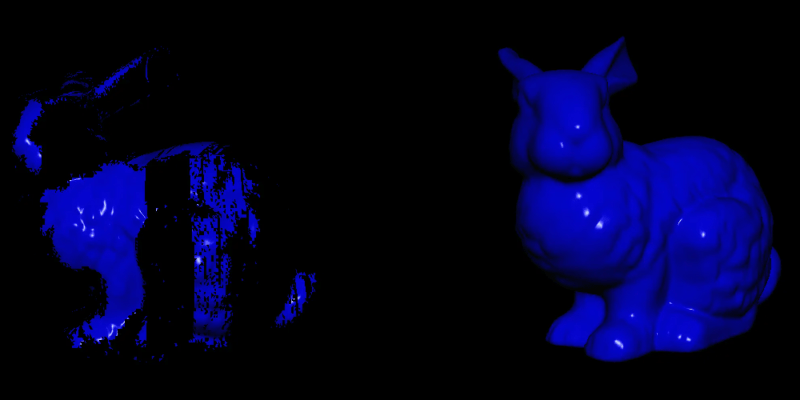

Trying to mature the driver, however, has hit a snag. For complex rendering, something in the GPU breaks, and the frame is simply missing chunks of content. Some clever testing discovered the exact failure trigger — too much total vertex data. Put simply, it’s “the number of vertices (geometry complexity) times amount of data per vertex (‘shading’ complexity).” That… almost sounds like a buffer filling up, but on the GPU itself. This isn’t a buffer that the driver directly interacts with, so all of this sleuthing has to be done blindly. The Apple driver doesn’t have corrupted renders like this, so what’s going on?

[Alyssa] gives up a quick crash-course on GPU design, primarily the difference between desktop GPUs using dedicated memory, and and mobile GPUs with unified memory. The M1 falls into that second category, using a tilebuffer to cache render results while building a frame. That tilebuffer is a fixed size. There’s the overflow that crashes the frame rendering. So how is the driver supposed to handle this? The traditional answer is to just allocate a bigger buffer, but that’s not how the M1 works. Instead, when the buffer reaches full, the GPU triggers a partial render, which eats the data in the buffer. The problem is that the partial render is getting sent to the screen rather than getting properly blended with the rest of the render. Why? Back to capturing the commands used by Apple’s driver.

The driver does something odd, it sets two separate load and store programs. Knowing that the render buffer gets moved around mid-render, this starts to make sense. One function is for a partial render, the other for the final. Omit setting up one of these, and when the GPU needs the missing function, it de-references a null pointer and rendering explodes. So, supply the missing functions, get the configuration just right, and rendering completes correctly. Finally! Victory never tastes so sweet, as when it comes after chasing down a mystifying bug like this.

Need more Asahi Linux in your life? [Hector Martin] did an interview on FLOSS Weekly just this past week, giving us the rundown on the project.

The explanation seems a bit lacking.

It sounds like the M1 is a primitive binning (aka “tiled”) architecture with an on-chip tile buffer. In a “regular” rendering pipeline, each primitive is processed fully in one pass, meaning that the renderer has to be able to access any part of the framebuffer at any time, and while that data can be cached, it usually can’t fit on chip.

The idea with a tiled architecture is that you render in two passes, with the first pass figuring out where the primitives will go on screen, and “binning” them into appropriate primitive buffers as you go, and in the second pass you do the pixel-processing for each tile of the screen, rendering the primitives from the appropriate bin, and having all the framebuffer for that tile stored on chip. When done with that tile, you write the final framebuffer data out to memory and move on to the next tile.

So you have a trade-off: you remove most of the memory traffic for framebuffer accesses, but you now having extra memory traffic for the primitive binning. You can win or lose depending upon which dominates the scene you’re drawing.

Another issue is what’s mentioned in the article: when you have too many primitives, you can run out of memory for binning them. This is because the maximum amount of memory set aside for binning is usually fixed. When this happens, you have to empty out all the bins, render all the tiles, save out all the partial framebuffer data (not just the final data), and then start filling the bins again. When you continue rendering the tiles, you have to read back all the partial framebuffer data from the previous binning pass. If you have a really huge amount of primitives, you might need to do this more than once.

When you overflow the bins, you get a performance hiccup, so developers will usually try to avoid this, assuming they care about performance on the platform in question, and that avoiding it is possible.

Yes, tiled rendering is explained quite well in the original post by [Alyssa Rosenzweig].

To answer the blogger’s question about why different shader programs are needed for storing final results vs. partial results: final results are those bits that are needed for subsequent operations, while partial results include those bits needed for the current operation. To be a bit more concrete: final results might just be the pixel color (which will be subsequently scanned out), while partial results may also include the pixel depth. If the depth is no longer used after this operation completes, there’s no reason to store it out for the final results.

Sounds like a hacky kludge way to do video, instead of having a few gigabytes of dedicated video RAM, like any laptop that costs *that much* should have.

Not really. Mobile has been doing tile-based rendering for years now. Use to have a videologic board that used powerVR to do it’s til-based rendering and it looked pretty darn good for the time.

Tiled rendering is actually a performance win when your primitive count isn’t high but your pixel count is. Sure, there is more complexity, but the same applies to CPU optimizations like out-of-order execution. And if the GPU IP you happen to own and have experience with already does this, it makes sense to use it. You could say it’s a kludge to have a few gigabytes of extra RAM that your CPU can’t access quickly because it’s attached to your GPU.

Not at all. Tile-Based Deferred Renderers (PowerVR, Apple, almost all mobile GPUs) and Immediate Mode (AMD, NVidia, Intel) are just different evolutionary branches of GPU architecture. TBDR’s in-core memory isn’t some weird cost-saving measure, it’s because huge amounts of off-chip bandwidth is (and especially, was) not practical in a mobile device — too much power, too much footprint, too many pins on a chip you’ve got to fit in a phone.

Outside of mobile, where those things aren’t such high hurdles, IMR hardware won out over TBDR after tussling with desktop PowerVR in the 90s (The Dreamcast is PowerVR, even). IMR is conceptually simpler on a hardware level, but it’s forever tied to this need for massive bandwidth.

They each have strengths and weaknesses. If you care less about pins and power, scaling performance monolithically is straight forward on IMR. The interesting question (and the bet Apple is making) is whether TBDR’s power and bandwidth efficiency will allow it to scale further in practice than IMR — you can’t just build a bigger monolith if power and heat are your limiting factors.

In a very real, if unconventional sense, TBDR has already solved the multi-GPU rendering problem. In fact, the M1 Pro, Max, and Ultra effectively have 2, 4, and 8 small GPUs working together; PowerVR’s been doing the same for years. That’s why the M1 Ultra GPU “just works” with it’s bonded dies, but SLI never scaled well and IMR GPUs are only now becoming multi-die.

The trouble right now, for Apple GPU performance on the desktop, is that none of the high-end renderers are actually optimized for TBDR — mobile ports will run and scale great on M1 because they’re built for TBDR already, but renderers built for IMR hardware (all Console ports and PC games/software) fall flat on TBDR hardware — they don’t take advantage of TBDR’s special advantages, and ubiquitous IMR performance patterns are literally performance anti-patterns on TBDR.

TBDR’s challenges on the desktop are much more about the state of software — not hardware failings. Remember also: M1 Max and Ultra already have memory bandwidth to the chip that rivals mid-high-end discrete GPUs, on top of TBDR’s advantages.