It’s hard to watch [Mark Zuckerberg]’s 2018 Congressional testimony and not come to the conclusion that he is, at a minimum, quite a bit different than the average person. Of course, having built a multibillion-dollar company that drastically changed everything about the way people communicate is pretty solid evidence of that, but the footage at least made a fun test case for this AI truth-detecting algorithm.

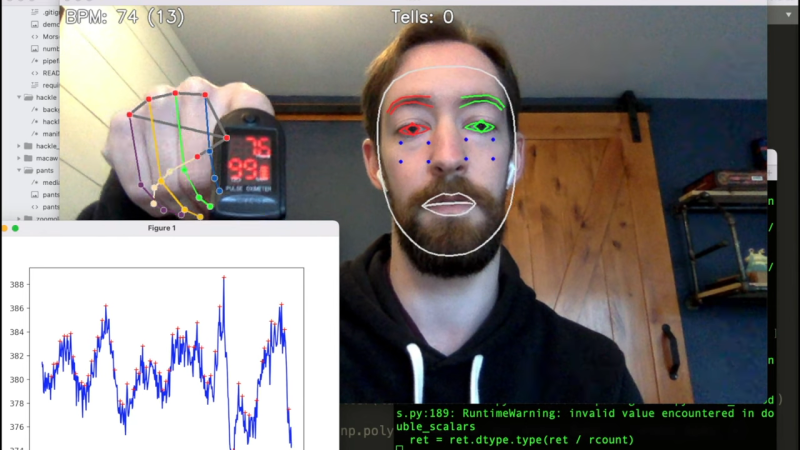

Now, we’re not saying that anyone in these videos was lying, and neither is [Fletcher Heisler]. His algorithm, which analyzes video of a person and uses machine vision to pick up cues that might be associated with the stress of untruthfulness, is far from perfect. But as the first video below shows, it is a lot of fun to see it at work. The idea is to capture data like pulse rate, gaze direction, blink rate, mouth posture, and even hand position and use them as a proxy for lying. The second video, from [Fletcher]’s recent DEFCON talk, has much more detail.

The key to all this is finding human faces in a video — a task that seemed to fail suspiciously frequently when [Zuck] was on camera — using OpenCV and MediaPipe’s Face Mesh. The subject’s pulse is detected by watching for subtle changes in the color of a subject’s cheeks as blood flows through them, which we’ve heard about plenty of times but never before seen presented so clearly and executed so simply. Gaze direction, blinking, and lip compression are fairly easy to detect too. [Fletcher] also threw in the FER library for facial expression recognition, to get an idea of the subject’s mood. Together, these cues form a rough estimate of the subject’s truthiness, which [Fletcher] is quick to point out is just for entertainment purposes and totally shouldn’t be used on your colleagues on the next Zoom call.
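To give a feel for how the cheek-color trick can work, here is a minimal sketch in Python. This is not [Fletcher]'s code. It assumes some earlier step (for example, Face Mesh landmarks plus a crop) has already reduced each video frame to a single number, the average green-channel value of a cheek patch. The function names `estimate_pulse_bpm` and `synthetic_cheek_signal` and the 42 to 240 BPM search band are our own assumptions for illustration. The idea is simply to find the strongest periodic component of that brightness signal within a plausible heart-rate range.

```python
import numpy as np


def estimate_pulse_bpm(green_means, fps, min_bpm=42.0, max_bpm=240.0):
    """Estimate pulse (beats per minute) from per-frame cheek brightness.

    green_means: 1-D sequence, one mean green-channel value per frame
                 (assumed to come from a cheek region found elsewhere).
    fps:         frame rate of the video in frames per second.
    """
    signal = np.asarray(green_means, dtype=float)
    if signal.size < 2:
        raise ValueError("need at least two samples")

    # Remove slow lighting drift with a straight-line fit.
    idx = np.arange(signal.size)
    slope, intercept = np.polyfit(idx, signal, 1)
    signal = signal - (slope * idx + intercept)

    # Taper the ends to reduce spectral leakage, then take the spectrum.
    signal = signal * np.hanning(signal.size)
    spectrum = np.abs(np.fft.rfft(signal))
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fps)

    # Only consider frequencies that could plausibly be a heartbeat.
    band = (freqs >= min_bpm / 60.0) & (freqs <= max_bpm / 60.0)
    if not band.any():
        raise ValueError("recording too short for the requested band")

    peak_hz = freqs[band][np.argmax(spectrum[band])]
    return peak_hz * 60.0


def synthetic_cheek_signal(bpm, fps, seconds, seed=0):
    """Fake cheek signal for testing: pulse ripple + drift + noise."""
    rng = np.random.default_rng(seed)
    t = np.arange(int(fps * seconds)) / fps
    ripple = 0.5 * np.sin(2 * np.pi * (bpm / 60.0) * t)
    drift = 0.02 * t
    noise = rng.normal(0.0, 0.1, t.size)
    return 120.0 + ripple + drift + noise
```

Feeding `synthetic_cheek_signal(72, 30, 10)` into `estimate_pulse_bpm(..., fps=30)` should recover a value close to the 72 BPM that was baked in. Note that the frequency resolution is `fps / number_of_frames`, so a ten-second clip can only resolve the pulse to about 6 BPM. Real footage is far messier than this synthetic signal, with head motion, compression artifacts, and lighting changes all leaking into the same band.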

Does [Fletcher]’s facial mesh look familiar? It should, since we once watched him twitch his way through a coding interview.

very cool. defo worth watching the videos … and no annoying adverts !!!

This is super neat but it’s Yet Another Lie Detector That Doesn’t Detect Lies. This is just a beefed up version of a polygraph with the added bonus of likely being useless when used on people that have “reduced affect display”.

Or it’s going to flag them as liars if the training dataset contains people lying while making poker faces.

Honestly it’s one of the worst possible uses of AI and I don’t understand why it gets such enthusiastic exposure here without any critical thinking.

Hard agree; this is an application of technology where it’s… not actually useful for anything good, and it can be mis-applied easily. Good actors will see the “this is just for fun, doesn’t actually work” and not use it. Bad actors will download the project, apply it to targets of harassment that they want to discredit/accuse/“expose as frauds”, etc. and be happy they have a tool they can just toss videos of their subject into and then have it spit out a bullshit result that plays to their narrative. And because they used “the open-source analysis tool…” instead of something they trumped up themselves, they can pass it off with a degree of credibility to people who have no idea about the limits of the technologies.

Forensics as a field has centuries of history of people tricking lay people with pseudoscientific nonsense passed as incontrovertible proof (“Burn the witch!”). Sadly the practice continues to this day. This is just one more potential tool in that long tradition.

I understand this was done for education and entertainment purposes, but… as mt22 says, exposure without criticality feels pretty irresponsible here. I feel like the project could have achieved the intended effect without releasing its source — an entertaining video whose impact remains in the controlled bounds of context provided by said video. I don’t think there’s anything wrong with experimenting with technologies and building things that don’t work, but technologists (hackers, engineers, anyone working with this stuff) need to grow more mature in our handling of the potential avenues of abuse.

“enthusiastic exposure here without any critical thinking” I believe this is generally done in the comments section …. so ….. get some more critical thinking comments going !! YeeeHaaaa !!

Actively used by various government and private industries in China. Including things like applying for documents, criminal investigations, job applications, schools, etc.

“Reduced affect display”…You know, as someone with Asperger’s, I’ve often been characterized as someone with quite emotionless presence. What’s worse is that when I do display some emotion via facial expressions and/or body language, I often display it in a manner that is different from neurotypical people or might even seem the complete opposite reaction to them.

I tend to somewhat worry about these kinds of articles and tech and how these things will screw me over one day in the future.

“I tend to somewhat worry about these kinds of articles and tech and how these things will screw me over one day in the future.”

Yeah, that’s a likely outcome. Since you don’t fall in “the majority use case”, there is a low likelihood of this threat to you (and people like you) being fixed.

It will be a double whammy if you’ve got a darker skin color, where purely by having dark skin predictive policing systems will flag you as a potential criminal. Then you will be interviewed using a flawed lie detection system that will flag you as lying…

Unfortunately I don’t see many paths to preventing that outcome. dair-institute.org (Timnit Gebru) is working on things like that, but comparing her funding to that of companies building AI systems for law enforcement and military applications, things don’t look that hopeful.

(Hate the term “AI” – but calling them computational statistical data classifiers doesn’t roll off the tongue as easily.)

“Then you will be interviewed using a flawed lie detection system that will flag you as lying…”

That’s what lawyers are for with their questioning of any new technology.

Lawyers tend to be a luxury that only the affluent can afford.

People already screw you over when you don’t behave like they expect, and assume or assign you all kinds of ill intent you never had. You don’t need to have Asperger’s for this to happen; people who have been abused will also act awkward or “weird”, and others will assume they are hiding something or just completely misinterpret them. Unfortunately, that misjudgement often comes with a lot of emotionally expressed righteousness.

I wasn’t trying to imply that one would need a neurological condition like mine or anything like that. I simply already have lots and lots of experience with getting misinterpreted due to non-conformant facial expressions and body language, and I worry it will cause me some real trouble sooner or later through no fault of my own.

The polygraph is and always has been simply a piece of showmanship to break the resistance of a suspect during questioning. It’s a bunch of blinking lights, ticking tape, and twitching needles hooked up to you with wires while a stern, expertly-trained egghead pores over the output, supposedly peering into your very soul. It’s never been about detecting lies.

It’s entirely about wearing down the resistance of an individual during interrogation. It serves the exact same function as the blinding, hot light they keep in your face while they sit on the shadowed side of the room. It’s a psychological game. A very manipulative one.

Dependency hell!

I’d actually like to record myself all day and use this. I started to record my >8 hours of video chat each day. I noticed that when reviewing some videos I was saying things that were wrong, but I don’t know if I realized it at the time or not. Also, I find that people have a hard time remembering a lie. As if our brains try to block the memory.

Stop building pseudoscience polygraph machines. You know how the police will use them: as an intimidation prop and nothing more (this one even has AI! Spooooooky! Now confess!).

You have to know that there is no way in hell that the flimsy evidence behind this thing’s operation would ever be accepted in a court. You’d think people would learn from the immense regret of the designer of the OG polygraph. It’s not a good idea!

Whatever happens, it is still entirely useless for detecting a liar who has come to believe their own lies. It is also pretty useless for detecting somebody who is repeating a lie but had been told it as if it were true (like most hired spokespeople for corporations/governments).

Also, lie detectors are a deeply unethical and concerning technology, and for the reasons above they wouldn’t even be that useful if they could work reliably.

It is funny to read these responses by individuals who didn’t think of this analytic and couldn’t have built it. Everyone is “harshing” on the fact that this is not THE solution and how unethical lie detectors are. People, you are missing the point. As a security industry professional I am happy to see someone pushing deeper into behavior analytics. There is a good chance that someday a behavior analytic is going to save someone you know; or maybe even you. How many airplane types had to be built and tested (and disparaged) before the Wright Brothers made aviation a reality? Heisler states in the video that this is not intended to be released as a product. Also, I am not sure if you know but polygraph interviews are not admissible in court. So all you nay-sayers, go back to bed.