We’ve been following the open, royalty-free RISC-V ISA for a while. At first we read the specs, and then we saw RISC-V cores in microcontrollers, but now there’s a new board that offers enough processing power at a low enough price point to really be interesting as a single board computer. The VisionFive 2 ran a successful Kickstarter back in September 2022, and I’ve finally received a unit with 8 GB of RAM. And it works! The JH7110 won’t outperform a modern desktop, or even a Raspberry Pi 4, but it’s good enough to run a desktop environment, browse the web, and test software.

And that’s sort of a big deal, because the RISC-V architecture is starting to show up in lots of places. The challenge has been getting real hardware that’s powerful enough to run Linux and compile software on, that doesn’t cost an arm and a leg. If ARM is an alternative architecture, then RISC-V is still an experimental one, and that is an issue when trying to use the VF2. That’s a theme we’ll repeat a few times, but the thing to remember here is that getting more devices in the wild is the first step to fixing things.

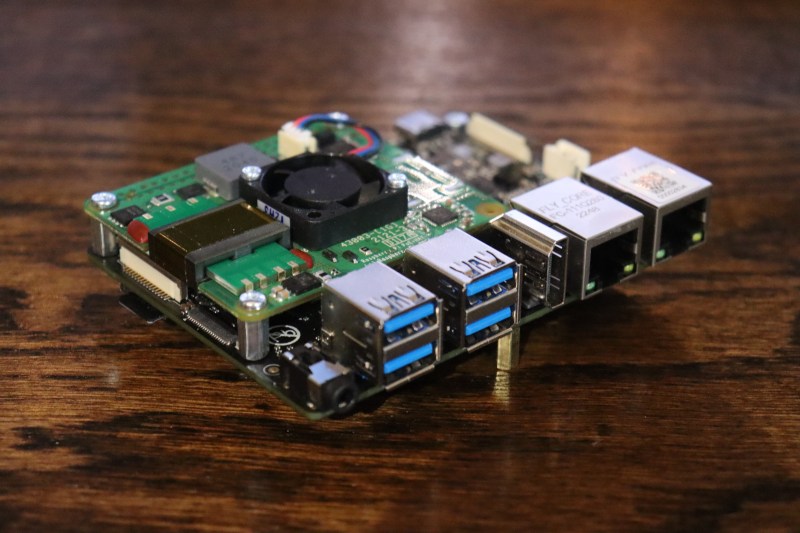

The Hardware

So what do you get? The VF2 comes in three flavors, with two, four, and eight gigabytes of RAM. The boards are otherwise identical, and the star of the show is the JH7110, a 64-bit quad-core RISC-V SoC. Built into that SoC is the Imagination BXE-4-32 GPU. There’s a USB-C port, usually used for powering the board, four USB 3.0 ports sharing a single PCIe 2.0 lane, and dual Gigabit Ethernet ports. The board has only a single HDMI 2.0 port, but is capable of running dual displays by using a MIPI DSI port as well.

There are also some neat Raspberry Pi compatibility features. The board has a 40-pin GPIO header, mostly compatible with the Raspberry Pi pinout, and even has the four-pin Power over Ethernet header in the correct place for using the Pi PoE HATs. That works very nicely, with the only missing element being the fan control on the HAT.

There’s MIPI input, too. That should be compatible with something like the Raspberry Pi cameras, though I don’t have one on hand to test. There’s an SD card slot, an eMMC socket, and a very welcome M.2 NVMe slot on the bottom of the device. So far, booting off the NVMe still requires a boot partition on the SD card, but still results in all the speed boost the single dedicated PCIe 2.0 lane is worth. Direct boot from NVMe is on the roadmap, but not yet implemented.

OS Support

The hardware is reasonably impressive, but the utility hinges on the OS and software support. There’s a Debian image that’s seeing regular updates, with issues continually getting fixed. What we really care about is upstream status, and that process has started. There’s hope for a minimally booting system with kernel 6.3, though there are quite a few drivers to upstream before the system is fully usable with the vanilla kernel.

And one of those drivers we have to mention is the GPU. The hardware is known as BXE-4-32 GPU, a GPU core from Imagination Technologies, and successor to the PowerVR architecture. Imagination is making a play for getting its designs built into RISC-V chips, and as part of that, has released open source drivers for its modern products. There’s an ongoing effort to upstream those drivers, and some enablement code has already landed in Mesa.

There’s also the broader issue of RISC-V support. Most of the modern distros build RISC-V packages, but it’s not uncommon to find problems or failing packages on this less popular architecture. For example, I wanted to benchmark the VF2 board using the Phoronix Test Suite. That is available as a noarch package, but has multiple dependencies, like php-cli. That depends on php8.2, and that package currently fails to build on RISC-V on Debian. There’s a patch available to fix the issue, so I was able to rebuild the .deb on the VF2 and get things working.

So About Those Benchmarks

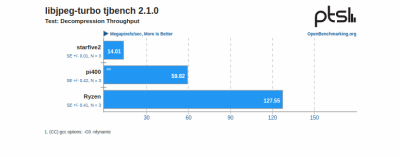

It’s always fun to benchmark shiny new hardware. So imagine my disappointment when nearly every CPU test I tried either failed to install, or failed to run. I suspect this is also down to the newness of the RISC-V platform, as many of the PTS tests just haven’t been built for the platform yet.

For those that did run, it’s not great. Take a look at my results. I suspect the performance may increase as the software becomes more mature, but it’s currently way behind a Raspberry Pi 4. Jeff Geerling has coverage of this board, too, and found that the VF2 is currently performing in the ballpark of a Pi 3 B.

There are some important exceptions to those observations. First, system tests that rely heavily on drive access show a significant advantage for the VF2. The Pi was booted off an NVMe drive via a USB3 adapter, but the native NVMe performance is still significantly better.

And then those two Ethernet ports are particularly interesting. Could this thing be useful as a high performance router? I checked out its performance pushing packets with the Debian install, and it’s capable of almost wire speeds. I ran an iperf3 speed test through the device doing a simple NAT, similar to a standard router install, and it managed to average 755 Mbits per second. Using the bidirectional option, the test managed just over 600 Mbits per second in both directions. Respectable for anything but a full Gigabit Internet connection. There has been work done on bringing OpenWRT to the platform, and that may come with better throughput, but the latest OpenWRT development branch fails to boot on my device.

What’s it good for?

OK, we’ve covered a lot of ground. So what do the brass tacks look like here? The VisionFive 2 has some potential. The dual Gigabit ports and coming OpenWRT support make the $100 device tempting as a router, and PoE support doesn’t hurt. The NVMe drive is another leg up, and there could be a case made for the VF2 as a network storage device.

It’s not powerful enough to be a desktop replacement device, and the lack of dual HDMI ports doesn’t help any. The various distros don’t really have tier-one support for RISC-V yet, either. And oddly, that may be this board’s biggest selling point. Do you do any maintainer or programming work? Have you checked your code on a RISC-V processor yet? That’s the real opportunity here. It’s an affordable platform to test-run RISC-V support.

That process is ongoing, for developers everywhere. And that’s one of the reasons that performance is a bit disappointing. Many applications that need the performance have function multiversioning, a technique that allows for platform specific code that can really improve performance. If a platform doesn’t have a tailored implementation, the program drops back to the slower default code. And given the relative newness of the RISC-V platform, it’s not surprising that performance isn’t in top shape yet.
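To make that fallback behavior concrete, here is a minimal Python sketch of the dispatch pattern. It is only an analogy: real function multiversioning is typically handled by the compiler and the dynamic loader for C or C++ code, and every name below (`sum_squares_generic`, `TUNED_VERSIONS`, `select_version`) is made up for illustration. The "tuned" variants are stand-ins that compute the same result; in a real library they would use platform-specific instructions.

```python
import platform


def sum_squares_generic(values):
    """Portable fallback: works everywhere, uses nothing platform-specific."""
    total = 0
    for v in values:
        total += v * v
    return total


def sum_squares_x86_64(values):
    """Stand-in for a hand-tuned x86-64 variant (hypothetical, same result)."""
    return sum(v * v for v in values)


def sum_squares_aarch64(values):
    """Stand-in for a hand-tuned 64-bit ARM variant (hypothetical, same result)."""
    return sum(v * v for v in values)


# Hypothetical table of tailored implementations, keyed by machine name.
# Note that there is no "riscv64" entry, which is the situation described above.
TUNED_VERSIONS = {
    "x86_64": sum_squares_x86_64,
    "aarch64": sum_squares_aarch64,
}


def select_version(machine):
    """Pick a tuned variant if one exists, otherwise drop back to the default."""
    return TUNED_VERSIONS.get(machine, sum_squares_generic)


# Resolve once at import time, then call through the chosen function.
sum_squares = select_version(platform.machine())
print(sum_squares.__name__, sum_squares([1, 2, 3]))
```

On a platform with no entry in the table, `select_version` quietly hands back the generic version, so the program still works but runs the slow path. Closing the gap means someone has to write and contribute the missing variant.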

But 2023 might just be the year of the RISC-V SBC. The VisionFive 2 is available, and the folks at Pine64 are planning a new board based on the same JH7110 CPU. There’s the upcoming HiFive Pro board, or the Ventana Veyron CPU. So maybe it’s time to dive in, and give RISC-V a spin.

Early Transputer. Interesting device with so-so performance.

Mine’s bricked. I was able to get it to boot once a month or two ago, but not anymore. I’ve tried every image multiple times. I’m pissed I wasted my money.

Hi Mitch, you can send your order info and the issue description to the support team support@starfivetech.com

“For those that did run, it’s not great. Take a look at my results. I suspect the performance may increase as the software becomes more mature.”

Not unless that CPU has non-standard instructions, I’d guess: I’d bet a lot of that difference is just coming from the boost from NEON/SSE SIMD instructions. RV64GC doesn’t have that – you’d need the vector processing set to get anything comparable.

The above benchmark is probably a great example: the SoC has dedicated hardware (CODAJ12V), licensed from Chips&Media ( https://www.chipsnmedia.com/others ), to maximise JPEG decoding speed. Comparing its ability to decode using software only is not really a fair benchmark. It would be a similar drop in performance if MMX/SSE/AVX/AVX-512 instructions on x64 were not used.

“Comparing its ability to decode using software only is not really a fair benchmark.”

I… kinda agree? But the problem with having an on-chip accelerator for something like that is that it’s *just* for that, whereas of course SIMD stuff generalizes to anything. I mean, if you’re talking about benchmarking CPUs specifically, I don’t think it’s really “unfair” to compare it – there are always going to be inherent limitations to a hardware encoder/decoder.

RISC-V’s lack of vector instructions in existing CPUs is part of the reason I’m just not that interested in it right now, outside of softcore implementations.

The SiFive/Intel Horse Creek will have vector support and the next JH8100 will have vector, but if it comes with Intel ME, I’ll give it a miss.

To be clear, I’ll give the Intel chip a miss if they try and force ME into RISC-V. The JH8100 (when officially announced in about 3 months) will depend on its price and benchmarks vs the SiFive P550, and on whether it supports PCIe3. It is also a China home-brewed chip which …. yea lots of thinking.

And I’m guessing it will be a year to a year and a half before the JH8100 is in a board you can hold in your hand, which will be another factor.

The RISC-V vector instructions are very different than SIMD instructions, though, so it’ll be interesting to see both how quickly compilers can handle them well, and how quickly companies can produce bug-free implementations of them.

The compiler portion isn’t *that* different so that’ll probably be quick, but I wouldn’t be surprised if gotchas show up in the first few RISC-V vector implementations.

@Pat there have already been implementations and they were before the extension was ratified (2.0 or above is ratified), so they are in a no man’s land, only compilation tools that will support them, because they are incompatible at the instruction and binary level, are modified point in time tools supplied by the vendor.

Allwinner D1 0.7.1 Vector spec

Bouffalo Labs BL808 is also 0.7.1 Vector spec

T-Head TH1520 SoC (should out perform the RPi4) but is also 0.7.1 Vector spec

“only compilation tools that will support them, because they are incompatible at the instruction and binary level, are modified point in time tools supplied by the vendor.”

Yes. This is literally what I’m saying. Because the vector extensions are more complicated at the hardware level than SIMD-type processing like SSE/AVX/NEON/etc. and there’s not a long history of compiler support to lean on, I wouldn’t be surprised if the early silicon ended up having issues once it gets more widely used.

SIMD kind of started the other way around, with MMX being an extremely trivial implementation, and the limited usefulness with data types meant software support came slowly. Essentially, the instruction set evolved to fit the compiler.

NEON didn’t really have to go through that curve because the common use cases map almost perfectly to SSE, with the exception of the fact that they just *had* to swap the element ordering.

The RISC-V vector instructions are trying to abstract a bit away from the capabilities of the hardware, but that doesn’t mean that the hardware itself won’t have to evolve to fit the common use cases.

@Pat The ratified Vector extension (2.0) has initial support from most of the tools that need to support it. And I guess further compiler optimisations may be possible. But I suspect that with the extensive validation and verification tools that exist, there should be no hardware that ships with flaws for any of the standard ratified extensions.

“@Pat The ratified Vector extension (2.0)”

The 2.0 vector extension hasn’t been ratified yet…?

“But I suspect that with the extensive validation and verification tools that exist, there should be no hardware that ships with flaws”

We might have different ideas as to what “flaw” or “gotcha” means. I don’t mean non-working, I mean less useful than originally expected or with side-effects not originally expected. I mean, some chip vendor could produce a chip with huge vector capabilities but which end up adding severe performance penalties in other areas. Again, the vector extensions in RISC-V aren’t like SSE/AVX/NEON/etc., they’re more abstracted away from the implementation. You technically could have so limited an implementation that it could *work* but wouldn’t be any faster.

My guess would be that after a few years most general purpose chips will settle on a ‘standard’ ish set of resources and compilers will tend to lean on that, with more special-purpose stuff spinning off on the side. I mean, that’s kindof the way the entire ecosystem is supposed to work.

It’s both the beauty and the curse of the vector extensions. They’re designed to be really flexible and powerful, but it also means that it’s going to take time for chip designers to find the right complexity tradeoff in the implementation.
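A toy model of that point, in Python rather than real RVV code: the same vector-length-agnostic loop gives identical results on a wide or a narrow implementation, and only the number of passes changes. This is a simplified sketch of the strip-mining idea, assuming the hardware grants roughly `min(remaining, vlmax)` elements per pass; `vector_add` and `vlmax` are hypothetical names, not anything from the spec or a toolchain.

```python
def vector_add(a, b, vlmax):
    """Add two equal-length lists the way a vector-length-agnostic loop would.

    vlmax is a hypothetical stand-in for how many elements the hardware can
    process per vector instruction. Returns (result, number_of_passes).
    """
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    if vlmax < 1:
        raise ValueError("vlmax must be at least 1")

    result = []
    passes = 0
    i = 0
    n = len(a)
    while i < n:
        # Ask for the remaining elements; the "hardware" grants at most vlmax.
        vl = min(vlmax, n - i)
        result.extend(x + y for x, y in zip(a[i:i + vl], b[i:i + vl]))
        i += vl
        passes += 1
    return result, passes


a = list(range(10))
b = [10] * 10

wide_result, wide_passes = vector_add(a, b, vlmax=8)      # generous implementation
narrow_result, narrow_passes = vector_add(a, b, vlmax=1)  # minimal implementation

print(wide_result == narrow_result)  # True: same binary, same answer
print(wide_passes, narrow_passes)    # 2 10
```

With `vlmax=1` the loop is correct but does one element per pass, which is the "works but isn't any faster" case: binary compatible, with no speedup over scalar code.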

A fun benchmark would be:

RPi 4B H.265 software encode vs VF2 H.265 hardware encode

or

RPi 4B H.264 hardware encode vs VF2 H.264 software encode

RPi 4B supports: H.264 decode+encode, and H.265 decode

VF2 supports: H.264 decode, and H.265 decode+encode

Is this actually working on the VF2 though?

RISC architecture is gonna change everything.

Yeah. RISC is good.

ARM is RISC. RISC-V is RISC

RISC is not RISC-V.

Whooooooosh.

If you define RISC as a strict load-store architecture, ARM is not a RISC.

Check it out!… Crash… and Burn!

Most of the provided images do not work for me, just the minimal image 69.

No idea why but frustrating!

You have to update the pre-installed uboot for most recent image to boot properly. It is fairly easy using a simple FT232-TTL USB cable and it is well documented:

You can find the details in the StarFive QSG document on page 35, “4.4. Recovering the Bootloader”

https://doc-en.rvspace.org/VisionFive2/PDF/VisionFive2_QSG.pdf

And the needed binaries are there:

The “recovery” tool is there: https://github.com/starfive-tech/Tools/tree/master/recovery

And both uboot & “fw-payload” ready to use files can be found here: https://github.com/starfive-tech/VisionFive2/releases/tag/VF2_v2.10.4

Did you update the firmware?

https://forum.rvspace.org/t/a-definitive-guide-to-getting-a-visionfive2-board-running-on-a-debian-69-version/1468/11

Or did you try the latest image 202302 and set the boot switches?

https://doc-en.rvspace.org/VisionFive2/Boot_UG/VisionFive2_SDK_QSG/boot_mode_settings.html

And just in case, don’t use a 4K monitor, but just 1080p.

How is the power efficiency of this board? I keep wanting to set up my own GlusterFS system at home, so all I want is SATA, GigE, 8 GB of RAM and a serial console. I don’t need anything else like Bluetooth, graphics, etc.

Jeff Geerling’s GitHub issue ( https://github.com/geerlingguy/sbc-reviews/issues/10 ) covering this board lists it at basically similar to a Pi 4, probably a bit higher (5.3W max, 3.1W idle). Although I don’t know if both GigE ports were in use there – GigE when running properly at gigabit is a significant power draw (typically a third to a half a watt). The transceivers they use do have energy-efficient ethernet (EEE) support, but who knows if it’s actually in use.

Overall though it’s not much different than other existing SBCs.
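For an always-on box like a home storage node, those wattage figures are easier to judge as energy per year. A quick back-of-the-envelope calculation, assuming the board sits at either the idle or the max figure around the clock (a real workload would land somewhere in between, and drives are not included):

```python
HOURS_PER_YEAR = 24 * 365  # ignoring leap years


def annual_kwh(watts):
    """Energy used in a year by a device drawing a constant number of watts."""
    return watts * HOURS_PER_YEAR / 1000


idle_kwh = annual_kwh(3.1)  # idle figure quoted above
max_kwh = annual_kwh(5.3)   # max figure quoted above

print(f"idle: {idle_kwh:.1f} kWh/year, max: {max_kwh:.1f} kWh/year")
# prints "idle: 27.2 kWh/year, max: 46.4 kWh/year"
```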

There is OpenWRT WIP port here:

https://git.openwrt.org/?p=openwrt/staging/wigyori.git;a=shortlog;h=refs/heads/riscv-visionfive-202301

Links to everything but the actual product :/

https://www.starfivetech.com/en/site/boards

Try here: https://forum.rvspace.org/t/visionfive-2-faq-quick-links/1376

Also Armbian https://www.armbian.com/visionfive2/

Some time ago I saw a review of an older version (with JH7100) from “Explaining computers”. BlaBla://youtube.com/watch?v=4PoWAsBOsFs

@12:30 He does a very simple test with Gimp / Filters / Render / Lava with the results @13:40 RiscV took 71 seconds, Raspi 4 did it in 47s and my own AMD 5600G did it in about 3s. And it also ran on just one core. These things are never designed to be speed monsters, and I also paid around EUR600 for my 5600G (Which was still quite close after the Corona price peak)

What I’m a bit disappointed in is that these little single board PCs are all the same. I’d like to see a version with 4 to 8 SATA ports to make a nice NAS, but most don’t even have a single SATA connector. The Odroid-HC4 with 2 SATA ports is already a rare find. For more SATA connectors you have to go to the much bigger mainstream boards with, for example, an Intel J41xx processor, but I am still boycotting Intel because of their anti-competitive behavior.

I’d like to find more that actually have proper temperature specs! The VisionFive guys just list “recommended” temperature range (seriously?) and it’s only 0 to 50 C, which… c’mon.

Some of the olimex boards are available in an industrial temperature range for very modest extra costs. And indeed, extended temperature range is another way for manufacturers to stand out in the crowd.

IIRC the JH7100 had some fairly significant hardware bugs that required costly software workarounds. The JH7110 was supposed to fix those bugs, so you could see some significant improvements over the old version. It’ll still only be maybe slightly faster than a Raspberry Pi 3, that’s just the limits of the SiFive U74 core they’re using.

If the chip includes a memory controller, why couldn’t they use a normal SODIMM connector on the board so that people could fit memory sticks of the size they need?

Would also simplify upgrades..

Also, a “chipset” of some kind, with sata controller/usb controllers/other things would also be nice to have

The thing about allowing all SODIMM memory chips is that you need to buy and test them all and add extra quirk parameters to your second stage loader (the bootloader that configures and enables LPDDR/DDR) to squeeze the maximum performance out of each make and model for your memory controller. And instead of using cheap solder you need to use expensive gold edge connectors with enough of a gold coating to allow at least a minimum of five insert remove cycles. All that adds cost (and delays initial product shipping) that will always be passed on as a more expensive board to buy. The StarFive JH7110 can at most support 16 GiB of RAM and already sold 2/4/8GiB boards. The other thing is that you have also added a new potential point of failure when trying to trouble shoot boards. I’ve seen non technical people try and insert SODIMM chips, and it is truly scary. One person (in the 90’s) was looking for a hammer to tap them home just to make sure that it was firmly wedged in (double face palm)!

The other thing is that it would mean a change from LPDDR (low power) to DDR. LPDDR needs to be soldered directly to the board, which also has the advantage of physically taking up less space on the PCB. This means smaller, cheaper, lower power boards. 8-12 layer PCBs are not cheap, so reducing the physical size lowers the price. And every ¥/€/$/£ that the price can be lowered means more product shipped.

It’s way more size/routing than cost/compatibility/reliability. The connectors just don’t cost anywhere near the chip cost, and if compatibility was a problem you’d just say “use this.”

LPDDR4 is a lower pin-count interface than a standard 72-bit DDR4 DIMM by a huge amount, so routing is a massive reason.

I know there were attempts to create a 32-bit SO-DIMM but I don’t think that caught on anywhere.

I’d love to see a performance-per-watt comparison. Let’s face it, if you need power you don’t go with this type of SBC.

@Pat I get what you mean now, under the ISA in the silicon it could be implemented any way and still be 100% binary compatible, but slow.

Ah yeah that reminded me: I ordered one of those last year and have had it sitting around ever since it showed up (I forgot when exactly). So I got it up and running with the February Debian image over the weekend (not trivial, I needed the usb serial and tftp to get it going, but it didn’t take too long), moved rootfs onto an NVMe easily enough(!) and left it building Qt dev branch overnight. With a small passive heatsink, CPU temperature got up around 65C while keeping all 4 cores busy. After running overnight it still took most of this morning to finally finish building the few modules that I tried, around 13k build steps with ninja (but I’m not even going to try to build qtwebengine). (Although if I had more than one of these, I’d use icecc.) Qt Quick doesn’t render anything, not even with the software renderer so far; and GPU support is known to be WIP. glxgears doesn’t render either. Painting in the working X11 apps is quite noticeably slow (don’t scroll the terminal too fast, for example). I will be glad to see that GPU supported ASAP, and glad to hear Imagination is helping too. It’s also the first time I’ve seen a Linux machine that can run X11 but has no video console. But having a real SSD is so awesome. And I will also look forward to having those vector instructions in the next-gen chip. I verified with https://github.com/gsauthof/riscv that programs which attempt to use those instructions cannot even be assembled.
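A lighter-weight check than trying to assemble vector code is to look at the `isa` line that Linux prints in `/proc/cpuinfo` on RISC-V. The sketch below parses that string and looks for the single-letter `v` extension. The function names are made up for illustration, the sample text in the usage lines is hypothetical rather than captured from a VF2, and the parsing is deliberately simplified: it stops at the first `_`, `z`, `s`, or `x`, where multi-letter extension names begin.

```python
def single_letter_extensions(isa):
    """Return the single-letter extensions from an ISA string like 'rv64imafdc'."""
    isa = isa.strip().lower()
    if not isa.startswith(("rv32", "rv64")):
        raise ValueError(f"not a RISC-V ISA string: {isa!r}")
    letters = set()
    for ch in isa[4:]:
        # Multi-letter extensions (Z*, S*, X*) and '_' separators end the
        # run of single-letter extensions.
        if ch in "_zsx":
            break
        letters.add(ch)
    return letters


def has_vector(cpuinfo_text):
    """True if the first 'isa' line in cpuinfo-style text lists the 'v' extension."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "isa":
            return "v" in single_letter_extensions(value)
    return False


# Hypothetical sample resembling one hart's entry on a core without vector support.
sample = "processor\t: 0\nhart\t: 1\nisa\t: rv64imafdc\nmmu\t: sv39\n"
print(has_vector(sample))  # False
```

On a real board the text would come from `open("/proc/cpuinfo").read()`. A `v` in that string only reflects what the kernel reports; it says nothing about which version of the vector spec the silicon implements.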

I have the 8 GB VisionFive 2. Is there a link to the proper way to get the latest Debian image to boot?

It kept throwing errors about the WiFi dongle they sent, and X never finished starting; I just got the arrow cursor.

The visionfive (the first one) ran riscv assembler code nicely. I think a use case for these is for education and assembler programming. Being able to program in riscv assembler should lead to nice performance for certain tasks.

They’re quad-core 1.5 GHz CPUs that are comparable to e-cores (or in ARM language: A50-cores, not performance cores like the A70 cores).

As such they work well for low power applications, where you want a certain amount of work done below a certain tdp threshold.

For such cores, especially at 1.5 GHz, it doesn’t make sense trying to compare it to much more power hungry CPUs. If that’s what you want to do, you may want to compare a similar RISC-V chip with 80 cores at 3 GHz (like an altera) to an x64 architecture with 12 cores 24 threads at 4 GHz (like a ryzen 2950/3950x).

At least, both of them operate below 200W TDP, and should give comparable performance outcomes, the riscV chip being more dependent on fast ram for faster performance, as it lacks the larger L-cache of x86/64 cpus.

So true

The original article says:

“The board has a 40-pin GPIO header, mostly compatible with the Raspberry Pi pinout”

Can someone explain what the differences are that make it “mostly compatible”? I’d be particularly interested in hats that work or don’t work, and why (if you know).

There were some differences in the GPIO pinout, but recently StarFive has added more software updates to resolve the differences.

The following page shows the pinout of VisionFive 2: https://doc-en.rvspace.org/VisionFive2/40-Pin_GPIO_Header_UG/VisionFive2_40pin_UG/gpio_pinout%20-%20vf2.html

By installing the VisionFive.gpio as mentioned in the following instruction, the GPIO of VisionFive 2 should be fully compatible with RPi demonstrations and applications.

https://doc-en.rvspace.org/VisionFive2/AN_RPi_GPIO/VisionFive2_ApplicationNotes/Shared_preparing_software%20-%20vf2.html