In early March of 2023, a paper was published in Nature in which the researchers claimed to have observed superconductivity at room temperature and near-ambient pressure in a conductive alloy. Normally this would be cause for excitement, but what mars the occasion is that this is not the first time these same researchers have made such claims. Last year their previous Nature paper on the topic was retracted after other researchers raised numerous issues with their data, and with the interpretation of that data which had led them to conclude that they had observed superconductivity.

According to an interview with Ranga Dias, one of the lead authors at the University of Rochester, the retracted paper has since been revised to incorporate the feedback it received, with the research team purportedly having invited colleagues to vet their data and experimental setup. Notably, the newly released paper reports an improvement over the previous results, as it requires even lower pressures.

Depending on one’s perspective, this may seem either incredibly suspicious or merely a sign that the scientific peer review system is working as it should. For the layperson, however, it makes it rather hard to answer the simple question of whether room-temperature superconductors are right around the corner. What does this effectively mean?

Cold Fusion

What room-temperature superconducting materials and cold fusion have in common is that both promise a transformative technology that would alter the very fabric of society. From near-infinite, cheap energy to zero-loss transmission lines and plummeting costs for MRI scanners and every other device that relies on superconductivity, either technology by itself would cause a revolution. At the same time, as with any such revolutionary technology, it would also stand to make a select number of people very wealthy.

At this point in time, the term ‘cold fusion’ has become synonymous with ‘snake oil’, with researchers finding themselves unable to replicate the results of the original 1989 experiment by Martin Fleischmann and Stanley Pons. This experiment involved loading a palladium electrode with deuterons (deuterium nuclei) from the surrounding heavy water (D2O), before running a current through the electrodes.

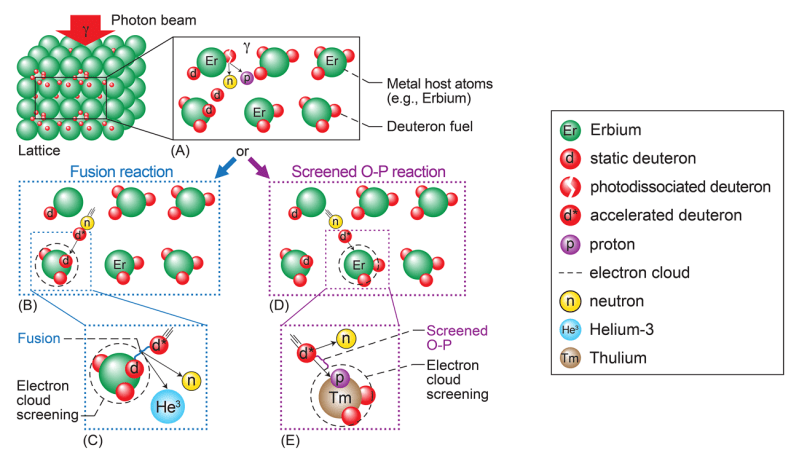

After the media backlash over the failure to replicate these results poisoned the very subject of ‘cold fusion’, its researchers have kept a low profile, referring to the research area as ‘low energy nuclear reactions’ (LENR) and generally shying away from the public eye. What is tragic about LENR is that at its core it is essentially lattice confinement fusion (LCF), which NASA researchers at Glenn Research Center recently demonstrated in a study using erbium to provide the metal lattice.

The NASA LCF experimental setup involves loading the metal with deuterons, much as in the Fleischmann-Pons experiment. The advantage of LCF over plasma-based fusion approaches, such as those pursued in tokamaks, is that inside the host metal lattice the deuterons sit closer together than the nuclei in a deuterium/tritium (D-T) fuel plasma. In theory, this makes it easier to overcome the Coulomb barrier and initiate fusion, without requiring high pressures or temperatures.

To initiate D-D fusion, the NASA researchers irradiated the deuterons with gamma beams of 2.9+ MeV, and confirmed that fusion had in fact taken place. This fusion trigger appears to be the biggest difference between the Fleischmann-Pons and NASA experiments, as the former purportedly used the electric current to initiate fusion. As with all experimental setups, contamination and environmental factors that were not accounted for may have confounded the original researchers.

In the world of scientific inquiry this is an important aspect to keep in mind, as further illustrated by the wild ride that was the hype around the EmDrive. This was supposed to be a fuel-less, microwave-based thruster that worked by physics-defying means. Ultimately, the thrust measured in the original experiment was refuted once all environmental influences were accounted for, consigning the concept to the dustbin of history.

An important aspect to consider here is that of intent. Many major discoveries in science began with someone looking at some data, or even a dirty Petri dish, and thinking something along the lines of “Wait, that’s funny…”. No one should feel discouraged from throwing wild ideas out there just because they may turn out to be misinterpreted data or a faulty sensor.

Superconductivity

Although superconducting materials have been in use for decades, their main issue is that they tend to require very low temperatures to remain in their superconducting state. So-called ‘high-temperature’ superconducting materials are notable for working at temperatures comfortably above absolute zero. The record holder at atmospheric pressure is the cuprate superconductor mercury barium calcium copper oxide (HBCCO), with a critical temperature of only 133 K, or -140 °C.

While superconductivity is still not fully understood, we have observed that increasing the pressure can drastically raise the critical temperature, bringing it closer to room temperature. This discovery came along with an increased focus on hydrides, following the 1935 prediction of a ‘metallic phase’ of hydrogen by Eugene Wigner and Hillard Bell Huntington. Combining hydrogen with a material such as sulfur significantly reduces the roughly 400 GPa (~3.9 million atmospheres) required to create metallic hydrogen. Even more tantalizing is that metallic hydrogen is theorized to be an excellent superconductor.

Metallic hydrogen was first produced experimentally in 1996 at Lawrence Livermore National Laboratory using shockwave compression. Claims of solid metallic hydrogen followed, with many researchers reporting that they had produced it, though some of these results have been disputed. In 2017, rather than using the traditional diamond anvil cell (DAC) to put hydrogen under pressure, researchers used the extremely strong magnetic fields of the Sandia Z Machine to produce metallic hydrogen.

Controversy

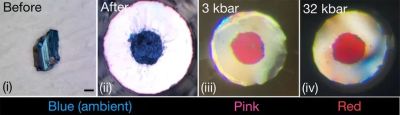

Ranga Dias and colleagues have also published a number of papers on metallic hydrogen, including one claiming its production in a DAC at 495 GPa, which was met with skepticism. This work runs alongside their research into hydride alloys, with carbonaceous sulfur hydride (CSH) being the subject of the retracted 2020 paper. Under 267 GPa of pressure in a DAC, this material was purportedly superconducting at a balmy 15 °C, which would put it firmly in the realm of room-temperature superconductors, provided the pressure could be maintained.

The key to any claim of superconductivity lies in measuring it. With the speck of material trapped inside the DAC, this is not as simple as hooking up some wires and running a current through the material, and even that would not be sufficient evidence. Instead, the gold standard for demonstrating superconductivity is the expulsion of an applied magnetic field when the material enters the superconducting phase (the Meissner effect), yet this too is hard to measure with the material under test inside the DAC.

This property is therefore inferred via the magnetic susceptibility, which requires that the magnetic background from the environment be subtracted from the weak signal one is trying to measure. As James Hamlin puts it, this is like trying to see a star when the sun is drowning out your sensors. One factor that led to the original Dias paper being retracted was the lack of raw data supplied with the article. This made it impossible for colleagues to check the team’s methodology and verify their results, and ultimately led to the do-over and this year’s paper.
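To see why access to the raw data matters, consider a toy example of background subtraction. This is not the procedure used in the Dias papers; it is a hypothetical sketch with synthetic, noise-free data, assuming a linear instrument background and a sharp step-like transition at a made-up Tc of 250 K. The helper names `subtract_background` and `apparent_drop` are illustrative only.

```python
import numpy as np


def subtract_background(temps, signal, fit_mask, degree=1):
    """Fit a polynomial background to the points selected by fit_mask
    and subtract it from the entire curve."""
    coeffs = np.polyfit(temps[fit_mask], signal[fit_mask], degree)
    return signal - np.polyval(coeffs, temps)


def apparent_drop(temps, corrected, tc):
    """Size of the step at tc: mean signal above tc minus mean signal below it."""
    return corrected[temps >= tc].mean() - corrected[temps < tc].mean()


# Synthetic, noise-free data (all numbers are hypothetical):
temps = np.linspace(150.0, 300.0, 301)          # temperature grid in K, 0.5 K steps
TC = 250.0                                      # made-up transition temperature
background = 10.0 + 0.05 * temps                # large, sloping instrument background
transition = np.where(temps < TC, -1.0, 0.0)    # small diamagnetic drop below TC
raw = background + transition

# Choice A: fit the background only on points well above the transition.
good = subtract_background(temps, raw, temps > TC + 10.0)
# Choice B: fit the background over the whole range, transition included.
bad = subtract_background(temps, raw, np.ones_like(temps, dtype=bool))

print(f"drop with fit window above Tc: {apparent_drop(temps, good, TC):.3f}")
print(f"drop with fit over all points: {apparent_drop(temps, bad, TC):.3f}")
```

With the fit window above the transition, the full step is recovered; fitting the background over the whole range absorbs much of the step into the "background" and shrinks it to roughly a third. From a processed curve alone, a reader cannot tell which kind of choice was made, which is why colleagues ask for the raw curve.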

Face Value

What is perhaps most interesting about this 2023 paper is that it does not merely repeat the same claims about CSH, but instead focuses on a different material, namely N-doped lutetium hydride. The claimed upper temperature limit for this material is 20.6 °C at a pressure of a mere 1 GPa (10 kbar, ~9,900 atmospheres).
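For reference, the pressures mentioned in this article can be converted between units with a few lines of Python. This is a minimal sketch: the conversion constants are the standard definitions (1 atm = 101,325 Pa, 1 bar = 100 kPa, 1 psi ≈ 6,894.757 Pa), while the function name `convert_gpa` and the dictionary labels are just illustrative shorthand for the claims discussed above.

```python
# Standard unit definitions (psi value rounded to three decimals).
PA_PER_ATM = 101_325.0
PA_PER_BAR = 100_000.0
PA_PER_PSI = 6_894.757


def convert_gpa(pressure_gpa: float) -> dict:
    """Convert a pressure in GPa to kbar, atmospheres and psi."""
    pa = pressure_gpa * 1e9
    return {
        "kbar": pa / PA_PER_BAR / 1e3,
        "atm": pa / PA_PER_ATM,
        "psi": pa / PA_PER_PSI,
    }


# Pressures quoted in the article.
CLAIMS_GPA = {
    "metallic hydrogen": 400.0,
    "CSH (retracted 2020 paper)": 267.0,
    "N-doped lutetium hydride (2023 paper)": 1.0,
}

for label, gpa in CLAIMS_GPA.items():
    c = convert_gpa(gpa)
    print(f"{label}: {gpa:g} GPa = {c['kbar']:,.0f} kbar "
          f"= {c['atm']:,.0f} atm = {c['psi']:,.0f} psi")

ratio = CLAIMS_GPA["CSH (retracted 2020 paper)"] / CLAIMS_GPA["N-doped lutetium hydride (2023 paper)"]
print(f"CSH claim vs. new claim: {ratio:.0f}x the pressure")
```

The new claim thus sits at roughly 1/267th of the CSH pressure, although 1 GPa is still close to 10,000 atmospheres.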

Even though it’s still early days, it shouldn’t take long for other superconductivity researchers to try their hand at replicating these results. Although the gears of science may seem to move slowly, this is mostly due to the effort required to verify, validate and repeat. The simple answer to whether we will have room-temperature superconductors in our homes next year is a definite ‘no’: even if the claims about this new hydride turn out to be correct, there are still major issues to contend with, such as the pressurized environment it requires.

In the optimal case, these latest results by Ranga Dias and colleagues are confirmed experimentally by independent researchers, after which the long and arduous process towards potential commercialization may conceivably commence. In the less optimal case, flaws are once again detected and this paper turns out to be merely a flash in the pan, after which superconductivity research returns to business as usual.

One thing remains true either way: as long as the scientific method is followed, deceptions and mistakes will be caught, as physical reality does not concern itself with what we humans would like reality to be.

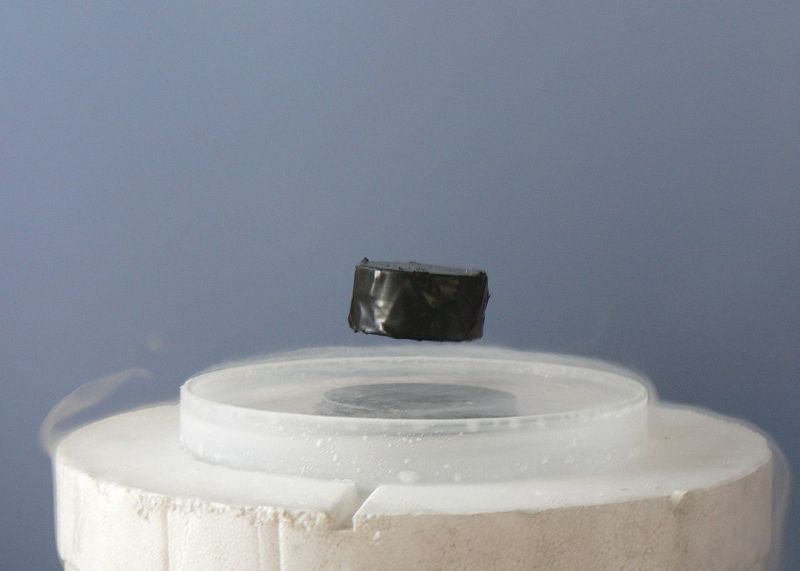

Featured image: “Meissner effect p1390048.jpg” by [Mai-Linh Doan]

An interesting thought experiment might be to imagine the sorts of end-user devices/packaging that would go along with a high-pressure-but-room-temperature superconductor. It’s easy to imagine an ambient-pressure, low-temperature superconductor: just put your “ordinary” electronics inside a refrigerator or immerse them in liquid nitrogen/helium/etc. Long-distance transmission lines end up as jacketed cables where the outer jacket is hollow and filled with the coolant.

Now what does this look like in the high-pressure world? There are certain materials which can “freeze in” a certain amount of stress as they solidify, Gorilla Glass (and tempered glass) probably being the most common examples. Would such a system work for these superconductors? Would you end up with something like an extremely rigid glass-encapsulated conductor, where the glass shell locks in the pressure on the inner superconductor? What sort of mechanical setup do you need to get to 1 GPa?

Here you go Kid. Here’s your superconducting Gameboy.

Oh… one thing… there’s the energy of a dozen sticks of dynamite trapped in there, so be careful not to scratch it.

Yeah, I definitely thought about the consequences of nicking the outer shell of a high-pressure high-voltage line.

But on the other hand, a piano is also under an enormous amount of tension, and we let kids play those. (Slightly older kids get to throw them off buildings, if they go to the right schools.)

To be fair, I have always been afraid of pianos for that reason. Never know when it’s going to snap.

It would kind of depend on the size. Something the size of an SMD component might not be too bad if it “popped”. Now, if it’s the size of a standard cellphone battery, that could be bad.

Considering a superconductor only needs to be a hair thin to conduct a whole lot of current (limited by its critical current density), the embedded energy doesn’t necessarily need to be all that great.

You don’t need a car’s leaf spring to squeeze it down – it would be more like a microscopically thin shrink wrap sleeve, much like glass fiber. A Prince Rupert’s drop has surface stresses almost up to 1 GPa (0.5 – 0.7 GPa) and while it shatters quite dramatically, the outburst can be contained more or less in a paper bag.

Tempered glass has a built-in surface compression of around 50–150 MPa, up to 300 MPa. Common steel breaks at around 355 MPa, while ultra-high-strength (UHS) steel goes to 1,500 MPa or so.

1 GPa is just within reach for real-world ordinary materials we have.
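How much energy is actually locked into such a pre-stressed shell? A rough back-of-the-envelope sketch uses the elastic strain energy density of a linear-elastic material under uniaxial stress, u = σ²/(2E). The Young's modulus of about 70 GPa for glass, the assumption that the entire volume sits at 1 GPa, and both example geometries are hypothetical inputs for illustration, so treat the results as order-of-magnitude only.

```python
import math


def strain_energy_density(stress_pa: float, youngs_modulus_pa: float) -> float:
    """Elastic energy per unit volume (J/m^3) of a linear-elastic material
    under uniaxial stress: u = sigma^2 / (2E)."""
    return stress_pa ** 2 / (2.0 * youngs_modulus_pa)


GLASS_E = 70e9   # assumed Young's modulus of glass, ~70 GPa
STRESS = 1e9     # 1 GPa, assumed uniform throughout the volume (an upper-bound simplification)

u = strain_energy_density(STRESS, GLASS_E)

# Hypothetical geometries, not taken from any real device:
fibre_d = 100e-6                                     # 0.1 mm diameter fibre
fibre_volume_per_m = math.pi * (fibre_d / 2) ** 2    # m^3 per metre of fibre
battery_volume = 0.05 * 0.07 * 0.005                 # 5 x 7 x 0.5 cm block, m^3

print(f"energy density: {u / 1e6:.1f} MJ/m^3")
print(f"0.1 mm fibre: {u * fibre_volume_per_m * 1e3:.0f} mJ per metre")
print(f"phone-battery-sized block: {u * battery_volume:.0f} J")
```

Under these assumptions, a hair-thin fibre stores on the order of tens of millijoules per metre, while a phone-battery-sized block at the same stress holds on the order of a hundred joules.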

I was doing this back in the 80’s.

Can’t speak to the researchers’ credibility, but while the article states “near-ambient pressure”, it goes on to state that the pressure is 10 kbar (145,000 PSI), so… I guess near-ambient as far as diamond anvil cells go? Jupiter?

They also mentioned a “plan” to introduce alloys designed to raise the internal stresses in the material, to move it closer to “ambient” pressure.

Near-ambient means not needing diamond anvil cells, I guess…

“I guess near ambient as far as diamond anvil cells?”

Near-ambient meaning you don’t need diamond anvil cells. 10 kbar is ouch, but you can build a pressure chamber that can handle it. If you can lower it by a factor of 10-20, you’re getting to the point where the bottom of the ocean basically does it for you.
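The ocean-floor comparison is easy to check with the hydrostatic relation P = ρgh. This sketch assumes a constant seawater density of 1,025 kg/m³ and g = 9.81 m/s², ignoring the slight compression of seawater at depth, so the depths are approximate; `depth_for_pressure` is an illustrative helper name.

```python
RHO_SEAWATER = 1025.0   # kg/m^3, assumed constant (real seawater compresses slightly with depth)
G = 9.81                # m/s^2


def depth_for_pressure(pressure_pa: float) -> float:
    """Depth (m) at which the hydrostatic gauge pressure of seawater reaches pressure_pa."""
    return pressure_pa / (RHO_SEAWATER * G)


TARGET = 1e9  # 10 kbar = 1 GPa, in Pa
for factor in (1, 10, 20):
    depth_km = depth_for_pressure(TARGET / factor) / 1000
    print(f"10 kbar / {factor}: about {depth_km:.1f} km of seawater")
```

Reaching the full 10 kbar would take a water column of roughly 100 km, whereas a tenfold reduction brings it to about 10 km, comparable to the deepest ocean trenches, and a twentyfold reduction to about 5 km.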

With this having made the waves it has so far, I am reasonably confident someone will try to replicate it.

But taking into account the history of the retracted paper, I wonder if at some point other scientists will stop bothering to try to replicate this group’s results, especially since the incentives are already often stacked against top scientists replicating other people’s publications, as the laurels to be gained are generally limited.

I have been an active scientist for about 20 years now, about 10 years out of grad school. I have worked in physical (chemistry, organic and inorganic synthesis and catalysts) and biochemical labs (virology, enzymology) and have many, many personal friends working at top universities and government labs (NASA, NIH, CDC, etc.) in highly diverse fields.

I have never had, nor has any of them ever had, a paper retracted. Not even once and that includes publications in Science, Nature and all the other “good” journals. Not just that, but to have multiple papers published then retracted is so abnormal as to elicit national news!

So, I’m pretty skeptical, to put it mildly.

Only about 600 papers a year are retracted worldwide, so it is indeed rare. That said, how many actually get checked/replicated and questioned? Probably only the ones that are a) wildly unusual in their claims or b) clearly copied. It makes it more likely that “I’ve found a new superconductor” is going to be analyzed more closely than “Material type A yields 1% higher UTS than material type B”. We’ve all seen the endless streams of paper-mill groups that publish infinitesimally small changes in some simulation result each month.

That, and given OP’s comment about having a large scientific network at “top” universities: it’s well known that connections like that have a big influence on publications and on confirming each other’s work, circumventing peer review.

Being part of a big group can actually mean your work is less good than that of an outsider or small uni, since their network will be smaller, and peer review harsher.

So indeed, if it’s not something very novel and useful, most people just won’t bother, especially if the researchers are well connected and well regarded.

There have been experiments about publishing to prove that tendency.

I’ve never seen anyone with an old-boys club when it comes to publication (usually the opposite, they’re keen to shoot down people with competing research interests), but I’ve only been in a couple of the UK’s top establishments, and I can imagine it might happen. Can you link to the experiments to save me searching?

If there is an old boy’s club, I’d like to be a part of it. Also, it isn’t like I just hang out at the yacht club, my network certainly (and mostly) includes the lowly (like me) and medium-scale researchers. I too would like to see some evidence that being part of a top place “circumvents peer review,” it just has not been my experience. Peer review is far, far from perfect but like science itself, it is about the best we can do. I definitely agree, in the words of Sagan that “extraordinary claims require extraordinary evidence” and those claims will be highly scrutinized, but even my own goofy, inconsequential work was highly scrutinized by my 1) ethical research mentor/principal investigator, 2) Peer reviewers and 3) everyone I ever met at conferences.

As I recall, conventional “low-temperature” superconductivity is well understood. It’s the high-temperature variety that is up for grabs.

Room temperature superconductivity is like practical Fusion, it’ll get here when it gets here.

Yes, my immediate thought was “Anyone up for a re-run on cold fusion?”. I’m grateful for the update on where that field stands today, though I recall I saw that at one time NRL were determinedly checking that absolutely all the rocks had been turned over on the original concept.

EMdrive was yet another brainwave that relied on a vast supply of Unobtainium for its success.

If you want a good example where contamination seems to have played a role check out Polywater, a field that was causing chemists to get their knickers in a twist when I was a wet-behind-the-ears young college student: https://en.wikipedia.org/wiki/Polywater

As Sagan put it: “Extraordinary claims require extraordinary evidence”, and quite rightly so.

If this fails they can always turn to publishing climate porn, no one’s career is ever harmed by getting it totally wrong in that area of “science”. Fail big enough and they’ll make you a fellow of the American Academy of Sciences.

Someone has an opinion!

It’s not ‘getting it wrong’ that causes problems in science careers. It’s getting it wrong and hiding it and publishing anyway. Much like most other jobs.

I do love how certain folks like to point out individual studies from 40 years ago saying that we are heading into an ice age (that they have only heard about, not actually read) as proof that climate science is all made up though. Really showing their literacy.

Fact: A competent modeler can get the model to tell him anything he wants it to.

That’s the definition of ‘competent modeler’.

Anything based on a model not tuned by backcasting (and how could it be with novel conditions) is pure opinion. Not even worth reading if the dataset and model aren’t completely open.

Climate models can tell you what you want to hear by adjusting the ‘CO2 level to water vapor level feedback co-efficient’. Don’t set it too high or the model will go to Venus with the first gnat exhale. Even the worst of the chicken littles learned not to do that 20 years ago. It was embarrassing.

It’s often assumed that if a climate model can reproduce history, it can predict the future, because people intuitively think of it like shooting a bullet through a long pipe. It must be going straight since it cleared the narrow pipe, but since positive feedback models are always unstable, shooting off to “venus” or “iceball earth” is just a question of time after the real data ends. These models fundamentally assume that the earth climate is not a stable system and will inevitably run off to some extreme, and we’re just lucky to exist through a seemingly stable period.

The difference between two exponential curves – the error you’re left with – is also an exponential curve. The 95% confidence interval 100 years into the future opens up like the cone of a trumpet. Then there’s further bias in rejecting model runs and models which predict more cooling or stable temperatures for being “obviously wrong” while not rejecting as much of those which predict extremely high temperatures – so the “most likely scenario” based on the distribution of multiple model and sets of parameters becomes skewed upwards.

It’s kinda like that experiment where people guess the number of Skittles in a jar. Everyone gets it wrong, but the average is surprisingly correct, because as much as people guess too high they also guess too low. Rejecting obviously low-balled answers like “a hundred and a brick” would actually make the average less accurate because it neglects to remove the guesses which are too high. You’re supposed to remove outliers at both ends, but ideologically motivated people have different criteria for what counts as an “outlier”.

You think that everyone outside the community is aware of this bias, and everyone inside the climate modelling community is completely unaware and not accounting for it? Seems to me like another case of someone thinking they found a critical flaw in something which they don’t fully understand the precise details of. There’s a name for it….

I’d rather say most people are aware of it, but mostly putting it down as not that serious, or “at least I’m not doing it”, because addressing it would be opening a whole can of worms that would only give the loonies in the opposition more ammunition.

Besides, it wouldn’t be the first time that such expectation bias has tainted even the hard sciences. People reject results that “can’t be right”, while accepting dubious results that align with expectations. Feynman recounts in his book the case of measuring the mass of the electron, where: “When they got a number that was too high above Millikan’s, they thought something must be wrong – and they would look for and find a reason why something might be wrong. When they got a number close to Millikan’s value they didn’t look so hard.”. Of course Millikan had originally measured it wrong.

It does correct itself over time and people quietly revise their models, but it also means the science that gets used for deciding policy right now is always going to be skewed.

It’s rather a myth that science is done by “serious men performing science methodically and rigorously”. We’re taught this myth in schools which prefer rather to tell a good simple story than what actually happened:

https://arxiv.org/pdf/physics/0508199.pdf

“A selected history of expectation bias in physics”

You don’t even need to bring modeling into it. We have actual measured data clearly showing accelerated warming that correlates with ghg emissions, a mechanism to link it and no natural phenomena that could reproduce the same results.

The modeling is just frosting.

It’s happening. We’re doing it.

Pretty amusing when the public poops on science. I mean, seriously, you think all that gibberish about “exponential curves .. 95% confidence..” compares to ice packs melting, seas and temperatures rising, CO2 and methane rising? Empirical, 100%. Your spaceship must be the only place that hasn’t been affected by weather extremes.

Enlighten us with what is the cause of rising temperatures. Just Nature misbehaving? I’ve never heard of any other explanation from change-doubters.

No doubt, not all research is of the same quality. Not all researchers are competent and unbiased. BUT, when it comes to the evaluation of what is presented, the READER’s BIAS is a far greater problem. Some prefer their fantasy world.

The problem with car emissions, is that it’s in gaseous form. Here’s a little visualization:

Think of the equivalent mass of brown liquid coming out the car exhaust. That would be a 5ft. cube of muck annually per car, on average (4.6 tons). Over the life of the car, your room would be filled. Multiply that by a world full of vehicles — That’s a lot of crap! Shoveling driveways, snow plows clearing all year round.

For some, it’s beyond possibility that we are the problem. Facts be damned. I remember 40 years ago as a teen, scientists were warning about what would happen as CO2 levels rise. They were dead-on.

Even if this were a natural hiccup, how about easing the load? Time for humanity to grow up. Only kids can get away with “I didn’t know”. Only Losers stick their head in the sand.

Luckily, CO2, methane, particulates, etc. just “go away”.

Now take a deep breath and Happy Motoring!

> We have actual measured data clearly showing accelerated warming that correlates with ghg emissions

Sure. That’s not the point.

The point is that the earlier climate models show faster acceleration and greater doom and gloom than the later models, because the science is converging towards more realistic models that approximate real data accumulated since 20-30 years ago. However, the policies that we have, and the politicians, and the demagogues and activism, are still running on the models from 20-30 years ago.

>Enlighten us with what is the cause or rising temperatures. Just Nature misbehaving? I’ve never heard of any other explanation from change-doubters.

That’s an example of what happens when you start criticizing the science/scientists: you’re put down with the accusation that you’re dismissing the science entirely.

No. The point is that while the scientists are observing a real phenomenon, their results and predictions will be psychologically, ideologically and politically biased. Not accurate – until much later when everyone is forced to admit that they were too early to call it. People see what they want to see in it – on both sides of the question.

….Which are among the many reasons why humans should not presume themselves capable of “saving the planet.”

The notion that 50 years of computerized climate modeling qualifies creatures with 75 year lifespans to argue the correct thermostat setting for a 4 billion year old planet represents a breathtaking degree of hubris.

Yes. This is what science does. It’s not easy, but at least we try.

That is in reply to:

“The notion that 50 years of computerized climate modeling qualifies creatures with 75 year lifespans to argue the correct thermostat setting for a 4 billion year old planet represents a breathtaking degree of hubris.”

Yes. This is what science does. It’s not easy, but at least we try.

Mostly in response to ‘Dude’ and ‘HaHa’:

‘Models’ and ‘scientists’ is pretty vague. Unless you have specific models and dates to cite, you’re just making blanket statements. Also, don’t confuse a scientific study with what is then reported by the media, and Bob next door. If you have a study that predicted an ice age or Venus in the near future, do share.

Anyway, thank you to all the diligent scientists and activists who make this world a better place. Without you we would still be inhaling lead, drinking cholera-tainted water, and baking under a larger ozone hole. Personally, I much prefer getting my info from scientific institutions than from public opinion. Preferably European. When I get sick I listen to medical advice, not some Mom on YouTube, or a clown having me drink bleach.

As far as being alarmist, unfortunately that’s by necessity. How long did it take to get action on lead, cigarettes, ozone depleting chemicals?

It’s a good thing they’ve already received venture capital for commercialization and filed patents. That’s their excuse for not sending samples to other independent laboratories!

I often use room temperature superconductors in my circuits. A 0 ohm resistor is actually very useful in many places.

With the focus on superconductivity, I fear that researchers may be missing the low-hanging fruit. It is incredibly difficult, if not impossible, to achieve superconductivity at normal ambient temperatures. However, it may be possible to reduce the resistance compared to existing conductors. If, for example, it is possible to reduce the resistance by 25% compared to copper, then that in itself would go a long way to reducing losses in distribution lines.

To add to the ongoing controversy, some say that the cold fusion thing was actually hydrogen being converted to a lower energy state (dark matter). See BrilliantLightPowerInc and its SunCell.

You wrote: “researchers finding themselves unable to replicate the results of the original 1989 experiment by Martin Fleischmann and Stanley Pons.” That is incorrect. By late 1990, cold fusion was replicated in 92 laboratories, listed here:

https://lenr-canr.org/acrobat/WillFGgroupsrepo.pdf

It is true that researchers in some labs had difficulty replicating, but there were more successful replications than failures.

By the late 90s, it was replicated in over 180 major laboratories, and these replications were published in mainstream, peer-reviewed journals.

Let’s say we get room-temperature/pressure superconductors. What really changes? Slightly more efficient transmission lines, maybe motors, etc.

It really is not society-changing in the way that abundant cheap sustainable energy/fusion would be.

This time Dias has made available what the team says is the “raw data”, but if this raw data is real, the authors have made an ultra-high-precision measurement of 10 picovolts.

They might want to publish a paper on how exactly they managed that!
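For a sense of scale, a common yardstick for small voltage measurements is the thermal (Johnson-Nyquist) noise of a resistor, V_rms = √(4·k_B·T·R·Δf). The resistances, the 300 K temperature and the 1 Hz bandwidth below are hypothetical values chosen purely for illustration; they are not the parameters of the actual measurement, and real instruments usually add amplifier noise on top of this floor.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact SI value)


def johnson_noise_vrms(resistance_ohm: float, temperature_k: float, bandwidth_hz: float) -> float:
    """RMS thermal (Johnson-Nyquist) noise voltage of a resistor: sqrt(4 k_B T R df)."""
    return math.sqrt(4.0 * K_B * temperature_k * resistance_ohm * bandwidth_hz)


# Hypothetical measurement parameters, chosen only for illustration:
for r in (1.0, 0.1, 0.01):
    v = johnson_noise_vrms(r, 300.0, 1.0)
    print(f"R = {r:g} ohm, 300 K, 1 Hz bandwidth: {v * 1e12:.1f} pV rms")
```

Even a 10 mΩ resistor at room temperature produces around 13 pV rms of thermal noise in a 1 Hz bandwidth, so resolving a 10 pV signal would typically call for long averaging or very narrow bandwidths.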

Interesting note, I actually observed a possible Meissner effect, briefly in a freshly made sample of a material here.

Would have been several years ago.

The exact experiment involved a bismuth-based alloy being exposed to microwave energy in an attempt to make a specialized material for another project, but I botched both the timing and the quantities because my scales were evidently defective, reading oddly due to internal damage.

Took the somewhat frazzled dark grey block out, dusted off the SiC susceptor and allowed it to cool.

Left it on my desk in freezing conditions while I got on with another project.

Was the other side of the room and heard a very strange “click”.

Turned around expecting it to be something like a magnet move, and initially saw nothing.

Was rather astonished to see a neodymium magnet that had previously been on my desk, floating about 1/2″ above my sample.

Rushing to get my meter to see what the ^!(^!&% was going on, it slowly drifted back down and landed.

Did actually measure some wild resistance fluctuations then it settled down.

Tried cooling it back down but got no response though the resistance fluctuations persisted for days.

Not quite sure what happened there, and I never did work out why this sample worked but others did not. I believe it might have to do with stirring it with a screwdriver handle that had been used for opening and then messing with an old OLED panel from a watch, so it might have been contaminated with iridium (Ir).

Alas no pictures but still have the sample somewhere here.

It’s pretty strange, with very large crystals, so something odd happened.

My working hypothesis is that the extended microwave cooking boiled off some of the metal or otherwise stratified it, so the RTSC must have been just at the surface or below.

Actually, the Pons/Fleischmann experiments were replicated over the years. A theory, the Widom-Larsen theory, adopted by U.S. government agencies such as NASA, ARPA, DOE, etc., was developed in 2005 to explain the Pons/Fleischmann phenomenon.

LENR is not fusion; it’s a reaction, and it is nuclear, as noted in the U.S. Naval Institute’s web magazine:

“According to the (Widom-Larsen) theory, localized LENR reactions take place on the surfaces of nanostructured metallic hydrides—metals that have reacted with hydrogen to form a new compound.13 Ultralow-momentum neutrons are created through collective “many-body quantum effects.” (These occur when more than two subatomic particles interact; their behavior is very different from two-body interactions.) As nearby nuclei absorb neutrons—and the nuclei subsequently decay, releasing beta or alpha particles—LENRs transmute elements and release energy.” (More at: https://www.usni.org/magazines/proceedings/2018/september/not-cold-fusion)

There is STILL a lot of confusion around the old “Cold Fusion” Pons/Fleischmann experiment, and the confusion is exacerbated by research on plasma fusion, which has gained in popularity in recent years. But as a sure sign that governments are taking LENR seriously, the European Union has even launched an initiative, https://www.cleanhme.eu, to try to get to the bottom of the phenomenon. BTW, a good source of info on LENR is https://news.newenergytimes.net/.