From the early days of ARPANET until the dawn of the World Wide Web (WWW), the internet was primarily the domain of researchers, teachers and students, while hobbyists ran their own BBS servers that you could dial into, but which were not connected to the internet. Pitched in 1989 by Tim Berners-Lee while working at CERN, the WWW was intended as an information management system that’d provide standardized access to information, using HTTP as the transfer protocol, HTML (inspired by the SGML standard) and later CSS to create formatted documents. Even better, it allowed personal websites to pop up, enabling the eternal joy of web rings, animated GIFs and forums on any conceivable topic.

During the early 90s, as the newly opened WWW began to gain traction with the public, the Mosaic browser formed the backbone of the WWW browsers (‘web browsers’) of the time: Internet Explorer licensed the Mosaic code, and Netscape Navigator was written by former Mosaic developers. With the WWW standards set by the World Wide Web Consortium (W3C), founded by Berners-Lee, the stage appeared to be set for an open and fair playing field for all. What we got instead was the brawl referred to as the ‘browser wars’, which, although it has changed, continues to this day.

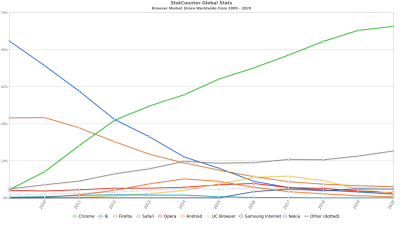

Today it isn’t Microsoft’s Internet Explorer that’s ruling the WWW and setting the course for new web standards; instead we have Google’s Chrome browser partying like it’s the early 2000s while wearing an IE mask. With former competitors like Opera and Microsoft having switched to Chromium, the open-source codebase that underlies Chrome, what does this tell us about the chances for alternative browsers and the future of the WWW?

We’re Not In Geocities Any More

For those of us who were around to experience the 1990s WWW, this was definitely a wild time, with the ‘cyber highway’ being both hyped up and yet incredibly limited in its capabilities. Operating systems didn’t come with any kind of web browser until special editions of Windows 95 and the like began to include one. In the case of Windows this was of course Internet Explorer (3+), but if you were so inclined you could buy a CD with Netscape, Opera or another browser and install that. Later, you could also download the free ‘Personal Edition’ of Netscape Navigator (later Communicator) or the ad-supported version of Opera, if you had a lot of dial-up minutes to burn through or had a chance to leech off the school’s broadband link.

Once online in the 90s you were left with the dilemma of where to go and what to do. With no Google Search and only a handful of terrible search engines along with website portals to guide you, it was more about the joys of discovery. All too often you’d end up on a web ring and a host of Geocities or similar hobby sites, with the focus primarily on sharing information, as Tim Berners-Lee had envisioned. Formatting was basic, and beyond some sites using fancy framesets and heavy use of images, things tended to Just Work©, until the late 90s brought Dynamic HTML (DHTML), VBScript (VBS) and JavaScript (JS), along with Java Applets and Flash.

VBS was the surprising victim of JS, despite being built into IE along with other Microsoft products, whereas JS had been thrown together in about ten days of design and implementation time just so that Netscape could ship a scripting language of its own. This was the era when Netscape was struggling to keep up with Microsoft, despite the latter having otherwise completely missed the boat on this newfangled ‘internet’ thing. One example was Internet Explorer implementing the HTML iframe feature while Netscape 4.7x did not, which led to one of the first notable examples of websites breaking for reasons other than Java Applets.

As the 2000s rolled around, the dot-com bubble was on the verge of imploding, which left us with a number of survivors, including Google and Amazon, and we would soon swap Geocities and web rings for MySpace, Facebook and kin. Meanwhile, the concept of web browsers as payware had fallen by the wayside, as some envisioned browsers as Open Source Software projects (e.g. the Mozilla Organization) or as an integral part of a WWW-based advertising business (Google with Chromium/Chrome).

What’s Your Time Worth

When it comes to the modern WWW, there are a few aspects to consider. The first is that of web browsers, as these form the required client software to access the WWW’s resources. Since the 1990s, the complexity of such software has skyrocketed. Rather than being simple HTML layout engines that accept CSS stylesheets to spruce things up, they now have to deal with complex HTML5 elements like <canvas>, and CSS has morphed into a scripting language nearly as capable and complex as JavaScript.

JavaScript meanwhile has changed from the ‘dynamic’ part of DHTML into a Just-In-Time-compiled monstrosity, just to keep up with the megabytes of JS frameworks that have to be parsed and run for a simple page load of the average website, or ‘web app’, as they are now more commonly called. There is also increasing use of WebAssembly, which essentially adds a third language runtime to the application. The native APIs exposed to the JavaScript side are now expected to offer everything from graphics acceleration to access to microphones, webcams and serial ports.

Back in 2010, when I innocently embarked on the ‘simple’ task of adding H.264 decoding support to the Firefox 3.6.x source, the experience taught me more about the Netscape codebase than I had bargained for. Even setting aside the nearly complete lack of documentation and of a functioning build system, the sheer amount of code made the codebase essentially unmaintainable, and that was thirteen years ago, before new JavaScript, CSS and WebAssembly features got added to the mix. In the end I implemented a basic browser using the QtWebKit module instead, but got blocked there when that module was discontinued and replaced with the far more limited Chromium-based module.

These days I mostly hang around the Pale Moon project, which has forked the Mozilla Gecko engine into the heavily customized Goanna engine. As the project notes, although they threw out anything unnecessary from the Gecko engine, keeping up with the constantly added CSS and JS features is nearly impossible. It ought to be clear at this point that writing a browser from scratch with a couple of buddies will never net you a commercial-grade product, which is also why Microsoft threw in the towel on its EdgeHTML engine.

Today’s Sponsor

The second aspect to consider with the modern WWW is who determines the standards. In 2012 the internet was set ablaze when Google, Microsoft and Netflix sought to push through the Encrypted Media Extensions (EME) standard, which requires a proprietary, closed-source module with per-browser licensing. Although Mozilla protested, it was ultimately forced to implement EME regardless.

More recently, Google has sought to improve Chrome’s advertising targeting capabilities with Federated Learning of Cohorts (FLoC) in 2021, which was marketed as a friendlier, interest-based alternative to tracking with cookies. After negative feedback from many sources, Google quietly dropped FLoC, only to bring it back in renamed form as the Topics API and try to ram it through again.

Although it’s easy to call Google ‘evil’ here and point out that they dropped the ‘Don’t be evil’ motto, it’s important to note the context. When Microsoft was bundling Internet Explorer with Windows and enjoying a solid browser market share, it was doing so from the position of a software company, leveraging this advantage for features like ActiveX in corporate settings.

Google, on the other hand, is primarily an advertising company, which makes it understandable that it would leverage its browser near-monopoly for its own benefit. Meanwhile, Mozilla’s Firefox browser is scraping by with a <5% market share. Mozilla has also changed since the early 2000s: Firefox is now developed by the for-profit Mozilla Corporation, a subsidiary of the non-profit Mozilla Foundation, and its revenue comes from search query royalties, donations and in-browser ads.

The somewhat depressing picture this paints is that unless you restrict the scope of the browser as Pale Moon does (no DRM, no WebRTC, no WebVR, etc.), you are not going to keep up with core HTML, CSS and JS functionality without a large (paid) team of developers. Even then, you remain beholden to the Big RGB Gorilla in the room: Google, which sets the course of new functionality, including removing support for image formats while adding its own.

Where From Here

Although taking Google on head-first would be foolishness worthy of Don Quixote, there are ways that we can change things for the better. One is to demand that websites we use and/or maintain follow either the Progressive Enhancement or the Graceful Degradation design philosophy. The latter is built into HTML and CSS: a missing or broken CSS file merely leads to the HTML document being displayed without formatting, with the text and images still visible and any URLs usable.

Progressive enhancement is similar, but more of a bottom-up approach: the base design targets a minimum set of features, usually just HTML and CSS, and JavaScript support in the browser enhances the experience without affecting the core functionality for anyone visiting the site with JS disabled (via NoScript and/or a blocker like µMatrix). As a bonus, this is great for accessibility (e.g. screen readers) and for search engine optimization, as all text is readable by crawler bots, which tend not to run JS.
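As a rough illustration of that layering, here is a minimal TypeScript sketch, not a prescribed implementation. It assumes a hypothetical search form marked with a `data-enhance` attribute; the structural interfaces (`DocumentLike`, `FormLike`, `SubmitEventLike`) are stand-ins for the real DOM types so the sketch also runs outside a browser, and every name in it is illustrative rather than taken from any framework.

```typescript
// Structural stand-ins for the DOM types (Document, HTMLFormElement, SubmitEvent)
// so this sketch compiles and runs outside a browser. Names are illustrative.
interface SubmitEventLike {
  preventDefault(): void;
}
interface FormLike {
  addEventListener(type: "submit", listener: (ev: SubmitEventLike) => void): void;
}
interface DocumentLike {
  querySelectorAll(selector: string): ArrayLike<FormLike>;
}

// Layer 1, the baseline: server-rendered HTML that searches and navigates
// with no JavaScript at all. `action` is assumed to be a trusted, static path
// (it is interpolated without escaping).
function renderSearchForm(action: string): string {
  return (
    `<form action="${action}" method="get" data-enhance="live-search">` +
    `<input type="search" name="q" required>` +
    `<button type="submit">Search</button>` +
    `</form>`
  );
}

// Layer 2, the enhancement: attach extra behaviour only if a DOM is present.
// Returns the number of forms enhanced; 0 means the baseline is left untouched.
function enhanceForms(
  doc: DocumentLike | undefined,
  onSubmit: (ev: SubmitEventLike) => void,
): number {
  if (doc === undefined || typeof doc.querySelectorAll !== "function") {
    // No DOM (scripts blocked, crawler, very old browser): do nothing.
    return 0;
  }
  const forms = doc.querySelectorAll('form[data-enhance="live-search"]');
  for (let i = 0; i < forms.length; i++) {
    forms[i].addEventListener("submit", onSubmit);
  }
  return forms.length;
}
```

In a browser, a deferred script would call something like `enhanceForms(document, handler)`, where the handler might fetch results in place. When scripts are blocked, that call simply never happens and the plain GET form keeps working, which is the whole point of building from the bottom up.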

Perhaps by using methods like these, we as users of the WWW can give Google and kin some hint as to what we’d like things to be like. Considering the trend of limiting the modern web to browsers with the latest, bleeding-edge features and the correct User-Agent string (with Discourse as a major offender), such grassroots efforts may be the most effective in the long run, along with ensuring that alternative, non-Chromium browsers are not faced with extinction.

Gee, it’s awfully nice that some of you are waking up to the alarm bells that we were sounding in the aughts, long after we can do something about it.

Well there’s always the dark web if one wants to start afresh.

https://en.wikipedia.org/wiki/List_of_Tor_onion_services

Take Microsoft for instance.

They own:

NPM

GitHub

TypeScript

Visual Studio and VS Code

You want a job?

Microsoft owns LinkedIn

Maybe somewhere to deploy?

Azure

They have a way wider grip than even that simple list above.

Hey, but it’s all open, so it’s fine.

You forgot Minecraft.

And soon Activision/Blizzard

“With no Google Search and only a handful of terrible search engines along with website portals to guide you, it was more about the joys of discovery.”

Ah, the days of the yellow “phone book” listings of web sites.

Terrible search engines? I kinda rather liked the Yahoo “yellow pages” approach, at least for searching for something common. Right now I’m honestly wishing AltaVista was still around as legitimate competition for Google. I’m beginning to dislike their search engine, but I see nothing out there as good at this point.

Both Aliweb.com and Webcrawler.com from ’93 are still around today, the former looking as ’90s ‘Archie’ as ever.

(I don’t actually use either so can’t comment on quality, I just keep the bookmarks around so I don’t forget they exist, since that’s what us old geezers tend to do)

On aliweb.com, it’s still the same as before, hence many links are dead. Webcrawler is now just another Bing copy.

The history of browsers is always told via the Mosaic line then Google just comes up out of nowhere.

Mosaic was the first browser; Internet Explorer and Netscape were both originally forked from Mosaic. Then they fought the great browser wars, competing with frustratingly incompatible implementations of new features that made web developers’ lives hell, until finally the evil empire Microsoft won. As its last act, dying Netscape pulled out all the third-party code it did not own and open-sourced what was left. Mozilla picked up the ashes of Netscape, intending to replace the missing code, but instead spent years creating a near-complete rewrite. Their browser Mozilla and its smaller brother, Firefox, almost reignited competition, but then Google swooped in (out of nowhere?) and ate everyone’s lunch.

That’s the story everyone knows and tells.

But Google’s browser has a lot of history too.

While Mickeysoft and Netscape were still slugging it out, some of us used Konqueror, the fairly obscure web browser of the open-source KDE desktop, with its web engine, KHTML. Unix-way purists hated Konqueror with its integrated file browser and even terminal. It was very reminiscent of Mickeysoft’s attempt to shut out Netscape by merging Internet Explorer with their Windows operating system. But for web development that was pretty useful. It was the first browser (I think) with tabs, or at least it had tabs before the big browsers did. The file browser could support scp transfers, and with the built-in terminal one could run a text editor. So web development and testing could fit in one convenient window. That was awesome in those days of smaller, single-monitor computers. Otherwise, keeping an editor, a browser and a file transfer program all open at the same time and juggling the windows around was kind of a pain in the ass.

A funny thing about KHTML: the developers seemed to REALLY like CSS. KHTML passed all the CSS Acid Tests years before the big browsers. But… unfortunately, as they excelled in CSS support, they seemed to have much less interest in Javascript. The Javascript engine always seemed to lag behind the rest.

Worse than the Javascript problem, Macromedia died. Macromedia made Flash, a third-party browser plugin that basically served the niche Javascript fills today. Adobe (arguably a more evil empire than Mickeysoft) bought the still-warm carcass of Macromedia and stopped updating the Linux version of Flash. In those days it seemed like EVERYONE used Flash, even for dumb features that could easily have been implemented in straight Javascript or even just HTML. As a result, a lot of pages didn’t work right in Konqueror. Eventually Konqueror gained the ability to run Netscape plugins, and CodeWeavers came out with their CrossOver plugin that made using the Windows version of Flash possible, but that was still slow, buggy and not free.

As “The Year of the Linux Desktop” failed to arrive (something this Flash situation contributed to a lot back then, I believe), a lot of Linux desktop enthusiasts switched to Macintosh, the computers of closed hardware, locked bootloaders, overpriced devices and hipsterism. Their maker, Apple, decided they needed their own browser and created Safari. They didn’t want to start such a large project from scratch, however, so they used the open-source KHTML. They quickly began adding the missing Javascript features that so plagued Konqueror. But… they were not good Open Source participants. At first they refused to share any of their improvements back with the community. When they were finally convinced to do so (because that’s kind of the price of using other people’s hard, open-source work to kickstart your own project), they were still jerks. They did not contribute back via version control or even patches. It was just a big tarball with their codebase, old KHTML and the code of their new fork (WebKit) jumbled together. They didn’t even follow existing coding styles.

With a lot of complaining, the KHTML people did try to clean up and merge Apple’s WebKit code. It was too much, however, and eventually WebKit itself became packaged as a library that could be substituted in place of the tidier but less featureful KHTML, making Konqueror more functional and also allowing others to make their own browsers just by slapping a UI around WebKit.

Open source controversy and spaghetti code aside, Apple did accomplish something great for open source and smaller browsers in general: they refused to support Flash. This forced web developers to begin learning to walk without their Adobe crutch. Pages that would not run on open-source and third-party browsers became fewer and farther between, until it was no longer a problem.

Finally came Google. By the time they began their own browser, basing it on WebKit was the only logical choice. So they did. For a time this made WebKit, which was based on KHTML from Konqueror and KDE, the most popular HTML engine on the Internet. Who saw that coming? Eventually, however, with so many generations of web features grafted on, WebKit became bloated and slow. How much of this was due to Apple’s famous spaghetti, I wonder? Eventually Google began simplifying, refactoring and cleaning WebKit’s code and made it into a new HTML engine, Blink. Blink seems to have replaced WebKit as the top HTML engine. Even Microsoft replaced their Mosaic-based Internet Explorer with Edge, which since 2019 has used Blink. Pretty much the only significant browsers not using Blink are Mozilla Firefox and other Mozilla derivatives, which still trace their history back through Netscape to Mosaic.

How much of KHTML lives on in Blink I wonder… Is nearly the whole world using a little piece of KDE?

A whole extra article in the comments! Thanks :)

💯🙏

+1, great extension to the original article.

Can’t we link this valuable comment at the end of the article, pin it to the top or something like that? Thank you, Twisty Plastic.

I second this motion

I would really like to know how much of Firefox is from Mosaic!

If Hackaday had moderation, [twisty plastic] would receive all my points. Thank you for sharing.

Kinda related / on topic: please vote for JPEG-XL support:

https://connect.mozilla.org/t5/ideas/support-jpeg-xl/idi-p/18433

https://bugzilla.mozilla.org/show_bug.cgi?id=1539075

So, “no one” noticed that during this great evolution of the “browser wars”, graphics capability, CPU power and speed, and the HUGE increases in RAM and drives had “no effect” on all of this? Credit where credit is due, people!

Only in that it allowed bloated code and feature creep without noticeable performance hits. Try running a modern browser on an average Celeron.

It’s the ads: lesser machines can barely load, let alone play, four ads at once, which is apparently now the industry standard.

Looks like it’s time for a change in web browsing. I have long dreamed of an ESP32 (or similar) HTML/Java/CSS-based rendering engine that would send JPEG images to a display driver / graphics card on a computer. No more hidden or embedded code, since it would be display-only. The mouse/pointer device would send coordinates back to the rendering processor, along with any keyboard presses for logins etc. HTML, Java or CSS would run on the rendering processor and send the responses back to the website. Downloaded files would be scanned by the rendering processor for viruses, malware etc. Since there would be separate memory and processor microcode, this hardware would minimize malware. A last feature would be no transmission of binary-encoded files. A new ASCII-segmented Unicode (with delineated segments for display, variable input and output) could be created that would be processed by a second rendering engine and checked for malware before being translated to the main processor’s binary code and stored for later use.

Sounds like Lynx on a VT100 to me

At one point many people experienced Lynx, but by now it’s relatively few. Websites got so complicated and bloated that it just became too much trouble. But since I got high speed in 2012, so much of the bad is hidden.

The future of the internet is a generation brain-damaged and made infertile by it, who will then forget how to maintain it. It will reset eventually.

Nature is healing.

From 1996, when I got internet, to 2001, I used Lynx exclusively. Until I got wideband in 2012, it remained my primary browser.

There was yahoo in 1996.

I’m not sure what this fussing is about. So many sites keep telling me there’s an app. The CBC was showing obnoxious ads, and when I complained they said “maybe you’d prefer our app”!

My knowledge of all this is pretty superficial, but it seems that browsers now need to be pretty slim and just implement a virtual machine that runs WebAssembly. CSS, HTML and JavaScript could be supported as libraries on top of WebAssembly and hopefully slowly replaced by better alternatives.

The browser will be replaced by the metaverse! /sarcasm

CSS still is nowhere near a scripting language. It’s just a bit more capable than it was. It’s still just a cascading style sheet that describes behaviour and aesthetics, with no actual logic programming possible. Or did I miss something and is it now anywhere near Turing-complete?

Essentially CSS is Turing-complete, yes: https://notlaura.com/is-css-turing-complete/

Only gotcha is that it does require a human to be in the loop somewhere, and there has to be HTML involved too, but at that point you’re already pretty deep into nitpicking what ‘Turing-complete’ truly means :)

Web pages are waaay too complicated now, in my opinion. Web 1.0 was the best. My favorite browser is NetSurf. It is unbelievably fast! Page loads run laps around Chrome. Of course, it doesn’t really support JS, so that’s to be expected. Unfortunately, way too many sites now are unusable without JS, so I usually end up using Brave, another Chrome derivative. Oh, for the good old days… As far as I am concerned, all those scripting languages should just go die! In the end we’d be better off. Don’t get me started on the security implications of modern web complexity…

+1. I still liked the Java applet model, where you downloaded an ‘application’ into the browser for application-type functionality, rather than JavaScript that manipulates the web page itself. It seemed to be a clever way to run/deploy your custom application as needed by your company or customer. I was really excited when the functionality became available…. At our company we were well on the way to realizing this when…. whammo… squashed by security :rolleyes:… Customers put their foot down on Java Applets…. Back to regular app deployments (CDs, DVDs, FTP downloads, etc.). With applets available, you could leave the web pages to just show static information (documents, pictures, media). That still seems logical to me, rather than the bottomless bloat you see today with the JS frameworks and such needed to build internet apps. It would make for a ‘clean’, small-footprint browser with a hook to a local (J)VM on the back side to run your app. However, I think we’re too far along now to ‘re-invent’ the browser model. So it goes…..

If you’re really longing for a return to applets, there are a few projects that aim to translate Java into JS or WebAssembly. CheerpJ is probably the least complex, in that it pretty much takes a .jar and runs it in the browser without any real changes to your code. Here’s a native Swing app running in the browser, for example: https://leaningtech.com/swing-demo/
I think it might only work up to Java 8, though. Probably not a good idea to develop new stuff in it, but a cool achievement nonetheless.

I’m sad Wasm didn’t seem to take off; it’s still there, but I rarely encounter it being used anywhere. When it was announced I was full of hope that it would herald a return to the desktop days, where you wrote a piece of software in whatever way and language you wanted and then compiled it into a format your machine understood. But it doesn’t seem to have gained much traction, and instead we continue to spend our time wallowing in the mud, wrestling with JavaScript :-(

I have to disagree with the anti-scripting language sentiment.

Javascript used sparingly can be a very good thing. For example, using Javascript to validate a form before submitting makes the process quicker and nicer for the user. Not that this should replace server-side input checking… And there is no reason the form should rely on Javascript, breaking if it isn’t available. It should instead just submit to the server with a plain old HTML submit button and the server should redirect the user back to the form with the old data filled back in and an error message explaining what went wrong. It should still be functional, just slower as the user must wait for it to be submitted and bounced back.

Large applications have their place too. For example, I’m in the process of “slowly” switching from OpenSCAD to JSCAD, in part because OpenSCAD does not run on my phone while JSCAD works just fine in my phone’s browser. Sounds strange to do that on a phone? I have a NexDock. (Yes, I know about ScorchCAD; it is incomplete and unmaintained, with no hull or minkowski.)

OTOH, a lot of pages do rely way too much on bloated scripting for no good benefit. One should certainly never need to have Javascript enabled just to look at static content. And yet often it is required…
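The form-handling pattern described in the comment above (quick client-side checks, with the server as the real gatekeeper and a plain redirect back to the form on failure) could be sketched in TypeScript roughly as follows. This is only a minimal illustration under assumptions: the `SignupForm` fields, the validation rules and the `/signup` path are hypothetical, no particular server framework is implied, and `handleSubmit` merely computes what the server should do rather than sending an HTTP response.

```typescript
// Hypothetical sign-up form; the field names are illustrative only.
interface SignupForm {
  name: string;
  email: string;
}

type SubmitOutcome =
  | { kind: "accepted" }
  | { kind: "redirect"; location: string };

// One rule set that can run in the browser (instant feedback) and on the
// server (the check that actually counts). The email test is deliberately loose.
function validate(form: SignupForm): string[] {
  const errors: string[] = [];
  if (form.name.trim() === "") {
    errors.push("Name is required.");
  }
  if (!/^[^@\s]+@[^@\s]+$/.test(form.email)) {
    errors.push("Email address looks invalid.");
  }
  return errors;
}

// Server-side handler for a plain HTML POST. On failure it sends the user back
// to the form, carrying the old input and the error messages in the query string
// so the form can be re-rendered pre-filled.
function handleSubmit(form: SignupForm, formPath: string): SubmitOutcome {
  const errors = validate(form);
  if (errors.length === 0) {
    return { kind: "accepted" };
  }
  const pairs: Array<[string, string]> = [
    ["name", form.name],
    ["email", form.email],
  ];
  for (const message of errors) {
    pairs.push(["error", message]);
  }
  const query = pairs
    .map(([key, value]) => `${encodeURIComponent(key)}=${encodeURIComponent(value)}`)
    .join("&");
  return { kind: "redirect", location: `${formPath}?${query}` };
}
```

A real application would more likely keep the old input and messages in a server-side session (so-called flash data) than in the URL, not least because email addresses in query strings end up in logs; the query-string version just keeps the sketch self-contained. The key design point is that `validate` is shared, so the JavaScript check is a convenience layered on top of a form that still works without it.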

While Mozilla may be for-profit now, they do at least maintain a browser engine separate from WebKit, which is used by just about everything else (TIL about Pale Moon, installing it shortly). Good enough reason to use it, IMO.

“The browser wars, never finished, they were.”–Grandmaster Yoda

Browsers are long gone except for some last niche survivors.

Browsers were meant to be a front-end to the whole net using lots of protocols and document formats. Multiple document formats kind of have survived with in-browser PDF display and a few similar things but the rest of what claims to be a browser today converges to only supporting a single protocol.

Demand real browsers! Finger, FTP, Gemini, Gopher, Mail, News, WWW, … can be so much more fun if you have a browser that can resolve links of all of them.

Every browser that cannot do this is broken by design.

I know at least two browsers that can do most of the list above.

Dig a bit…

“I know at least two browsers that can do most of the list above.”

…such as?

Lynx does a lot of that.

Kiss port 80 goodbye. Join the revolution on port 70.

I don’t normally wish ill fates on people, but…

All I know is that whoever invented the web popup needs to have their fortunes diminished until they are destitute and just wishing for the end to finally come and claim them, which hopefully takes a long, long time.

It did not “contribute to the betterment of mankind,” and is so abused as to be cliché.

I don’t know who said it, but “Man is basically self-defeating”, and you can sure see it with the internet and associated social media… or whatever else goes on out there. It’s nice that we can share information here on this site, for example, yet there are still hints of censorship when you don’t follow certain ways of thinking in one’s comments…. This site is minor compared to some others I’ve heard about.

While I know that Microsoft had “popups” before the web did, I’m not sure they invented it.

“Fortunes diminished” needs to be placed as a blink tag.

No mention of Pale Moon is complete without a link to the mess they made over someone trying to port it to OpenBSD:

https://archive.is/jwTab

https://github.com/jasperla/openbsd-wip/issues/86

Do we need a campaign to encourage website builders to design accessibility-first, with compatibility for low-spec hardware and browsers like IceCat, Lynx, etc.?

Disagree somewhat with graceful degradation and progressive enhancement. It’s highly dependent on your app and your audience. Over-engineering to cover nonexistent or barely existent use cases for your app isn’t worth the cost. A11y? 100% agree that you treat that as a first-class citizen, but you don’t have to rely on progressive enhancement principles to achieve that.

Dillo can do many of those via plug-ins here:

http://celehner.com/projects.html#dillo-plugins

Firefox’s current plug-in system doesn’t even allow adding extra protocol support properly anymore (some plug-ins use a web proxy approach).

The most obvious protest against bloated browsers is to use lightweight ones and show the webmasters that you do, via an (honest) User-Agent header. Unfortunately that doesn’t seem to have done much for me. I used to browse many websites far more easily in Dillo than I can today.

Posting (hopefully) from Dillo, where I always view hackaday.com (but hackaday.io makes it really hard).

IMO the issue of “standards” is a far less severe problem than is the demise of democratic publishing that the web once had infrastructure for.

Anyone old enough to remember dial-up Internet knows what I am talking about: your Internet provider gave you web hosting (even cable ISPs like Comcast did, to compete). This necessitated ISP web forums, where users would help each other get their content online. As ISPs were largely regional, you would actually connect to people you might already know or later find at a MeetUp.

And then we had Webrings and LinkExchange to allow users on different ISPs to link their conversations, regardless of ISP.

All of this infrastructure has been systematically discontinued, or purchased-then-killed so that the web could be molded back into the old television–>consumer paradigm.

Today, yes yes, you can get free web hosting, but there’s really no way to link up the way we used to. A lot of hosting providers do not even provide FTP. So if you wanted to make an Internet app that supports communities, publishing and promotion (webrings etc.), there’s really no common denominator. In a lot of ways, the web has regressed.

Given that both Chrome AND Firefox are getting killed by mobile apps (especially Facebook), it’s in their interest not to see this all progress into a walled garden.

I moved to high-speed internet in 2012, but have kept an account at my ISP since 2006. It had been dial-up, but I dropped down to a shell account. So I get email and keep my web page there.

But yes, it’s come a long way.

It’s funny: on your WildFox page you say “Mozilla’s Firefox web browser stood for, years ago: a light-weight, versatile and efficient web browser.”

Wow that’s a statement !

Until recently, and from the very beginning, Firefox was the paragon of bad memory management. So many times I had to reboot because of this horrible product. After two of surfing I could get 90% of my system reserved… forever. Even closing all tabs would not release a byte of memory. Firefox was a total catastrophe in memory management from the beginning. And all those years Mozilla systematically denied problems with its memory management, offering no help of any sort to their users about it. They said it was because of the extensions, but that was another lie, because Firefox did exactly the same thing with no extensions installed. They profited from the silence of average users, who, as usual, have no clue what to ask for and just eat whatever these shitty companies give them. Like kids with the music industry. Whatever you give ’em, they’re gonna eat it, and like it. It’s a business model…