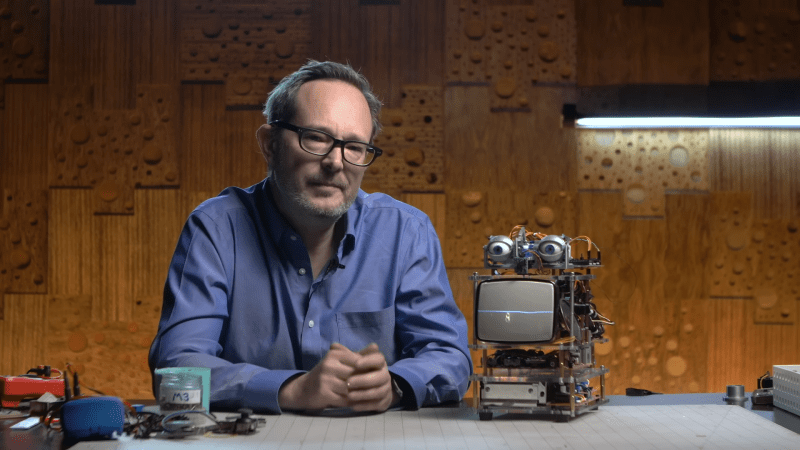

Today, we’re surrounded by talking computers and clever AI systems that a few decades ago only existed in science fiction. But they definitely looked different back then: instead of a disembodied voice like ChatGPT, classic sci-fi movies typically featured robots that had something resembling a human body, with an actual face you could talk to. [Thomas] over at Workshop Nation thought this human touch was missing from his Amazon Echo, and therefore set out to give Alexa a face in a project he christened Alexatron.

The basic idea was to design a device that would somehow make the Echo’s voice visible and, at the same time, provide a pair of eyes that move in a lifelike manner. For the voice, [Thomas] decided to use the CRT from a small black-and-white TV. By hooking up the Echo’s audio signal to the TV’s vertical deflection circuitry, he turned it into a rudimentary oscilloscope that shows Alexa’s waveform in real time. An acrylic enclosure shields the CRT’s high voltage while keeping everything inside clearly visible.

To complete the face, [Thomas] made a pair of animatronic eyes according to a design by [Will Cogley]. Consisting of just a handful of 3D-printed parts and six servos, it forms a pair of eyes that can move in all directions and blink just like a real person. Thanks to a “person sensor,” which is basically a smart camera that detects faces, the eyes will automatically follow anyone standing in front of the system. The eyes are closed when the system is dormant, but they will open and start looking for faces nearby when the Echo hears its wake word, just like a human or animal responds to its name.
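The tracking behavior described above can be sketched in a few lines. This is only an illustration, not [Thomas]'s actual firmware: the `FaceBox` type, the 0 to 255 coordinate range, the servo angle limits and the `mirror` flag are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FaceBox:
    """Hypothetical face bounding box, coordinates assumed to span 0..255."""
    left: int
    top: int
    right: int
    bottom: int


def lerp(lo: float, hi: float, t: float) -> float:
    """Linear interpolation between lo and hi for t in 0..1."""
    return lo + (hi - lo) * t


def eye_angles(face: FaceBox, frame_max: int = 255,
               pan_range=(60.0, 120.0), tilt_range=(70.0, 110.0),
               mirror: bool = True):
    """Map the center of a face box to (pan, tilt) servo angles in degrees.

    The angle ranges are made-up limits; a real eye mechanism would need
    its own calibrated values.
    """
    cx = (face.left + face.right) / 2 / frame_max
    cy = (face.top + face.bottom) / 2 / frame_max
    if mirror:
        # The camera looks back at the viewer, so left and right are swapped.
        cx = 1.0 - cx
    return lerp(*pan_range, cx), lerp(*tilt_range, cy)


def update_eyes(awake: bool, face: Optional[FaceBox]) -> dict:
    """Decide what the eyes should do for one update step."""
    if not awake:
        return {"lids": "closed"}          # dormant: eyes stay shut
    if face is None:
        return {"lids": "open", "pan": 90.0, "tilt": 90.0}  # look straight ahead
    pan, tilt = eye_angles(face)
    return {"lids": "open", "pan": pan, "tilt": tilt}
```

A face centered in the frame maps to the middle of both ranges, so the eyes look straight ahead, and the `awake` flag stands in for the wake-word trigger.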

The end result absolutely looks the part: we especially like the eye tracking feature, which gives it that human-like appearance that [Thomas] was aiming for. He isn’t the first person trying to give Alexa a face, though: there are already cute Furbys and creepy bunnies powered by Amazon’s AI, and we’ve even seen Alexa hooked up to an animatronic fish.

Thanks for the tip, [htky], [Kris] and [TrendMend]!

Just finished watching the video and boy, wasn’t that a video for the ages, from the cinematography to the storytelling and the sheer coolness of the final product. This is something I’ll never forget and will look back on. I wish I had a little buddy like this in my life.

Thanks for the kind words, David! I’m working on getting the build notes up on Hackaday.io!

I prefer Mycroft.

Although this looks really impressive, I’m from the “no snooping in da house” generation, so no Alexa or other thing for me. That said, it looks way too friendly to me. I think it should not sleep while idling, but sneakily peek around until it notices someone and then pretend to be sleeping. IMHO that is more accurate behavior. Or have the facial expression of HAL 9000…

Great job and prototype. I would prefer Alexa’s shorter sentences versus the diarrhea ChatGPT responds with. But that’s just me. The holiday lighting crowd has lots of software and experience in converting words to phonemes to facial expressions (the step beyond TTS). My preference would be animated eyes and an animated mouth rather than the animatronics and oscilloscope output, but admittedly that’s just my preference. For those wanting additional insight into the animatronic eyes, the holiday lighting crowd has tons of that as well; simply look for Halloween skull and eyes.

ChatGPT speech reminds me of that of a career politician or a business manager/executive: they speak incredibly well and with a tone of authority, but are not always truthful or precise with facts, and they parrot safe ideas in a way that’s comforting to hear rather than exhibiting wisdom.

Reading GPT text, I get the feeling that I do when looking at a fractal or playing a procedurally generated video game: at first it appears wonderful and infinite in complexity, but the deeper you go, the more repetition and patterns appear.

If the audio is in the vertical deflection, then the horizontal timebase is far too fast for lo-fi audio to display as “waves”. Usually it’s done the other way around, and the old horizontal output then needs a load inductance. Rotate the yoke 90 degrees and then the timebase is in the low voice range.

Glad it don’t look like me even as it responds to my name.

You’ve got a good eye, Echodelta. The audio signal is coming into the horizontal deflection, but I rotated the yoke 90 degrees, as you mention. Thanks for watching!
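To put rough numbers on the exchange above: the number of audio cycles drawn per sweep is simply the audio frequency divided by the sweep rate. The sketch below assumes nominal NTSC scan rates (about 15,734 Hz for the fast sweep and 60 Hz for the slow one) and a 300 Hz voice tone; the actual TV standard and audio content of the build are not stated, so treat these figures as illustrative only.

```python
# Assumed nominal NTSC sweep rates; the TV used in the build may differ.
FAST_SWEEP_HZ = 15_734.0   # line (fast) sweep
SLOW_SWEEP_HZ = 60.0       # field (slow) sweep


def cycles_per_sweep(audio_hz: float, sweep_hz: float) -> float:
    """How many full audio cycles fit into a single sweep of the beam."""
    return audio_hz / sweep_hz


if __name__ == "__main__":
    voice_hz = 300.0  # assumed representative voice frequency
    fast = cycles_per_sweep(voice_hz, FAST_SWEEP_HZ)
    slow = cycles_per_sweep(voice_hz, SLOW_SWEEP_HZ)
    print(f"fast sweep as timebase: {fast:.3f} cycles per trace")
    print(f"slow sweep as timebase: {slow:.1f} cycles per trace")
```

With the fast sweep as the timebase, each trace covers only a small fraction of one cycle, so no recognizable wave appears. With the slow sweep as the timebase, several full cycles fit on screen, which is why the audio goes to the fast deflection coil and the yoke is rotated.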

Amazing video and build, but no matter how cute it is, it’s still spying on you and your family.

I’m just curious… So what if it’s spying/listening? I think we all presume our web surfing history and emails are collected, and there’s always the thought that our cell phones are always listening. I gave up on the concept of privacy long ago. Now with the feds setting things up to go all digital currency, there’s another aspect of privacy out the door. Add DoorDash, Uber, grocery checkout, face recognition, and who really thinks privacy is a thing?

Resignation is no solution, though. That’s the quickest way for people to enslave themselves.

Oddly, much of this stuff is correlated through activity within an account, often tagged by email addresses, which are still very easy to get in large numbers. Having a separate account email for each gizmo (or fleet of that particular type) fends off at least some of this. You can ask “ThinkerersAlexa@gm@il.c*m” (which doesn’t exist of course, but you get the point). Likewise for online shopping, though that gets tedious.

Yes, of course a clever system could look at everything coming from one’s internet address, could winnow through all of the digital cruft and find some other useful inferences, but most of it is just a search for low-hanging-fruit looking to sell you on the notion that you’re not adequate without their hair product or digital gizmo.

Hm. I was under the impression that a “key word” is needed to activate the voice transmission to the servers of each digital assistant service.

That “key word” is the only voice recognition/pattern recognition that’s actually being done locally.

That being said, my information might be outdated. That’s how things were in the days when the Amazon Dot was first released and when Google listened for “Hey Google”, “Computer!” and some other word.

Is there any current information available about the matter? A simple network traffic monitor should tell at which point the device calls home.

Looks like something from Old World Blues, the Fallout: New Vegas DLC

Interesting that there was a lawsuit against Amazon and they were found to be guilty of some outrageous stuff related to Alexa, and yet I see tons of articles and videos basically advertising Alexa.

And no editor that makes any remark either.

Fun project – I like the answering element the old screen provides.

Tangential question: why do these projects on Hackaday never reference the work Cynthia Breazeal did at MIT? She was building interactive robots very similar to this 25 years ago – not networked, no voice recognition, but interactive and expressive – but I rarely see any reference to her work…

For those who are unfamiliar, it’s worth the time to check out Kismet: https://robotsguide.com/robots/kismet/

Would you like to share the code for the face-detecting module for Arduino?