Within the Artificial Intelligence and natural language research communities, Lisp has played a major role since 1960. Over the years since its introduction, various development environments have been created that sought to make using Lisp as easy and powerful as possible. One of these environments is Interlisp, which saw its first release in 1968, and its last official release in 1992. That release was Medley 2.0, which targeted various UNIX machines, DOS 4.0, and the Xerox 1186. Courtesy of the Interlisp open source project (GitHub), Medley Interlisp is available for all to use, even on modern systems.

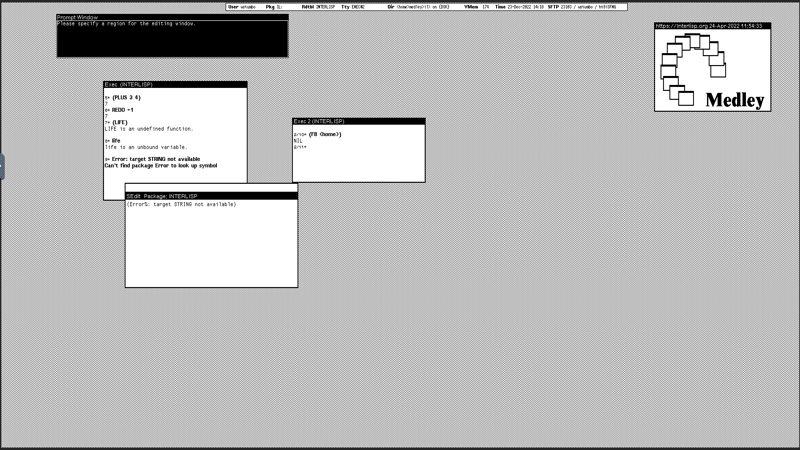

The documentation contains information on how to install Medley on Linux, as well as on Mac and Windows (via WSL1 or WSL2). For those just curious to give things a swing, there’s also an online version you can log into remotely. What Medley Interlisp gets you is an (X-based) GUI environment in which you can program in Lisp: essentially an IDE with a debugger and DWIM (Do What I Mean), the (in)famous auto-correction tool for simple errors such as typos. Much like the derived version in Emacs, DWIM seeks to automatically fix simple issues like misspellings without forcing the developer to fix them and restart the compilation.
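To give a rough feel for the kind of correction described above, here is a minimal sketch in Python. It is not how DWIM is actually implemented; it only assumes a hypothetical table of known function names (`KNOWN_FUNCTIONS`) and uses the standard library's `difflib` to pick the closest match for a misspelled name.

```python
import difflib

# Hypothetical table of names the environment already knows about.
KNOWN_FUNCTIONS = ["car", "cdr", "cons", "append", "reverse", "length"]


def suggest_correction(name, known=KNOWN_FUNCTIONS, cutoff=0.6):
    """Return the closest known name for a possibly misspelled one, or None."""
    if name in known:
        return name  # nothing to correct
    # get_close_matches ranks candidates by string similarity, best first.
    matches = difflib.get_close_matches(name, known, n=1, cutoff=cutoff)
    return matches[0] if matches else None


if __name__ == "__main__":
    for typo in ("revrese", "lenght", "zzzz"):
        print(typo, "->", suggest_correction(typo))
```

A real tool would presumably also look at context (for instance, whether the name sits in function position) before rewriting anything; this sketch only covers the spelling lookup.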

Thanks to [Pixel_Outlaw] for the tip and for also telling us about a project they wrote using Medley Interlisp for the Spring Lisp Game Jam 2023 titled Interlisp Fifteen Puzzle.

I’m thurprithed that Lithp ith thtill thomething people are interethted in uthing!

B^)

Lithp has grown into a family of programming languages. At work we have a team that writes web services in pure Clojure. You’ll find pockets of Common Lisp in strongly scientific circles. You’ll find GNU Guile in a few open source projects. ;)

You can find a chart of some dialects and a timeline in the wiki.

InterLisp spanned a large section of the early AI era, quite interesting in that regard.

https://en.wikipedia.org/wiki/Lisp_(programming_language)

I’ve done serious production work in clojure. Fortunately it fit within a very FP shop so it was a good fit. I want to revisit the combination of vert.x, kotlin, clojure and react.js. Outside of an existing heavy FP discipline I don’t know if I’d be able to use it.

Well, from the beginning Lisp was not supposed to be a programming language but a sort of pseudo-language; then the programmers who were tasked with translating it to machine code happened.

Since it is an early, small, interpreted language, it has been made suitable for microcontrollers, even standalone units http://www.ulisp.com/show?2L0C

Also, I suppose, AI research and the education you need still has examples in lisp.

(Like most things on hackaday, because it is fun.)

It makes more sense if you align problems according to the calculus of Alonzo Church more than that of Turing. You have to be in a deep mindset of FP for it to even make sense, but when it does it explodes with utility and expressiveness.

Lisp always was and still is the most powerful language in existence. Those who avoid it do it at their own peril.

It’s important not to think of Lisp as an interpreted language. Almost from the beginning, Lisp on the IBM 704 had both a tree-walking interpreter and a native AOT compiler. Interlisp provides a bytecode compiler, trading off speed for compactness. Across the entire spectrum of Lisps today, we have tree-walking interpreters, native compilers, bytecode compilers with and without JIT (including JVM, CLR, and private bytecode), and compilers to C.
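As a rough illustration of the difference between a tree-walking interpreter and a bytecode compiler, here is a toy sketch in Python. The instruction names (`PUSH`, `CALL`) and the tuple representation are made up for this example and have nothing to do with Interlisp's actual bytecode.

```python
import operator

# Operators shared by both execution strategies.
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def evaluate(expr):
    """Tree-walking interpreter: recurse directly over the nested expression."""
    if isinstance(expr, (int, float)):
        return expr
    op, left, right = expr
    return OPS[op](evaluate(left), evaluate(right))


def compile_expr(expr, code=None):
    """Compile the same expression into a flat list of stack instructions."""
    if code is None:
        code = []
    if isinstance(expr, (int, float)):
        code.append(("PUSH", expr))
    else:
        op, left, right = expr
        compile_expr(left, code)   # operands first ...
        compile_expr(right, code)
        code.append(("CALL", op))  # ... then the operator
    return code


def run(code):
    """Tiny stack machine that executes the compiled instruction list."""
    stack = []
    for instr, arg in code:
        if instr == "PUSH":
            stack.append(arg)
        else:  # "CALL": pop two operands, push the result
            right = stack.pop()
            left = stack.pop()
            stack.append(OPS[arg](left, right))
    return stack.pop()


if __name__ == "__main__":
    expr = ("+", 1, ("*", 2, 3))  # the Lisp form (+ 1 (* 2 3))
    print(evaluate(expr))
    print(compile_expr(expr))
    print(run(compile_expr(expr)))
```

Both paths compute the same value; the compiled form is a compact, linear sequence that a simple loop can execute without re-walking the tree.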

“and compilers to C”

Do some C compilers compile to platform machine code … instead of calls to run-time libraries?

“and compilers to C”

Isn’t that a transpiler, then?

With C standards that ends up being the difference between statically linked and shared object runtimes. Consider golang binaries, you can get statically linked C like that, sort of.

(too many ((parenthesis) fickin’ everywhere)))

What is the actual issue with having one set of parentheses used liberally?

I wrote Lisp professionally for 11 years back in the 80’s and 90’s using Symbolics computers. To this day I still use Emacs. Every now and then I’ll pause to write some small tool in Lisp (Emacs is extensible in Lisp), then when I go back to whatever language I’m supposed to program in I spend half an hour wondering why it’s not as convenient as Lisp. If all you know about Lisp is what you learned in a “Survey of Programming Languages” in college or what you’ve read in articles, you won’t understand this post.

Or if you do understand your post, you wonder why functional languages add so much ceremony. I’d like a seamless merging of kotlin & clojure and an async framework like vert.x.

Since when does Lisp have anything to do with functional languages? It can be functional if you really want it to be (though I wonder why anyone would need that), just as it can be any other kind of language you can imagine, at any possible level of abstraction.

I am one of those guys who learned about Lisp in “Programming Language Landscape” (I think that is what the course was called) in the first half of the 80s. That was my only experience with Lisp. And … I haven’t missed Lisp at all :) . Pascal and Fortran were where all the action was. Then ‘C’ came of age, and I used it for most of my career.

I’m surprised to read about LISP and AI, but the terms “expert system” and “neural nets” aren’t even mentioned here. How come? 🤔

You may be surprised if you don’t know the history behind Lisp.

Lisp is a foundational language for early Artificial Intelligence. (Along with Prolog)

That’s partially because of the extreme flexibility of the language, especially around generating new syntactic forms. You extend Lisp through Lisp. Often you don’t wait for language features; you add them. Lisp looks strange because it’s made out of lists itself. You can think of them a bit like Python tuples (foo, bar, baz), only in Lisp the list is (foo bar baz) and foo is a function that gets applied to the parameters bar and baz.

Amusingly enough, here is an old Lisp “expert system” with your username, written by Symbolics.
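To make the "code is lists" idea a little more concrete, here is a toy sketch in Python that uses nested lists in place of Lisp forms. Everything in it is an assumption for illustration: the `ENV` and `MACROS` tables, the `unless` form, and the evaluator itself are hypothetical and far simpler than any real Lisp.

```python
import operator

# Built-in functions available to the toy evaluator.
ENV = {"+": operator.add, "*": operator.mul, "<": operator.lt}

# Macros receive their argument forms unevaluated and return a new form.
MACROS = {}


def expand_unless(condition, body):
    """Rewrite (unless c x) into (if c None x)."""
    return ["if", condition, None, body]


# Adding a new syntactic form is just registering a list-rewriting function.
MACROS["unless"] = expand_unless


def evaluate(form):
    """Evaluate a form: atoms are themselves, lists are applications."""
    if not isinstance(form, list):
        return form
    head, *args = form
    if head in MACROS:
        # Expand first, then evaluate whatever the macro produced.
        return evaluate(MACROS[head](*args))
    if head == "if":
        condition, then_form, else_form = args
        return evaluate(then_form if evaluate(condition) else else_form)
    # Ordinary call: the head names a function applied to evaluated arguments.
    return ENV[head](*[evaluate(arg) for arg in args])


if __name__ == "__main__":
    print(evaluate(["+", 1, ["*", 2, 3]]))         # like (+ 1 (* 2 3))
    print(evaluate(["unless", ["<", 2, 1], 42]))   # like (unless (< 2 1) 42)
```

The point of the sketch is that `unless` was added without touching the evaluator's core: the new form is an ordinary function that takes lists and returns a list.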

https://www.reddit.com/r/lisp/comments/704clr/symbolics_joshua_expert_system_operation_with/

Lisp is a language you won’t really get until you put serious time into it. Especially with tools that understand and help you balance and work with the structure of it. You can’t just expect to write it like you would Python for example. It’s a totally different branch of the language tree with nuances.

Lisp is about symbolic computing. Expert systems are only tangentially related, think of the more interesting cases, such as CAS, proof assistants, knowledge bases, etc.

Expert systems were kind of a dead-end as well. Doing those languages no favors when they passed on.

Expert systems, like many new “technologies” of the 1980’s and 90’s, were oversold by the press and marketing groups. I believe that they “died prematurely” due to failing to live up to the externally provided “hype.” I think that they still can be a better way to provide “applied knowledge” in limited, bounded, domains than GPT-style AI.

For example, I was a co-developer (other than the “domain experts”) of two Expert Systems in biotechnology in the 1980’s: SpinPro and PepPro, both at Beckman Instruments (now Beckman Coulter). SpinPro advised on the design of procedures using preparative ultracentrifuges to isolate/purify subcellular components.

PepPro advised on the design of procedures for peptide synthesis (chemically assembling small proteins from individual amino acids).

These domains had few people with expert-level knowledge, more people who needed these abilities than the experts had availability to assist, and no corpus of examples to use for ML. I believe that there still are many such problem-spaces for which rule-based expert systems could be valuable (and, perhaps, even profitable.)

Both SpinPro and PepPro were implemented in Interlisp (Medley and its predecessor versions) using Xerox AI Workstations, and then compiled for PC (DOS) systems. The extensibility of the Lisp language made it possible to have the implementation be executable in both the Interlisp and PC Lisp environments.

As an aside, rule-based expert systems, since they should have strictly bounded domains, have a low probability of “hallucinating” or contributing to concerns of “AI run amok.”
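For readers who have never seen one, the core of a rule-based system can be sketched in a few lines of Python. This is only a toy forward-chaining loop with made-up rules from an unrelated domain; it does not reflect how SpinPro or PepPro were built.

```python
# Each rule pairs a set of required facts with the fact it concludes.
# The rules and fact names are invented purely for illustration.
RULES = [
    ({"has_feathers", "lays_eggs"}, "is_bird"),
    ({"is_bird", "cannot_fly", "swims"}, "is_penguin"),
]


def forward_chain(facts, rules=RULES):
    """Apply rules repeatedly until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires only when all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


if __name__ == "__main__":
    observed = {"has_feathers", "lays_eggs", "cannot_fly", "swims"}
    print(sorted(forward_chain(observed)))
```

The loop can only ever add conclusions that appear in the rule table, which is one way to picture the bounded-domain property mentioned above.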