Data retention is a funny thing. Atmel will gladly tell you that the flash memory in an ATmega32A will retain its data for 100 years at room temperature. Microchip says its EEPROMs will retain data for over 200 years. And yet, humanity has barely had a good grasp on electricity for that long. Heck, the silicon chip itself was only invented in 1958. EEPROMs and flash storage are altogether younger themselves.

How can these manufacturers make such wild claims when there’s no way they could have tested their parts for such long periods of time? Are they just betting on the fact you won’t be around to chastise them in 2216 when your project suddenly fails due to bit rot?

Well, actually, there’s a very scientific answer. Enter the practice of accelerated wear testing.

Faster, Faster Now

EEPROM and NAND flash storage are both immensely important technologies. EEPROMs are used to store firmware for all kinds of devices, as well as things like cryptographic keys and other such largely-static data. Most EEPROMs have data retention ratings for many decades, if not centuries. Flash can be used in much the same way, but it’s also used as mass storage. It’s not quite as good at retention as EEPROM is. Some parts are rated for only a few years if left to sit, particularly at elevated temperatures. Other flash parts can hang on to data for much longer if designed to do so.

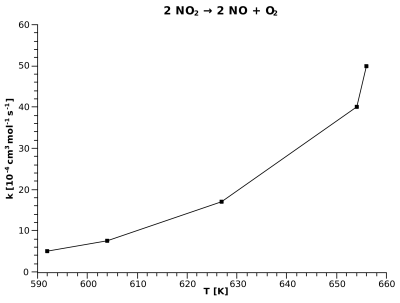

The question is, though, how we determine these numbers. Given the impracticality of real-time testing over a century, the industry instead relies on accelerated life testing methods. These techniques involve subjecting memory devices to heightened stress factors, such as elevated temperatures, to expedite the aging process. The underlying principle is based on the Arrhenius equation, which describes how the rate of a chemical reaction climbs steeply, roughly exponentially, as temperature rises.

The degradation of memory cells is, fundamentally, caused by chemical reactions that take place over long periods of time. Over time spans of decades or centuries, plastics degrade, materials oxidise, and all kinds of other chemical reactions go on. These chemical reactions can damage the tiny structures of a silicon chip responsible for storing data as little electrical charges. It’s almost like when you leave an apple out and it rots away; the phenomenon is often referred to as bit rot.

As per the Arrhenius equation, you can thus model a long period of memory degradation in a much shorter time by elevating the temperature, because the reactions occur at a faster rate. If you’ve studied physics, this should all be pretty familiar. Temperature is really just the rate at which atoms are wiggling around. Thus, if they’re hotter, they’re wiggling around more and it makes sense that reactions would occur faster, what with all these atoms and molecules bobbling about. This explanation is simplified, of course, and won’t get me invited to any real science conferences, but it serves our purposes here.
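To put rough numbers on the idea, here is a minimal Python sketch of the Arrhenius acceleration factor, the ratio between the reaction rate at the bake temperature and the rate at the storage temperature. The activation energy of 0.6 eV and the 25 C and 125 C temperatures are illustrative assumptions, not figures from any datasheet; real values depend on the failure mechanism and the part.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


def acceleration_factor(ea_ev, use_temp_c, stress_temp_c):
    """Arrhenius acceleration factor between a use and a stress temperature."""
    t_use = use_temp_c + 273.15        # convert Celsius to kelvin
    t_stress = stress_temp_c + 273.15
    exponent = (ea_ev / BOLTZMANN_EV_PER_K) * (1.0 / t_use - 1.0 / t_stress)
    return math.exp(exponent)


# Assumed, illustrative inputs: Ea = 0.6 eV, 25 C storage, 125 C bake
af = acceleration_factor(0.6, 25.0, 125.0)
print(f"Acceleration factor: {af:.0f}")
```

With these assumed inputs, each hour in the oven stands in for a few hundred hours on the shelf. The result is very sensitive to the activation energy, which is why picking that number is the hard part.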

Thus, to test a device for long-term data retention, you merely need to place it in a hotter-than-typical environment and run checks on how it holds data over time. Naturally, this is done with many samples with scientific rigor, enabling statistical insights to be made. Obviously, there are limits, too. There’s no point testing EEPROMs at 500 C, where they’ll melt and burn in mere seconds, retaining precisely zero data. However, within the realistic limits of the part, significant insights can be made.

By observing the effects of accelerated aging, predictions can be made about the long-term retention capabilities of these devices. After accelerated aging, the EEPROM and flash memories undergo rigorous data retention tests. The outcomes of these tests are extrapolated to estimate how the devices would perform over extended periods at normal temperatures. This extrapolation, while scientifically grounded, is not without its uncertainties and relies heavily on sophisticated statistical models.
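As a rough illustration of that extrapolation step, the sketch below fits an activation energy from time-to-failure at two bake temperatures and then scales the result down to room temperature. The bake results are hypothetical numbers invented for the example, and a two-point fit is a deliberate simplification; a real analysis would use more temperatures, many samples per temperature, and a proper statistical model.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


def fit_activation_energy(temp1_c, life1_h, temp2_c, life2_h):
    """Estimate Ea (eV) from time-to-failure at two stress temperatures."""
    inv_t1 = 1.0 / (temp1_c + 273.15)
    inv_t2 = 1.0 / (temp2_c + 273.15)
    # Arrhenius: ln(life1 / life2) = (Ea / k) * (1/T1 - 1/T2)
    return BOLTZMANN_EV_PER_K * math.log(life1_h / life2_h) / (inv_t1 - inv_t2)


def extrapolate_life(ea_ev, ref_temp_c, ref_life_h, use_temp_c):
    """Scale a lifetime measured at ref_temp_c to use_temp_c via Arrhenius."""
    inv_ref = 1.0 / (ref_temp_c + 273.15)
    inv_use = 1.0 / (use_temp_c + 273.15)
    return ref_life_h * math.exp((ea_ev / BOLTZMANN_EV_PER_K) * (inv_use - inv_ref))


# Hypothetical bake results, invented for illustration only:
# 2000 h to failure at 150 C, 300 h to failure at 200 C
ea = fit_activation_energy(150.0, 2000.0, 200.0, 300.0)
life_h = extrapolate_life(ea, 150.0, 2000.0, 25.0)
print(f"Fitted Ea: {ea:.2f} eV")
print(f"Extrapolated life at 25 C: {life_h / 8766:.0f} years")
```

Nudging either hypothetical lifetime by a modest amount moves the extrapolated figure by decades, which is a fair picture of how much weight the model itself carries.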

While temperature plays a pivotal role in accelerated aging, other factors like voltage variations and humidity are also considered to simulate various stress conditions. This holistic approach ensures a more comprehensive assessment of long-term data retention capabilities.

Due to inherent variations in memory cells, a statistical approach is employed in these tests. By testing a large batch of devices and analyzing the average behavior, more accurate predictions of long-term performance are made. This statistical analysis is crucial in understanding the overall reliability of memory technology.
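To see why batch size matters, consider a test where every sample passes. A simple binomial argument still only lets you claim an upper bound on the per-device failure probability, and that bound tightens as the batch grows. The sketch below is one standard way to compute it, not necessarily what any particular manufacturer does, and the batch sizes and the 90% confidence level are assumptions chosen only to show the trend.

```python
def zero_failure_upper_bound(sample_size, confidence):
    """Upper bound on per-device failure probability after a test with no failures.

    Solves (1 - p) ** sample_size = 1 - confidence for p.
    """
    if sample_size <= 0 or not 0.0 < confidence < 1.0:
        raise ValueError("need sample_size > 0 and 0 < confidence < 1")
    return 1.0 - (1.0 - confidence) ** (1.0 / sample_size)


# Hypothetical batch sizes, chosen only to show the trend
for n in (10, 100, 1000):
    bound = zero_failure_upper_bound(n, 0.90)
    print(f"{n:5d} devices, 0 failures -> p <= {bound:.4f} at 90% confidence")
```

Ten clean samples tell you very little; a thousand start to say something useful about the population.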

Key to these longevity tests is the monitoring of specific failure mechanisms, such as charge leakage in memory cells. Understanding these failure modes is essential in predicting data loss and devising strategies to mitigate such risks. These specific failures will occur on their own timeframes and will be more subject to certain conditions than others.

It bears noting that accelerated aging methods aren’t just used for assessing flash memory and EEPROMs; the techniques are applied to everything from archival papers to inks and other such products. These methods aren’t without their negative points, though. Criticisms of these methods revolve around the fact that different chemical reactions can occur at different temperatures, which spoils the correlation between an accelerated aging procedure and what would naturally occur over time at a lower temperature. Correlation can at times be poor, and for many items, particularly those invented recently, we simply haven’t had the chance to compare accelerated aging results with what occurs in real time. At the same time, with the rate that technology moves, it raises the question: will anyone in 2100 care if an ATmega can really store data on an EEPROM for 100 years?

Despite the rigor and sophistication of these testing methodologies, predicting the performance of memory devices over a century carries inherent uncertainties. Seemingly minor manufacturing changes or unforeseen environmental factors can impact the accuracy of these predictions. Understanding the physics and chemistry at play is key to accurately modelling long-term aging in more human-compatible time frames. Even still, our best models are just that. Until somebody actually checks a given EEPROM or flash part in a century, we can’t know for certain how accurate these models really are.

At the end of the day, most of us don’t have to worry too much about storing data on centuries-long time frames. For those that do, accelerated aging techniques are a highly useful tool in understanding how best to preserve data on those time scales. If you take one thing away from all this, just remember that leaving your flash drives or microcontrollers on a hot surface is going to trash your data far quicker than if you left them somewhere cooler. If your PhD thesis is currently sitting on an old flash drive in a hot car, you’d be best advised to make multiple backups and store it somewhere wiser.

“There’s no point testing EEPROMs at 500 C, where they’ll melt and burn in mere seconds”

Only the plastic package would be damaged. Die, lead frame and bond wires will probably survive, their melting points are well above 500°C.

You might run into issues with differential expansion. A typical lead frame will be nearly 1% bigger at 500°C compared to room temperature. Tin plating on pins will melt well below 500°C. Aluminium bond wires will be quite soft too. Everything is going to oxidize extremely fast at that temperature too.
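A quick sanity check of that expansion figure, as a sketch only: it assumes a copper-alloy lead frame with a constant expansion coefficient of about 17e-6 per kelvin, which is a typical textbook value rather than anything measured on a real part.

```python
def linear_expansion_fraction(cte_per_k, start_temp_c, end_temp_c):
    """Fractional length change, assuming a constant expansion coefficient."""
    return cte_per_k * (end_temp_c - start_temp_c)


# Assumed CTE for a copper-alloy lead frame, roughly 17e-6 per kelvin
growth = linear_expansion_fraction(17e-6, 25.0, 500.0)
print(f"Length change: {growth * 100:.2f}%")
```

Under that assumption the growth comes out a little under 1%, in line with the estimate above. A low-expansion lead frame alloy would grow noticeably less.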

In the Agilent (now Keysight) Reliability Test Lab we did the testing on bare dies that were sent straight down from the Fab upstairs. No plastic cases/shells to melt, worked great.

Looking at it the other way round, should I keep my backups on non-magnetic media in a freezer to prolong the time before failure?

Perhaps not. My fridge/freezer has huge temp swings resulting from opening the door. Temperature cycling can cause the same failures even when the swing is only from very low to low temperature.

“My fridge/freezer has huge temp swings resulting from opening the door”

Chest freezers are designed to limit just that.

I have a chest fridge, built from a chest freezer and an external industrial thermostat.

I have this fridge set to 0-1°C; that way I can hold meal prep and fresh fruit and greens for over one week, much better than in a normal fridge.

Problem is that most new fridges have inverters and electronics and apps… which interfere with an external thermostat in the power cord. Best is to buy a commercial rated product.

I only found one vendor who has such a refrigerator setting out of the box, but it does not have a C (Ex-A+++) energy consumption class rating.

RE “…..My fridge/freezer has huge temp swings resulting from opening the door….” The item could also be stored in an “insulated container” that would drastically slow down such temperature changes, stretching them out to days or longer if desired.

“My fridge/freezer has huge temp swings resulting from opening the door.”

The largest and longest swings will be from the self defrosting cycles which most freezers included with refrigerators will have, at least in the US.

This. 👆. I have two freezers that I monitor with DS18B20 probes and have seen temps go up to 28F from 0-4 during defrost cycles

1. Did you check your DS18B20s with a calibrated thermometer? https://github.com/cpetrich/counterfeit_DS18B20

Mine went to the rubbish instantly, off by 3°C and nonlinearly so. I use the TSic 306 in TO92, which I could actually get, and at a reasonable price.

2. Can you see in your logs how the freezers trigger such a cycle? Is it time based, temperature based, temperature gradient based, temperature rate based?

As long as they haven’t absorbed too much moisture. You don’t want ice making things break.

In 2100 there will be retro-hackers looking back at this stuff.

If they can read the eeproms, they can laugh at our style of programming :)

I’d be happy if they did; it’s a small price to pay if it means Idiocracy hasn’t become reality by then.

I think there are multiple factors. It’s really hard to say.

Maybe it also depends on if it’s an optimistic or pessimistic point of view (maximum lifetime or minimum lifetime).

There’s the EPROM itself, of course.

Then there’s the type of programming sequence.

There are multiple ways to program an EPROM.

For example, using the standard 100 µs pulse (one pass).

Or by using multiple passes.

Or using a proprietary pulse sequence that the EPROM manufacturer recommends.

Then, there’s voltage levels.

You can slightly vary the programming voltage, with different results.

If the EPROM had previously been used and erased by UV light, there’s a chance that the erasing was too heavy or too weak (exposure was too short, crystals didn’t “grow back” entirely).

Then there’s the material itself.

If it’s cheap, UV rays or other forms of radiation may cause bit rot of some sort or bits to toggle.

That might be a problem for satellite projects (ham sats). Or sea/balloon based payloads crossing the South Atlantic Anomaly.

Anyway, these are just my two cents. 🙂

It’s hard to make predictions without knowing the conditions of an EPROM.

A synthetic calculation based on a generic model doesn’t cut it.

It’s like with old computer media (datasette, 5.25″ floppies).

According to the generally accepted opinion, they may last 10 years or so.

In practice, though, they do last about 40 years under good storage conditions.

Same goes for coin cell batteries. They’re said to last 10 years, at best.

But in reality, they can last 30 years, depending on the use cases.

For example, NES and Game Boy cartridges have them for retaining SRAM data and draw very little current, so they may still have power.

If a real-time clock is also present, however, the battery could also be depleted already.

It’s the small things that may make a difference, sometimes. 🤷‍♂️

PS: My bad. My eyes did error correct the term EEPROM (two EEs).

It’s like the “the the” typo that is often overlooked.

UV light doesn’t regrow crystals. It ionizes the silicon, which makes it conductive, to allow the gate to discharge

He might have mixed it up with bipolar PROMs. In early variants sometimes the blown fuses would grow back.

And it’s the photoelectric effect that lets electrons escape from the floating gate, not ionization.

“Temperature is really just the rate at which atoms are wiggling around. Thus, if they’re hotter, they’re wiggling around more and it makes sense that reactions would occur faster, what with all these atoms and molecules bobbling about.”

Yes, but a warmer temperature may also make things more flexible, more elastic.

A low temperature isn’t always best, thus. It generally makes things brittle.

Organic cells may take damage during freezing process (membrane breaks etc), that’s why cryogenics is still tricky.

Similarly, LC displays do break in an icy environment. It’s all relative, maybe.

Electronics simply have an ideal operation temperature, too.

Cooling things down below a certain temperature won’t be healthy, either.

It will cause weird effects, even.

Long story short: you can’t.

Such figures are meaningless, because you cannot know whether any given EEPROM has defects or manufacturing deviations that lead to its demise in 20 years’ time – or maybe it means the death of just one bit out of millions.

It’s the CD-R story in a different form factor. A manufacturer can say their accelerated aged test articles indicate a theoretical lifespan of 100 years, but they cannot guarantee all discs are the same, so it’s all pointless. A good percentage of your discs will be coasters within 1-2 years.

Absolutely. In a previous job we burnt tens if not hundreds of thousands of optical disks. Aside from the “medical” CD-Rs, a surprising proportion were showing errors within a year or two, with many completely unreadable.

It all comes down to how closely matched your manufacturing tolerances are.

For CD-Rs it was a complete joke. For the most part, no consumer could check whether any disc could actually last 100 years, or even 10 years, since by the time you notice your disc is broken it’s already off the market, replaced by a different model from some different company. All the manufacturers of CD-Rs just put out whatever junk they managed to produce without a care in the world, because the worst outcome was that the rare customer who actually complained would simply get their discs replaced with the same junk.

Well the media might be perfectly fine, how about finding a machine that can play it. Good luck in finding a machine that you can trust to put your precious discs in. Or finding a system that actually boots reliably and also offers a way to transfer the data to a modern environment. I’m sure it can be done, but it won’t be easy.

I always looked at it with the idea that a disc with a theoretical lifespan of 100 years will still be fine after 10 years. By then technology has progressed to a point where you migrate to a different type of media. It is not unlikely that the device to play it all back with is the limiting factor. Even if you have thousands of CDs in a perfect state, if it is your drive that fails you have a problem.

Is there a difference if the EEPROM is powered 100 years? Does it wear out faster than without power while its temperature is kept equal?

Is the EEPROM data refreshed every time it is powered up or does a refresh happen only when data is written or read?

It’s a simulation. Most likely they miss a little tiny factor and the device is long dead in 100 years.

I wonder what’s worse for EEPROM: constant high temperature or frequent temperature swings.

Both are terrible, high temps will degrade the IC faster, temp swings will reveal or cause solder defects faster.

Well, what if you take flash EEPROM and replace the transistor latches with just a grid of literal nanoswitches? That removes propagation delays and internal resistance and capacitance, other than from the diode network and transistor output buffers.

The switches would actually act like relays, so write times would be based on a settle down period when writing a word to memory, and setting and resetting can either be achieved electrostatically or magnetically or both in tandem.

That’s essentially what a DLP multi-mirror chip does, to a degree. How much data can a 4K DLP chip store, with one subpixel per bit?

Roughly 30 megs for DCI 4K, since you have roughly 9-10 million pixels, each typically made of 3 or 4 subpixels storing raw binary data; more if you use multiplexed data, compression and software interpolation.

It might be RAM that would actually work on a quantum computer: use a scanning laser to read out the bits, and different wavelengths could read different sets of bits.

One question that I can’t seem to find an answer to, and which I would be very grateful if anyone could answer:

For a typical SSD, if I write a file and don’t touch it (the file), how long afterwards would the file still be fully intact, with the computer typically powered up perhaps at least once a month for say at least an hour? How about the same question, but if the computer remains unpowered and sitting on the shelf? In both cases, assume normal indoor air temperatures.

It depends on the technology – QLC/MLC/SLC/etc. Each cell is a bit like a capacitor, and the electric charge last stored when data was written will eventually leak out and the data will be lost. Powering up the computer makes no difference because the charge is only set when data is written – so if you wipe the drive and rewrite the data once a year, it will remain viable for a LOT longer than writing it once and never touching it again. But of course each write causes a tiny bit of deterioration, so rewriting it every hour will wear out the “capacitors” much sooner and after a few years of this, the charges will leak out a few minutes after being written. I don’t have any figures but a good quality SLC drive should keep data written once for at least 20 years, and less for MLC and less again for QLC. Most data hoarders recommend making a complete copy of all your data at least every 5 years, and of course having multiple copies of the data in case some devices fail.

Basically, don’t rely on any one storage device holding on to the data – keep multiple copies and make new copies of those every few years.

The cost of higher cell density is more reliance on the controller to handle error correction.

When powered, most SSD controllers will scrub data when idle. If it detects a bit flip, it can apply error correction, mark the block bad, and rewrite the contents to a free block. This is why it’s recommended to use TRIM and keep a percentage of the SSD free.

The same data stored to different SSD flavors would probably be fine over the course of 20 years if powered on periodically.

Here’s a question that I haven’t been able to find an answer to, and hopefully someone here can answer:

With a typical SSD at room temperature, how long will a typical file remain accessible with no errors if the file hasn’t been accessed and the drive has been powered on for a few hours each week? What if the SSD has been sitting on a shelf, unpowered?

Well, sitting on the shelf at ‘normal’ indoor temps, from what the ‘internet’ says, an SSD that has exceeded its TBW rating will start losing data in one year. It depends on ‘usage’ and type of SSD. The numbers I find are 3-5 years, some say even 10 years if barely used. Bottom line is “don’t depend on SSDs for long term storage” and if you do, ‘recycle’ your SSD backups to ‘refresh’ them (power them on at least) periodically. I personally use portable HDDs (spinning rust) for my yearly backups.

That’s yearly ‘off site’ backups (relatively long term backups).

And you’ll still be salty. :)

So I gather, using a computer which contains an SSD weekly and backing the SSD up to an off-line HDD every 6 months is not completely adequate, as that is only going to “read” all the contents of the SSD. Since the cells will not be “written” to or the capacitors recharged, data may start to melt away. To prevent this one should then restore the same backup data from the HDD to the SSD immediately after the backup, correct? But then I read that the SSD controller does some refreshing. Is that not trustworthy? Does the OS like Windows 11 do any refreshing? Is that not trustworthy?

What % of data really needs to be preserved for 100 years?

If you’ve never seen a chair testing machine in person, you’re missing out.