Since ELIZA was created by [Joseph Weizenbaum] in the 1960s, its success has led to many variations and ports being written over the intervening decades. The goal of the ELIZA Archaeology Project, run by teams from Stanford, USC, Oxford and other universities, is to explore and uncover as much of this history as possible, starting with the original 1960s code. As noted in a recent blog post by [Anthony Hay], most of the intervening ‘ELIZA’ versions seem to have been inspired by the original rather than being accurate replicas or extensions of it. This raises the question of what the original program really looked like, a question which wasn’t answered until 2020, when the original source code was rediscovered.

A common misconception about ELIZA is that it was written in Lisp, but it was actually written in MAD-SLIP: MAD is an ALGOL 58-inspired language, and SLIP (Symmetric LIst Processor) is an extension library written by [Weizenbaum], first for Fortran and then for MAD and ALGOL. The original code was ultimately found in the bowels of the MIT archives and is now finally available for the world to see.

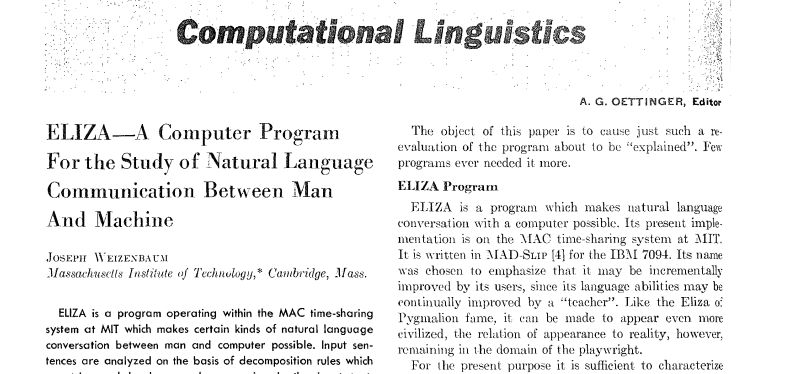

This version of ELIZA is from 1965 and predates the publication of [Weizenbaum]’s paper in the January 1966 issue of Communications of the ACM, in which he details the workings of the code and gives a partial listing. Thanks to some archive spelunking, we can now once again see the full code as half a century of ELIZA history is pieced back together. Project member [Jeff Shrager] would also like to remind us that the team very much welcomes assistance from the community in this effort.

Thanks to [Jeff Shrager] for the tip, as well as his efforts on this archaeological project.

ChatGPT is embarrassed by its primitive ancestors.

That source code is hard to read.

It should be.

It was hard to write.

:-)

The source code is available as a text file, annotated and unannotated. If there is sufficient interest I’ll put it on my website. As a note, I am a member of a group of academic and non-academic researchers investigating ELIZA. We are writing a book on ELIZA which we expect will be published by MIT Press sometime in 2025.

In my experience, a similar algorithm seems to exist in some parts of some of the new machines. If one includes words connected with sensitive issues, the response seems ELIZA-like and ignores the context.

They have to have one to fix the errors and censor the machine, because they can’t control what the core algorithm will output. There are teams of people adding exceptions and corrections as they go.

I remember typing in the program from the most influential computer book to have ever been written: More Basic Computer Games by David Ahl. ;)

It taught me how to use DATA statements to my advantage.

https://www.atariarchives.org/morebasicgames/showpage.php?page=56

I wrote that program!

:-)

BTW, the history at the end of that page is wrong in a couple of ways. First off, the original wasn’t written in Lisp (but that was a common misunderstanding at the time). Second, I didn’t “convert” it from Lisp (or anything else) to BASIC; I just sort of made it up. And, TBH, my knock-off is pretty terrible! :-)

Thank you, giant. As a teenager in the 1970s I stood upon your shoulders (among many others), which gave me quite a boost.

Back in the late 1970s, my brother got a bright idea to take the output of Eliza and feed it back to the input. Then he logged the entire conversation with itself. The result was hilarious. However, it also revealed a lot about how Eliza worked.

haha

I wonder if anyone has tried that with ChatGPT yet.

Or better: a conversation between ChatGPT and DAN ChatGPT.

He invented Generative Adversarial Networks.

He was far ahead of his time.

Reading the ELIZA source code reminds me of how cumbersome it was to program in older “high level languages.” While ELIZA may not have been written in Lisp originally, the later Lisp rewrites were undoubtedly easier to read and understand. Yes, it is important to preserve the original source code for posterity as an accurate historical record. At the same time, it is also valuable to preserve ELIZA’s original behavior in languages that are accessible to the eyeballs and brains of today’s software engineers and students. That modern language might not be Lisp (maybe it’s Python), but Lisp’s relevance lay in its library of built-in primitives that made pattern-matching programs like ELIZA easy to write, so programmers could focus more on the program’s behavior and less on implementation details.

Lisp defines a syntax for representing tokens, lists, and tree-structured data conveniently as text. It provides a built-in parser for this syntax that makes it easy to convert files and user input typed on the console into data structures, and Lisp provides primitives that make it easy to search for patterns, manipulate lists and trees, and print them onto the console or into files.
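Python has no built-in reader for this syntax, so as a rough illustration of what Lisp’s reader hands you for free, here is a minimal sketch of an s-expression tokenizer, parser and printer. It assumes a deliberately tiny subset: only parentheses and bare atoms (no strings, quoting, or numbers as a distinct type). The names `read_sexpr` and `to_text` are hypothetical, not part of any library.

```python
def tokenize(text: str) -> list[str]:
    # Pad parentheses with spaces so split() separates them from atoms.
    return text.replace("(", " ( ").replace(")", " ) ").split()


def parse(tokens: list[str]):
    # Recursively build nested Python lists, consuming tokens left to right.
    if not tokens:
        raise SyntaxError("unexpected end of input")
    token = tokens.pop(0)
    if token == "(":
        items = []
        while tokens and tokens[0] != ")":
            items.append(parse(tokens))
        if not tokens:
            raise SyntaxError("missing closing parenthesis")
        tokens.pop(0)  # drop the matching ")"
        return items
    if token == ")":
        raise SyntaxError("unexpected )")
    return token  # an atom, kept as a plain string


def read_sexpr(text: str):
    # Parse exactly one expression and reject anything left over.
    tokens = tokenize(text)
    result = parse(tokens)
    if tokens:
        raise SyntaxError("trailing tokens after expression")
    return result


def to_text(expr) -> str:
    # Print a nested list back out in the same parenthesized syntax.
    if isinstance(expr, list):
        return "(" + " ".join(to_text(e) for e in expr) + ")"
    return expr


if __name__ == "__main__":
    rule = read_sexpr("(MOTHER ((0 MY MOTHER 0) (WHO ELSE IN YOUR FAMILY)))")
    print(rule)
    print(to_text(rule))
```

The sample rule is only loosely modeled on ELIZA’s script notation for illustration. Reading it yields nested lists (`['MOTHER', [['0', 'MY', 'MOTHER', '0'], ['WHO', 'ELSE', 'IN', 'YOUR', 'FAMILY']]]`), and `to_text` prints it back unchanged: the round trip Lisp provides out of the box.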

Perhaps the most salient feature of Lisp is that both list/tree data and program source code are represented using the same syntax, and the same primitives can be used to manipulate source code as well. This makes it easier to write interpreters, compilers, and “source to source” translators. This last “super power” provides the language with its extraordinary macro facility, which is very useful for creating entirely new “application specific” languages or simply adding new language features to Lisp itself. For example, a macro could add a construct such as `(with-timeout (10 seconds) (compute-something-that-might-not-terminate))`.

“Reading the ELIZA source code reminds me of how cumbersome it was to program in older “high level languages.””

It did “entice” people to pay attention to writing uncomplicated and unbloated code, though. :)

My second computer was a kit from Southwest Technical Products. Around the same time there was a story about a doctors’ practice trialling a system for patients, and I think it was called Eliza or Liza. The SWTPC ran three BASIC interpreters (1.5K, 4K and 8K), loaded from CUTS-formatted audio tapes. More here: https://vintagecomputer.net/cisc367/Creative%20Computing%20Jul-Aug%201977%20Eliza%20BASIC%20listing.pdf

If you know what MIKBUG is then you are showing your age. ;-)

There is also an unrelated investigation into the diagnosis of bacterial infections, called “Mycin”. It was not a chatbot like ELIZA, but rather an expert rule system. I don’t believe it was ever adopted by the medical community, although it was more accurate than doctors in diagnosing diseases.

Mycin was a miracle. It did better than GPs at prescribing antibiotics, although that is a low bar.

If you are intrigued by powerful macro facilities, look for papers on “hygienic macro expansion,” a technique that keeps track of both the context surrounding a macro call and the code inside it, so that variable references and bindings remain correct after expansion.

All those enamored of ChatGPT and related large language models need to read a story from 1971:

https://archive.org/stream/Galaxy_v31n04_1971-03/Galaxy_v31n04_1971-03_djvu.txt

Scroll down to page 99 and read the story there named “Choice” by J.R. Pierce.

Much like Harvey in that short story, you will find that chatbots only appear intelligent as long as you play along. If you do something unexpected, they will simply roll with it rather than reacting as a thinking being.

The book and article referenced in the story are real:

https://mitpress.mit.edu/9780262110235/recognizing-patterns/

https://cse.buffalo.edu/~rapaport/572/S02/weizenbaum.eliza.1967.pdf

As primitive as Eliza was, there were people who insisted that Eliza was an intelligent person. In reality, Eliza (and modern chatbots) only reflect the intelligence of the user.

The paper by Weizenbaum is referenced in the article above.

Not quite accurate. There were people who knew that they were talking to a machine and yet reacted as if they weren’t. This is known as the “Eliza Effect”. Eliza doesn’t (really) reflect anything. The actual ‘program’ that is commonly called ELIZA is the DOCTOR script; ELIZA-MAD is an interpreter for the script and a pattern matcher for the input text. Neither ELIZA nor the scripts that become its interactive persona have any intelligence, nor do they reflect the intelligence of the user or of the questions posed to the current persona. In effect, the ELIZA scripts are static representations of a dynamic conversation, with the user’s input used to populate ELIZA’s output dialog.

Please expand. When I first heard about ELIZA, I thought this was just a different name for a BASIC program I encountered in 1971 called “DOCTOR”, which looked for keyphrases and keywords in the user input and responded by choosing among several possible responses for each, all stored in a sequence of DATA statements. Hearing someone mention ELIZA and DOCTOR and make a distinction between them contradicts my assumption. What is the DOCTOR script you mention?
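For comparison, the BASIC-style approach described here (scan the input for keywords or keyphrases, then rotate through a few canned replies for the first one found) fits in a few lines. This is a minimal sketch, not a reconstruction of that 1971 program: the keyword table stands in for the DATA statements, its entries are made up for illustration, and `KeywordDoctor` is a hypothetical name.

```python
import itertools

# Hypothetical keyword table standing in for the BASIC program's DATA statements.
# Entries are checked in order, so earlier keywords take priority.
RESPONSES = [
    ("I AM", ["HOW LONG HAVE YOU BEEN THAT WAY?", "WHY DO YOU SAY YOU ARE?"]),
    ("MOTHER", ["TELL ME MORE ABOUT YOUR FAMILY.", "HOW DO YOU FEEL ABOUT YOUR MOTHER?"]),
    ("COMPUTER", ["DO COMPUTERS WORRY YOU?", "WHY DO YOU MENTION COMPUTERS?"]),
]
FALLBACK = ["PLEASE GO ON.", "I SEE."]


class KeywordDoctor:
    def __init__(self) -> None:
        # One independent cycling iterator per keyword, so repeated hits rotate replies.
        self._cycles = {kw: itertools.cycle(replies) for kw, replies in RESPONSES}
        self._fallback = itertools.cycle(FALLBACK)

    def reply(self, text: str) -> str:
        # Upper-case, turn punctuation into spaces, collapse runs of whitespace,
        # and pad both ends so " KEYWORD " only matches whole words or phrases.
        cleaned = "".join(c if c.isalnum() else " " for c in text.upper())
        padded = " " + " ".join(cleaned.split()) + " "
        for kw, _ in RESPONSES:
            if f" {kw} " in padded:
                return next(self._cycles[kw])
        return next(self._fallback)


if __name__ == "__main__":
    doctor = KeywordDoctor()
    for line in ["My mother hates me.", "I am sad.", "My mother again!", "Hello"]:
        print(doctor.reply(line))
```

Note that the keywords and replies are baked into the program itself; nothing is taken apart and reused from the user’s sentence.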

It’s a bit confusing. The original ELIZA (the MAD-SLIP one) was an interpreter whose grammar and content came from a script that was represented in the moral equivalent of a Lisp s-expression. (In fact, it was read by Weizenbaum’s own list-processing plug-in to MAD, which is the SLIP part of “MAD-SLIP”, and it’s mostly convergent evolution that it looked like an s-expression.) Only a few original scripts have survived, the best known being the DOCTOR script. So ELIZA, the MAD-SLIP program, did not act in any way at all on its own, but when running the DOCTOR script it took on the famous Rogerian therapist persona. Since only one script famously survived, the name “ELIZA” came to be associated with the DOCTOR (Rogerian therapist) persona, although that was not at all Weizenbaum’s intention. In fact, Weizenbaum did not intend ELIZA to be what we now call a bot or an AI at all! His intention is clearly stated in the title of his famous CACM paper: “ELIZA—a computer program for the study of natural language communication between man and machine”. Weizenbaum intended ELIZA as a platform for studying human-machine communication.
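To make the interpreter/script split concrete, here is a minimal Python sketch in the spirit of the decomposition/reassembly scheme from Weizenbaum’s 1966 CACM paper. Keywords carry a rank; a decomposition pattern uses `0` for “any number of words” (a positive integer n for exactly n words); and numbers in a reassembly template refer to the matched pieces. The script contents and names (`SCRIPT`, `Eliza`, `match`, `reassemble`) are hypothetical, and the sketch omits many real features such as pronoun substitution and the MEMORY mechanism. The point is that the `Eliza` class does nothing by itself; all of its “persona” lives in the data passed in.

```python
import re

# Hypothetical script: keyword -> (rank, [(decomposition, [reassemblies...]), ...]).
SCRIPT = {
    "YOU": (0, [
        ("0 YOU 0 ME", ["WHAT MAKES YOU THINK I 3 YOU", "DO YOU WISH THAT I 3 YOU"]),
        ("0 YOU 0", ["WE WERE DISCUSSING YOU, NOT ME"]),
    ]),
    "MOTHER": (5, [
        ("0 MY MOTHER 0", ["TELL ME MORE ABOUT YOUR FAMILY"]),
    ]),
}
FALLBACK = ["PLEASE GO ON", "I SEE"]


def match(pattern: list[str], words: list[str]):
    # Return one list of words per pattern element, or None if there is no match.
    if not pattern:
        return [] if not words else None
    head, rest = pattern[0], pattern[1:]
    if head == "0":
        # Wildcard: try every possible length, shortest first.
        for i in range(len(words) + 1):
            tail = match(rest, words[i:])
            if tail is not None:
                return [words[:i]] + tail
        return None
    if head.isdigit():
        # Exactly n words.
        n = int(head)
        tail = match(rest, words[n:]) if len(words) >= n else None
        return None if tail is None else [words[:n]] + tail
    if words and words[0] == head:
        tail = match(rest, words[1:])
        return None if tail is None else [[head]] + tail
    return None


def reassemble(template: str, parts: list[list[str]]) -> str:
    # Numbers in the template are replaced by the corresponding matched piece.
    out: list[str] = []
    for tok in template.split():
        out.extend(parts[int(tok) - 1] if tok.isdigit() else [tok])
    return " ".join(out)


class Eliza:
    def __init__(self, script, fallback):
        self.script = script
        self.fallback = fallback
        self.counters: dict = {}  # rotates reassemblies per (keyword, rule index)

    def _next(self, key, options):
        i = self.counters.get(key, 0)
        self.counters[key] = i + 1
        return options[i % len(options)]

    def reply(self, text: str) -> str:
        words = re.findall(r"[A-Z']+", text.upper())
        # Keywords present in the input, highest rank first (stable sort keeps ties in order).
        found = [w for w in dict.fromkeys(words) if w in self.script]
        found.sort(key=lambda k: self.script[k][0], reverse=True)
        for kw in found:
            for r, (decomp, reassemblies) in enumerate(self.script[kw][1]):
                parts = match(decomp.split(), words)
                if parts is not None:
                    return reassemble(self._next((kw, r), reassemblies), parts)
        return self._next("NONE", self.fallback)


if __name__ == "__main__":
    bot = Eliza(SCRIPT, FALLBACK)
    print(bot.reply("It seems that you hate me."))
```

With this toy script, “It seems that you hate me.” splits into (IT SEEMS THAT) (YOU) (HATE) (ME), and template `WHAT MAKES YOU THINK I 3 YOU` produces “WHAT MAKES YOU THINK I HATE YOU”. Swapping in a different script changes the persona without touching the interpreter.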

“Comments on a Problem in Concurrent Programming Control” is pretty interesting, too.

Whoever this “Mr. Dijkstra” dude is.

Dr. Dijkstra. Two of his notable accomplishments were arguing that GOTO statements are harmful (CACM V11N3, 1968) and Dijkstra’s algorithm for finding the shortest path between two nodes in a weighted graph.

Is this the comment you are referring to? https://dl.acm.org/doi/pdf/10.1145/365153.365167

Or did you mean the “Solution of a Problem in Concurrent Programming Control”? https://dl.acm.org/doi/pdf/10.1145/365559.365617

:)

In the 1980s the UK government (Department of Trade and Industry) ran a Microelectronics Education Programme (MEP). As part of the development for primary schools, a Language Pack was produced which included a modified version of Eliza. This was intended to demonstrate the limitations of a language model relying on adjustable keywords and their possible responses.

We’re working on a simulation of what it was like to use ELIZA in the mid-1960s, by embedding Anthony Hay’s ELIZA in an ASR33 simulator. So far the effort is pretty simplistic, but it has basic sanity. Check it out here: https://github.com/jeffshrager/elizagen.org/tree/master/ELIZA33/current