Along with the rise of the modern World Wide Web came the introduction of the JPEG image compression standard in 1992, allowing for high-quality images to be shared without requiring the download of a many-MB bitmap file. Since then a number of JPEG replacements have come and gone – including JPEG 2000 – but now Google reckons that it can improve JPEG with Jpegli, a new encoder and decoder library that promises to be highly compatible with JPEG.

To be fair, it’s only the most recent improvement to JPEG, with JPEG XT being introduced in 2015 and JPEG XL in 2021 to mostly deafening silence, right alongside that other, better new image format people already forgot about: AVIF. As Google mentions in their blog post, Jpegli uses heuristics developed for JPEG XL, making it more of a JPEG XL successor (or improvement). Compared to JPEG it offers a higher compression ratio and 10+-bit support, which should reduce issues like banding. Jpegli images are said to be compatible with existing JPEG decoders, much like JPEG XT and XL images.

Based on the benchmarks from 2012 by [Blobfolio] between JPEG XL, AVIF and WebP, it would seem that if Jpegli incorporates advancements from AVIF while maintaining compatibility with JPEG decoders out there, it might be a worthy replacement of AVIF and WebP, while giving JPEG a shot in the arm for the next thirty-odd years of dominating the WWW and beyond.

Very good, it is still ‘real’ compression. Why not add stream compression (and streaming of changed data, for example stock prices in a table that change every minute) and region-dependent compression (for example heavy compression for the background and better quality for faces), plus alpha channel and animation, like GIF?

Have a look at APNG and animated WebP.

Look at the real.com codec and at rating compression in JPG.

Why? What level of detail is required by people scrolling on their 5″ phone screens?

JPEG is also used in almost every webcam, the video stream being a series of multiple MJPEG images.

There are other methods, like the H.264/H.265 video formats standardized in USB UVC 1.5, but they are hardly used and require a lot of CPU power. Even JPEG is intense to encode for an MCU: at 64 MHz it takes minutes for a 720p image.

New compression algorithms are typically also much more intense to compute. I wonder how JPEGLI can perform on low-end embedded imaging hardware.

What would be some low-complexity JPEG alternative? Something that’d run on a low-power MCU to transmit slowly over LoRaWAN or Sigfox or Cat-M1?

The kind of things that thrill me more personally than “better JPEG”:

– https://en.wikipedia.org/wiki/Color_Cell_Compression (see also S3TC/S2TC)

– https://qoiformat.org/ (for lossless and when PNG is overkill)

But JPEG is good if the output is a file, because producing JPEG directly means no loss due to conversion between formats. There are image sensors (the thing soldered to a PCB to make a camera device, the actual chip that senses light) that have JPEG compression built in directly, like the OV5640 or OV2640.

QOI is genius. It is significantly faster than PNG and the result is almost as small (for our benchmark images). We use it to stream the output of the simulation of an embedded UI. The QOI implementation is a single header file. When I first checked out the repo I was like: so it is just an interface repo, but where is the implementation? I don’t know the author but I am sure he is a fun guy.

Comic format (.CBR) uses JPEG, too, often.

https://en.wikipedia.org/wiki/Comic_book_archive

There’s a lot more out there than digital picture albums and websites, indeed.

*Keeping compatibility with ordinary JPEG is important.

Likewise, Unicode keeps rudimentary compatibility with 7-Bit ASCII (but not codepage 437, sadly).

Or let’s take MPEG-4 decoders and their backwards compatibility to MPEG-2 and MPEG-1.

Or MP3 audio players, which often can handle MP2 files.

(* there was an early form of JPEG compression that had been forgotten by the times. I have one Windows 3 era application that can read/write it.)

jpeg-LS was once the image format that suited this use. It was ridiculously low complexity, could run on weak chips and compress perceptually losslessly gigapixels almost in realtime.

It’s IMO superseded by JPEG-XL at effort=1 (fastest/lowest complexity, can still run at even lower complexity if you tune parameters to only focus on what you need). JXL massively multithreads, supports lossless, handles a lot more data and layers including thermal (i.e. for drone footage), weather overlays (satellite imaging), progressive loading by design so the image starts showing once only 10% of the data is obtained (you can enable extra progressiveness), but especially saliency so the ‘most interesting’ parts of an image load first (you can override the estimation to focus on one part in particular and always give it highest priority in decoding).

‘JXL art’ shows extreme limits of analytic-based recreation of patterns but you really need to ask around to make sure it can be usable for your transmission uses (allocating more data for less abstract results based on your actual images, closer to SVG).

I recollect someone was interested in doing a motion-JPEG-style utility that’d only store differences from previous images or one reference image, so it’d save a ton of storage and bandwidth for surveillance-type footage. The format allows that, but it’s supposed to be an encoder optimization that will happen over its existence, and not necessarily part of the reference library.
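To illustrate the "store only the differences from a reference image" idea in the comment above, here is a minimal Python sketch. It is a generic, lossless byte-difference scheme built on the standard `zlib` module, not JPEG XL's mechanism and not any existing utility; the function names and the synthetic 64x64 frames are made up for the example.

```python
import random
import zlib

def encode_delta(reference: bytes, frame: bytes) -> bytes:
    """Store a frame as the compressed byte-wise difference from a reference frame."""
    if len(reference) != len(frame):
        raise ValueError("frames must have the same size")
    diff = bytes((f - r) & 0xFF for f, r in zip(frame, reference))
    return zlib.compress(diff, 9)

def decode_delta(reference: bytes, payload: bytes) -> bytes:
    """Rebuild the frame by adding the stored difference back onto the reference."""
    diff = zlib.decompress(payload)
    return bytes((r + d) & 0xFF for r, d in zip(reference, diff))

# Hypothetical greyscale frames: a noisy static background, then the same
# scene with a small bright object in it.
rng = random.Random(0)
width = height = 64
background = bytes(rng.randrange(256) for _ in range(width * height))
moving = bytearray(background)
for y in range(10, 14):
    for x in range(20, 24):
        moving[y * width + x] = 255
frame = bytes(moving)

full_size = len(zlib.compress(frame, 9))
delta_payload = encode_delta(background, frame)
restored = decode_delta(background, delta_payload)

print(f"frame compressed on its own: {full_size} bytes")
print(f"frame stored as a delta:     {len(delta_payload)} bytes")
```

Because almost every difference byte is zero, the delta compresses far better than the frame alone. Real surveillance footage has sensor noise and lighting changes, so the gain would depend heavily on the actual material.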

JPEG-LS has a very good encoding speed and ratio for photographic images or renderings consisting of gradients. It doesn’t completely fall apart on artificial images with sharp edges either, although the compression is less than state of the art. But LS doesn’t degrade to lossy well. The artifacts appear as noticeable horizontal streaks as each row is quantized separately from its neighbors.

I use JPEG-LS in Photoshop and IrfanView (the latter doesn’t support channel decorrelation and compression is lower).

There is an experimental closed source HALIC encoder for lossless images with even better speed. It could be used for realtime capture, and transcoded to something else after editing for distribution.

You’re missing the point. The idea is to keep the same perceptual level of detail but with smaller files.

Better compression means less storage and bandwidth use. And at FAANG, that’s a considerable amount of potential savings.

Which is quite handy for huge providers like Google but pretty much irrelevant for the vast majority of humanity and the people who produce the cameras since JPEG isn’t in need of a replacement as far as they’re concerned. That’s why all the other formats got a resounding “meh” as a reception and why Google is going to have to pay to get this format used by anyone except themselves and a few fanbois.

This isn’t a new format. It’s an improved JPEG encoder — that’s why it’s interesting. It produces perfectly normal files which everyone can read; they’re just smaller.

Right, and a higher compression ratio and 10bit colors is interesting to tech nerds like us, photography geeks, and straight up money in their pockets to Google, Facebook, etc… It means nothing at all to anyone shooting a selfie, cat pictures, or the people making the cameras for the next line of phones though.

It makes a difference when you save all your photos to the cloud – you could upload more images in the same free space limit.

If you save them all to the cloud for a decade or two, sure. Or if you’re insisting on taking 4k or larger resolution photos. Anyone that serious about their photography is in a very small subset of the General Public part of the Venn diagram though.

I already have to push users providing me images to do better. This has to exist across all their toolchains for it to be relevant.

Basically, I can’t move mountains, and this is requiring me to move mountains for a net gain that approaches zero.

Not at all. The result is just a jpeg, so any of your users whose toolchains use it will simply be sending you better jpegs. And the users who are sending you pixelated snowstorms will still be sending you pixelated snowstorms, no matter how you cajole them.

I’d argue the real objective was always actually to obtain *higher quality* jpgs for the same bit budget/filesize. That slightly smaller filesizes could be secured is only a welcome benefit. Keep in mind jpg’s quality was already considered no longer acceptable, so discourse shouldn’t focus on filesize, else it’d drown out the massive gains from jpegli as a replacement for mozjpeg and hardware encoders for jpg (efficiency over those exceeds 35% but it’s not simple to measure). As long as quality jpgs are generated, even higher storage gains are possible from losslessly converting to jpegxl eventually.

I would give everything for a flagship phone with a 5″ screen. Unfortunately all flagships are > 6″

Google Pixel 2, Apple iPhone

I still have, and use, my flagship Google Nexus 5. Although… it’s not 5″. It’s 4.95″. (And all the rubber on the back and sides melted so I painstakingly scraped it off.)

The main use-case for 10-bit JPEGs is so that the next Pixel phone can “take pictures with *a billion more colours*”, while still letting users upload them to facebook or e-mail them to Aunt Doreen.

Not sure if you’re trolling or just ignorant.

The purpose of high-bit depth images is that they still have lots of detail after processing. That’s why photographers shoot raw not JPEG, but happily export to jpeg after post-production. This is particularly useful to keep detail in lighter and darker areas – which is useful if you have friends with varying skin tones and don’t want to choose between one being over or the other under exposed.

With phones now having quite passable post-production capabilities and normally being used in uncontrolled lighting, it’s quite sensible for phones to use 10-bit.

I didn’t say “10-bit images”, I said “10-bit JPEGs”. We already have high-depth image formats, and the Android camera API can already produce high-depth raw files. But Jpegli is high-depth *and* compatible with existing apps.

Conspicuously absent is any mention of HEIF, which is (thanks to Apple ramming it down people’s throats without permission) more widely used than any of the mentioned alternatives.

Ram? Samsung has it as well.

> Apple ramming it down people’s throats without permission

HEIF is Apple’s default – but you don’t have to use it.

On the iOS devices you can choose between jpg, HEIF or raw. Depending on your throat ;-)

Banding is an annoyance in displayed images caused by the 8 bit limit of conventional JPEG. 10 bits is enough to solve the problem for all but the most discerning viewers.
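A rough way to see why the bit depth matters for banding: count how many distinct code values a slow gradient gets at 8 and at 10 bits. The Python sketch below uses a made-up gradient (1920 pixels covering 5% of the brightness range) and ignores gamma, chroma and JPEG compression entirely; it only illustrates the quantization step size.

```python
def quantize(value, bits):
    """Round a brightness in [0.0, 1.0] to the nearest code at the given bit depth."""
    levels = (1 << bits) - 1
    return round(value * levels)

# Hypothetical slow gradient: 1920 pixels covering only 5% of the brightness
# range, roughly what a dark sky or a soft shadow looks like.
width = 1920
gradient = [0.10 + 0.05 * x / (width - 1) for x in range(width)]

steps_8 = len({quantize(v, 8) for v in gradient})
steps_10 = len({quantize(v, 10) for v in gradient})

print(f"8-bit:  {steps_8} distinct levels, bands about {width / steps_8:.0f} px wide")
print(f"10-bit: {steps_10} distinct levels, bands about {width / steps_10:.0f} px wide")
```

With four times as many levels, each band is about a quarter as wide and a quarter as tall in brightness, which is why it is so much harder to spot.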

Inaccuracies it’s important to correct.

– this is not from Google itself, but the JPEG-XL authors, Google Research, or Google Zurich (Google’s imaging and compression experts).

– Jpegli is NOT a new format but a new encoder for the original JPEG, that’s meant to surpass libjpeg-turbo and even mozjpeg. More efficient coding is meant to help those stuck with JPG get more mileage out of it, not motivate them to avoid superior newer formats.

When webp was initially announced, its lossy encoding was so bad Mozilla created the mozjpeg encoder to show that we don’t have to adopt [inefficient format of current year]. Jpegli surpasses mozjpeg and can be dropped in as a replacement library with no changes to your workflow or your applications. The Jpegli ENcoder more efficiently generates JPGs fast, while the Jpegli DEcoder displays JPGs more smoothly regardless of the JPG encoder that was used.

– the long-term successor to JPEG is still JPEG-XL. Adoption will only keep growing across the imaging world regardless of the Chrome team’s attempts to slow it and pretend it doesn’t exist.

JXL has a unique forward compatibility guarantee – if your source images are JPGs, you can almost instantly convert them *losslessly* to JXL and consistently get 20% smaller filesize (in comparison, converting to either webp/avif will either lose quality in lossy or result in huge files if lossless). If your originals are pngs, doing a proper recompression to JXL would result in much higher quality with even smaller filesize. Jpegli is useful to generate higher quality JPGs that will then give higher quality JXLs when losslessly converted – transitional JPGs so good it’s not useful to waste storage generating webp duplicates of your images.

Questions or need information? Check the JPEG XL discord where devs hang https://discord.gg/DqkQgDRTFu

The author of that article also proved such nonsense and ignorance. A JPEG XL successor? An AVIF-bias reference from “2012”???

Nice answer. One point to notice however, is the amount of lies in the OP. There’s no way to have a 10-bit “HDR” color space in JPEG. So what JPEGLI does is simply called tone mapping, that is, mapping 10 bits onto the (maximum) 8 bits that the JPEG STANDARD allows. You can do the same thing in your photo editor, no need for any “encoding algorithm”.

Similarly, I’ve yet to see a picture produced by this encoder that’s so good compared to the same picture by another “optimal” JPEG encoder. I don’t believe in the 20% better compression, simply because the technology at the time can’t encode the entropy better. JPEG is still using Huffman encoding, it’s still using a lossy temporal to frequency conversion algorithm (DCT) and it’s still using integer based quantization. So in the end the space to optimize is very limited.

In comparison, JPEGXL improves everywhere and is definitively the next algorithm to use.

I ran tens of thousands of jpg->jxl conversions; 20% is the most consistent filesize reduction at effort 7. No need to use any higher complexity, it’d take longer for barely 2 additional kilobytes saved.

Going from a png original to jxl yields way bigger gains – just png to *lossless* jxl consistently guarantees 50% smaller filesize already, a storage/bandwidth saving that makes it unnecessary to even recompress to a lossy version unless you’re optimizing for storage and bandwidth consumption.

Download a current stable or nightly of libjxl static and check against your own images

https://github.com/libjxl/libjxl/releases (latest stable build currently 0.10.2)

https://artifacts.lucaversari.it/libjxl/libjxl/latest/ (nightlies)

https://github.com/JacobDev1/xl-converter (easy to use GUI converter)

Actually, what we know as 8-bit JPEG is internally using 12-bit DCT coefficients. For things like slow gradients, the internal representation of JPEG is in fact precise enough to preserve ~10 bits worth of sample precision in the RGB domain, _provided_ you implement it in a way that takes >8-bit RGB input, does all the encoding and decoding with sufficient precision, and produces >8-bit RGB output.

The most widely used libjpeg-turbo API works with 8-bit RGB input/output buffers, and then of course everything is automatically limited to 8-bit precision. But this is a libjpeg-turbo API limitation, not an inherent limitation of the JPEG format.

So what Jpegli does is not at all tone mapping; it can actually encode 10-bit HDR images in a reasonably precise way.

But note that this requires a high quality setting, and JPEG XL will perform significantly better at encoding HDR images than Jpegli. Also note that (8-bit) JPEG can only just barely represent HDR images; it could be good enough for web delivery use cases but certainly not for authoring workflows (e.g. as a replacement for raw camera formats), unlike JXL which can also be used for such use cases.
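The point about coefficient precision can be tried out on a single 8x8 block. The Python sketch below is not Jpegli's code: it uses a plain orthonormal DCT (the same scaling as JPEG's, where the DC coefficient is 8 times the block mean), a quantization step of 1 for every coefficient, skips the usual level shift, and runs on a made-up 10-bit gradient. It compares a pipeline that rounds samples to 8 bits on the way in and out with one that keeps higher precision at both ends, using the same integer coefficients in between.

```python
import math

N = 8  # JPEG works on 8x8 blocks

# Orthonormal 8-point DCT-II basis; applied to rows and columns it matches
# the scaling of JPEG's 2-D DCT (DC coefficient = 8 x block mean).
C = [[(math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N))
      * math.cos((2 * n + 1) * k * math.pi / (2 * N))
      for n in range(N)] for k in range(N)]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(N)) for j in range(N)]
            for i in range(N)]

def transpose(a):
    return [list(row) for row in zip(*a)]

def roundtrip(block):
    """Forward DCT, round coefficients to integers (quantization step 1), inverse DCT."""
    coeffs = matmul(matmul(C, block), transpose(C))
    coeffs = [[float(round(c)) for c in row] for row in coeffs]
    return matmul(matmul(transpose(C), coeffs), C)

def rms(a, b):
    """Root-mean-square difference between two 8x8 blocks."""
    return math.sqrt(sum((a[y][x] - b[y][x]) ** 2
                         for y in range(N) for x in range(N)) / (N * N))

# Hypothetical test data: a slow gradient in 10-bit codes (400..414).
source10 = [[400 + x + y for x in range(N)] for y in range(N)]

# Path 1: samples are rounded to 8 bits before encoding and after decoding.
in8 = [[float(round(v / 4)) for v in row] for row in source10]
out8 = [[round(v) * 4 for v in row] for row in roundtrip(in8)]

# Path 2: fractional samples go in and 10-bit samples come out.
in_hi = [[v / 4 for v in row] for row in source10]
out_hi = [[round(v * 4) for v in row] for row in roundtrip(in_hi)]

rms_8bit = rms(out8, source10)
rms_high = rms(out_hi, source10)
print(f"8-bit in/out:          {rms_8bit:.2f} ten-bit codes RMS error")
print(f"high-precision in/out: {rms_high:.2f} ten-bit codes RMS error")
```

The gain shows up because a slow gradient puts its energy into a handful of coefficients, so most of the block's coefficients are exactly zero and carry no rounding error. On busy texture, or with coarser quantization tables, the advantage shrinks, which fits the caveat about needing a high quality setting.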

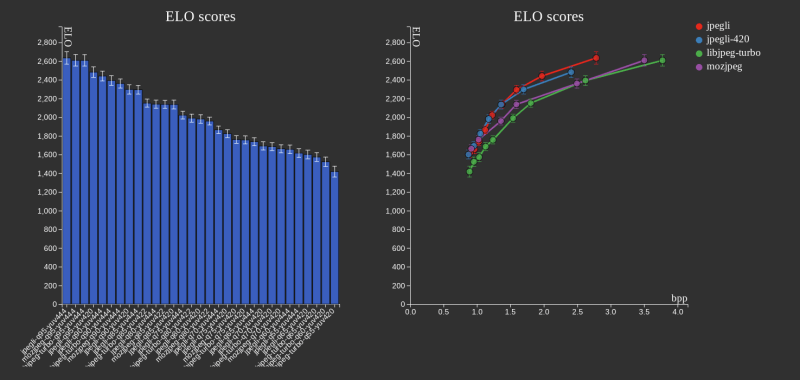

The space to do better perceptual encoding within the JPEG format is indeed limited, but it is not as small as you might think. When I did some testing for a recent blog post (https://cloudinary.com/blog/jpeg-xl-and-the-pareto-front), I observed that MozJPEG does indeed improve substantially (10-15%) over libjpeg-turbo (in terms of compression, not speed, and mostly for medium quality), while Jpegli brings a similar if not even larger improvement over MozJPEG (in terms of both compression and speed, and mostly for high quality). It is true that JPEG’s entropy coding is quite limited (not much encoder freedom besides optimizing the Huffman codes and the progressive scan script), but the main improvements in lossy compression are not coming from better entropy coding but from better quantization decisions.

JPEG files encoded with “Li” show somewhat less banding when decoded with regular software. The bands have more uniform width, and the first few levels near black are not truncated. But an image encoded from a 16-bit source without dithering looks worse than one produced by a standard encoder from dithered 8-bit data. Perhaps “Li” could include an option to apply dither before quantization. This would reduce compression and dynamic range (for the Li decoder), but could be used at lower -d when quality and compatibility are important.
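For readers unfamiliar with dithering, the sketch below shows the basic effect on a 16-bit to 8-bit reduction. It is only an illustration of the general technique in Python, with made-up test data and simple rectangular noise; it says nothing about how such an option would be implemented in Jpegli.

```python
import random

def to_8bit(sample16, rng=None):
    """Reduce a 16-bit sample to 8 bits, optionally adding dither noise first."""
    value = sample16 / 257  # 65535 / 255 == 257, so full range maps to full range
    if rng is not None:
        value += rng.uniform(-0.5, 0.5)  # rectangular dither, one 8-bit step wide
    return max(0, min(255, round(value)))

def window_means(samples, size=64):
    """Average brightness of consecutive windows, a crude stand-in for what the eye blends."""
    return [sum(samples[i:i + size]) / size for i in range(0, len(samples), size)]

# Hypothetical test data: 4096 pixels spanning just two 8-bit steps.
width = 4096
source16 = [100 * 257 + round(2 * 257 * x / (width - 1)) for x in range(width)]
ideal = window_means([s / 257 for s in source16])

def rms_error(samples):
    means = window_means(samples)
    return (sum((m - i) ** 2 for m, i in zip(means, ideal)) / len(ideal)) ** 0.5

plain = [to_8bit(s) for s in source16]
rng = random.Random(1)
dithered = [to_8bit(s, rng) for s in source16]

plain_error = rms_error(plain)
dithered_error = rms_error(dithered)
print(f"no dither: local average off by {plain_error:.3f} 8-bit steps (RMS)")
print(f"dithered:  local average off by {dithered_error:.3f} 8-bit steps (RMS)")
```

Without dither the gradient collapses into three flat bands; with dither each pixel is noisier but the local average follows the original ramp. The noise is also what costs compression, as the comment notes.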

Maybe c/djpegli.exe could support simpler formats to exchange data with other applications: TGA and TIFF. PNG takes too much time (for next to no compression at 16-bit), and PNM is often not supported on Windows. GIF is there and doesn’t belong.

You can have HDR regardless of bit depth. In JPEG, too.

I would be very surprised if jpegli performed any kind of tonemapping.

I remember a version of Corel PhotoPAINT! promoting the wavelet-transform bitmap (.wvl). I was interested in this for theoretical reasons, but it seems to have fallen by the wayside, like JPEG2000. Indeed, I don’t think anyone outside Corel ever supported it.

Definitely need to be backwards compatible with viewers and editors as that was the big problem with webp.

THIS! Omfg webp does not seem to work with anything’s known plugins. I don’t know how something can be a standard if it only works in one place lol. We had loads of support tickets from staff complaining “I can’t import image into blahblah application…” The support team came together and did up a quick n easy converter plugin for the company. Basically it just converts webp to jpeg all day long lol. I don’t know why existing apps don’t like to play with webp and honestly don’t care. I just wish it would go away or be replaced by something actually universal in some sort of way. Glad I am not the only one seeing this…

Not anything. GIMP is free software and supports WebP out of the box.

Probably won’t be, and this is the problem with their fad formats which some shortsighted software engineer decides is the next best thing because of some slight improvement in memory or whatever, but they don’t stick with it for long enough for it to be adopted widely so everybody hates it and shuns it in areas which do turn out to be important

Does this encoder place an ad in the corner of the picture?

Asking for a friend…..

If the algorithms in JPEG compress by eliminating data that is beyond the ability of the Human Visual System to see, then any further compression must eliminate data that one may see. Further compression will eliminate features that are visible to the user. I would not call that an improvement.

Way back when JPEG was first developed scientists would perform human visual studies to see what levels of compression were visible to their customers. It would be interesting to know more about what human studies Google performs when “improving” JPEG algorithms.

JPEG encoders allow quality level settings so that the user can determine how much corruption is acceptable for each image. A better encoder will allow a better choice of which corruptions to allow for a given amount of compression.

JPEG does not “compress by eliminating data that is beyond the ability of the Human Visual System to see”. JPEG allows a tradeoff between file size and image quality.
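The size/quality tradeoff mentioned above mostly comes down to how coarsely the DCT coefficients are quantized. As a concrete illustration, the Python sketch below reproduces the quality-to-table scaling used by the classic libjpeg encoder (the `jpeg_set_quality` convention) on the example luminance table from Annex K of the JPEG specification. This is libjpeg's convention, not part of the JPEG format itself, and not how Jpegli chooses its quantization; the function name `scaled_table` is made up for the example.

```python
# Example luminance quantization table from Annex K of the JPEG specification.
BASE_LUMA = [
    16, 11, 10, 16, 24, 40, 51, 61,
    12, 12, 14, 19, 26, 58, 60, 55,
    14, 13, 16, 24, 40, 57, 69, 56,
    14, 17, 22, 29, 51, 87, 80, 62,
    18, 22, 37, 56, 68, 109, 103, 77,
    24, 35, 55, 64, 81, 104, 113, 92,
    49, 64, 78, 87, 103, 121, 120, 101,
    72, 92, 95, 98, 112, 100, 103, 99,
]

def scaled_table(quality):
    """Scale the base table following libjpeg's quality convention (1..100)."""
    quality = max(1, min(100, quality))
    scale = 5000 // quality if quality < 50 else 200 - 2 * quality
    # Steps are clamped to 1..255, as for baseline JPEG.
    return [max(1, min(255, (q * scale + 50) // 100)) for q in BASE_LUMA]

for quality in (25, 50, 75, 95):
    table = scaled_table(quality)
    print(f"quality {quality:3d}: first row of quantization steps {table[:8]}")
```

A larger step means a coefficient is stored with fewer distinct values, so the file shrinks and the error grows. A better encoder still writes ordinary tables and coefficients; it just decides more carefully where the error goes.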

“maintains high backward compatibility” means it’s not really backwards compatible.

I wrote that. The word ‘high’ there actually means ‘high’, not some marketing term for “doesn’t really work in practical use cases”.

The main two catches:

1)

If you use the XYB colorspace, then legacy systems that don’t yet follow ICC v4 (published in 2012) will show wrong colors. Browsers and OSes generally are able to use ICC v4. Ad hoc thumbnailers, viewers and web services often not.

Because of this, Jpegli does not use XYB by default.

We conducted the rater experiment about image quality without XYB to make the results match practical use.

If you choose to use XYB in your use case, you will get 10 % more quality/density but at the expense of being slightly less compatible, i.e., incompatible with the pre-2012 digital world and incompatible with software that has faults in its implementation of color standards (ICCv4). Sometimes this can be an acceptable risk, especially if it is a controlled viewing system such as textures for a game or similar.

2)

If you codify 16 bit channel frame buffers, you need Jpegli in both ends to have great 10+ bit frame buffer precision. If you use a legacy system in either end, it is difficult to get 10+ bit performance out of it.

Because of this, all rater experiments were done with 8-bit coding and with an 8-bit stock JPEG decoder — only the encoder performance was highlighted there.

But Google still refuses to adopt JPEG-XL.

Never mind all that, it’s time we spread Quite OK Image Format:

https://qoiformat.org/

QOI format is very poor compared to others: cloudinary.com/blog/jpeg-xl-and-the-pareto-front#:~:text=the%20chart%20is-,qoi,-%2C%20which%20clocked%20in

Quite OK Image Format does not compress well, is not fast, and is 8-bits only.

I’m glad that by the year 2050 people will still be recompressing a lossy image 20 times (because they are idiots by-and-large) and we have those lovely renditions of make something ‘psychovisually’ psychohorrible.

On the ‘ dominating the WWW ‘ remark. I’m not so sure, even this very page has 16 PNG type images and 5 jpegs (and 2 or 3 svg).

And many sites I visit have mostly png and webp or even avif.

Author of Jpegli, libjxl and JPEG XL here:

Jpegli is not a replacement or successor for JPEG XL, but a clever adaptation of some of the JPEG XL ideas into the existing JPEG format. This offers immediate improvements while we await broader JPEG XL adoption. We will receive a further ~35 % of quality/density improvement in high quality and more in lower qualities when we use JPEG XL vs. Jpegli.

Thank you for the clarification.

And excuse me for my negativity over lossy formats; that does not take away that I appreciate coders who put in an effort, nor does it take away the sympathy I feel for how hard it is to get people to adopt a new format.

And even if I don’t like lossy formats it’s still nice if they are improved.

On a related note, I noticed G’MC, who boasts about their internal high precision floating point pipeline and deep color support and all that, does not seem to be able to support PNG beyond 24 bit (nor JPEG-XL it seems).

And the number of software packages that output outdated gif files (with their shitty palette based color model) but refuse to output animated PNG is also unacceptable and vexing to me. Especially since they all do out-/input PNG single images, so the codebase is there.

I guess as a person trying to introduce formats you have to make the tough choice of supporting the old standard and be backwards compatible and then having people only use half the capabilities in practise, or making a format that is NOT backward compatible and then have nobody wanting to implement it..

Bah, humbug – I have a revolutionary compression algo that can beat ANY current one by many orders of magnitude (and no it’s not “middle-out”). The encoder simply uses AI to identify the content of the original picture, which it then saves in a tiny human-readable text file (“me stroking a cat”), then the (also AI-based) decoder just takes that and presto, re-creates the original picture from the prompt. Well, yeah, occasionally I still end up with fourteen fingers and/or a cat head, but dang, you can’t beat that compression ratio! Genius, eh…?