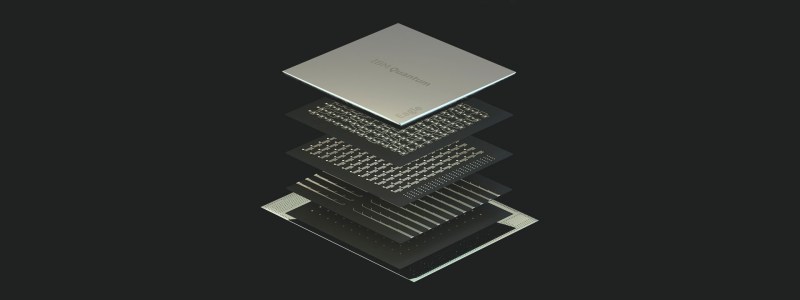

The central selling point of qubit-based quantum processors is that they can supposedly solve certain types of tasks much faster than a classical computer. This comes, however, with the major complication that quantum computing is ‘noisy’, i.e. affected by outside influences. That this shouldn’t be a hindrance was the point of an article published last year by IBM researchers, in which they demonstrated a speed-up of a Trotterized time evolution of a 2D transverse-field Ising model on an IBM Eagle 127-qubit quantum processor, even with the error rate of today’s noisy quantum processors. Now, however, [Joseph Tindall] and colleagues have demonstrated with a recently published paper in Physics that they can beat the IBM quantum processor with a classical processor.

In the IBM paper by [Youngseok Kim] and colleagues as published in Nature, the essential take is that although noisy quantum computers require fault-tolerance heuristics, this does not mean that there are no applications for such flawed quantum systems in computing, especially when scaling and speeding up quantum processors. This particular experiment concerns an Ising model, a statistical mechanical model based around phase transitions, which has many applications in physics, neuroscience, etc.
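For readers wondering what a "Trotterized time evolution" of a transverse-field Ising model looks like in practice, here is a minimal sketch in Python with NumPy. It is not the method from either paper: it assumes a tiny 1D open chain of three spins rather than the 2D 127-qubit layout, a first-order Trotter split, and sign conventions and parameter values (`J`, `h`, `t`) chosen purely for illustration. All function names are hypothetical.

```python
import numpy as np

# Single-qubit operators.
I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)

def op_on(site_ops, n):
    """Tensor product over n sites; site_ops maps site index -> 2x2 operator."""
    out = np.array([[1.0 + 0j]])
    for i in range(n):
        out = np.kron(out, site_ops.get(i, I2))
    return out

def ising_terms(n, J, h):
    """Split H = -J * sum Z_i Z_{i+1} + h * sum X_i into its two parts (n >= 2).

    The signs are an assumed convention for this sketch.
    """
    zz = sum(-J * op_on({i: Z, i + 1: Z}, n) for i in range(n - 1))
    x = sum(h * op_on({i: X}, n) for i in range(n))
    return zz, x

def expm_herm(H, t):
    """exp(-i t H) for a Hermitian matrix H, via eigendecomposition."""
    w, v = np.linalg.eigh(H)
    return (v * np.exp(-1j * t * w)) @ v.conj().T

def trotter_evolve(n, J, h, t, steps):
    """First-order Trotter approximation of exp(-i t H)."""
    zz, x = ising_terms(n, J, h)
    dt = t / steps
    step = expm_herm(x, dt) @ expm_herm(zz, dt)
    return np.linalg.matrix_power(step, steps)

def exact_evolve(n, J, h, t):
    """Exact exp(-i t H), feasible only for very small n."""
    zz, x = ising_terms(n, J, h)
    return expm_herm(zz + x, t)

def magnetization(U, n):
    """Average <Z> per site after applying U to the all-up state |00...0>."""
    psi0 = np.zeros(2**n, dtype=complex)
    psi0[0] = 1.0
    psi = U @ psi0
    Mz = sum(op_on({i: Z}, n) for i in range(n)) / n
    return float(np.real(psi.conj() @ Mz @ psi))

def trotter_error(n, J, h, t, steps):
    """Spectral-norm distance between the Trotterized and exact evolution."""
    diff = trotter_evolve(n, J, h, t, steps) - exact_evolve(n, J, h, t)
    return float(np.linalg.norm(diff, 2))

if __name__ == "__main__":
    n, J, h, t = 3, 1.0, 1.0, 1.0
    for steps in (10, 100):
        print(steps, trotter_error(n, J, h, t, steps))
    print(magnetization(trotter_evolve(n, J, h, t, 100), n))
```

The matrices here have size 2^n by 2^n, which is why this brute-force approach stops being practical after a few dozen spins, and why both the quantum hardware and the cleverer classical methods discussed above are interesting at 127 qubits.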

Unlike the simulation running on the IBM system, the classical simulation only has to run once to get accurate results, which along with other optimizations still gives classical systems the lead. Until we develop quantum processors with built-in error-tolerance, of course.

It used to be that mathematicians could do calculus in their heads faster than a computer could compute it. Progress has a way of making claims of superiority by old means over a burgeoning technology seem both petty and temerarious.

Except for all the things that fail… flying cars, 99% of battery technologies, …

To quote Prof. Collier: “Quantum quantum quantum. Quantum quantum? Quantum quantum quantum quantum.”

Very true, it’s almost hilarious how vacuous this marketing is.

What new hardware instructions do quantum and AI platforms offer?

AI platforms offer nothing new. It’s nothing but matrix math, done fast.

There’s a good argument that quantum computers may never be faster.

The technology used to build them is inextricably linked to the manufacture of classical computing hardware: our ability to etch microcircuits into silicon.

Given that increasingly complex quantum systems require more and more redundancy to check answers, this may continue to consume all of their theoretical performance lead and more, far into the future. So far an alternative, satisfying solution to the problem of quantum noise has not been found and may not be possible.

Side note: the quantum world is a significantly less convenient one.

Quantum information can never be copied, meaning it cannot really be reused after a computation is complete. All inputs must be re-prepared each time. Stored information can also randomly disintegrate due to the actions of someone a whole universe away.

The quantum world is also one where we are forced to trade being able to exchange simple symbolic information across the internet, which can take any form, for one where we have to physically transport fragile particles. Those particles are real, physical artifacts.

Maybe some day quantum calculators will be better for some difficult tasks, but nothing in the entire corpus of everything we know about how they will work suggests that they will make a good replacement for general purpose classical computers, much less our massive, global network of connected, classical computers.