Once upon a time, a computer could tell you virtually nothing about an image beyond its file format, size, and color palette. These days, powerful image recognition systems are a part of our everyday lives. They See Your Photos is a simple website that shows you just how much these systems can interpret from a regular photo.

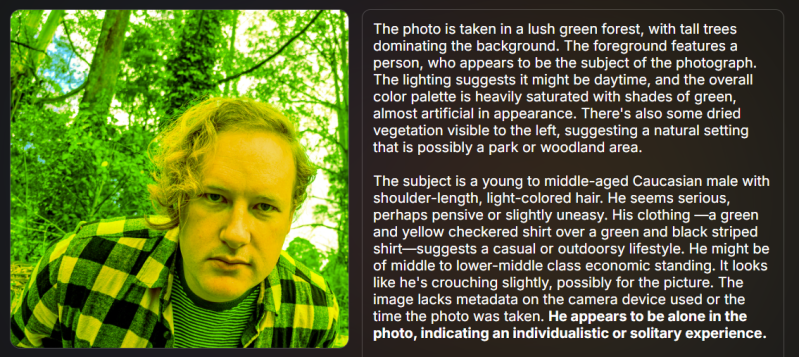

The website simply takes your image submission, runs it through the Google Vision API, and spits back out a description of the image. I tried it out with a photograph of myself, and was pretty impressed with what the vision model saw:

The photo is taken in a lush green forest, with tall trees dominating the background. The foreground features a person, who appears to be the subject of the photograph. The lighting suggests it might be daytime, and the overall color palette is heavily saturated with shades of green, almost artificial in appearance. There’s also some dried vegetation visible to the left, suggesting a natural setting that is possibly a park or woodland area.

The subject is a young to middle-aged Caucasian male with shoulder-length, light-colored hair. He seems serious, perhaps pensive or slightly uneasy. His clothing, a green and yellow checkered shirt over a green and black striped shirt, suggests a casual or outdoorsy lifestyle. He might be of middle to lower-middle class economic standing. It looks like he’s crouching slightly, possibly for the picture. The image lacks metadata on the camera device used or the time the photo was taken. He appears to be alone in the photo, indicating an individualistic or solitary experience.

The saturation level of the greens and yellows is unusually high, hinting at possible digital editing post-capture. There is a very slight blur, particularly noticeable in the background which could be from a smaller aperture or shallow depth of field when captured, creating a focus on the subject. The color alteration and seemingly intentional focus on the subject suggest it may not be a candid shot but rather a posed photograph, possibly with an artistic or stylistic goal.

The model did very well, easily determining both the general type of locale and the fact that my shirt implies I don’t have a Ferrari at home in my garage. It also picked up on the fact that it was a posed photograph with a certain artistic intent.
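For the curious, here is a rough sketch of what submitting an image to Google's Cloud Vision REST endpoint (`images:annotate`) can look like. It only builds the JSON request body and sends nothing. The helper name `build_annotate_request` and the choice of features are assumptions made for illustration; the site does not publish its actual request, and its prose-style output suggests there is more going on than a plain annotation call.

```python
import base64
import json

# Public Cloud Vision REST endpoint for image annotation.
VISION_ENDPOINT = "https://vision.googleapis.com/v1/images:annotate"


def build_annotate_request(image_bytes: bytes, max_results: int = 10) -> dict:
    """Build the JSON body for a single images:annotate call.

    Hypothetical helper: the feature list below is an assumption,
    not what They See Your Photos actually requests.
    """
    return {
        "requests": [
            {
                # Image data is sent inline as base64 text.
                "image": {"content": base64.b64encode(image_bytes).decode("ascii")},
                "features": [
                    {"type": "LABEL_DETECTION", "maxResults": max_results},
                    {"type": "IMAGE_PROPERTIES"},
                ],
            }
        ]
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real photo.
    body = build_annotate_request(b"placeholder bytes, not a real image")
    print("POST", VISION_ENDPOINT)
    print(json.dumps(body, indent=2))
```

Actually sending the request would additionally need an API key or OAuth credentials, which are left out here.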

Back in 2014, the webcomic XKCD stated that it would be an inordinately difficult task for a computer to determine if a digital photo contained a bird. These days, a computer model can tell us what’s in a photo down to the intimate details, and even make amusing assertions as to the lives of the subjects in the image and their intentions. We’ve come a long way, to be sure.

Machine vision is still far from perfect—there are ways to fool systems and areas in which they still don’t stack up to real humans. The only thing we know for certain is that these systems will continue to improve.

le upvote

I wonder what it would make of a full face mask

ref: https://www.schneier.com/blog/archives/2024/12/full-face-masks-to-frustrate-identification.html

There are many celebrity full face masks, but you can also get your own custom full face mask (sock) created/printed for less than a tenner. They are currently good enough to fool most AI, but not close inspection by any human.

I mean, the article has a link to the site, you had the video. Let me do that for you. I paused the video, grabbed and cropped a screen capture, and uploaded it:

Another test

“The image shows a close-up view of a person wearing a dark red hoodie. The background is blurry and indistinct, suggesting an indoor setting. The foreground is dominated by the person’s face and upper body. The person’s hands are visible, adjusting the hood of the hoodie. There’s a faint watermark or logo visible in the upper right corner of the image.

The individual appears to be a man with a beard, likely in his late twenties or thirties. His expression is somewhat serious, perhaps even slightly concerned. He is of Caucasian descent. The image seems to have been captured using a smartphone camera, given the relatively close-up, unprofessionally-lit shot and the slightly grainy quality. His eyes suggest a level of alertness, perhaps indicating he is engaged in an activity demanding attention.

The lighting in the image is soft and diffuse, making it challenging to discern precise details. The color saturation is slightly muted, adding to the somewhat subdued mood. The focus is predominantly on the person’s face, with a shallow depth of field blurring the background. The overall image quality is acceptable for a casual snapshot or video capture, not indicative of high-end professional equipment. The man’s attire points towards a relaxed or informal setting. There appears to be some slight blurring or motion blur, suggesting a slightly shaky camera during capture, typical of handheld smartphone recordings.”

Looks like she got her team.

ha! wouldn’t it be wild if AI all developed from a bird project years ago…

Maybe good idea for captcha:

Look at the picture and choose inoffensive prefix ;-)

Human would be my answer since it’s often impossible to tell which one of the 48 genders the pictured person is.

Interestingly enough, AI can very accurately determine race from rather tightly cropped and data-sanitized images of X-rays as well.

It’s not very surprising. It’s a pretty well documented fact that as a child we learn how to identify unique people based on certain facial features. For different ethnicities different parts of the face become “important”. So if a child grows up in say rural China they will learn what distinguishes Chinese faces but then struggle greatly with separating out people from say central Africa as what makes the face unique is not something they have learned to look at. As such the computer model just learned these different traits for the different races.

You’re re-creating phrenology through the fake-objective perspective of AI.

Grok can do this too. You can also let it explain posts on X. It will find context, translate parts, explain what the included image means and can even create a new image based on all the rest of the information combined. I think it’s pretty impressive how much it can do and how fast the technology is going. It can now even take a post without context and explain it with the context based on other posts and previous posts by the user. It can render images of how it thinks the person behind an account looks based on the person’s post history. It’s pretty crazy.

Here is the description that Grok gave me based on the person in the post above. I don’t know how accurate it is.

Yet while I try to get any of the tools to write a roast on purpose, I get an error of some sort. This one goes straight to dirty smelly hippie. Is this another case of techbros vs you know, actually going outside and meeting regular folk?

I didn’t read it like dirty smelly hippie. I thought it was about the hair and outfit. His hair isn’t brushed and his shirt isn’t closed up, which would fit the conventional grooming standards part. I didn’t ask it to insult him, I just asked to describe him and the surroundings and this is what it told me. The prompt was “take a look at this picture and describe the person you see and the environment the person is in, take note of details in the picture and write a story about it”. If you actually tell it to be insulting it will start to insult on purpose. Fun mode can be quite entertaining, but I didn’t use that. Grok can very easily roast people if you ask it to.

I could see it as a future aid towards determination of post and poster social score.

“somewhat untamed further supports the idea of a free spirit or someone who doesn’t adhere strictly to conventional grooming standards.”

is a hilarious line with some wild implications. it seems to suggest only straight hair meets “conventional grooming standards” which… other social commentators can run with

I tried it with a picture of a Capybara firing a machine gun I’d generated with Google ImageFX earlier, and got the following output:

“The image shows a pattern of vertical stripes in shades of dark purple and greyish-black. There is no discernible foreground or background, as the pattern fills the entire frame. The consistent repetition of the stripes creates a sense of depth, although there are no objects or features to indicate a specific location or setting. The subtle variation in the shade of purple suggests that there might be a gradient effect present, though not immediately apparent.

There are no people or other life forms present in this image. It lacks any characteristics that allow for inference about emotions, racial characteristics, ethnicity, age, economic status, or lifestyle. Because of this, there are no activities depicted either.”

This is so bad, it’s not even wrong.

That sounds more like a problem in the conversion from the image format into the internal format required for feeding to the network, not a fault of the network itself.

I wouldn’t be surprised if Google was putting some kind of AI fingerprint on outputs. Training future models on AI-generated work would degrade quality, so it’s in their interest to know if the images they’re scraping are real or not.

I tried with an AI-generated face from https://thispersondoesnotexist.com/ and it worked. Although it is probably not using any Google-trained network.

Write a script to do it automatically! Keep feeding the bots!

This. The only thing better than not being in an invasive database is to poison said database until it becomes useless. I like to make deliberate mistakes in captchas too. Don’t give away good training data for free.

Are you blocking canvas access in your browser and/or have anti-fingerprinting features enabled?

Well my comment containing the word “Jetpack” was not auto-banned this time. But it took a cacheless browser refresh just to see my post in the first place. What a mess…

And yet another cacheless browser refresh was required just to see my post…

Same again – Cacheless refresh needed just to see my post in Firefox :-(

Are you new? It has always taken a cacheless browser refresh to see your posts. Next you will complain that you can’t reply to a post without cookies enabled.

@easy: I can pretty much guarantee I’ve been posting here on HaD much longer than you have! And yes, this cacheless refresh problem is not new, but now it has become a persistent annoyance. I don’t care about cookies, for the most part they are automatically “managed” on my machine. I try to never block ads here on HaD, they deserve to be paid.

And the “email me new comments” switch is still broken.

there was only one question on my mind and the answer is blue-black:

On a picture I tried it on: “The girl’s cardigan has a subtle imperfection—a small, almost invisible hole near the button”.

Too smartypants for its own good – it’s the buttonhole!

They are looking for people to run cardigan ads for.

If there were any “intelligence” here, it would know that a photo that has overall green lighting will make other objects appear green, and therefore no determination can be made about the subject’s clothing color.

Even more disturbing is the fact that it says “indicating an individualistic or solitary experience” since they are alone in the photo. It doesn’t consider that maybe someone else is taking the photo? Who trained this AI anyway?

Someone please ban all this stuff immediately. Humans are perfectly excellent at drawing incorrect conclusions and locking in on inaccurate opinions themselves without authoritative-seeming answers from some well-meaning but truly ignorant “intelligence” doing it for them.

I think the people in a position to ban it would still use it regardless. Other ideas?

FWIW this reads a little dishonest; they are prompting the API to include information about people: “age, race, emotion, or economic status”. Try it with a non-human subject, for instance:

“The spectrogram itself doesn’t depict people or their activities; it’s a representation of an audio recording. Therefore, there’s no information about the people involved or their characteristics like age, race, emotion, or economic status. It is likely that the audio was recorded digitally using a computer and processed for visualization. The specific camera or device is not directly observable; rather, the image itself is a product of software that processes audio data. The overall visual structure of the spectrogram suggests it’s a relatively long audio recording.”

Great work. These systems ought to reveal those instructions they are given up front, it would go a long way toward addressing my concern stated above.

When I feed it images I’ve photographed that don’t include any humans, it consistently tries to guess at my ethnicity, gender, and economic status, despite the absence of anything that would indicate them. These guesses seem to be full of ridiculous amounts of bias; for example, apropos of nothing it always guesses the photographer (me) to be Caucasian (correct), upper class (no), and male (nope), usually based on cues involving lighting and interests demonstrated.

But this is what it’s told to do. These are the datapoints that Google is interested in. Wealth, health condition, willingness to purchase…

I like the experiment of feeding it white noise to reveal what the prompt is!

Those points are what ente.io configured their query to Google’s APIs with; that’s where this comes off as dishonest.

If it was “give an accurate description of the photo” the results would be very different.

You too can pay out the nose to make a misleading website to ~make commentary on visual perception AIs~ market your product.

Good point! I think we missed the point initially that this website was “selling” a private image-storage service!

I fed it a few drawings of some of my characters and it did a pretty good job of describing them! I also found the ominous framing of the website around it to be absolutely hilarious as a result.

It’s taken me until now to realise that you’re the same Lewin who writes for the Autopian ;)

That’s it, I’m going Amish

Those “amusing assertions” are a lot less amusing when the system is being used to racial/social/class/income profile you.

What odds will someone give me on whether this is already being used in places to alert employees about people who look “undesirable”, are “theft risks”, or even just people who might “ruin” the aesthetic of your store and need to be escorted off the property?

I’m sorry ma’am but your credit rating and purchase history mean you shouldn’t be here. You need to leave before your presence disgusts our REAL customers. The police have already been called.