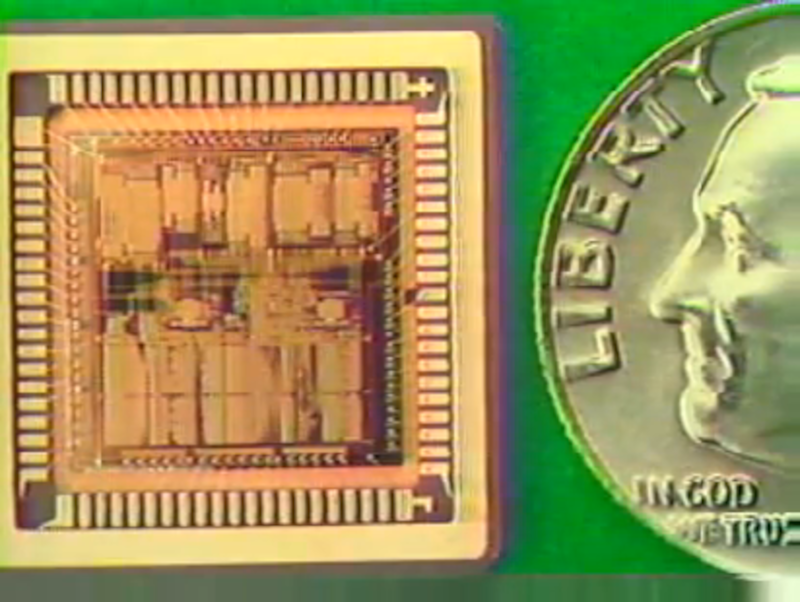

If you have never heard of the Bellmac-32, you aren’t alone. But it is a good bet that most, if not all, of the CPUs in your devices today use technology pioneered by this early 32-bit CPU. The chip was honored with the IEEE Milestone award, and [Willie Jones] explains why in a recent post in Spectrum.

The chip dates from the late 1970s. AT&T’s Bell Labs had a virtual monopoly on phones in the United States, but that was changing, and the government was pressing for divestiture. The upside was that regulators finally allowed Bell to enter the computing market. There was only one problem: everyone else had a huge head start.

There was only one thing to do. There was no point in trying to catch the leaders. Bell decided to leap ahead of the pack. In a time when 8-bit processors were the norm and there were nascent 16-bit processors, they produced a 32-bit processor that ran at a — for the time — snappy 2 MHz.

At the time (1978), most chips used PMOS or NMOS transistors, but Bellmac-32 used CMOS and was made to host compiled C programs. Problems with CMOS were often addressed using dynamic logic, but Bell used a different technique, domino logic, to meet their goals.

Domino logic lets devices cascade like falling dominoes in between clock pulses. By 1980, the device reached 2 MHz, and a second generation could reach speeds of up to 9 MHz. For contrast, the Intel 8088 from 1981 ran at 4.77 MHz and could move, at most, half as much data in a given time as the Bellmac-32. Of course, the 68000 was out a year earlier, but you could argue it was a 16-bit CPU, despite some 32-bit features.
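The cascade idea can be illustrated with a toy simulation. This is a minimal sketch and not a model of the actual Bellmac-32 circuits: the `DominoAnd` class and the `run_chain` helper are hypothetical names, and each gate is assumed to be a simple two-input AND with a precharged dynamic node followed by an inverter.

```python
class DominoAnd:
    """Toy model of one domino AND gate (dynamic node plus output inverter)."""

    def __init__(self):
        self.dynamic_node = 1  # starts precharged high
        self.output = 0        # inverter output is low after precharge

    def precharge(self):
        # Clock low: the dynamic node is pulled high, so the output goes low.
        self.dynamic_node = 1
        self.output = 0

    def evaluate(self, a, b):
        # Clock high: the node discharges only if both inputs are high.
        # It cannot recharge during this phase, so the output can only
        # make a 0 -> 1 transition, which is what lets gates be chained.
        if a and b:
            self.dynamic_node = 0
        self.output = 1 - self.dynamic_node
        return self.output


def run_chain(first_input, enables):
    """Evaluate a chain of gates within a single evaluate phase.

    Gate i computes (output of gate i-1) AND enables[i].
    Returns the list of gate outputs in chain order.
    """
    gates = [DominoAnd() for _ in enables]
    for gate in gates:
        gate.precharge()
    signal = first_input
    outputs = []
    for gate, enable in zip(gates, enables):
        signal = gate.evaluate(signal, enable)
        outputs.append(signal)
    return outputs


print(run_chain(1, [1, 1, 1]))  # every stage "falls" in turn
print(run_chain(1, [1, 0, 1]))  # the cascade stops at the blocked stage
```

In the second call, the stage with a low enable input never discharges, so everything after it stays at its precharged value, much like a row of dominoes with one piece removed.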

It is fun to imagine what life would be like today if we had fast 32-bit Unix machines widely available in the early 1980s. History has shown that many of Bellmac’s decisions were correct. CMOS was the future. Many of the design and testing techniques would go on to become standard operating procedure across the industry. But, as for the Bellmac-32, it didn’t really get the attention it deserved. It did go on in the AT&T 3B computers as the WE 32×00 family of CPUs.

You can check out a 1982 promo video about the CPU below, which also explains domino logic. Instruction sets have changed a bit since then. You can see a 68000 and 8086 face off, and imagine how the Bellmac would have done in comparison.

That line about “we don’t have to, we’re the phone company” would make Microsoft look tame.

lol; “one ringy-dingy…”

My first job, we had a Data General Eclipse-based Danray PBX. The guys who watched over it had a quirky sense of humor. Inside their office, above the door, where you normally wouldn’t see it, they had a bumper sticker, with the Bell logo, and “WE DON’T CARE WE DON’T HAVE TO”

They helped me when I was doing a voice messaging system, by setting up an E&M trunk and helping me to interface to it. They also gave me my first copy of Notes on the Network. This was in the late 80s, so after the breakup of the Bell System.

you can tell it’s the 70’s by the ashtrays in the dev meeting

One of my jobs used to be to support the servers and storage arrays at a very big tobacco company’s UK offices. They were attached to a distribution warehouse, so the whole site was customs bonded. Staff could buy tobacco products at cost (but couldn’t take them off site) and could smoke at their desks, so every desk had an ashtray and the place absolutely stank of tobacco smoke.

That was the case all the way up to July 2007

Funny, reading the post I thought this was an architecture that was never actually used, some kind of “might have been”. Then I Googled it and discovered it was the processor used in the 3B2 line. I owned a couple in the mid 90’s, one bought from a BBS friend and another from a college junk sale. And as I recall, they were pretty common – I’d had the chance to buy a couple others but didn’t.

From the article above, “It did go on in the AT&T 3B computers as the WE 32×00 family of CPUs.”

The M68000 is arguably a full 32-bit processor. The original CPU would have handled an OS and user applications up to 4GB if the address bus had been 32-bits instead of 24-bits. In fact it could have handled 8GB or more with a split user/supervisor space, thanks to the FC pins. Its only limitation for full 32-bit code is the 16-bit offsets, but that’s not so different to many early RISC processors and can be worked around (e.g. MOVE.L #offset,D7: MOVE.L 0(A0,D7.L),D0).
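The address-space figures in this comment are simple powers of two. A minimal sketch of the arithmetic, where the helper name `address_space_bytes` is made up for illustration and the doubling for a user/supervisor split follows the commenter's reasoning:

```python
MIB = 2**20
GIB = 2**30


def address_space_bytes(address_bits):
    """Number of distinct byte addresses reachable with this many address bits."""
    return 2**address_bits


as_built = address_space_bytes(24)   # the 24 address bits the 68000 exposes
full_width = address_space_bytes(32) # if all 32 bits had been brought out
split = 2 * full_width               # separate user and supervisor spaces

print(as_built // MIB, "MiB")    # 16 MiB
print(full_width // GIB, "GiB")  # 4 GiB
print(split // GIB, "GiB")       # 8 GiB

# A 16-bit displacement is sign-extended, so on its own it reaches only
# this far from the base register; a 32-bit index register lifts that limit.
print(-(2**15), "to", 2**15 - 1)  # -32768 to 32767
```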

Which brings us to ARM, which accomplished a comparable 32-bit processor in 1/6 of the number of transistors as the BellMac-32.

I did several designs with it. Internally, it looks very much like a PDP-11. I like its architecture much more than the x86. Intel won because they got there first, not because the architecture was the best.

“Intel won because they got there first” I think that’s partly true. Pretty sure the 8086 wasn’t quite the first 16-bit microprocessor. The GI CP1600 beat it, and it was very much like a PDP-11. The CP1600 might not have been the first either.

In 1977 Intel were already having issues, because the 32-bit iAPX 432 (started in 1975) was soaking up resources and delivering little. But they had a customer who wanted a CPU with more than 128kB of address space, so as a stop-gap they implemented the 8086 and, importantly, made it upward compatible with the 8080 at the assembly level.

The 8086 gave Intel enormous leverage, because CP/M machines already dominated the business world so they might have won anyway. However, IBM’s choice of the 8086 pretty much sealed it, IMHO because “Nobody ever got fired for buying IBM.”

The bit depth of any CPU certainly depends on where you look. To a software engineer, the 68000 is 32-bit, no question, regardless of the address space supported. To a hardware engineer, it’s a 16-bit chip because it’s got a 16-bit data bus. To a chip engineer, it’s a 16-bit processor because it’s got 16-bit ALUs (three of them) and some 16-bit data paths, but also a 32-bit processor because it’s got 32-bit registers.

In Computer architecture, the Bitty-ness of a computer is defined by the size of arithmetic and logic a CPU can handle in a single instruction.

It’s not even really the internal size of the ALU. The Z80, an 8-bit CPU had a 4-bit ALU that was double-pumped to perform like an 8-bit ALU. The early Data General Nova minicomputers also had a 4-bit ALU, but were always (and correctly) called 16-bit computers, because the ALU iterated 4 times across a register to perform 16-bit arithmetic and logic operations (it could do a 16-bit AND operation; and 16-bit shifts/rotates).
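The double-pumping idea can be sketched in a few lines. This is an illustration of the general technique only, not of the actual Z80 or Nova datapaths; `add_nibble_serial` is a hypothetical helper that reuses a 4-bit adder once per nibble and passes the carry along.

```python
def add_nibble_serial(a, b, width_bits, carry_in=0):
    """Add two width_bits-wide values using only a 4-bit adder.

    Returns (result, carry_out, passes), where passes is the number of
    trips through the 4-bit adder.
    """
    assert width_bits % 4 == 0, "width must be a whole number of nibbles"
    result = 0
    carry = carry_in
    passes = width_bits // 4
    for i in range(passes):
        shift = 4 * i
        nibble_a = (a >> shift) & 0xF
        nibble_b = (b >> shift) & 0xF
        total = nibble_a + nibble_b + carry   # at most 15 + 15 + 1 = 31
        result |= (total & 0xF) << shift      # keep the low 4 bits
        carry = total >> 4                    # carry into the next nibble
    return result, carry, passes


# 8-bit add in two passes, as with a double-pumped 4-bit ALU
print(add_nibble_serial(0x3C, 0x0F, 8))      # (75, 0, 2), i.e. 0x4B
# 16-bit add in four passes
print(add_nibble_serial(0xFFFF, 0x0001, 16)) # (0, 1, 4)
```

From the programmer's side the instruction still adds a full register in one go; only the number of internal passes changes.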

It’s honestly not the size of the data bus, because then the 80386sx would have been called a 16-bit processor, but it never was. The Pentium 1 would have been called a 64-bit processor, but it never was. The 8088 would have been called an 8-bit processor, but it never was.

That term, I’m afraid, is only ever used to refer to the data bus for marketing purposes.

The 16-bit bus also meant that 32-bit operations were slower than 16-bit ones. There were more weird things in its implementation.
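A rough way to see the cost of a narrow bus is to count how many bus transfers one operand needs. This is a simplified sketch: `bus_transfers` is a hypothetical helper, and it assumes aligned operands and ignores prefetch queues, wait states, and everything else that affects real timing.

```python
def bus_transfers(operand_bits, bus_bits):
    """Minimum number of bus transfers to move one operand (ceiling division)."""
    return -(-operand_bits // bus_bits)


# (operand width, data bus width) pairs for chips mentioned in this thread
cases = {
    "68000, 16-bit operand": (16, 16),
    "68000, 32-bit operand": (32, 16),
    "68008, 32-bit operand": (32, 8),
    "8088, 16-bit operand": (16, 8),
    "8086, 16-bit operand": (16, 16),
}

for name, (operand_bits, bus_bits) in cases.items():
    print(f"{name}: {bus_transfers(operand_bits, bus_bits)} transfer(s)")
```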

Anyone else thinking we need a VHDL core or complete emulator for it?

:)

The datasheet says the 8088 could run at 5 MHz. It was the IBM PC that ran at 4.77 MHz. Not that it changes much, though.

With a modern gaming liquid nitrogen cooling setup it’s probably possible to easily overclock an 8088 to 4 GHz. As usual, only the heat is the limit, and if you have lots of liquid flowing to the CPU at -196°C then it can remove plenty of heat. I bet LTT could do it for a fun challenge.

citation needed

Smith, David A. Overclocking: A Practical Guide. New York: Tech Press, 2015.

Brey, Barry B. The Intel Microprocessors. 8th ed. Upper Saddle River, NJ: Pearson, 2013.

Hennessy, John L., and David A. Patterson. Computer Architecture: A Quantitative Approach. 5th ed. Cambridge, MA: Morgan Kaufmann, 2011.

Baer, Jean-Loup. Microprocessor Architecture: From Simple Pipelines to Chip Multiprocessors. Cambridge: Cambridge University Press, 2010.

“Overclocking a CPU to 7 GHz with the Science of Liquid Nitrogen.” PC Gamer, June 9, 2017.

The one in the list that I could search for was absolutely not about an 8088.

Best I can find for the 8088 is around 12MHz, just a tad shy of 4GHz you might say.

There’s a lot more to CPU speed limits than just heat.

The magic smoke is another.

I very much doubt the 8088 transistors could transition at 1000X the speed designed for, cold or not cold.

And that’s before even mentioning signal propagation, degradation, interference, capacitive effects, and interfacing with the rest of the chips in the system to achieve something useful, etc.

If you claim something like that on a technical website, or on one that does things “because we can”, you’d better provide some evidence, don’t you think?

Let him be, he’s one of those blank pseudo tech channel (LTT) watchers, they’re all messed up.

Hewlett-Packard developed and sold, in the HP9000 Series 500, a full 32-bit single chip CPU that hit the market in 1982 and ran at 14MHz. It is generally acknowledged as the first commercial 32-bit microprocessor, the 68000 being 16/32-bit, and the Bellmac not being a commercial offering. Based on the date on the video it may have been slightly earlier than the Bellmac as well.

Pls ignore, fat finger mistake.

I once got a job offer from the HP division that developed the 9000/500, but turned it down to work at Bell Labs. During my HP interview, they asked “What five instructions does a RISC processor need, minimum?” I demonstrated that only four were needed – they loved that answer.

Unfortunately, HP (as a superb engineering company) and Bell Labs are long gone. Both had a commitment to R&D unmatched in recent memory.

In the late 90s a friend dumpster-dived an AT&T 3B2-310 and gave it to me, correctly surmising that I’d enjoy playing around with an old System V server, and it was a fun machine to work with. Eventually its hard drive failed, and though I managed to find a compatible replacement, I found that one of the big stack of floppies for the OS install had developed some bad sectors sometime in the 14 years since they were last used, so that was the end of that.

Still, from playing with the compiler and assembler I can attest that its oddball CPU was closer to a modern 32-bit machine than anything else from that era =:-)

From the linked article: “The 16-bit Intel 8088 processor inside IBM’s original PC released in 1981 ran at 4.77 MHz.”

The 8088 could handle 16-Bit instructions, but was 8-Bit, just like the IBM PC.

It was the 8086 which was a real 16-Bit chip (16-Bit registers, ALU and address bus), with 20-Bit address bus.

The NEC PC-9801 from 1982 was a real 16-Bit system using it, for example.

*16-Bit data bus
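For readers wondering how 16-bit registers reach a 20-bit address bus: the 8086 and 8088 form a physical address by shifting a 16-bit segment value left four bits and adding a 16-bit offset. A minimal sketch of that calculation, with `physical_address` as an illustrative helper name:

```python
ADDRESS_MASK = 0xFFFFF  # 20 address lines


def physical_address(segment, offset):
    """Real-mode address: segment * 16 + offset, truncated to 20 bits."""
    return ((segment << 4) + offset) & ADDRESS_MASK


print(hex(physical_address(0xB800, 0x0000)))  # 0xb8000
print(hex(physical_address(0x1234, 0x0010)))  # 0x12350
# The sum can overflow 20 bits; with only 20 address lines it wraps around.
print(hex(physical_address(0xFFFF, 0x0010)))  # 0x0
```

The same calculation applies to both chips, since they differ in data bus width, not in how addresses are formed.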

In Computer architecture, the Bitty-ness of a computer is defined by the size of arithmetic and logic a CPU can handle in a single instruction.

It’s not even really the internal size of the ALU. The Z80, an 8-bit CPU had a 4-bit ALU that was double-pumped to perform like an 8-bit ALU. The early Data General Nova minicomputers also had a 4-bit ALU, but were always (and correctly) called 16-bit computers, because the ALU iterated 4 times across a register to perform 16-bit arithmetic and logic operations (it could do a 16-bit AND operation; and 16-bit shifts/rotates).

It’s honestly not the size of the data bus, because then the 80386sx would have been called a 16-bit processor, but it never was. The Pentium 1 would have been called a 64-bit processor, but it never was. The 8088 would have been called an 8-bit processor, but it never was.

That term, I’m afraid, is only ever used to refer to the data bus for marketing purposes.

No, it’s not that simple. Look what Dave Haynie wrote.

The IBM PC was an 8-Bit design and the 8088 was an 8-Bit CPU from point of view of the support chips.

I did, and corrected him earlier. I’ve given you a list of examples of computers and CPUs that would have had totally different designations if the bus width or internal chip design determined whether the processor was 4-bit/8-bit/16-bit or 32-bit.

The interface logic for the original IBM PC wasn’t the same as for IBM’s 8085 based predecessor, because the CPU interface was nearly the same as for the 8086 apart from the data bus width and a couple of control pins. Namely, the meanings of the control pins worked the same way on the 8088 and 8086 and had the same pins. The only differences are that A0 is present on the 8088 and that D8..D15 aren’t shared with A8..A15 on the 8088 as they are with the 8086. IBM could not have taken their 8085 predecessor and substituted an 8088 to get an IBM PC; neither could they take an IBM PC and substitute an 8085 to get a working computer: the differences are more than just a data bus.

However, you could take an 8086 and add a few latches to turn it into an 8088 and, similarly, take an 8088 and add some latches to turn it into a (slower) 8086.

Other examples: the WDC65C816 is the 16-bit successor to the 6502, but it still only has an 8-bit data bus. It is a 16-bit CPU. The Sinclair QL was never described as an 8-bit computer even though it had a Motorola 68008 CPU (a 32-bit MC68000 with an 8-bit data bus).

Honestly, it saves a lot of convoluted explanations for the Bitty-ness of a CPU if one just accepts it’s the natural size of data in a register that a Central Processing Unit can process in a single instruction.

The Z80 has 16 bit add and subtract, with and without carry/borrow.

I worked on this project as a summer student between Cornell undergrad and Stanford graduate school. I wrote the code for the “Graybox” that tested the microprocessor after manufacture.