Originally known as FORTRAN, but officially written as Fortran since the Fortran 90 standard, this language was developed by a team led by John Backus at IBM to make writing programs for the IBM 704 mainframe easier. Introduced in 1954, the 704 had the honor of being the first mass-produced computer with hardware support for floating-point calculations. This opened it up to a whole new dimension of scientific computing, with users including Bell Labs, US national laboratories, NACA (later NASA), and many universities.

Much of this work involved turning equations for fluid dynamics and similar problems into programs that could run on mainframes like the 704. This translation of formulas used to be done tediously in assembly language, until Backus' Formula Translator (FORTRAN) was introduced to remove most of that tedium. With it, engineers and physicists could focus on their actual work and on generating results, rather than on the minutiae of assembly code. Decades later, this is still what Fortran is used for: it serves as a domain-specific language (DSL) for scientific computing and related fields.

In this introduction to Fortran 90 and its later updates, we will look at what makes Fortran still such a good choice today, as well as how to get started with it.

Modern Fortran

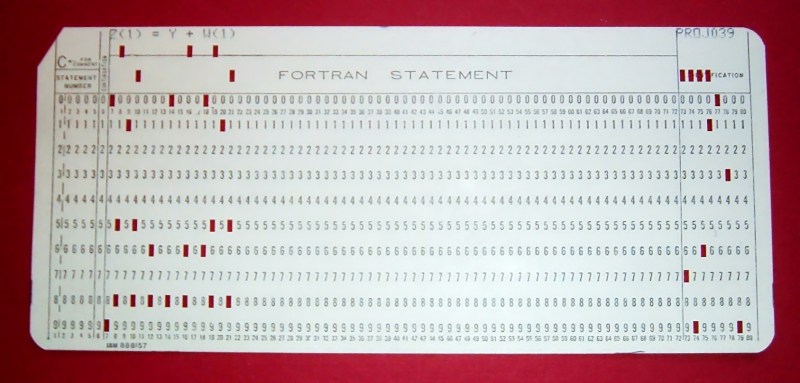

The release of the Fortran 90 (F90) specification in 1991 was the first major update to the language since Fortran 77. It introduced many usability improvements and lifted punch-card-era restrictions, such as those on source layout and variable name length. This is why we focus on F90 here: there is no real reason to use F77 or earlier unless you're maintaining a legacy codebase, or you have a stack of new cards that need punching. If you are dying to know what changed, the Wikibooks Fortran Examples article has examples ranging from early FORTRAN all the way to modern Fortran.

Of note here is that a modern Fortran compiler like GCC's GFortran (forked from g95) still supports F77, but users are strongly encouraged to move on to at least Fortran 95, a minor update to F90. GFortran supports the standards up to F2008, including coarrays, as covered later. F2018 support is still a work in progress as of writing, but many of its features are already available.

Support for the latest standard (F2023) is not yet widely available outside of commercial compilers, but since F2023 is a minor extension of F2018, its features should follow as F2018 support matures. For now, this means F2008 is the latest standard that new Fortran code can reliably target across toolchains.

Beyond GFortran there are a few more options, including Flang (part of LLVM) and LFortran, in addition to a gaggle of commercial offerings. Unless you intend to run high-performance computing code on massively parallel clusters like supercomputers, the GNU and LLVM offerings will probably suffice. Simply install GFortran or Flang from your package manager or equivalent, and you should be ready to start programming in Fortran.

Hello World

As with most DSLs, there is very little preamble before you can start writing the business logic, and the 'Hello World' example is suitably succinct:

```fortran
program helloworld
  print *, "Hello, World!"
end program helloworld
```

The program name follows the opening program keyword and is repeated after the closing end program, similar to languages like Ada and Pascal. The program name does not have to match the name of the file. Although no specification dictates the file extension of a Fortran source file, convention is to use .f or .for for F77 and older, while F90 and newer generally use .f90. Some opt for extensions like .f95 and .f03, but this is confusing, is not recognized by all compilers, and gains nothing, since these are all the same kind of free-form Fortran source file anyway.

Tl;dr: Use .f90 for modern Fortran source files. Our Hello World example goes into a file called hello_world.f90.

The other point of note in this basic example is the print statement, which looks somewhat cryptic but is simple to use. Its first argument is the format, reminiscent of C's printf. The asterisk simply means that the default (list-directed) format is used for the provided values, but we could, for example, print the first string as an 11-character field and a string variable as an 8-character field:

```fortran
character(len=8) :: name = 'Karl'      ! fixed-length string, padded with blanks
print '(a11,a8)', 'My name is ', name  ! 11-wide field, then 8-wide field
```
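The a descriptor above handles character data; numeric values have their own edit descriptors. A minimal sketch (the program name and values are illustrative) of three common ones: iw for integers, fw.d for fixed-point reals, and esw.d for scientific notation, where w is the field width and d the number of digits after the decimal point:

```fortran
program format_demo
  implicit none
  integer :: n = 42
  real    :: x = 3.14159

  print '(a, i5)',    'n =', n   ! integer, right-justified in 5 columns: "n =   42"
  print '(a, f8.3)',  'x =', x   ! 8 columns, 3 decimals: "x =   3.142"
  print '(a, es12.4)', 'x =', x  ! scientific notation, 12 columns: "x =  3.1416E+00"
end program format_demo
```

Unlike list-directed output with *, an explicit format gives exact control over column positions, which is what made Fortran popular for tabular reports.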

This also shows how to declare and initialize a variable in Fortran. Note that unless you put implicit none at the top of a program unit, any undeclared variable whose name begins with I through N is implicitly an integer, and any other is implicitly real. With gfortran you can also enforce this globally by compiling with the -fimplicit-none flag.
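A minimal sketch of the implicit typing rule, written deliberately without implicit none (the variable names are illustrative). Adding implicit none after the program statement, or compiling with -fimplicit-none, turns both assignments into compile errors because neither variable is declared, which is exactly the point: a misspelled name can no longer silently become a new variable.

```fortran
! Deliberately written WITHOUT implicit none to show default typing.
program implicit_typing
  ! Nothing is declared, so the first letter of each name decides its type.
  items = 7.9   ! starts with 'i': implicitly integer, so 7.9 is truncated to 7
  total = 7     ! starts with 't': implicitly real, so it holds 7.0
  print *, items, total
end program implicit_typing
```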

Fortran has five intrinsic types:

- real
- integer
- logical (boolean)
- complex
- character
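A minimal sketch declaring one variable of each intrinsic type, using the default kinds (the names and values are illustrative):

```fortran
program intrinsic_types
  implicit none
  real             :: g = 9.81            ! default-kind floating point
  integer          :: steps = 100
  logical          :: converged = .false. ! either .true. or .false.
  complex          :: z = (1.0, -2.0)     ! (real part, imaginary part)
  character(len=5) :: label = 'probe'     ! fixed-length string

  converged = steps > 50                  ! relational expressions yield logicals
  print *, g, steps, converged
  print *, z
  print *, label
end program intrinsic_types
```

Note that complex is a built-in type with its own literal syntax, rather than a library add-on as in C.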

Finally, comments in Fortran start with an exclamation mark (!) and run to the end of the line. It's also worth noting that Fortran, like all good programming languages, is case insensitive, so you can still write your Fortran code like it's F77 or Fortran II, yelling in all caps without anyone but the people reading your code batting an eye.
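A short sketch of both points (the program and variable names are illustrative): the three spellings of the variable below all refer to the same storage, and even the program name is matched without regard to case.

```fortran
PROGRAM Case_Demo
  IMPLICIT NONE
  integer :: Total    ! 'Total', 'TOTAL' and 'total' all name the same variable
  total = 2
  TOTAL = Total + 3   ! everything after '!' on a line is a comment
  Print *, total      ! prints the value 5
END PROGRAM case_demo
```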

Hello Science

Now that we have a handle on the basics of Fortran, we can look at some fun stuff that Fortran makes really easy. Perhaps unsurprisingly for a DSL that targets scientific computing, much of this fun stuff is about making that kind of computing as easy as possible. A lot of it lives in Fortran's intrinsic procedures, which make working with real, integer and complex values quite straightforward.

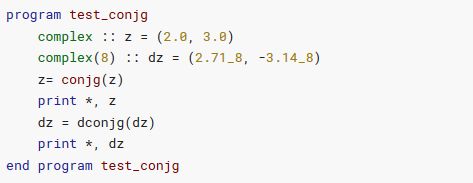

For example, a complex number can be conjugated with the conjg intrinsic.
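A minimal sketch of conjg alongside a few related intrinsics (the program name and values are illustrative). Multiplying a number by its conjugate gives a real result equal to the squared modulus, which abs returns directly:

```fortran
program conjugate_demo
  implicit none
  complex :: z, zc

  z  = (3.0, 4.0)                 ! 3 + 4i
  zc = conjg(z)                   ! 3 - 4i
  print *, 'z         =', z
  print *, 'conjg(z)  =', zc
  print *, 'z*conjg(z)=', z * zc  ! (25, 0): the squared modulus
  print *, 'abs(z)    =', abs(z)  ! modulus, 5
  print *, 'parts     =', real(zc), aimag(zc)
end program conjugate_demo
```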

Basically, whatever mathematical operation you wish to perform, Fortran likely has you covered, allowing you to translate your formulas into a working program without having to bother with external dependencies. This includes working with whole arrays and matrices, as well as getting into the weeds with numerical precision.
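A minimal sketch of both, using only standard intrinsics (the program name and matrix values are illustrative). selected_real_kind asks the compiler for a real kind with at least 15 significant decimal digits, which on common hardware means IEEE double precision, and whole-array expressions need no explicit loops:

```fortran
program matrix_precision
  implicit none
  ! Kind with at least 15 significant decimal digits.
  integer, parameter :: dp = selected_real_kind(p=15)
  real(dp) :: a(2,2), b(2,2), c(2,2)

  ! reshape fills column by column, so a = [1 3; 2 4]
  a = reshape([1.0_dp, 2.0_dp, 3.0_dp, 4.0_dp], [2, 2])
  b = transpose(a)                  ! b = [1 2; 3 4]
  c = matmul(a, b)                  ! ordinary matrix product

  print *, 'c(1,:) =', c(1,:)
  print *, 'c(2,:) =', c(2,:)
  print *, 'sum of squares =', sum(a**2)     ! elementwise power, then sum
  print *, 'decimal digits =', precision(1.0_dp)
  print *, 'epsilon        =', epsilon(1.0_dp)
end program matrix_precision
```

Here the rows of c come out as 10, 14 and 14, 20, and the sum of squares is 30. Declaring every real as real(dp) keeps the chosen precision in one place, so switching the whole program to another kind is a one-line change.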

Even better, you're not stuck running your code on a single CPU core either. Since Fortran 2008, Coarray Fortran (CAF) has been part of the standard. It enables parallel processing, which is generally implemented on top of the Message Passing Interface (MPI), and gfortran supports it. Depending on the option selected with the -fcoarray= flag, gfortran can either use the 'single' option, which runs a single image (one copy of the program), or, with a library like OpenCoarrays, use MPI, GASNet, and others.

When using CAF with MPI, images of the program are distributed across the nodes in the MPI cluster according to its configuration, with synchronization occurring where the program defines it. With OpenCoarrays available from many OS package repositories, any budding molecular scientist or astrophysicist can set up their own MPI cluster and start running simulations with relatively little effort.
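A minimal sketch of the coarray model, assuming gfortran with -fcoarray=single for a one-image test run; with OpenCoarrays installed, its caf compiler wrapper and cafrun launcher can run the same source on several images. The program name and the per-image work are illustrative:

```fortran
! Single-image build: gfortran -fcoarray=single coarray_demo.f90
program coarray_demo
  implicit none
  integer :: partial[*]   ! the [*] makes one copy of 'partial' on every image
  integer :: i, total

  ! Each image computes its own share of the work.
  partial = this_image() ** 2
  sync all                ! wait until every image has written its value

  ! Image 1 gathers the results by reading the other images' copies.
  if (this_image() == 1) then
    total = 0
    do i = 1, num_images()
      total = total + partial[i]
    end do
    print *, 'images:', num_images(), ' sum of squares:', total
  end if
end program coarray_demo
```

The square brackets are the only new syntax: partial is a local variable, while partial[i] reads the copy on image i. The sync all barrier is what keeps image 1 from reading a value another image has not written yet.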

The DSL Life

As with COBOL, which we looked at previously, a DSL like Fortran is often misunderstood as 'yet another programming language', just as in the 1980s some thought that the scientific and engineering communities were going to switch over to Pascal or Modula-2. One simple reason that didn't happen lies in the very nature of DSLs, which are developed explicitly to deal with a specific domain. Anything a DSL does can always be done in a general-purpose programming language; a DSL can simply be optimized more precisely for its task, and is often easier to maintain, since it is not generic.

There are many things that Fortran does not have to concern itself with, yet which haunt languages like C and its kin. Meanwhile, reimplementing Fortran's functionality in C would come at considerable cost, run into limitations of the language, and potentially require compromises to get close to what the DSL offers out of the box.

Picture a cluster running Coarray Fortran code, churning through complex simulations that require utmost control over precision, where one small flaw can waste days of very expensive calculations. In that kind of scenario you're not looking for some generic language to poorly reinvent the wheel; you keep using the same, yet much more refined, wheel that has gotten us this far.

Fortran has a unique language feature.

The calculated GOTO.

As in GOTO intvar

Where intvar contains a linenumber.

For some reason, nobody takes my requests to add a calculated COMEFROM to the language seriously.
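For reference, a jump to a label held in an integer variable was Fortran's assigned GO TO (set up with the ASSIGN statement), which Fortran 95 deleted from the standard. The related computed GO TO, which picks a label from a list by index, is only obsolescent and still compiles with gfortran. A minimal sketch (the program name and labels are illustrative) contrasting it with SELECT CASE, its structured replacement:

```fortran
program jump_demo
  implicit none
  integer :: k

  do k = 1, 3
    ! Computed GO TO: jump to the k-th label in the list.
    go to (10, 20, 30) k
10  print *, 'branch one'
    go to 40
20  print *, 'branch two'
    go to 40
30  print *, 'branch three'
40  continue

    ! The structured equivalent, with no labels to keep track of.
    select case (k)
    case (1)
      print *, 'case one'
    case (2)
      print *, 'case two'
    case default
      print *, 'case three'
    end select
  end do
end program jump_demo
```

If k falls outside the range of the label list, the computed GO TO does nothing and execution continues with the next statement, which is one more reason SELECT CASE, with its explicit default branch, is preferred in new code.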

They should at least add the calculated goto to rust.

That and adding support for self modifying code.

What kind of nerfed language won’t let you self modify the code?

It’s no wonder the kids just said ‘F it, we’re using JS for everything!’

Thought…

Can JS get access to a site’s JS via the DOM and self-modify the code?

Node.js really should contain at least one piece of self modifying code, just for completeness of the pig Fing.

I digress.

Self-modifying code doesn’t exist in Rust because runtime polymorphism and metamorphism are intrinsically unsafe.

You know what else is unsafe? Life, yet we do it all the time.

I don’t care about rust and its artistic fanatics doing artistic screeching every time some C programmer uses a pointer.

fwiw the proper C mechanism for calculated goto is switch/case. many compilers also allow you to save &&label into a void*var; and do goto *var;, which you could combine with an array of pointers to achieve the same effect. switch/case is better.

Lacks the simple elegance of Goto linenum.

Won’t see such gems as:

```
NextIterEntryPt = NextIterEntryPt + recalcHydroShadowPrices
NextIterEntryPt = NextIterEntryPt + recalcPipelines
```

Which of course requires a bunch of code to sit at magic linenumbers.

And as they hadn’t had the sense to make each offset a power of 2, they had no way of checking if any of the offsets were already added to NextIterEntryPt.

Of course the right way was just booleans and code at the top of the loop that does the right recalcs.

Technical debt.

What can you say about applied math Phds?

For sure, never let them design databases.

Yes, I saw this in the real world on real code, likely used by some of your local utilities to forecast grid operations.

I should have gotten out of there even sooner.

no it doesn’t lack the simple elegance of goto linenum :)

ugh i hope i get the mark up correct

```c
switch (goto_value) {
case 10: printf("hello world\n");
case 20: goto_value = 10; break;
}
```

switch really is exactly the same as line numbers :)

.. and in the PLC world, we use it to implement steps/state machines, preferably for about everything (ST: CASE pc OF … ), making industrial machinery very deterministic Moore machines.

There is more than one way to drown a litter of kittens.

You are missing the point, nextIterEntry is state.

It is modified throughout the code.

Not quite. cases are stuck in the switch block. Fortran goto has no such limit.

I learned to code on the mighty Sinclair ZX81 and that could do calculated GOTOs. Just saying.

Your wish had been granted long ago. Phi nodes in SSA are, essentially, a COMEFROM.

A calculated Comefrom.

Whenever the program counter matches an intvar, it jumps to the comefrom statement.

Even if the comefrom has not executed yet.

Not 100% unique – Sinclair BASIC offered the same feature, suggesting in the manual that it could be used in place of ON…GOTO (which was not offered).

BBC BASIC has it also

Fortran makes me facepalm. It’s as verbose as Python, yet with all the issues that are also present in C++.

If you need to do university-level math then MATLAB can be 🏴☠️downloaded🏴☠️ for free from the 🕸 and it also has Simulink. If you need direct-to-machine code then it’s easier to just use C++. For graphical stuff nowadays there’s choice: UE5, Unity etc.

With the difference that Matlab is interpreted and very slow compared to compiled Fortran. Matlab is good for proving out the math, but the heavy lifting should definitely be done by Fortran, with ATLAS instead of BLAS. It becomes up to 100x faster than Matlab. Had to do all that in grad school. Oh, and nothing wrong with verbose, can read code from 1970 easily enough.

Matlab being slow is nowadays a myth, you can even use ATLAS instead of BLAS should you want to. Do not be afraid to give it a try even for “heavy lifting”

However, a Real Programmer can do anything in FORTRAN.

You want verbose, you hire a COBOL weenie.

Or you could just use GNU Octave

It was good enough for all my university courses.

in the late 1960s one could learn from Daniel McCracken’s A Guide to Fortran IV Programming.

And there were subtle distinctions from Fortran II, which was on the old IBM 7000 series mainframes.

Yes! That’s the book I used at the University to help me become a better programmer.

FORTRAN, used in EE university for a few courses – actually had a few advantages over the usual languages.

For highly controlled print formats, doing numerical analysis, massive equations – it’s great for that.

But I always blew the stack, one mistake with a missing/extra parameter and it craters. It does crash hard.

I have no desire to go back to fortran again. Fortran IV was my first actual language (360 assembly, and a couple others, came first) and I still get the occasional shakes thinking about tracking down bugs in complicated code. Then repunching the card or cards. (Who forgot to turn on the automatic serializer on the cardpunch, then dropped a deck? fun times)

Ratfor was a hella improvement, in the sense that it reduced the hardcore spaghetti factor, but I still chose Pascal or Lisp when I could, for the easier debugging, if that says anything about how much I do not miss it..

Don’t know about your education, but mine was: pull deck from keypunch, add Sharpie diagonal stripe across eleven edge, waller. If you reassembled a deck wrong, the diagonal shows blanks and offset cards. Nothing a sorter wouldn’t fix. Oh, you didn’t number your cards? Oh well.

Started with keypunch as a student, and still dealt with them until the mid-1980’s or so. In that world, for me, VDT transition was a 5252 connected to a system/34 in maybe 1980 or 81. Eliminated the need for serializer, as the editor did dynamic numbering. Then 3278’s and televideo’s connected to a 360, upgraded to 370, at which point I was not doing much fortran,, mostly assembler, some Pascal, C, and Ada. Then eventually a 3090 soon before I shifted and haven’t touched big iron since, professionally.

In the early days, the worst were the guys with routine jobs in line ahead of you. Four JCL cards and a reel of tape in hand, the cards to SREQ a tape mount and standard load, well used, swelled with sweat, itching to jam the feed on the card reader. Me, with two boxes, minimally touched by human hands, never a fault.

My least favourite runs using cards were SPICE simulations. Order wasn’t that big of a deal for most blocks, so scrambling a deck was fairly easy to deal with, but fixing netlist errors with no search function was maddening.

i am surprised that my disagreement isn’t a nitpick but rather the article’s repeated explicit main thrust!

i do not think fortran is a DSL. it is YAPL.

the thing about fortran is that it’s seminal for so many things that we take for granted in every programming language today. it didn’t elide subroutine calls because scientists don’t need them. it lacked them because it was approximately the first “third generation programming language”! fortran wasn’t against function calls…rather the opposite. its most impressive result is showing that these problems are not only solvable but actually easy to solve. it inspired everyone that came after it to think of the incredible feats of mechanical language translation that are possible.

Backus himself is quite explicit about it being Yet Another Programming Language in a 1978 retrospective on fortran. in as many words he says that the design goal of the language was simply to have something that could justify the creation of a compiler. even in 1978, he knew he had to beg the audience to put themselves in the mindset of 1954 so that they could understand how incredibly primitive fortran’s innovations were https://dl.acm.org/doi/abs/10.1145/960118.808380

and Backus himself became fond of functional style, once he had seen a couple decades of progress built on top of fortran’s core innovations. it’s clear that he views the 1950s as “trapped in the Von Neumann style” https://dl.acm.org/doi/10.1145/359576.359579

fortran the language is just a footnote to the invention of the concept of a compiler for a high level language. the expression syntax wasn’t designed around the needs of scientists so much as it was designed around the theory that something new could exist in the world: an expression parser.

anyways in real life fortran’s limitations are all pain for actual scientists. 25 years ago i had a summer job porting a large fortran nightmare from VMS to Linux using the awful Absoft fortran compiler. unfortunately i didn’t have access to actual users of the program so i found that 99.99% of the code was ‘unscientific’ and specifically unsuited to fortran. it was all UI and I/O. the actual math turned out to be just a histogram!!!! in real life, scientific programmers are often buried under mountains of poorly-factored code to implement very clunky UIs on top of I/O hardware that doesn’t exist anymore. and fortran was just about the worst language for all of the above.

fortran itself belongs in the dustbin of history. it’s much worse for every problem domain than languages that came decades later. it is decidedly not like cobol or ada that are still valued within their domains.

but the accomplishment that it represents is the foundation of everything that came after. it is the first yet another programming language.

anyways i’m writing partly because i’m frustrated with the misrepresentations in the hackaday article. but i also hope some readers see the living history represented by these articles that Backus published which we can still read today. if anyone’s reading the articles i’m linking to, i hope they also glance at Guy Steele’s impassioned defense of function calls. if Bjarne Stroustrup had taken this seriously instead of delivering a meditation on the importance of inlining to mitigate the mythical expensive procedure call, we wouldn’t have to suffer C++ today. https://dl.acm.org/doi/10.1145/800179.810196

It kind of threw me when they referred to Fortran as a “DSL”. “What domain?”, I wondered. I remember that at least in the 1980s, Fortran was the standard programming language for any graphics application running on a VAX/MicroVAX/VAXstation. Alas, my involvement with VAXen was exclusively hardware, so I never got around to trying to write anything in Fortran. Consequently, while at least in principle I like its simplicity (vs. C/C++), I’m not triggered whenever the name comes up. But now I have to ask, what do you see as Ada’s domain?

not an expert on Ada but i have the impression that people who have used Ada in safety-critical systems love it. by contrast with C, C leaves a lot of things undefined because they want it to be efficient on any old piece of hardware. but as near as i can tell, Ada has the opposite mentality…everything is very rigidly defined, which has a small performance hit in some cases but makes verification easier

This article should come with a trigger warning! I spent far too much of my earlier career writing Fortran when I should have known better.

Started with pure F77, found F90/95 a huge improvement but it felt like the wheels fell off after that.

There is too much in the later functionality seemingly designed explicitly for the unwary programmer to shoot themselves in the foot.

Where are the FORMAT statements?
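For readers wondering the same: the format string passed to print in the article can also live in a separate, labeled FORMAT statement, which is how most F77-era code did it, and modern compilers still accept it. A minimal sketch (the program name, label and values are illustrative):

```fortran
program format_statement_demo
  implicit none
  integer :: i
  real    :: t

  do i = 1, 3
    t = 0.5 * real(i)
    write (*, 100) i, t, t**2   ! refers to the FORMAT labeled 100
  end do

  ! 1x: one blank; 'step': literal text; i3: integer in 3 columns;
  ! 2f8.3: two reals, each 8 columns wide with 3 decimals
100 format (1x, 'step', i3, 2f8.3)
end program format_statement_demo
```

This prints " step  1   0.500   0.250", then the same layout for steps 2 and 3. A FORMAT statement can be referenced from any number of read and write statements in the same program unit, which is why old codes often collect them at the end.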

I kinda learned FORTRAN after I already knew BASIC and it felt like a practical joke. With Basic on a microcomputer, like the Commodore PET at school or the Altair in my bedroom, you wrote and debugged a program interactively – and given my skills at the time, more time was spent debugging than writing.

With Fortran, you wrote your program on paper, punched the cards and then mailed (US Postal service) the cards to the computer and they mailed the results back. I never got anything to actually compile and run.

“learned FORTRAN after I already knew BASIC”, “With Basic on a microcomputer, like the Commodore PET at school or the Altair in my bedroom, you wrote and debugged a program interactively”

My Uni had an IBM S/370 Model 165-II, 8 MiB core memory in 1976 when I learned FORTRAN. The non-networked system cost in excess of $400K. Undergrads were only allowed to use punch cards; TSO terminals were assigned to some seniors and grad students.

BASIC on a $1K microcomputer was a joy in the late ’70’s.

ITERATIVE programming on a mini-mainframe was simply not allowed for beginners.

I remember receiving my JCL card with only 5 seconds of CPU time allowed per batch run. I would have sacrificed my M-I-L for Coarray Fortran back on my first massive FORTRAN project in the 1980s. Then came Delphi.

“The program name is specified right after the opening program keyword, which is repeated after the closing end program.” This is unclear. Which is repeated after the closing end program? the opening program keyword or the program name? Taken as read it’s the keyword.

“The program name which is repeated after the closing end program is specified right after the opening program keyword.” Per the writer’s example. Still needs editing, should be two sentences.

Calling all caps “yelling” is a tactic adopted by the early internet bullies (who often go by the name “mods”) and should be eschewed.

It is easier to read ‘mixed’ caps dialog between people. However, early on, every program (Basic is what I remember) was written in ‘upper case’.

I agree, notice I don’t use all caps but the bullies/mods always need a knuckle rapping.

That’s because the Model 33 Teletype machine, which was the go-to I/O terminal for interactive programming in the early 1970’s, did not support lowercase letters.

Nor does the 026 or 029 keypunch, which was the goto for programming when FORTRAN was invented.

I find it amusing somebody writing for a website that uses a font that turns everything into all caps, criticizing all caps.

Anyone else remember the “FORTRAN Coloring Book”?

I don’t … but here is a link to the book.

https://archive.org/details/9780262610261

Hmmm, Above url only gets you a couple of pages…

The following seems to have all the pages available.

https://archive.org/details/FortColBkKaufman/page/n39/mode/2up

Oh my gosh, yes! I took Dr. Kaufman’s FORTRAN course at MIT. We were given a mimeographed copy of the book, which was still a work in progress in 1976. I already knew FORTRAN from my summer job, so I didn’t use the book very much. But I still remember one gag: “The * is also known as the Nathan Hale operator, because he had but one asterisk for his country.”

Yes! I still have my instructor’s copy, from about 1979.

When I was in college, meteorology students were required to take an F77 course. The highlight of the course was creating a line editor (much like DOS’s EDLIN program). It was interesting for me because my dorm wasn’t equipped with many VT100 terminals, so I had to make do with the teletype that was repurposed as a printer.

Fast forward 4 years when the same students had to write numerical weather prediction programs. My classmates by that time were, like, “Time to put that F77 class to use so we can run our predictions on the campus mainframe.” I, on the other hand, had gotten ahold of a government surplus 8086-turbo and a copy of QBASIC. The program ran slowly (especially at double-precision), but at least I didn’t have to put in multiple weeks of debugging and finding every unwanted space and misplaced parenthesis like my classmates did.

When I entered college, the punch cards were on the way out. The seniors were the last to punch cards and wait for output from the data center. Missed that era by ‘that’ much! :D Yesss. Still most engineers (and of course all us CS majors) had to take Fortran. Also had a ‘programming landscape’ class to cover a lot of languages like Cobol, lisp, etc.) Pascal was the learning language of the times for CS. When I got a DEC Rainbow, I also was able to buy Turbo Pascal (1.0?) to write my ‘homework’ locally. Also bought Nevada Fortran as well at the time. Then upload to VAX and re-compile there. Saved a ‘lot’ of debugging time and phone time, or have to go to the lab and wait for a terminal. Fortran came in handy later as I helped maintain plasma boundary software (vehicle re-entry from space problems) for the scientists on the side of my main job. Nothing wrong with Fortran. It did and does the job then and now. I don’t dabble with it much anymore. I did buy an ‘up to date’ book on ‘modern’ Fortran to keep me up to date!

Interesting. I’ll probably get into Fortran at some point (likely after Ada). Right now I’m having to get used to using Python, as all the lab and engineering jobs in this part of the country primarily use Python and occasionally use Matlab for their simulations (at least the ones that mention it in the job listings).

Good writeup. My experience has been that people can write bad code in a great many different languages.

And good code too, for that matter.

But I still haven’t forgiven the computer scientists for telling me I had to switch to Algol. Wasted a lot of time on that one.

Wow,

Blast from the past.

My Masters Thesis (of 1983) was an adaptation of Green’s Theorem as a general purpose analysis package.

Unlike FEM, in which the entire volume must be discretized, Green’s Theorem only requires the surface of the body to be discretized. Unfortunately, it’s also singular at the point of application and requires a lot of hand waving not to crash the system.

It was all written in Fortran 77. Luckily my school allowed graduate students to use dumb terminals unlike under grads who still had to punch cards. I had the mini mag tape with my dissertation on it until not too long ago.

At the end of the day, my thesis concluded that it’s basically a technique that’s not really suitable for numerical analysis. My professor was not particularly happy about the outcome, but I got my degree.

Fun times

When I started college in 1972, It was Teletypes and punch cards. So, I got myself a Teletype (thanks, unknown tech at the Teletype facility in Framingham, MA!) and, quite coincidentally, a job as a Teletype repair person at the Computing Center (they were obviously desperate, and I was a quick learner). So, I had an employee account on the mainframe (unlimited time, no charge) which was a CDC 3600/3800, soon to be upgraded to a CDC CYBER74. I managed to avoid punched cards altogether, though there were plenty who didn’t all through my time at UMass/Amherst. Still have a Computing Center logo’d card above my PC :-)

All my undergrad classwork was done on my Teletype (I bought an Omitec 701B acoustic coupler for $350) from my dorm room. Later, I managed to assemble a DEC VT-05 from scrap parts and moved up to 300 baud! I would have absolutely KILLED for a spreadsheet and a word processor. My only experience during school with a micro was a SWTPC 6800 system that I built memory boards for one summer. FORTRAN? I tested out of it as a freshman, and most of our coursework was done in Pascal (which I have never used again). Obviously, the 6800 system was assembly language, and I also picked up PDP-11 assembler and COMPASS assembler for the CDC CYBER.

When I started work at Data General in 1978, my first project was the D200 terminal, built around the Motorola 6802, so I was quite comfortable writing the keyboard scanning firmware in 6800 assembler.

And it was all downhill from there :-)

I attended Umass/Amherst from 1977 to 1981. Initially, I learned Fortran, Basic and Pascal on one of the old terminals that used the wide “greenbar” computer paper with the tractor feed holes. As time went on, we gained access to monochrome (amber or green) video terminals. I was fascinated by the APL video terminals which included many symbols that were not found on a conventional keyboard.

I also recall learning 6502 Assembly language on a tiny device (either Rockwell or Honeywell ?) that had a little red 8-character LED display.

In one of my classes, the Final exam was to write a flowchart for the Space Invaders arcade game.

KIM-1

There is visual Fortran, if that helps… here in Intel’s quick start guide.

https://www.danysoft.com/estaticos/free/IntelVFwinprimpasos.pdf

I had to take a Fortran class at Georgia Tech in the 80s. But my professors in subsequent classes allowed us to write in any language we liked. I always used Pascal.

IMHO, “running assembler/machine code using commands” is what Python is all about, too. I don’t suppose the two have much in common, but the idea is nearly the same.

Having said that, Python is really ANSI C at its core, so, technically, it is ANSI C with an extra-friendly layer on top. I imagine there are Python libraries out there that out-C any well-written ANSI C due to being machine code at their core. I think there used to be some kind of assembler-compiler Python library, too, forgot the name.

Translation – some of that machine-code FORTRAN may have already been embedded in other places.

“I think there used to be some kind of assembler-compiler Python library, too, forgot the name.”

CPython and PyPy are examples.

In the late 70’s we the undergraduate computer science students used to help the engineers get their programs to work. This would be our first experience of dealing with users who would await us when we got jobs. It proved computer science was actually useful not just Turing machines and Chomsky languages.

I learned how to program in f77 back in the day, during my bachelor. I found it to be an efficient and laconic language, which can be really fast for certain applications.

Now I’ve moved on to other languages, but I still remember fondly all the times I had to debug f77 codes, optimizing it to the millisecond!

From the examples, Fortran looks quite a lot like BASIC. I guess that BASIC evolved out of Fortran, similar to many languages these days are based on the C syntax.

I am sort of curious of how punch cards compare with punched paper tape. A few days ago I saw an Usagi video about the Bendix G15 (1956) and that machine uses paper tape.

https://en.wikipedia.org/wiki/Bendix_G-15

Paper tape seems quicker to access and probably more reliable (just roll up the tape again if you drop it), but punch cards may have an advantage for things like sorting, such as with the (very) old Hollerith machines. It also looks like the tape has a significantly higher data density: holes are closer together, there is less waste paper around them, and the paper is thinner too than the very thick punch cards.

The difference was how they were edited. You could hand edit a card deck – moving sections of code, replacing a line etc. Editing paper tape was a lot harder, and less likely to work.

Card punches could duplicate cards and parts of cards, so they essentially served as line-orientated editors, with the user handling block operations!

The downside was the occasional invitation to a game of 3655 card pickup! After the first time you learn to write the card number on the card in pencil.

Two runs a day should be enough for anybody – the computer center.

The common solution to n-card pickup was to draw a diagonal line across the top edge of the card deck with felt pen, which would instantly indicate any out-of-order cards. https://en.wikipedia.org/wiki/File:Punched_card_program_deck.agr.jpg

to a point… Too large a deck (I ran to several boxes at times) and too many edit cards would foil the system. On a student income, buying another couple boxes and duping the set was not a real option.

I learned early: use the serializer on the punch. Use the left-hand digits first, so edit cards could get intermediate numbers (unpunched columns were treated, roughly, the same as zero by the card sorter).

Basically what @PernicuousSnit said, but also: I imagine punch cards could be loaded much faster than paper tapes; i.e., the more durable punch cards would survive a high-speed card reader like you would have for a mainframe. (The thinner paper tapes would be more prone to breakage in a high-speed reader.) Based on my one experience with paper tape, generated by an Intel 8080 development system, I don’t think editing a paper tape was really feasible. I don’t remember the paper tape having the characters printed on the tape, so you would have to figure out where and what to edit based on the patterns of the holes. Possible, but not really feasible!

Punched paper tape is a byproduct of the use of the ASR-33 as a user terminal. And it was 5-bit (Baudot) for a long time before it was 8-bit. My mom used 5-level tape when she was in the Navy during WWII.

Punch cards are a legacy of the old IBM “unit record equipment”, accounting machines, sorters, reproducing punches, etc, which predate computers.

I suppose you can think of punch cards as individual records, whereas paper tape is more like magnetic tape…you get the whole shebang, no rearranging the individual items on the tape.

It kind of was comparable to BASIC, indeed.

I remember there was Tiny Fortran (“FORM”) by a Japanese company, Hudson Soft.

The company nowadays is more known for its video games business.

https://archive.org/details/Io19806/page/n86/mode/1up

“A good programmer can write Fortran in any language”.

The young people at work look at me with their WTF faces.

As long as they don’t leave the room out of sheer annoyance, I think it’s okay. :)

As a commenter, I want to say that I find FORTRAN's way of coding very interesting. I see it coming back today, some 20 years after 1999 or 2001, even though it isn't as widely used as the dominant languages like Java, JavaScript, and so on. So I will follow it and use AI prompting to help me pick it up again. Good luck to your team; I will be your team's follower.

“A gentle introduction to Fortran” sounds like “how to sit on a cactus comfortably” to me, as I remember trying to learing Fortran from an old book on that subject. I got nothing.

…had many friends and acquaintances with sterling incoming credentials bomb out of one of the world’s premier tech universities because they were “…too smart…” to abide the indignities of the uni’s introductory EE courses (“…stupid, dumb*ss ‘flash-light’ courses…”).

What you get out of a course–on any subject–is in proportion to what you put in.

Sometimes that proportion is, amazingly, not linear, but exponential.

“…I remember trying to learing[sic] Fortran from an old book on that subject…”

Most times, old books are the best books.

Missing here is perhaps Fortran’s greatest legacy: it was the de facto language for NASA and the Apollo program. While the actual command and control software on the flight equipment was all highly tuned machine code, Fortran was used for most of the analysis and planning required to ensure mission success. My dad worked at NASA for 40 years and represented NASA on the Fortran 77 and Fortran 90 committees. Fortran was at the vanguard of the first paradigm shift in software development, the transition from machine code to compilers. Until the proliferation of C in the 80s, every computer manufacturer depended on the speed of its Fortran compiler to be able to compete. This performance advantage is why so much misguided Fortran was developed in the 70s: the cost of computer resources greatly outweighed the cost of lowly programmers. Now we waste megawatts of energy to replace costly software engineers. We are right in the middle of the next great transition, from high-level compiled/JIT languages to context and prompt engineering for LLMs, 68 years after the invention of Fortran.

I think Fortran’s greatest legacy is that it was so widely used, both in and outside the scientific realms. And, thanks to the efforts of people like your father, Fortran has improved over the decades and is still widely used, although less visible. Check out the Fortran Discourse forum: https://fortran-lang.discourse.group/

I last programmed in Fortran (77) in the 1980s on a NASA project. We used F77 for the heavy math calculations; for the real-time monitoring, control, and I/O of hardware; for the administrative side of things (coordinating the scheduling and flow of data between different disjoint systems); for the terminal-based operator UIs; etc., etc.

Fortran 77 was arguably better than C. (And I was a C/C++ developer for most of my post-Fortran career and I love C.) A simple but profound example: F77 had safe strings; C still doesn’t, after decades of uncountable time/expense/effort spent on coping with known and unknown bugs related to string handling. C has pointers and dynamic memory allocation, but the lack of either is not a deal-breaker in programming: like you point out, we sent men to the moon without them. (And the computer/OS we used on our 1980s project, VAX/VMS, did support pointers and dynamic memory allocation in F77 and other languages via its system library.)

I wouldn’t say Fortran software developed in the 1970s was “misguided”. You’re right that computing resources were much more expensive, but I would say the 70s (and any era) had the same distribution of programmer talent as we have now. People wrote good programs then, they wrote bad programs, and they wrote middling programs. I don’t think the evolution of tools over subsequent decades has greatly affected that balance. (My opinion, of course, and as someone who is a little skeptical of the current resurgence of AI!)

“A Gentle Introduction To Fortran”

Thanks for the great article.

Would have been even greater, perhaps outstanding, if you had been less gentle.

(I think any naysayers here need to take this, from DL Parnas, to heart:

“One bad programmer can easily create two new jobs a year.”)

Great article, and I enjoyed reading the comments even more. Much nostalgia. Fortran was my programming language for a science degree in the late seventies. I was lucky enough to be able to enter my code into a teletype and store it via the side-attached punched tape unit. Used the rolls of tape as decorations at Christmas. Sadly, Fortran jobs paid poorly, so I moved to COBOL for a few years before discovering that the BOFH career path paid even better :-)

Still have my 6-volume set of the “IBM Programmed Instruction Course” entitled “FORTRAN IV for IBM SYSTEM/360”: four stand-alone chapters (books), plus one “Illustrations” book and one “Problems” book.

Good stuff; good reading. Still extremely, absolutely relevant today. Even if you know nothing about the language, you can spend five easy-paced week-nights with this and be 95% up to speed on modern Fortran.

No; it’s not for sale.

In the early ’80s, creating images of the Mandelbrot set was a big fad among programmers where I worked.

Most of the Mandelbrot generators used some type of shortcut to simplify generation of the set, usually on one of our IBM 370 mainframes, printing “paint by numbers” image maps as text on lined paper, which we then colored with crayons.

We happened to get an early copy of RM Fortran 77 for DOS on the 8088 and were able to create a program consisting of a few lines of Fortran math and an assembler put-pel routine to generate and display the set in fine detail on my IBM 5150 with CGA. It took only overnight to display the entire set. Re-running the program with new coordinates let one zoom the image to any area of interest. Again, overnight.

The speed was amazing for the time, especially on the newfangled PC. RM Fortran was amazing for its speed and efficiency back then. Limiting the iterations when no value was found sped things up a lot, but you lost detail around the vacant (black) areas when you zoomed in. Interpreted BASIC would take many days to reach the fine level of detail we achieved with RM Fortran overnight. (RM Fortran handled complex numbers intrinsically, so we needed only one small formula.)