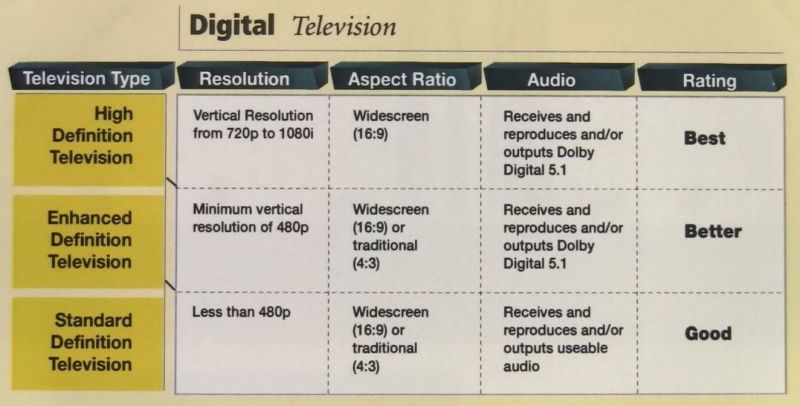

Although to many of us the progression from ‘standard definition’ TV to various levels of high definition at 720p or better seemed to happen smoothly around the turn of the new century, a far messier technological battle led up to it. One of the contenders was Enhanced Definition TV (EDTV): 480p in either 4:3 or 16:9, a step up from traditional Standard Definition TV (SDTV) quality. The convoluted history of EDTV and the long transition to proper HDTV is the subject of a recent video by [VWestlife].

One reason many people aren’t aware of EDTV is marketing. With HDTV being the hot new bullet point to slap on a product, being widescreen was often enough for a 480p EDTV to be marketed as ‘HD’, not to mention the ‘HD-compatible’ bullet point you could see everywhere.

That said, EDTV’s support for digital 480p and ‘simplified 1080i’ signals keeps these displays quite usable today, more so than SDTV CRTs and LCDs, which are usually limited to analog-only NTSC, PAL or SECAM signals. It may not be HD, but at least it’s enhanced.

I had a 4:3 Sony Trinitron Wega CRT TV with 1080i support and a DVI input. Playback quality from DVD over the DVI port was significantly better than over the common RCA connectors of the time.

The model was similar to KV-30XBR910. The specs don’t mention “EDTV”, but HDTV and 1080i.

So it’s basically the same as standard definition PAL but with progressive scanning?

SD PAL is 576 lines. This would be SD NTSC.

Yes, but in the video the proposed standard, or one version of it, would have been 525 lines progressive.

Which puts it very close to what became standard DVB-T, which wasn’t an improvement over analog PAL: the broadcasting system was privatized, and the new owner removed “redundant” transmitters to save money, making the signals weaker. Then they started charging for bandwidth instead of using fixed allocations, so the channel operators squeezed their channels so tightly that the picture turned to mush and the error correction started skipping, even in areas with good reception.

And 5.1 audio, yes.

As @mrallinwonder at ATI, I spent most of the 2000s working on chips for the transition from fully analog to fully digital TV. It was far messier than just EDTV. TV vendors would market a TV as “HDTV” if it did even one of these things: accepted 480p, 720p or 1080i input (analog YPbPr or VGA, or digital HDMI, DVI or MPEG-2 ATSC), put it through multiple digital-to-analog and analog-to-digital conversions for processing, and finally displayed that HD input on anything from a 480i CRT to an 800×480 plasma to a 1080i LCD. In giant rear-projection TVs, where signals had to travel several feet inside the cabinet, digital RGB was often converted to analog to limit RFI from the cables, then back to digital on the next board. It took quite a few years before it all settled down. Fun times.

I had a plasma EDTV. The sucker weighed in at about 150 lbs. Some super OFF brand. The picture was great, as I was using a Big Ugly Satellite Dish, cable, and DVDs. The part I did not like was the software, which was sketchy: you had to re-select your input for video and audio each time. I had to build my own corner wall mount and strengthen my wall with plywood for anchor points.

Finally, when TVs got cheaper and lighter, I settled on the Sony Quarto. That set is likely 10 to 14 years old. It was a PAIN to get that plasma off the corner; it took three people.

Beautiful picture. Not a smart set, but the DVD/Blu-ray player hooked to it is, as is the Roku box.

Would love an article on AHD, which is still a thing for cheap analogue CCTV cameras:

https://www.ebay.co.uk/itm/326659062693 for example claims to be AHD and 1920 x 1080 resolution for 16 monies.

I looked at AHD a while back but sourcing chips or any real info seemed to be quite hard.

AHD is the lowest quality of the 3 HD analog CCTV formats. It crams the 1080p video into 33 MHz of bandwidth. HD-TVI looks much better with almost double the bandwidth. Most cameras these days can be switched between all 3 formats.

There was a proposal for some analog HD TV system here in Europe, and it got blocked by “government”. The argument was that it would just be an intermediate standard, and that everybody would have to switch to digital a few years later. “Industry” itself would “of course” be perfectly happy with changing TV standards every 5 years. So industry had to take on the task of making a “future-proof” digital TV standard.

I think this was in the early ’90s.

I’ve never had cable (or satellite) TV in the place I’ve lived for over 20 years. The internet has completely displaced TV for me, and I have never had a “digital TV”, so I don’t know when the switch finally happened.

here’s a related complication that really drives me crazy if i think about it too much

1366×768. it’s the norm for budget laptop displays (i finally got 1920×1080 for the first time a few months ago). to my considerable surprise, it’s also the norm for 720p LCD TVs!?? but 768 isn’t 720!

i had no idea until i hooked up a raspberry pi to my living room TV. with regular TV-style content it isn’t perceptible to me, but i still use the classic default X11 background (it doesn’t seem to be the default anymore), a mesh kind of like a chain-link fence, and the moiré pattern is very stark. to make it worse, HDMI does allow custom resolutions to some extent, but (IIRC) the horizontal dimension has to be divisible by 8 or 16, which 1366 isn’t, so it seems to be impossible to actually get the TV to render at its native resolution.

it upsets me when i think about how many times my content was resampled, including one completely non-negotiable resampling in the TV itself. ridiculous. i try not to think about it. i try to tell myself that above pathetic resolutions, for moving pictures, resampling is free in time, energy, complexity and quality. i know it’s a lie but

anyone know why the TV manufacturers don’t make 720p panels?? given the enormous volume of like 32″ 720p TVs that have been manufactured, i’m just astonished that they don’t make a panel that’s actually suited exactly to that purpose?
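The arithmetic behind that complaint fits in a few lines of Python. This is a minimal sketch, assuming a 1280×720 source, a 1366×768 panel, and the 8-pixel horizontal granularity the commenter half-remembers (the real constraint depends on the timing standard and the TV's EDID, so treat it as an assumption). The helper names `scale_factors` and `divisible_widths` are hypothetical.

```python
from fractions import Fraction

SOURCE = (1280, 720)   # 720p signal
PANEL = (1366, 768)    # typical budget "HD ready" panel
GRANULARITY = 8        # assumed horizontal timing granularity in pixels


def scale_factors(src, dst):
    """Exact horizontal and vertical scale factors needed to map src onto dst."""
    return Fraction(dst[0], src[0]), Fraction(dst[1], src[1])


def divisible_widths(near, step=GRANULARITY, span=8):
    """Widths within +/- span pixels of `near` that are multiples of `step`."""
    return [w for w in range(near - span, near + span + 1) if w % step == 0]


sx, sy = scale_factors(SOURCE, PANEL)
print(sx, float(sx))               # 683/640 1.0671875
print(sy, float(sy))               # 16/15 1.0666666666666667
print(PANEL[0] % GRANULARITY)      # 6, so 1366 is not a multiple of 8
print(divisible_widths(PANEL[0]))  # [1360, 1368]
```

Neither scale factor is an integer, so essentially every output pixel is interpolated from its neighbours, which is why a fine repeating pattern like the X11 mesh shows visible moiré. The nearest multiples of 8 are 1360 and 1368, which is presumably why 1360×768 turns up as a common PC mode for these panels.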

I’m confused by the statement that EDTV doubled NTSC’s 525 lines to 1050. NTSC does have 525 lines, but only 480 (digital) or 485 (analog) carry picture information. The remainder are used for things such as sync, closed captioning, and teletext. Did EDTV sets really double all the lines of the composite signal before extracting the picture information, or did the writer assume that all 525 lines contained picture information?
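Standard NTSC-derived timing suggests one possible reading of the 1050 figure. A minimal sketch, assuming the usual 525-total/480-active-line timing at 30000/1001 frames per second for 480i and 60000/1001 for 480p: progressive scanning doubles the horizontal line rate, so in the time 480i draws one 525-line interlaced frame, 480p scans 1050 lines, of which 960 carry picture. Whether this is what the video meant is an assumption; the function name `line_rate` is hypothetical.

```python
from fractions import Fraction

# Assumed NTSC-derived timing (shared by 480i and CEA-861 480p):
# 525 total lines per picture, 480 of them active in digital form.
TOTAL_LINES = 525
ACTIVE_LINES = 480
FRAME_RATE_480I = Fraction(30000, 1001)  # ~29.97 interlaced frames/s
FRAME_RATE_480P = Fraction(60000, 1001)  # ~59.94 progressive frames/s


def line_rate(lines_per_frame, frames_per_second):
    """Horizontal scan frequency in Hz (lines drawn per second)."""
    return lines_per_frame * frames_per_second


h_480i = line_rate(TOTAL_LINES, FRAME_RATE_480I)
h_480p = line_rate(TOTAL_LINES, FRAME_RATE_480P)

# Lines 480p scans during one 480i frame period (1/29.97 s), total and active.
lines_per_480i_frame = h_480p / FRAME_RATE_480I
active_per_480i_frame = ACTIVE_LINES * (FRAME_RATE_480P / FRAME_RATE_480I)

print(round(float(h_480i), 2))  # 15734.27
print(round(float(h_480p), 2))  # 31468.53
print(h_480p / h_480i)          # 2
print(lines_per_480i_frame)     # 1050
print(active_per_480i_frame)    # 960
```

Using `Fraction` keeps the 1001 divisor exact, so the doubling shows up as exactly 2 rather than a float that is merely close to it.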

I thought this was going to be about a special channel for ads about viagra and cialis…

And then they moved on to the trainwreck of “4k” nonsense.

Sure, let’s take a standard name that already exists within the realm, and just use it with a different meaning anyway…

But let’s ALSO use it inconsistently, because confusing people will make us more money.

Then let’s rename the old standard…

The DCI 4k standard is WAY older, and actually 4k (4096×2160).

There is also a 2k standard, which makes perfect logical sense at 2048×1080.

If they are going to make a 16×9 resolution from DCI 4k and call it “4k” then they need to call the 16×9 1080p “2k”.

1440p is NOT 2k.
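The naming argument above comes down to a couple of ratios. A sketch, assuming the informal rule that an “NK” label means the horizontal pixel count rounded to the nearest thousand (no standard defines it that way); `describe` is a hypothetical helper.

```python
from fractions import Fraction

# Resolutions mentioned in the comments above (width, height).
FORMATS = {
    "DCI 2K": (2048, 1080),
    "DCI 4K": (4096, 2160),
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "UHD '4K'": (3840, 2160),
}


def describe(width, height):
    """Return the exact aspect ratio and the width rounded to the nearest thousand."""
    # Assumption: an "NK" label means round(width / 1000); no standard defines it so.
    return Fraction(width, height), round(width / 1000)


for name, (w, h) in FORMATS.items():
    aspect, k = describe(w, h)
    print(f"{name:9} {w}x{h}  aspect {aspect}  ~{k}k")

# Each "4K" format is exactly its "2K" counterpart doubled in both dimensions.
print(FORMATS["DCI 4K"] == (2 * 2048, 2 * 1080))    # True
print(FORMATS["UHD '4K'"] == (2 * 1920, 2 * 1080))  # True
```

Under that rounding rule, 1920-wide 1080p lands on 2k alongside DCI 2K (both are halves of their respective “4K”), while 2560-wide 1440p rounds to 3k, not 2k.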