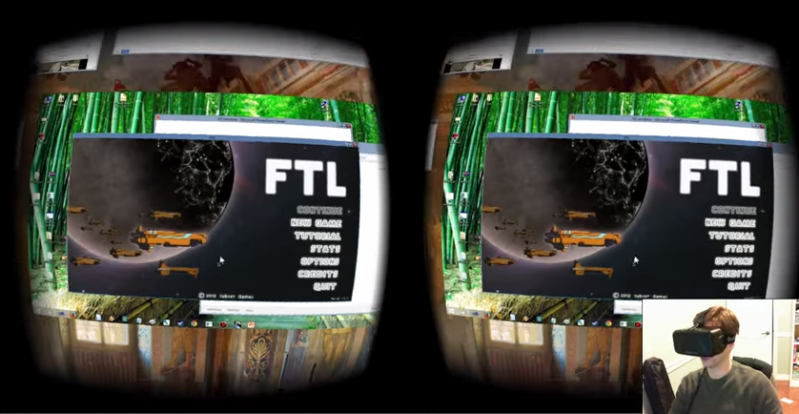

[Jason] has been playing around with the Oculus Rift lately and came up with a pretty cool software demonstration. It’s probably been done before in some way, shape, or form, but we love the idea anyway, and he’s actually released the program so you can play with it too!

It’s basically a 3D window manager, aptly called 3DWM, though he’s thinking of changing the name to something a bit cooler, maybe the WorkSphere or something.

As he shows in the following video demonstration, the software allows you to set up multiple desktops and windows on the virtual sphere created by the Oculus, essentially creating a virtual multi-monitor setup. There are a few obvious cons that make it a bit impractical at the moment: the inability to see your keyboard (though this shouldn’t really be a problem), the inability to see people around you, and of course the hardware and its lack of proper resolution. But besides that, it’s still pretty awesome!

In future development, he hopes to add Kinect 2 and Leap Motion controller integration to make it even more intuitive to use, maybe more Minority Report style.

One step closer to that cool rig Ed wears in Cowboy Bebop.

I refuse to die until I have those goggles

Well, Ein laid waste to that cult with the brain console. Call me when I can log into the Matrix with my Google credentials.

Has he thought of using your real-world environment as the background? I know it might be heavy to have a 1080p camera strapped to your head, but it would give you awareness of your surroundings, including your keyboard. It would also give it a very augmented-reality feel, which I personally like.

I like the idea, but I hope the Rift will eventually have a relatively low-res stereoscopic pair of “view-thru” cameras that point down from the line of sight to the keyboard. Imagine if you could see a mostly transparent view of your keyboard overlaying the bottom of your stereoscopic virtual monitor view. Maybe that could even be dynamically faded in and out based on your activity on the keyboard. If you had a keyboard that could sense the proximity of your fingers, or even one with a vibration sensor, it could cue a plug-in keyboard overlay in the app to fade in as you were about to start typing. I’ve got so many ideas, but so little coding prowess…

People keep whacking their heads against desks and lamps and whatnot when looking around corners in VR. Something that filters and overlays stereo-reconstructed video on your view when objects are in close proximity, or are rapidly approaching or moving around you, could be an important safety feature. When the collision warning is over, it just fades those objects out and lets you seamlessly re-immerse in VR.

Just put another Kinect on the Oculus facing out and add the input…

I remember from an old episode of Tales From The Afternow how people jacking into the virtual web would rig up defenses around themselves to protect them while they were exposed in reality: strings to pull out their jacks, or fancy ones with motion sensors and servos.

Of course if you have something like a Kinect tracking you already, you could just set it up to alert you if there’s also any movement that’s not you happening outside of the goggles. Perhaps even ghost them into the 3D environment.

My question is: how readable is text at a “distance”?

It’s not very readable. You can move closer to see more clearly, but I find the pixel layout in the DK2 really hurts text.

Resolution be darned: if you can’t discern pixels and the smallest elements are sharp enough, you are fine. That said, if the Note 4 panel is going to be used next (after the current second-gen dev kit), then I think the resolution problems may finally be a non-issue.

Can you move your head in three dimensions with this program? And can you move closer to the monitors? If so, the resolution is not a problem.

This is the solution I have been waiting for. Now to get it to connect to multiple hardware inputs, like a game console, remote server, or Android device. Maybe each screen could be a virtual computer. I wonder if the Note 4 on its own will be enough hardware to do most of this stuff; Samsung needs to be on that.

Honestly, this is what I see as a truly powerful use for this sort of device, especially for mobile use. As for the “not seeing the keyboard” issue, put locator tags on the corners of your keyboard where they can be seen by the Rift’s webcam (love it or hate it, it’s a feature in the new prototypes). Then have that track the location of your fingers in relation to a virtual version of the keyboard: look down, and in the simulation there is a real-time representation of your fingertips over an image of your keyboard.

Minority Report style, AKA repetitive gorilla-arm style. Leave the hand-waving interfaces to the movies, please.

I will keep my keyboard and mouse but having a huge virtual monitor is just too cool.

I think you could put in some handwaving interface components, but they should not be the primary input method. For instance, if you were actually using this to be productive and not a quick tech demo, moving your head 90 degrees up and to the right is rather uncomfortable. Being able to use your hands to manipulate the sphere and drag the content that is over there into a more neutral position would be nice. Also controlling the zoom with your hands so you didn’t look like a chicken all day would be great.

Now just mount two cameras on the glasses so the screens can float in the real environment and you’re all set.

I

My keyboard is blank anyway.

Every time you try and operate these weird black controls that are labeled in black on a black background, a little black light lights up in black to let you know you’ve done it.

– Zaphod Beeblebrox

Check out the Oculus Wayland compositor.

So does it mean the user must close one eye or the other to focus on a screen?

Oh wait, don’t mind me, I didn’t watch the vid.

I’m still waiting for a real J.A.R.V.I.S., then I’ll get into VR

Other than weight, why not use a Kinect-style depth sensor? You would have many channels of information for an AR solution. Heck, you could add a thermal camera as well. Just saying.

Instead of using laptops, they wear their computers on their bodies, broken up into separate modules that hang on the waist, on the back, on the headset. They serve as human surveillance devices, recording everything that happens around them. Nothing looks stupider; these getups are the modern-day equivalent of the slide-rule scabbard or the calculator pouch on the belt, marking the user as belonging to a class that is at once above and far below human society. The payoff for this self-imposed ostracism is that you can be in the metaverse all the time, and gather intelligence all the time.

I don’t understand the complaints about seeing your keyboard. If you have a keyboard that isn’t stupid, the ‘j’ and ‘f’ keys (on a QWERTY keyboard) have notches on them that let you always find the home keys. It’s 2015, people; if you’re using VR for multiple monitors, I’m assuming you can touch-type.

The bigger issue to me is how uncomfortable the DK1 was (and I’m assuming the DK2 is only marginally better. The other big one is how much work it is to get the thing on and off. It should be as easy as glasses.

People hitting their head on stuff are just idiots. Use some common sense folks.

)

Sorry, had to close that.

VR tends to make me feel nauseous, but I still want to play and work with it. I have only tested a DK1 for a short while, and only in video games. I always thought it would be neat to use VR more for everyday tasks like software development. I dreamed of having my source code in the center of my view, with documentation, datasheets, and anything else hovering around the main view. The issue of “seeing through” the goggles always came up in my head. Then I saw Vrvana’s headset.

I’ve followed this company for a few months now but haven’t put any money down yet, if I do at all. Anyway, Vrvana has a headset with two cameras embedded in it. Check it out here: http://www.vrvana.com/ (I have no connection to the company at all.)

If anyone has links to other companies or projects I would love to see them.

Do you require it to be in windowed mode to be able to render the applications?