Analog sticks have become a core part of modern video game controllers. They also routinely fail or end up drifting, consigning expensive controllers to the garbage. [sjm4306] recently did a repair job on an Oculus VR gaming controller with drifting analog sticks, and decided to do an autopsy to figure out what actually went wrong.

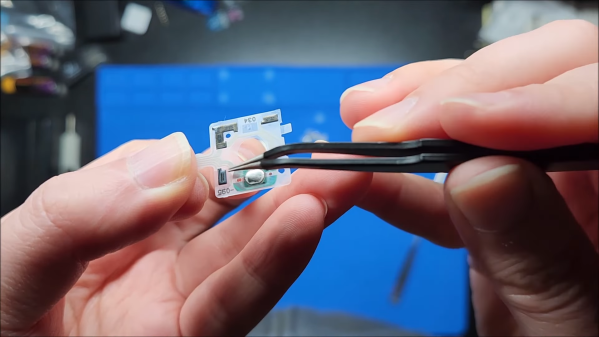

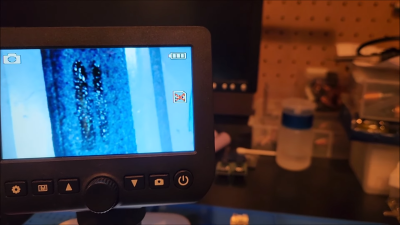

The video starts with the analog joystick itself being taken apart, beginning with prying off the metal case. Inside, we get a look at the many tiny individual components that make up a modern thumbstick. Of most interest, though, are the parts that form the potentiometers within the stick. Investigation revealed that the metal contacts that move with the stick had worn through the resistive coating on the thin plastic membrane in the base of the joystick, creating the frustrating drift problem.
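To get a feel for why a worn track shows up as drift, here is a rough Python sketch that treats one axis of the stick as a simple voltage divider. This is a toy model, not a measurement from the video: the ten-segment track, the resistance values, and the names `wiper_ratio` and `stick_axis` are all assumptions made up for illustration. Real wear can also cause noisy or intermittent contact, which this sketch ignores.

```python
# Toy model of one thumbstick axis as a potentiometer (voltage divider).
# Segment counts and resistance values are illustrative assumptions only.

def wiper_ratio(segments, position):
    """Fraction of the supply voltage at the wiper after `position` segments."""
    total = sum(segments)
    return sum(segments[:position]) / total

def stick_axis(ratio):
    """Map a 0..1 divider ratio onto a -1..+1 axis value."""
    return 2.0 * ratio - 1.0

# A healthy track: ten equal segments of resistive coating.
healthy = [1.0] * 10

# A worn track: the segment just below the resting position has lost
# coating, so its resistance is higher (hypothetical value).
worn = list(healthy)
worn[4] = 3.0

center = len(healthy) // 2  # the wiper rests at the mechanical center

healthy_axis = stick_axis(wiper_ratio(healthy, center))
worn_axis = stick_axis(wiper_ratio(worn, center))

print(f"healthy stick at rest: {healthy_axis:+.3f}")
print(f"worn stick at rest:    {worn_axis:+.3f}")
```

In this model the healthy stick reads zero at rest, while the worn one reports a steady offset even though nobody is touching it. Because the wiper spends most of its life near center, that is where the coating tends to wear first, and that is exactly where an offset is most noticeable.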

It doesn’t have to be this way. Analog sticks in modern controllers could be manufactured with higher-quality components that don’t wear so easily. After all, it’s hard to imagine a 90s video game controller wearing out as fast as this modern Oculus unit. But at the end of the day, everything is built to a price. Video after the break.
