Sometimes there’s just no place like your desktop. You’ve already got your favorite development tools and references set up or installed, and it’s a pain when you’re trying to work on an unfamiliar, or simply uncustomized, system. On your desktop everything is at your fingertips. If you want to search the web, the browser is just an alt-tab away. If you need a calculator, it’s right there to run. Your editor highlights syntax in your favorite colors already.

When developing on a Raspberry Pi, you leave all these creature comforts behind unless you spend the time to configure the Pi to your liking. Then it all gets wiped when you install a new distribution, like the recent change from Wheezy to Jessie. Even then it’s frustrating to switch back and forth between the desktop and the Pi because there is always something on the other system that you need. My usual comment is, “dirty word”, literally.

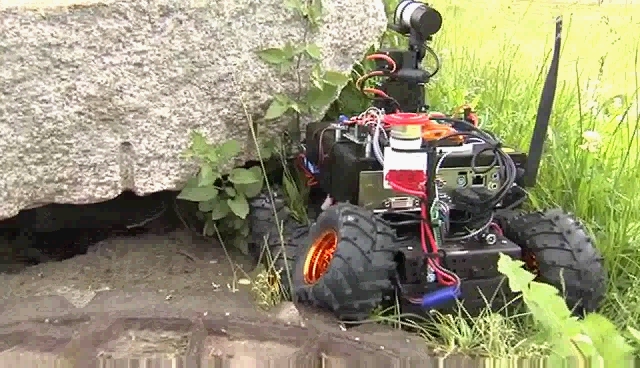

Cross-developing on your desktop is a very workable solution. We’re going to walk through setting up your desktop and a Pi to do this. This means loading a Pi ARM toolchain on your desktop and a debugging server on the Pi. This’ll let you develop and debug from the comfort of your desktop. An added advantage is that when you put that Pi in a robot you can debug over a wireless link.

Cross System Development

Cross-development is actually fairly common. You’ve already done it if you’ve compiled for the Arduino on your desktop. In the late 80s I used 80×86 based PCs to develop software for a STD bus system that used an 80286 processor. More recently for my 2013 NASA Sample Return Robot Competition entry I debugged my rovers, running XP on ITX PCs, over WiFi.

Cross-development is a pretty natural approach for a Pi since it’s how Raspbian gets built. I’ve been meaning to try this for a while and after the first pass on the Pi lidar project decided that the time was now.

Setting Up Cross Build Tools

I’m using Ubuntu 14.04 on my desktop and 15.04 on my laptop. I first set up the desktop for cross-development and then replicated the steps on the laptop to verify the process. This process should work okay on Linux and something similar can be done under Windows. Take care if you use an earlier distribution since the ARM development libraries may not be the ones used on the Pi.

The first step is to install the development tools on the desktop, or host system. From the command line run the following:

sudo apt-get install build-essential

sudo apt-get install g++-arm-linux-gnueabihf

sudo apt-get install gdb-multiarch

The first line installs the general build tools. The second installs the C and C++ compiler and build tools for the Pi’s ARM processor. The third installs a version of the gdb debugging tool that works with target systems regardless of the processor architecture. Make a note somewhere of the ARM architecture designation, arm-linux-gnueabihf-, because it is used as a prefix to distinguish the ARM tools from the host system tools. Be sure to get the dash at the end of the string.

To test if everything is working enter the following line, which should report the version of the G++ compiler installed, plus a lot of other information:

arm-linux-gnueabihf-g++ -v

Work back through the installation of the build tools if you get an error.
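If you would rather check for the tools in one go, a small script can look for them on the PATH. This is only a sketch: the function name missing_tools is made up for illustration, and it only checks for the cross compiler and gdb-multiarch installed above.

```shell
#!/bin/sh
# Prefix that distinguishes the ARM tools from the host tools (note the trailing dash).
CROSS_PREFIX="arm-linux-gnueabihf-"

# Print the name of every tool passed as an argument that is not on the PATH.
# (missing_tools is a hypothetical helper name, not part of any package.)
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}

missing=$(missing_tools "${CROSS_PREFIX}g++" gdb-multiarch)
if [ -n "$missing" ]; then
    echo "Not found on PATH:" $missing
else
    echo "Cross toolchain looks ready."
fi
```

If anything is reported as missing, rerun the matching apt-get install line.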

Hello World From Pi!!!

Your host system should now be ready to build a Raspberry Pi program. Let’s start with the ubiquitous Hello World with a little extra, in C++ of course. Create a file hello.cpp and enter this source code:

#include <iostream>

using namespace std;

int main() {

cout << "!!!Hello World From Pi!!!" << endl;

return 0;

}

To build the program run these two command lines:

arm-linux-gnueabihf-g++ -O3 -g3 -Wall -c -fPIC -o "hello.o" "hello.cpp"

arm-linux-gnueabihf-g++ -o "hello" hello.o

The first line compiled the file hello.cpp and the second linked the compiler output to build the executable hello. Assuming all goes well, enter the following command and you should see output similar to what is shown on the line after it:

file hello

hello: ELF 32-bit LSB executable, ARM, EABI5 version 1 (SYSV), dynamically linked (uses shared libs), for GNU/Linux 2.6.32, BuildID[sha1]=bc5e0850c0dc9d88ba60887facf52c00222dc746, not stripped

This tells us that the file is built for an ARM processor and not for your host system. Copy that file to your Pi, set the execute and owner permission bits, execute it, and it will generate the “Hello World” message. You’re now all set to cross compile for the Pi.
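The compile, link, and copy steps can be collected into one script so they are not retyped after every change. The following is a minimal sketch, not a finished build system. The names run and build_and_deploy, the DRY_RUN switch, and the defaults for PI_HOST and PI_DIR are assumptions chosen to match the examples in this article. By default it only prints the commands so you can review them first.

```shell
#!/bin/sh
# Sketch: cross-build a single-file program and copy it to the Pi.
# PI_HOST and PI_DIR are assumed values; change them to suit your setup.
CROSS_PREFIX="arm-linux-gnueabihf-"
PI_HOST="${PI_HOST:-pi@pizero.local}"
PI_DIR="${PI_DIR:-/home/pi/remote}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands; set to 0 to run them

# Run a command, or just print it when DRY_RUN is 1.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Compile, link, copy to the Pi, and set the execute bit there.
# Each step runs only if the previous one succeeded.
build_and_deploy() {
    name="$1"
    run "${CROSS_PREFIX}g++" -O3 -g3 -Wall -c -fPIC -o "$name.o" "$name.cpp" &&
    run "${CROSS_PREFIX}g++" -o "$name" "$name.o" &&
    run scp "$name" "$PI_HOST:$PI_DIR/$name" &&
    run ssh "$PI_HOST" chmod +x "$PI_DIR/$name"
}

build_and_deploy hello
```

Saved as, say, build.sh, running DRY_RUN=0 sh build.sh would execute the steps for real. scp and ssh will each ask for the Pi password unless you have set up another form of authentication.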

While this is great, the ability to debug the software on the target Pi is missing. A lot can be done by tracing execution with output statements or logs, but nothing beats stepping through an ornery routine that just refuses to work.

Debugging Across Systems

Debugging with the GCC toolset is done using the program gdb. The last item we installed, gdb-multiarch, provides the debug capability on the host system. Now we need to install a debug server on the Pi target system that we can connect to from the desktop to control execution on the target. Switch over to the Pi and run the following install to get the target’s version of gdb:

sudo apt-get install gdbserver

Note that when gdbserver is running it creates a big security hole on the Pi. That’s not likely to be exploited since you’re running on your local network but don’t set it up to run on startup and then deploy the system in the wild.

Now determine where on the Pi you want to put the executable file. I created a directory remote which you’ll see in the examples. To run the server we’ll just use this simple command line, as follows, but there are options you should explore:

gdbserver --multi mysticlakelinux:2001

This command starts the server and tells it to run continuously. The computer name, or IP address, is that of the host computer. In my case it is mysticlakelinux. Finally, 2001 is the port that the host and target will use for communication. Be sure to remember it because it’s needed for debugging on the host.

We’re done with the Pi at the moment but leave the SSH terminal running. Besides keeping the server running it will display error messages and, when finally all is working, the output from the program. Now switch back to the host, open a terminal, and change to the directory of the “Hello World” program so we can start debugging.

The program gdb-multiarch is an interactive program. There are a host of commands that can be entered. It also takes a large number of command line options including the ability to read the interactive commands from a text file. We’ll just do enough to demonstrate it running the program because there is a plethora of options and capabilities.

On the host, enter the command:

gdb-multiarch

You should get a bunch of output followed by the prompt (gdb). In the future you might want to add the option -q to the command line. That suppresses the introductory information. At the prompt, using the name of your Pi, enter the following commands one at a time. The response to each command is shown on the line after it:

target extended-remote pizero.local:2001

Remote debugging using pizero.local:2001

set remote exec-file remote/hello

[[no response]]

file hello

Reading symbols from hello...done.

The target command tells gdb the name of the target system, pizero, and the port, 2001, to use. If it worked, you’ll get the response shown; otherwise a timeout message. The set remote line specifies the file location to use on the Pi target system. Remember that the executable needs to be copied onto the target system every time it is changed. We’ll see how to do that from within gdb, below. The last command, file, is the path and filename on the host computer. This can be a directory other than where the debugger is running. Now, type the following and get the response as shown:

run

Starting program: /home/rmerriam/development/ws/pi/hello/Debug/hello

[[ a bunch more lines, some warnings and stuff to ignore for now ]]

[Inferior 1 (process 1532) exited normally]

If it works you’ll see that last line. Now switch to the SSH terminal connected to the Pi and you’ll see a couple of lines of status, the output from the program, a blank line, and a line reporting the exit status of the program. If all this doesn’t work check the error message from the server. It should explain what is happening. Often there is a mismatch between what you told gdb and the location of the executable.

The debugger provides a large number of commands for manipulating breakpoints, continuing execution, listing the program, etc. For instance, type in break main and the program stops when main() is reached. A ‘c’ continues the program after a breakpoint. To help with debugging, there is an interesting mode, the Terminal User Interface, or tui. You can enter and leave it by typing Ctrl-x, Ctrl-a. This mode provides windows that show the source, registers, assembly language, and other information.

One unfortunate aspect of gdb is it doesn’t automatically copy new versions of the executable, or at least not that I could find. This can be handled by entering an ‘escaped’ command in gdb, which is a normal Linux command run on the host but outside of the debugger. The scp command will copy files from one system to another. Here’s what I tried and the response:

!scp hello pi@pizero.local:/home/pi/remote/hello

pi@pizero.local's password:

hello 100% 69KB 68.7KB/s 00:00

This wouldn’t be bad except it asks for the password every time. The way around it is to use sshpass like this:

!sshpass -p 'password' scp hello pi@pizero.local:/home/pi/remote/hello
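Putting the password on a command line leaves it in plain view, so key-based login is worth considering as an alternative. The sketch below only prints the commands involved so you can review them before running any of them. The helper name key_setup_steps, the key file location, and the Pi login are assumptions. ssh-keygen and ssh-copy-id are the standard OpenSSH tools.

```shell
#!/bin/sh
# Sketch: list the steps for password-free copying using an SSH key.
# PI_HOST and KEY_FILE are assumed values; adjust them for your setup.
PI_HOST="${PI_HOST:-pi@pizero.local}"
KEY_FILE="${KEY_FILE:-$HOME/.ssh/id_ed25519}"

# Print the commands; nothing is executed here.
# (key_setup_steps is a hypothetical helper name.)
key_setup_steps() {
    # A new key pair is only needed if one does not already exist.
    [ -f "$KEY_FILE" ] || echo "ssh-keygen -t ed25519 -f $KEY_FILE"
    # Install the public key on the Pi (asks for the password one last time).
    echo "ssh-copy-id -i $KEY_FILE.pub $PI_HOST"
    # After that, copies should no longer prompt for the Pi password.
    echo "scp hello $PI_HOST:/home/pi/remote/hello"
}

key_setup_steps
```

Once the key is installed, the plain !scp line shown earlier should work inside gdb without a password prompt, provided the key has no passphrase or an SSH agent is holding it.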

Once you start gdb and get everything set up you don’t want to have to reenter all the setup commands. One solution is to add them all to a text file and run them inside gdb using the source command. The other option is to never leave gdb. Typing a ‘!’ at the beginning of a line passes the command off to a shell to run and return. Type in the ‘!’ by itself and you’re at the host system command line. You can work in the terminal environment until ready to go back to debugging. Just like any other terminal, type exit, and you’re back in gdb.
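As an illustration of the text file approach, the short script below writes the three setup commands used earlier into a file. It is a sketch under assumptions: the file name pi.gdb and the variable names are arbitrary, and the Pi name, port, and paths are the ones from this article's examples.

```shell
#!/bin/sh
# Sketch: write the gdb setup commands from this article into a command file.
# PI_NAME, PORT, and GDB_SCRIPT are assumed names and values.
PI_NAME="${PI_NAME:-pizero.local}"
PORT="${PORT:-2001}"
GDB_SCRIPT="${GDB_SCRIPT:-pi.gdb}"

# Same three commands that were typed at the (gdb) prompt earlier.
cat > "$GDB_SCRIPT" <<EOF
target extended-remote $PI_NAME:$PORT
set remote exec-file remote/hello
file hello
EOF

echo "Wrote $GDB_SCRIPT"
```

From the (gdb) prompt, source pi.gdb replays the commands. Alternatively, starting the debugger with gdb-multiarch -q -x pi.gdb reads the file at startup.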

Wrap Up

If you are happy with using the command line for debugging then you’ll need to explore the details of working with gdb. You can set breakpoints, step through programs, and display information about the program. I’ve used similar command line tools in the past for embedded systems work. No thank you, I’m going back to using Eclipse, and in the next article I’ll cover that process. That article will also revisit the Lidar project and another Pi library, pigpio. See you then!

Nice! But what about those of us who run other Linux distributions that are not based on Debian? I run Slackware. And of course Raspbian on the Pi…

Those who use Slackware should know how to translate an apt-get command into their distro’s package manager.

Seriously, by asking this question and not answering it by yourself, you should reconsider the use of Slack and start from the beginning with a user friendly distro.

Regards,

Remy

Ps : no offense.

What he said :/

For Gentoo there’s a nice wiki page for distcc and crossdev; you can use either or both for compiling.

Actually Slackware does have third-party arrangements available, and Slackware happens to be more user friendly than Debian. It’s also one of the oldest.

Another cool trick is using a debootstrapped rootfs with qemu-user-static, which looks just as if it was running on the RPi (or any other embedded Linux device), only you have all the performance of your workstation. You could even install an x86_64 cross-toolchain onto it for even shorter build times.

Example: https://scontent-lhr3-1.xx.fbcdn.net/hphotos-xtp1/v/t1.0-9/10437342_764362406974489_3301223555858387530_n.jpg?oh=2939f56e8140736b1a804a2516f0d78e&oe=57321B41

obviously!

That URL has now expired.

My approach when the PI Zero becomes a real (as in available) product: set one as dev platform then export everything on the net, including desktop via x11vnc, then set up DistCC to speed up compilation distributing it among all machines in the local net. This way one can code directly on the target board but gets all the benefits of having part of the compiler run on much faster systems.

What about using libraries? Getting the compiler to run isn’t that hard. But getting proper linking results is usually where the problems start.

The standard include and library paths are built into the compiler and linker. Installing the package puts them in the correct places. I mistakenly typed ‘gcc’ instead of ‘g++’ when first trying this and the compiler couldn’t find ‘iostream’. Using the correct compiler fixed the problem. If I remember correctly when you add the -v to the command line it dumps out the search paths for the include and library files.

iostream is not a library. libz is a library, or libGLes2 (or what’s it named). And as far as I know, apt-get does not install ARM versions of libraries that you have installed.

First, I said “include and library paths”.

Second, in C++ parlance ‘iostream’ is a library just as all the classes in the STL are libraries.

Third, note I did not say ‘iostream’ was part of the STL.

Fourth, there sure are a shit-load of files under arm-linux-gnueabihf/include that I didn’t create. Bet they got there from using apt-get.

Yes, this is a nice article (which comes up first on Google for “raspberry pi cross compile”) but missing important information. The headers and ARM libraries installed by your desktop distro (including libstdc++) may not be the same version as the ones installed on your RPi’s Raspbian system, hence there may be ABI incompatibilities.

To ensure that we build against the right libraries, I found the following trick to be simple and elegant: https://medium.com/@zw3rk/making-a-raspbian-cross-compilation-sdk-830fe56d75ba#bd07

My favourite way to cross compile is by using either sshfs (or nfs) to mount the RPi’s root filesystem onto your PC over the network. You can even fix up the include and library soft links. You can then use the cross compiler on the PC to compile code on the PC and link it with libraries already on the RPi. Once the binary is created it can then be moved to the RPi root filesystem. Done!

I was able to successfully compile Qt4 applications on the RPi using this technique http://hertaville.com/cross-compiling-qt4-app.html.

BTW, your site helped me with setting things up.

Glad that you found my site helpful! My blog is due for a revamp that I’m hoping will happen this summer.

Afair g++-arm-linux-gnueabihf is only the g++ compiler. gcc-arm-linux-gnueabihf is the compiler suite.

Afair the compiler suite package is called gcc-arm-linux-gnueabihf not g++-arm-linux-gnueabihf.

Hi Rud,

I’d just like to say thanks for this article, and register my interest in more of the same. Whilst I realise this will be a bit basic for a lot of your readers, I’ve always found setting up cross compiler tool chains a bit hit and miss (even though my day job is trading systems development) and I’m sure there must be more folks like myself that find it useful.

Cheers,

Phil

I agree, setting up the compilers has been a big hurdle to starting in the first place for me. In the meantime the Pi is looking at me accusingly. Hopefully I can find some spare time soon and get started.

Why doesn’t somebody put together a nice IDE environment to build RPi apps without all the Linux command line voodoo? I mean like a sharp develop type thing with only the bare bones features. Is making it easy against the Linux religion?

Standby for next week’s article where I setup Eclipse to handle cross development.

No but if you need that maybe you should be learning how to set that up first, and then trying to debug this rather than the other way around.

Replying here but addressing all the comments. I have difficulty understanding how verbose command line interaction can be ‘easier’ than GUI implementations like sharp develop. You see, there are those who like ‘using’ the operating system as much as producing the end result. Not me. I don’t care about makefiles or all the non-intuitive ‘setup’ that is just a pile of work needing doing in order to get the end result, which is a functioning application. I get that knowing the internals intimately provides flexibility for those who need it. But shouldn’t there be room for those of us who like to focus only on the application logic and not the tools? And yes, I get that this someone I speak of would have to do quite a bit of work to build the easy-to-use tools, but isn’t the Raspberry Pi about education in programming? I don’t think the ‘tools’ are the desired end result, and I am fairly sure that the obscurity of the tools setup turns off quite a few budding programmers. Let me summarize: I think too many techies are in love with the command line and complicated setups because it is a badge of honor to get to the point where hello world can be compiled. I think that is rubbish in general. Learning tools would not really be necessary if proper tools were available. How many people need to be able to disassemble a circular saw in order to build a doghouse with it?

OK I am ready, fire at will…

The circular saw comparison to GUI vs CLI is really not quite accurate here. Training wheels on a mountain bike would be better. Sure, you can get most places, but you will never experience the fun or efficiency of the guys or gals who learned to ride without them. They can also access cool places you can’t. Thing is, if you never see those skilled folks in action you will always think your training wheels are just fine because that is all you have seen.

GUIs often require interaction with a user; command-line interfaces allow automated use of the tool.

I think the part of CL that intimidates you is the need to have all the parameters memorized to use them quickly. A *good* GUI will spoon feed you the applicable options. I think there is also a lack of good GUI’s because they require much cosmetic consideration and anticipation to provide every possible use-case a clever hacker may decide. Like your file-manager-gui can probably find files matching a pattern, but can it then manipulate them using another GUI/program and filter based on the output? Maybe, but probably not.

Compromise: write a batch file to compile / link / etc. with all the right flag options.

Offers the education of the CLI, ease of the GUI – and a fraction of the size.

The problem is that everyone who is able to make those tools already understands the command line, and how it is actually more powerful than a GUI (for these sorts of things). Why would they make the tools?

Making things easy isn’t against the linux “religion”, but someone has to do it. In the linux “religion”, if you think it should be done, you should do it, or pay someone to do it.

Mastery of the command line makes everything easy!

I’ve been looking at an RTOS that I can use with the ATs PICs etc and I think I’ve narrowed it down to two. FreeRTOS and ChibiOS. ChibiOS has a customized 32 bit Eclipse IDE (not a plugin) and FreeRTOS charges $67 for the documentation to learn if it’s worthwhile spending the time learning about it.

Any thoughts out there from people that have used both/either another? I’d like to use Eclipse but prefer to manage one install and add plugins to that.

The snag I ran into was attempting to compile an app that could run on both the RPi and the BeagleBone Black. Both targets are running wheezy (i.e., the RPi running raspbian based on wheezy). They are still stuck on glibc 2.13 and it is not easy to find an armhf toolchain that ships with glibc 2.13.

This one works for the RPi: https://github.com/raspberrypi/tools

But it was built without multiarch support , which causes problems when running on the BBB (see https://github.com/raspberrypi/tools/issues/42)

I guess I will have to bite the bullet and build the toolchain myself. I was trying to avoid doing so because I have no idea how difficult that is going to be.

http://crosstool-ng.org/#download_and_usage Might be helpful

Take a look at openembedded, it will make your crosscompiling issues easier :)

Errmmm.. rather than using password based authentication if you’re sick of entering it all the time, what about key based authentication? Scripting in a password feels… wrong…

it is and shouldn’t be necessary. it’s easy to install your key on the target system and remove if needed.

Here’s an article on how to configure your pi for passwordless ssh access:

https://www.raspberrypi.org/documentation/remote-access/ssh/passwordless.md

Once set up you can use scp to copy files from your host PC to your pi without having to enter your password

Good tip, Richard. I’ll try this.

https://linuxconfig.org/passwordless-ssh Similar but uses the ssh-copy-id command instead of having to use cat and redirects

arm-linux-gnueabihf-g++ -O3 -g3 -Wall -c -o -fPIC "hello.o" "hello.cpp"

should be

arm-linux-gnueabihf-g++ -O3 -g3 -Wall -c -fPIC -o "hello.o" "hello.cpp"

this should really be fixed in this article. Thanks for telling

Great post! I detailed on my blog how to do something like that for multiple architectures a while ago: http://blogs.nopcode.org/brainstorm/2016/07/26/cross-compiling-with-docker

sshpass is the devil even in development environments. Sure it makes things easy but setting up ssh keys requires little to no extra effort and is more secure. I’d encourage you not to advocate using sshpass.

ssh-keygen -f ~/.ssh/id_rsa -p ”

ssh @ sh -c “‘mkdir ~/.ssh && cat >> .ssh/authorized_keys'” < ~/.ssh/id_rsa.pub

THANK YOU SO MUCH!

from a bunch of us.

yup. Really good.

Thanks for taking the time.

A little thing — your test program compiler invocation had to be tweaked a little:

arm-linux-gnueabihf-g++ -O3 -g3 -Wall -c -fPIC -o "hello.o" "hello.cpp"

i.e., moving ‘-o’ so that it sits directly before the name of the object file output.

I typically place ‘-c’ before the input source, but apparently that’s not really necessary.

This is excellent. The next question is: how to access all the libraries that the Pi requires to do a build of an application. You could export them from the pi to a local directory? Or is there another way?

Thanks,

Chris

Using the Raspberry Pi toolchain from apt-get generated, in my case, files that don’t run. When executed, they throw a segmentation fault.

After searching about the problem, I came across this forum

https://raspberrypi.stackexchange.com/questions/58984/cross-compile-error-from-ubuntu-14-04-to-raspberry-zero-using-arm-linux-gnueabi

Where they explain the difference between compiling for hardwares with HardFloat.

Finally, the solution to my problem was:

1) Do not apt-get the raspberry toolchain. Download it from the official repository. https://github.com/raspberrypi/tools

2) To “install” it, just follow the topic INSTALL TOOLCHAIN at the official site of raspberry pi. https://www.raspberrypi.org/documentation/linux/kernel/building.md

This way I corrected the segfault and could go on.

Hope this helps others with issues as well =)

Pedro

I’m having trouble with the non-trivial case: I’m trying to compile an application that builds fine on the rPi but slowly .. 20 minutes or so. The application I want to build uses wxWidgets and many of its associated libraries. Is there a way to build anything that builds on the rPi on a Linux platform?

I tried adding the apt resources that the rPi uses to but the ‘apt update’ failed with various complaints and the libraries required to support the build weren’t accessible by that method.

The ‘helloWorld’ example works fine and with qemu, I can even run ‘helloWorld’ on my VM running Ubuntu.

Would it be possible to do some non-trivial case?

Thanks