The University of Wisconsin-Madison is doing some really cool stuff with flexible phototransistors. This is one of those developments that will subtly improve all our devices. Phototransistors are ubiquitous in our lives; it’s nearly impossible to walk anywhere without one collecting some of your photons.

The first obvious advantage of a flexible grid of phototransistors is the ability to fit the sensor array to any desired shape. For example, in a digital camera the optics are designed to focus a “round” picture onto a flat sensor. If the sensor surface were curved, we could capture more light without having to choose between discarding light, compensating in software, or living with various optical distortions.
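To put a rough number on the flat-sensor side of that trade-off: for an ideal lens imaging onto a flat sensor, image brightness falls off roughly as the fourth power of the cosine of the field angle (the textbook "cos⁴ law", or natural vignetting). The minimal Python sketch below only evaluates that approximation; it ignores real-lens effects such as mechanical vignetting and pupil aberrations, and it does not attempt to model how much a curved sensor would actually recover.

```python
import math


def relative_illumination(field_angle_deg: float) -> float:
    """Relative image-plane illumination of an ideal flat sensor, normalised to the
    on-axis value, using the textbook cos^4 (natural vignetting) approximation."""
    theta = math.radians(field_angle_deg)
    return math.cos(theta) ** 4


# Print how bright the edge of a flat sensor is compared with its centre
# for a few field angles.
for angle in (0, 10, 20, 30, 40):
    print(f"{angle:2d} deg off-axis: {relative_illumination(angle):.2f} of centre brightness")
```

At 30 degrees off-axis the approximation gives cos⁴(30°) = 0.5625, so the corners of a wide-angle flat-sensor image receive only a bit more than half the light of the centre under this idealised model.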

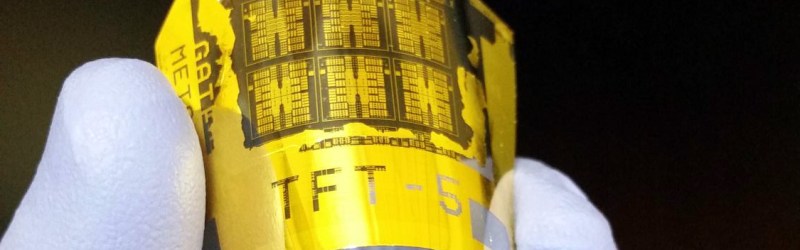

Another advantage of the University’s new manufacturing approach is the “flip-transfer” construction method they came up with. They claim that their method produces a vastly more sensitive device. The sensing silicon sits at the front of the assembly with no obstructing material in front of it, and the metal substrate it was built on before flipping is reflective, which further increases the sensitivity.
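Why a reflective back layer helps can be sketched with the Beer-Lambert law: a layer of thickness d with absorption coefficient α absorbs a fraction 1 − e^(−αd) of the light on one pass, and a perfect mirror behind it turns that into 1 − e^(−2αd). The Python sketch below is a simplified model, not the researchers' analysis; the 1 µm thickness is a hypothetical value, and α = 1000 cm⁻¹ is only an illustrative order of magnitude for silicon in the near infrared. Front-surface reflection and thin-film interference are ignored.

```python
import math


def absorbed_fraction(alpha_per_cm: float, thickness_um: float, reflectance: float = 0.0) -> float:
    """Fraction of incident photons absorbed in a semiconductor layer (Beer-Lambert law).

    reflectance is the back mirror's reflectivity: 0.0 means light reaching the back
    is lost, 1.0 means all of it is sent back for a second pass through the layer.
    Front-surface reflection and interference effects are ignored."""
    d_cm = thickness_um * 1e-4  # micrometres -> centimetres
    transmitted_once = math.exp(-alpha_per_cm * d_cm)
    first_pass = 1.0 - transmitted_once
    # Light reflected off the back gets a second attempt through the same thickness.
    second_pass = transmitted_once * reflectance * (1.0 - transmitted_once)
    return first_pass + second_pass


# Illustrative (assumed) values: alpha ~ 1e3 /cm, hypothetical 1 um thick layer.
ALPHA_PER_CM = 1e3
THICKNESS_UM = 1.0
print(f"no mirror:      {absorbed_fraction(ALPHA_PER_CM, THICKNESS_UM):.3f}")
print(f"perfect mirror: {absorbed_fraction(ALPHA_PER_CM, THICKNESS_UM, reflectance=1.0):.3f}")
```

For a weakly absorbing thin layer the mirror nearly doubles the absorbed fraction (about 0.095 versus 0.181 with these assumed numbers), which is the same "second chance" effect described later in the comments.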

All in all very cool, and we can’t wait for phone cameras with super-flat lenses, infinite focus, no low-light capture issues, and all the other cool stuff coming out of the labs these days.

You will never see a tiny cheap camera without low light capture issues. We just can not override physics. If very few photons are available, you cannot gather more of them in the short exposure needed to keep hand shake from blurring the photo: not with the tiny sensors of 1/3 inch or less that phones use today, and not at the insanely cheap prices phone makers are willing to pay.
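The physics being invoked here is photon shot noise: photon arrivals are Poisson-distributed, so even a perfect detector that records N photons has a signal-to-noise ratio of N/√N = √N, and N scales with pixel area times exposure time. The Python sketch below assumes a perfect detector (100% quantum efficiency, no read noise); the photon flux is a hypothetical dim-scene value chosen only for illustration.

```python
import math


def shot_noise_snr(photon_flux_per_um2_s: float, pixel_pitch_um: float, exposure_s: float) -> float:
    """SNR of an ideal pixel (100% QE, no read noise) limited only by photon shot noise.

    The photon count N is flux * pixel area * exposure time; for Poisson statistics
    the noise is sqrt(N), so the SNR is N / sqrt(N) = sqrt(N)."""
    photons = photon_flux_per_um2_s * pixel_pitch_um ** 2 * exposure_s
    return math.sqrt(photons)


# Hypothetical dim scene: 100 photons per square micrometre per second at the sensor.
FLUX = 100.0
for pitch_um in (1.0, 2.0, 4.0):
    snr = shot_noise_snr(FLUX, pitch_um, exposure_s=1 / 30)
    print(f"{pitch_um:.0f} um pixel, 1/30 s: SNR ~ {snr:.1f}")
```

Doubling the pixel pitch quadruples the collected photons and therefore only doubles the SNR; a more sensitive sensor can approach this √N bound but not exceed it.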

True, but with a more sensitive sensor the definition of ‘low light’ gets dimmer. More sensitive equipment gets you closer to the theoretical minimum. Combine a more sensitive sensor with the reflective substrate and you get more chances for photons to strike the sensor, much like the tapetum lucidum in animals. This does create an extra processing step to account for the increased path length, but horsepower is an ever-shrinking issue these days.

No additional processing power needed. You wouldn’t be able to directly measure the propagation delay over the distances involved in this design anyway.

No semiconductor detector can directly resolve the time delay between two photons entering the face of this device, one absorbed directly and one reflected and then absorbed, over such a short distance.

Both photon energies just add to the base charge.
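The size of that delay is easy to estimate: the worst-case extra path is one round trip through the layer, so the delay is 2·n·d/c. The Python sketch below uses a hypothetical 1 µm layer thickness and a refractive index of 3.6, an illustrative value for silicon in the near infrared; neither number comes from the article.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def round_trip_delay_s(thickness_m: float, refractive_index: float) -> float:
    """Extra travel time for a photon that crosses the layer, reflects off the back
    mirror and crosses again, compared with one absorbed right at the front surface."""
    return 2.0 * thickness_m * refractive_index / SPEED_OF_LIGHT_M_S


# Hypothetical 1 um layer, assumed refractive index of 3.6.
delay = round_trip_delay_s(1e-6, 3.6)
print(f"extra delay ~ {delay * 1e15:.0f} fs")
```

That works out to roughly 24 femtoseconds, many orders of magnitude shorter than the millisecond-scale exposure over which a pixel integrates its charge, so both photons simply contribute to the same accumulated signal.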

Without overriding physics, the camera in my phone, while much cheaper and smaller, outperforms my first full-size digital camera (Nikon E950), and I don’t think we’ve reached the limits yet.

>you will never see a tiny cheap camera without low light capture issues.

Never say never.

Advancements in biotech will allow us to cheaply adapt retinas as sensor arrays.

Do your retinas not have a low light capture issue? Weird.

20 menthol Kools will get that fixed*.

*surgical shine job not FDA approved.

Low-light performance is a sensitivity issue. We have grown accustomed to larger lenses. When you increase the sensitivity of the photon detector, you no longer need the large optical system. Have you ever heard of image intensifier tubes? They work under starlight conditions by increasing the sensitivity using a variety of methods. Photon sensitivity is the exact reason noise exists at low light levels. The energy in the photon is there, but it isn’t energetic enough to convert to an electron in the substrate. That’s not nature’s fault; it’s a human ingenuity problem. Look at all the tiny creatures that have very small eyes and still see wonderfully in the dark. It’s all about the quantum efficiency.
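The quantum-efficiency point can be made concrete with the standard simple pixel noise model: QE scales the number of signal electrons, and the noise floor is the combination of shot noise and the readout electronics' noise. The Python sketch below ignores dark current and fixed-pattern noise; the photon count, QE values and 2 e⁻ read noise are illustrative assumptions, not measured figures for any sensor.

```python
import math


def pixel_snr(photons: float, quantum_efficiency: float, read_noise_e: float) -> float:
    """Signal-to-noise ratio of one pixel.

    signal = QE * photons (in electrons); noise combines shot noise (sqrt of the
    signal) and read noise in quadrature. Dark current is ignored."""
    signal_e = quantum_efficiency * photons
    noise_e = math.sqrt(signal_e + read_noise_e ** 2)
    return signal_e / noise_e


# Illustrative dim-light case: 20 photons reach the pixel, 2 electrons of read noise.
PHOTONS = 20.0
for qe in (0.5, 0.9, 1.0):
    print(f"QE {qe:.1f}: SNR ~ {pixel_snr(PHOTONS, qe, read_noise_e=2.0):.2f}")
print(f"shot-noise limit: {math.sqrt(PHOTONS):.2f}")
```

Raising QE and lowering read noise moves the SNR toward √N, the shot-noise limit for the photons that actually arrived, but no detector improvement can push it past that limit.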

“you will never see a tiny cheap camera without low light capture issues. We just can not override physics.”

How perfectly arrogant.

Curved photodetectors will likely find applications in artificial eye prostheses, although this area is still in its infancy.

Think about a single-sensor 360 degree camera. The lens would probably look like a glass o-ring, or something.

That would just act like a cylindrical lens, and focus to a line.

Exactly.

Even if it’s flexible, you still can flex it into a spherical shape without creating distortion. Also, you’d still have to map the spherical image to a flat screen for most applications. Seems easier just to use a flat sensor, and use software to compensate for the distortions.

should be: “you still CAN’T flex it”

There is no reason to curve it into a sphere. The curvature just helps compensate for spherical aberration from the lens, allowing uniform focus across the sensor array, with the need for a f-theta lens arrangement.

I think you meant to say: “without the need for an f-theta lens arrangement”

Are you saying the aberration only occurs in one direction?

Aberrations increase as you get further away from the center of a lens. Using aspherical lenses helps. But the broad wavelength range of human vision requires a camera for visible wavelengths to use achromatic lenses (for high quality imaging), which are often two or more lenses of different refractive index bonded together or precisely spaced apart.
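The "two or more lenses bonded together" idea has a compact classical form: for a thin doublet in contact, primary colour correction requires φ₁/V₁ + φ₂/V₂ = 0 with φ₁ + φ₂ = φ, where φ is each element's optical power and V its Abbe number. The Python sketch below solves those two equations; it uses catalogue Abbe numbers for N-BK7 (about 64.17) and F2 (about 36.37) purely as example glasses, and it ignores spherical aberration, element thickness and everything else a real lens design has to balance.

```python
def achromat_powers(total_power_dpt: float, abbe_crown: float, abbe_flint: float) -> tuple[float, float]:
    """Split a thin cemented doublet's power between a crown and a flint element so the
    primary chromatic focal shift cancels: phi1/V1 + phi2/V2 = 0 and phi1 + phi2 = phi."""
    denom = abbe_crown - abbe_flint
    phi_crown = total_power_dpt * abbe_crown / denom   # positive (converging) element
    phi_flint = -total_power_dpt * abbe_flint / denom  # negative (diverging) element
    return phi_crown, phi_flint


# Example: a 100 mm focal length doublet (10 dioptres) from N-BK7 crown and F2 flint.
crown, flint = achromat_powers(10.0, abbe_crown=64.17, abbe_flint=36.37)
print(f"crown: {crown:+.2f} dpt (f = {1000 / crown:.1f} mm)")
print(f"flint: {flint:+.2f} dpt (f = {1000 / flint:.1f} mm)")
```

The crown element ends up stronger than the whole doublet and the flint element partly cancels it, which is why achromats need two glasses with clearly different dispersion.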

I’m curious whether anyone knows how the “flip-transfer” build of these sensors relates to the BSI (backside-illuminated) sensors in some of today’s cameras. It seems like the same concept, but maybe the manufacturing process is different.

It’s the same technique. The two differences here are:

The semiconductor substrate in this design is so thin that it can be slightly flexible. Also, a reflective layer is placed on one surface to give the photons that didn’t get absorbed another chance by sending them back through the active junction.

Could this by any chance benefit solar panels?