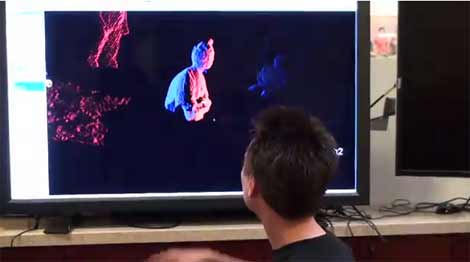

Willow Garage, the makers of the PR2 robot, have been playing with the Kinect. You might be a little tired of seeing every new project people are doing with it, but there’s something here we couldn’t help but point out. When we posted the video of the guy doing 3D rendering with the Kinect, many of the commenters speculated on how to get full environments into the computer. Those of you who said, “just use two, facing each other” seem to have been on to something. You can see that they are doing exactly that in the image above. The blue point cloud is one Kinect, the red cloud another. The Willow Garage crew are using this to do telemetry through the PR2 as well as some gestural controls. You can download the Openkinect stack for the Robot Operating System here. Be sure to check out the video after the break to see the PR2 being controlled via the Kinect as well as some nice demonstrations of how the Kinect is seeing the environment.

[via BotJunkie]
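For anyone wondering what "just use two, facing each other" means in practice: each Kinect reports points in its own frame, so combining them comes down to moving both clouds into one shared frame. The sketch below is only an illustration of that idea, not Willow Garage's code. The function names, the x-forward axis convention, and the hand-written poses are all assumptions; a real setup would get the poses from calibration.

```python
import math

def rotation_about_z(angle_rad):
    """3x3 rotation matrix for a turn about the vertical (z) axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def to_world(points, rotation, translation):
    """Apply p_world = R * p_sensor + t to every (x, y, z) point."""
    out = []
    for p in points:
        out.append(tuple(
            sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
            for i in range(3)
        ))
    return out

def merge_clouds(cloud_a, pose_a, cloud_b, pose_b):
    """Concatenate two clouds after moving each into the shared frame.

    Each pose is a (rotation, translation) pair. These are hypothetical,
    hand-picked values here, not calibrated ones.
    """
    return to_world(cloud_a, *pose_a) + to_world(cloud_b, *pose_b)

# Assumed layout: sensor A at the origin looking along +x, sensor B
# 4 m away, turned 180 degrees so it looks back at A.
pose_a = (rotation_about_z(0.0), (0.0, 0.0, 0.0))
pose_b = (rotation_about_z(math.pi), (4.0, 0.0, 0.0))

# The same physical spot: 1 m in front of A, which is 3 m in front of B.
merged = merge_clouds([(1.0, 0.0, 0.0)], pose_a, [(3.0, 0.0, 0.0)], pose_b)
print(merged)
```

Both entries of `merged` land on (nearly) the same world coordinate, which is what lets the blue and red clouds line up into one scene.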

That’s absolutely amazing! Makes me want two now.

I remember that there was a big debate about using two Kinects and whether their sensors would interfere with each other. I couldn’t find any detailed info in Willow Garage’s post. Does anyone know whether this was a non-issue and, if not, how they dealt with it?

Looks like others have been doing it from whatever angle they want:

http://vimeo.com/17107669

Yep, same question…

Here Radu is quoted as saying:

“Judging from a few basic tests with two Kinects, I can’t seem to get them to interfere with each other to the point where the data is unusable.”

http://gilotopia.blogspot.com/2010/11/how-does-kinect-really-work.html

Heh… looks like I’m gonna be getting two of ’em…

Seems even 2 is not enough. Since they act like a point source with no reflections, you need more than 2 to cover an object completely, I guess.
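The point-source argument can be checked with a little geometry. The sketch below assumes a deliberately simplified case: a round object (a cylinder seen from above) with sensors evenly spaced around it at equal distance. From distance d, one sensor sees an arc of half-angle acos(r/d) on a cylinder of radius r, which is always less than 90°, so two sensors can never close the loop. The function names and the example numbers are made up for illustration.

```python
import math

def visible_half_angle(object_radius, sensor_distance):
    """Half-angle (radians) of the arc of a cylinder that one sensor sees.

    The line of sight is tangent to the circle at acos(r / d) from the
    direction pointing at the sensor.
    """
    if sensor_distance <= object_radius:
        raise ValueError("sensor must be outside the object")
    return math.acos(object_radius / sensor_distance)

def perimeter_coverage(num_sensors, object_radius, sensor_distance):
    """Fraction of the circumference seen by evenly spaced sensors.

    Assumes all sensors sit at the same distance and ignores noise at
    grazing angles, so treat the result as an upper bound.
    """
    half = visible_half_angle(object_radius, sensor_distance)
    return min(1.0, num_sensors * half / math.pi)

# Hypothetical numbers: a 0.25 m radius object, sensors 2 m from its axis.
for n in (1, 2, 3, 4):
    print(n, round(perimeter_coverage(n, 0.25, 2.0), 3))
```

Under these assumptions two sensors always leave a sliver unseen, while three are enough once they sit at least two radii from the axis. Real objects with concavities will need more.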

Most impressive! It’s amazing that Microsoft stated that they would take legal action against anyone using the Kinect for “hacking” rather than embrace people’s creativity in doing amazing things with gaming hardware. I guess that’s why they changed their tune later on and saw this as an advertising opportunity.

It makes sense that they wouldn’t interfere with each other if they’re tracking /changes/ in the dot pattern, rather than absolute positions (which would really be prohibitively difficult, if you think about it). Of course, given enough of them, you could probably get such a dense point cloud (along with tons of stray IR light bouncing off stuff) that the data would be useless. But, since you only need three, maybe four, to get a complete scene, there’s no reason to even approach that limit (unless you can afford an army of robots, each with three Kinects. And if that’s the case, I really want to be your friend/employee! ;).

Depending on what you’re tracking, the better solution would probably be to build some intelligence and persistence into your recognition algorithm. http://grail.cs.washington.edu/projects/videoenhancement/videoEnhancement.htm provides a good example of how a system like this works.

Breaking the body into individual point clouds at the joints and predicting unknown points based on previously captured data should yield a very high degree of accuracy when coupled with dual depth cameras. You could also do some enhancements based on the knowledge that the unseen points must be somewhere in the shadow of the captured images.

Wouldn’t 4 Kinect sensors in each corner of a square room allow for a complete 3D map of the room and its contents? What if you changed the IR wavelengths of each emitter and sensor so each sensor would not interfere with the others? Just sayin…

Yea, I wonder if they need to be 180 degrees offset from each other or if 90 degrees or less will work too — it seems like their point clouds might interfere.

Seems like two Kinects seeing each other’s pattern don’t fail, their output just gets slightly noisier.

If high accuracy, max framerate, and 360° operation are required, seems like the way to go is polarization, like so:

0°: Horizontal polarization

90°: Vertical polarization

180°: Horizontal polarization

270°: Vertical polarization

Each Kinect wouldn’t be able to see its two neighbors’ dot patterns because of the differing polarization. And Kinects 180° apart won’t see each other’s dot patterns regardless of polarization, unless there are mirrors present.

You’d need to put a polarizing filter over the camera. The laser is already polarized, and to alter that you’d need to rotate the laser diode in the projector module (without rotating the entire module or pattern lens).

@Chris:

Polarized light would /only/ work on mirrors/shiny things, because most things scatter and depolarize light. That’s why you need a “silver” screen for cross-polarized 3-D movies. It doesn’t work on just a blank wall, for instance. It might /slightly dim/ the other Kinects’ dots, but it wouldn’t filter them out completely on most things.

Also, what makes you think there’s a laser in the Kinect? Even if there is (which I doubt) why would it automatically be polarized? Lasers are /coherent/ but not (necessarily) polarized.

One more thing: you really only need three for a more-or-less complete view of the scene/object (and they did pretty well with two in the article, going by the above screen-shot).

(Oops, I forgot about lenticular screens…)

@Amos It’s rather obvious that there’s a laser in there. How would you get uniform dots of uniform size over a large area otherwise?

I already said that a while ago, and now the ‘scene’ is also starting to say there most probably is one.

@Amos: I thought reflections maintained at least a majority of the original polarization? Maybe not enough for 3D movies, but enough to reduce the interference on the Kinect.
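How much the filter helps depends on how much polarization survives the bounce, and that can be put into numbers. The sketch below assumes an ideal linear polarizer over the camera and treats the reflected light as a mix: the part that stays polarized follows Malus's law (cos² of the angle to the filter), and the depolarized part passes at one half. The `polarized_fraction` values are hypothetical, not measurements of any real surface or of the Kinect.

```python
import math

def transmitted_fraction(polarized_fraction, analyzer_angle_deg):
    """Fraction of partially polarized light passing an ideal polarizer.

    The polarized part follows Malus's law, cos^2(theta).
    The depolarized remainder passes at 1/2 whatever the angle.
    """
    theta = math.radians(analyzer_angle_deg)
    polarized = polarized_fraction * math.cos(theta) ** 2
    depolarized = (1.0 - polarized_fraction) * 0.5
    return polarized + depolarized

def neighbor_to_own_ratio(polarized_fraction):
    """Brightness of a cross-polarized neighbor's dots relative to our own."""
    own = transmitted_fraction(polarized_fraction, 0.0)
    neighbor = transmitted_fraction(polarized_fraction, 90.0)
    return neighbor / own

# Hypothetical surfaces, from fully scattering (0.0) to mirror-like (1.0).
for p in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(p, round(neighbor_to_own_ratio(p), 3))
```

With no polarization retained, the neighbor's dots come through exactly as bright as your own, which is Amos's blank-wall case. With half retained they drop to about a third, so under these assumptions the filter dims the interference rather than removing it.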

As for whether it’s an actual laser in there or not, I’m going off the preferred embodiment of the device, as described in the patent filed by the company who originally designed the depth sensing system. It describes the projector as an IR laser, and a one-piece compound acrylic lens that forms the dots. Although there are also some alternate embodiments which use IR LEDs and other dot schemes (gotta cover as many bases in a patent as you can).

And according to Sam’s Laser FAQ and other sources, laser diodes are generally linearly polarized.

Aw. I expected Willow Garage, one of the best applicators of OpenCV around, would have compared CV’s stereoscopy function against Kinect.

If the Kinect IR laser is pulsed, multiple Kinects should rarely interfere with each other. Any pulse-frequency aliasing should quickly subside due to async clock drift, and the internal Kinect firmware could discard anomalous readings caused by periodic inter-Kinect interference.

Pulsing the laser would also keep it cooler and allow brighter dots.

P.S. The laser pulses would have to be genlocked to the IR camera, if indeed the Kinect uses a pulsed laser.
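The clock-drift idea is easy to try out on paper. The sketch below counts how often the pulses of two free-running projectors overlap in time. It rests entirely on the "if" above: whether the Kinect pulses its laser at all is not established here, and the periods and pulse width are invented numbers, not Kinect specifications.

```python
def overlap_fraction(period_a_us, period_b_us, pulse_us, num_pulses):
    """Fraction of A's pulses that overlap in time with a pulse from B.

    Both devices emit pulses of the same width, and B's first pulse
    starts at t = 0. All times are whole microseconds so the arithmetic
    is exact. Assumes pulse_us is less than half of period_b_us.
    """
    hits = 0
    for k in range(num_pulses):
        # Start of A's k-th pulse, measured from B's most recent pulse start.
        offset = (k * period_a_us) % period_b_us
        # Overlap if A starts while B is still on, or if B's next pulse
        # starts before A has finished.
        if offset < pulse_us or offset > period_b_us - pulse_us:
            hits += 1
    return hits / num_pulses

# Hypothetical: ~30 Hz frames, 10% duty cycle, clocks differing by 10 us.
drifting = overlap_fraction(33333, 33343, 3333, 33343)
# Worst case: identical clocks that happen to start in phase.
locked = overlap_fraction(33333, 33333, 3333, 1000)
print(round(drifting, 3), locked)
```

With these made-up numbers the drifting pair collides on roughly one pulse in five (about twice the duty cycle), while perfectly matched clocks that start in phase collide every time. So drift breaks up a permanent lock, but shorter pulses are what would make collisions rare.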

KIKAIDER!