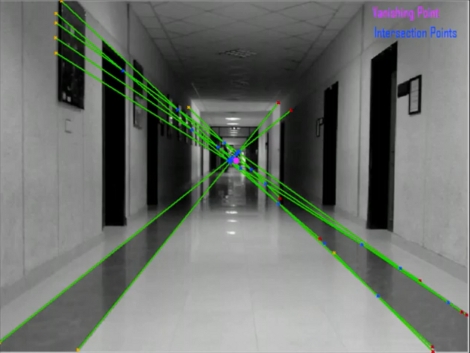

Students at the National University of Computer and Emerging Sciences in Pakistan have been working on a robot to assist the visually impaired. It looks pretty simple, just a mobile base that carries a laptop and a webcam. The bot doesn’t have a map of its environment, but instead uses vanishing point guidance. As you can see in the image above, each captured frame is analyzed for indicators of perspective, which can be extrapolated all the way to the vanishing point where the green lines above intersect. Here it’s using stripes on the floor, as well as the corners where the walls meet the ceiling to establish these lines. From the video after the break you can see that this method works, and perhaps with a little bit of averaging they could get the bot to drive straight with less zig-zagging.
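To make the geometry concrete, here is a minimal sketch of how a vanishing point could be estimated once line segments (floor stripes, wall-ceiling edges) have been detected in a frame. It is not the students' implementation: the function names `vanishing_point` and `steering_offset`, the 640x480 frame size, and the sample segments are all assumptions made for illustration. The sketch finds the point that is closest, in the least-squares sense, to all of the extended lines.

```python
import math

def vanishing_point(segments):
    """Least-squares intersection of the infinite lines through each segment.

    segments: list of ((x1, y1), (x2, y2)) pixel coordinates (hypothetical input,
    e.g. the output of some line detector).
    Returns (x, y), or None if the lines are all parallel.
    """
    saa = sab = sbb = sac = sbc = 0.0
    for (x1, y1), (x2, y2) in segments:
        dx, dy = x2 - x1, y2 - y1
        length = math.hypot(dx, dy)
        if length == 0:
            continue  # skip degenerate segments
        # Unit normal (a, b) of the line, so that a*x + b*y = c on the line.
        a, b = -dy / length, dx / length
        c = a * x1 + b * y1
        saa += a * a
        sab += a * b
        sbb += b * b
        sac += a * c
        sbc += b * c
    # Solve the 2x2 normal equations with Cramer's rule.
    det = saa * sbb - sab * sab
    if abs(det) < 1e-9:
        return None
    x = (sac * sbb - sab * sbc) / det
    y = (saa * sbc - sab * sac) / det
    return x, y

def steering_offset(vp_x, frame_width):
    """Horizontal offset of the vanishing point from image centre, in [-1, 1]."""
    half = frame_width / 2.0
    return (vp_x - half) / half

# Illustrative segments in an assumed 640x480 frame, all aimed at the centre.
segments = [
    ((0, 480), (160, 360)),    # left floor edge
    ((640, 480), (480, 360)),  # right floor edge
    ((0, 0), (160, 120)),      # left wall-ceiling edge
]
vp = vanishing_point(segments)
print(vp, steering_offset(vp[0], 640))
```

A vanishing point to the left or right of the image centre gives a negative or positive offset, which a robot could use as its steering error.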

Similar work on vanishing point navigation is being done at the University of Minnesota. [Pratap R. Tokekar’s] robot can also be seen after the break, zipping along the corridor and even making turns when it runs out of hallway.

NUCES Pakistan visually impaired guide robot:

[youtube=http://www.youtube.com/watch?v=Z2H9LhJ46Hw&w=470]

University of Minnesota offering:

[youtube=http://www.youtube.com/watch?v=nb0VpSYtJ_Y&w=470]

That is the kind of thing that is both absolutely brilliant and extremely obvious once you see it in practice.

Pretty nifty, but I suspect it's only useful for relatively empty hallways, where the visually impaired really don’t have much trouble navigating anyway. (I’ve recently been working on assistive technology for the blind.) In a small room, or anywhere with furniture, this thing is going to need to fall back on other, more robust navigation methods.

One thing that it seems to do better than other sensing methods is keep a relatively straight and centered path. Most other methods would either hug the wall or have a more pronounced zig-zag.

I think a much better application would be self-guided delivery robots for factories and offices.

Similar idea from September. The ICRA 2011 paper is on their website.

http://www.youtube.com/watch?v=7XyddRwP_KA&feature=player_embedded

http://www.cs.cornell.edu/~asaxena/MAV/

Thank you for your comments. It really means a lot.

Bob D, you are right about that. It won’t work in a small room, as the basic concept behind this is to find that imaginary vanishing point, which can only be found in long, straight footpaths, roads, walking tracks, etc.

The mobile robot was built to test the algorithm, which can be further enhanced in the future. Once the algorithm is thoroughly tested, it will be implemented as a standalone device that uses a blind person’s other senses to guide him.

It certainly cannot avoid obstacles at the moment. However, if the path is partially obstructed (in this case by people walking), it is able to build the vanishing lines from the available information by joining the discontinuities. That is why it worked perfectly fine with people walking in the path.

The things aren’t a perfect solution, for example…

http://www.youtube.com/watch?v=4iWvedIhWjM

WWRD? (What would the robot do?)

“The National University of Computer and Emerging Sciences in Pakistan”

Lol – emerging “sciences” in Pakistan – what an oxymoron.

Lol Hiudinea …

@ Realcomix

It is a point though: this robot would be a sucker for optical illusions like a painted tunnel, and God forbid they have a plate glass window at the end of the hall! Maybe the robot should have an ultrasonic sensor added so it doesn’t run off sheer cliffs.

@vonskippy: media hyped?

As an alternative to averaging the visual input to minimize the zig-zagging, would adding a second camera serve the same purpose? Stereoscopic vision would give two slightly different perspectives for the software to sample…

Yes or no?

The zig-zagging can be minimized in many different ways. Averaging and stereoscopic vision would both do just fine.

Basically, the zig-zagging is caused by execution delays. After the robot makes a decision, the next one arrives about 200 ms later. Until then, the robot keeps moving in the direction computed from the last frame. Now, about minimizing this:

1) External hardware can be added to control the speed of the DC motors at different stages. This would give the robot enough time for decision making and hence minimize the zig-zagging.

2) The algorithm can be simplified. With less computation the delays will decrease, which in turn minimizes the zig-zagging.
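For the averaging option mentioned above, one simple possibility is an exponential moving average over the per-frame steering estimate. This is only a sketch under assumptions: the class name `SmoothedHeading` and the smoothing factor `alpha` are made up for illustration, and the robot's actual code is not shown in the post.

```python
class SmoothedHeading:
    """Exponential moving average of a noisy per-frame steering estimate.

    alpha close to 1 trusts the newest frame; alpha close to 0 smooths heavily
    but reacts more slowly. The default of 0.3 is an arbitrary assumption.
    """

    def __init__(self, alpha=0.3):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.value = None  # no estimate until the first frame arrives

    def update(self, measurement):
        if self.value is None:
            # First frame: nothing to average with yet.
            self.value = measurement
        else:
            self.value = self.alpha * measurement + (1.0 - self.alpha) * self.value
        return self.value

# Hypothetical noisy offsets from successive frames, roughly 200 ms apart.
smoother = SmoothedHeading(alpha=0.3)
for raw in [0.4, -0.3, 0.5, -0.2, 0.3]:
    print(round(smoother.update(raw), 3))
```

Heavier smoothing trades zig-zag for lag, so with a 200 ms decision interval the factor would need tuning on the real robot.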

I definitely think their control algorithm needs to be tweaked. It seems to me like they are only using proportional feedback in their controller. Much like controlling an inverted pendulum, including the derivative or integral of the error could reduce the oscillatory motion of the robot. Also, since this works in discrete time, increasing the sample rate (or minimizing the time between decisions) will allow the controller to be more responsive to the error.

Still really cool though!
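As a rough illustration of the derivative idea, here is a minimal discrete-time PD controller sketch. Everything in it is an assumption: the class name `PDController`, the gains, and the use of a normalized steering error are invented for the example, and the 0.2 s step simply mirrors the roughly 200 ms decision interval mentioned earlier in the thread.

```python
class PDController:
    """Discrete-time proportional-derivative controller (illustrative sketch)."""

    def __init__(self, kp, kd):
        self.kp = kp              # proportional gain (assumed value, needs tuning)
        self.kd = kd              # derivative gain (assumed value, needs tuning)
        self.prev_error = None    # no previous sample before the first update

    def update(self, error, dt):
        """Return a steering command for the current error.

        error: signed offset of the vanishing point from the image centre.
        dt: time since the previous decision, in seconds.
        """
        if dt <= 0:
            raise ValueError("dt must be positive")
        if self.prev_error is None:
            derivative = 0.0  # cannot estimate a rate from a single sample
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.kd * derivative

# Hypothetical run: the error shrinks, so the derivative term damps the turn.
controller = PDController(kp=1.0, kd=0.1)
for err in [0.5, 0.3, 0.1]:
    print(round(controller.update(err, dt=0.2), 3))
```

When the error is already shrinking, the derivative term is negative and reduces the command, which is what would counter the overshoot behind the zig-zag.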

@moe

Probably there is no feedback or speed-control mechanism implemented on the robot yet, so after making a decision the robot turns more than required, which shows up as the zig-zagging behaviour. Once that is implemented and the turning is done through PCM, which is actually no big deal, it will follow the path very smoothly and accurately. As far as the objective of the project is concerned, though, amazing work done by masad. Thumbs up!

Great work, well done.