Many parts of a computer we take for granted today – the mouse, hypertext, video conferencing, and word processing – were invented by one team of researchers at the Stanford Research Institute in the late 60s. When the brains behind the operation, [Douglas Engelbart], showed this to 1000 computer researchers, the demo became known as The Mother of all Demos. Luckily, you can check out this demo in its entirety on YouTube.

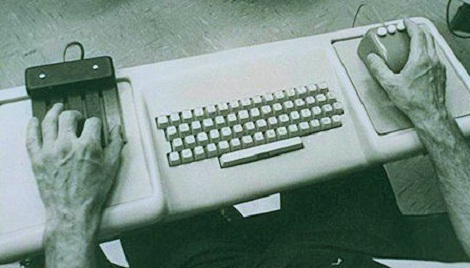

Even though [Engelbart]’s demo looks incredibly dated today, it was revolutionary at the time. This was the first demonstration of the computer mouse (side note: they call the cursor a ‘bug’), a chorded keyboard, and so many other technologies we take for granted today. During the presentation, [Engelbart] was connected to the SDS 940 computer via the on-line system 30 miles away from Stanford. Yes, this predates ARPANET, which is normally cited as the precursor to the Internet.

On a more philosophical note, [Engelbart] began his demo with the question, “If, in your office, you as an intellectual worker were supplied with a computer display, backed up by a computer that was alive for you all day and was instantly responsive to every action you had, how much value could you derive from that?” In the 44 years since this Mother of all Demos, we’ve gotten to the point where we already have a computer on our desks all day that is able to do any task imaginable, and it has certainly improved our quality of life.

There are a few great resources covering the Mother of all Demos, including the Douglas Engelbart Institute’s history page and the Stanford Mousesite. Looking back, it’s not only amazing how far we’ve come, but also how little has actually changed.

“was instantly responsive to every action you had”

Nope we’re not there yet.

Or maybe the definition of instantaneous went from a minute to half a second…

:)

Relatively speaking, we’re close enough to instantaneous. Exponentially closer than them at least.

Once you have an established connection, you can get latency below 10 ms. Most people can’t tell the difference between 3 ms and instantaneous.

A while back I read a study about perception of input lag. They set up some dumb, repetitive GUI task, something like clicking a series of buttons that moved around. When a test subject clicked a button, it would flash. The delay between click and flash varied with each trial. Even with a fairly significant delay, people eventually started perceiving the flash as instantaneous (“it was lagging at first, but eventually it stopped”).

The interesting thing is what happens when the system suddenly goes from a large delay to no delay: for the next few clicks, the user perceives the flash as happening *before* the click. So not only is perception of the passage of time subjective, temporal ordering of events can be subjective, absent any means of verifying with a computer.

Basically, my point is that unless we’re talking about scientific, machine-verified contexts where we have some actual reason to both measure and care about small differences, it’s silly to be pedantic over whether a few milliseconds really counts as instantaneous.

128 k memory

Try a gaming mouse. It’s within a few nanoseconds.

Seriously dude? Do you actually believe that? Did you buy a monster mouse perchance?

But…

They have SPEED HOLES!!!!

Interesting how this idea back then has become the “new” thing today with cloud computing.

Yep. If you just noticed that, just you wait for the surprise coming down the pipe. In (n) years they will figure out that redundancy, local storage, and pushing the work off to cheap user-owned machines will be more effective and we will be back to where we started.

Just like how TABLETS are new, but I have had one since 1996.

Dauphin DTR-1 was the first tablet, everything else is simply a copy.

Viva Koalapad!

Purely awesome. Many thanks good sir for your contribution to our society.

I still dream of the Tektronix 4051. A truly badass late 70’s machine compared to the equivalent IBM, and a green vector display that could cause epilepsy in lesser mortals.

1024×780 graphics, followed by 4000×3000 graphics – quite rare even today. It had a few drawbacks, but at a slightly lower price point, they would have dominated the market.

Nobody foresaw the power of VisiCalc on the Apple II, or mail-merge with WordStar on the CP/M systems. Except of course, Microsoft, who was the first to churn a significant portion of their software revenue into advertising.

If Tektronix had gone after the business market instead of seeing the 405x as a lab toy…

Those Tek displays were the best part of the original Battlestar Galactica.

These guys really were brilliant to see the potential of the computer like they did.

At school, I wrote a graphics package for the 4051. We had one in the computer center. Very few people understood what it was, and the storage screen was enough to keep most casual users away. X and Y scroll wheels for the cursor!

The dual displays of our new CDC Cyber 74 kinda overshadowed the poor Tek…which sulked in a corner of the terminal room.

Small World. Control Data, Model 73. Not exactly the King of Kings, but dual core before dual core existed. Probably the only system where space war wasn’t a game. 60 bit fixed point math and chain smoking programmers driving crappy second hand Fiats and VWs they couldn’t afford and wearing way too much brown. Dead Start and the Moody Blues, a great way to kick off your weekend.

I found a copy of the video with a better resolution at: http://sloan.stanford.edu/mousesite/1968Demo.html

Here’s a higher resolution video of the demo: http://sloan.stanford.edu/mousesite/1968Demo.html

… or you could go to archive.org and grab the three reels of the demonstration in some decent formats:

http://archive.org/details/XD300-23_68HighlightsAResearchCntAugHumanIntellect

http://archive.org/details/XD300-24_68HighlightsAResearchCntAugHumanIntellect

http://archive.org/details/XD300-25_68HighlightsAResearchCntAugHumanIntellect

;)

1875 Remington Sholes QWERTY. Some things just get stuck.

Ultimate was an apt statement. Kept wondering whether I was watching the Techno-Nostradamus or the birth of everything we use today. 1968, Wow!

Makes me happy I was born after such dinosaurs were ancient history…the lag on that thing is worse than most Microsoft products, and I’m sure it was optimized to the last byte.

Yeah it’s 2012, but uhm – now try drawing on any touch tablet.. oops :/

I must have been two months old when this actually happened. ;)

It’s a real shame that Engelbart was marginalized for such a long time. Sure, he was finally recognized and awarded within the past ten years. But he basically had to sit out the latter half of the 70’s up through 1995 before people started to fully realize the potential of his vision. Yes, we had HyperCard on older Macs (did Apple steal everything?) but HyperCard was little better than a toy compared to the Engelbart vision of shared human intellect.

Both he and Ted Nelson were considered to be hippie cranks; and by not monetizing their ideas, they didn’t win any points in the business world, either. All of these guys were brilliant, very brilliant… but they lacked the ability to put the rubber on the road.

Other people, perhaps not quite as brilliant, like Stewart Brand, Steve Jobs and Bill Gates, managed to collect, package and market the works of much smarter people who simply invented to invent as dreamers do.

These marketing people will be remembered long after the contributions of the real doers and makers are forgotten. But the ones who made it actually work are just as valuable, if not more so.

And like all the branders before and since, they will be considered the real artists. Even the great Leonardo “liberated” many of his inventions from Persian and Chinese predecessors.

This is not quite correct. Ted tried to make money off hypertext many times — but fairly unsuccessfully. At one point he was courting the CIA (who wanted a system for storing and browsing internal records and thought a hypertext system might be useful, but who just sort of led him on for a few years while he wrote proposals), and another time he signed away large portions of his IP (which is arguably spurious — a trademark on several names and a handful of patents that may or may not be defensible — but that he values quite a lot) to Autodesk (albeit indirectly) in the hopes that they would manage to make a proper product (whereas because of development group politics, it became two or three half-completed demos, now released as open source as Udanax Green and Udanax Gold). There was also the time when he courted Microsoft in the early 90s… up until Bill Gates came to him and said “I understand hypertext now! It’s CDROMs!”

Ted’s neither a Bill Gates figure nor a Richard Stallman figure. Maybe some people thought he was an RMS in the 60s, when he was first floating this idea.

I don’t know enough about Engelbart to say anything either way about him, aside from his fixation in the 60s and 70s on what we today might categorize as ‘social media’ and ‘online collaboration’. But, he wasn’t alone in that (Alan Kay thought that’d be the killer app for his hypothetical tablet, the Dynabook, and Vallee did some experiments on the Arpanet in the early days into the effects of long-distance computer-aided project collaboration on psi of all things).

His ideas of knowledge management are pretty well realized today — check out FreeMind and TheBrain!

It’s spelled “Engelbart”, not “Englebart”.