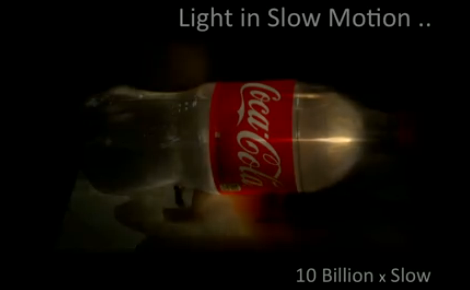

Femto-photography is a term that derives its name from the metric scale’s prefix for one-quadrillionth. When combined with photography this division of time is small enough to see groups of light photons moving. The effect is jaw-dropping. The image seen above shows a ‘light bullet’ travelling through a water-filled soda bottle. It’s part of [Ramesh Raskar’s] TED talk on imaging at 1 trillion frames per second.
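For a sense of scale, here is a quick back-of-the-envelope sketch of what 1 trillion frames per second means. The function names and the refractive index of water (roughly 1.33) are assumptions added for illustration; only the frame rate comes from the talk.

```python
# Rough arithmetic only; the 1e12 frames-per-second figure is the one quoted above.
C_VACUUM_M_PER_S = 299_792_458   # speed of light in vacuum (exact by SI definition)
WATER_INDEX = 1.33               # approximate refractive index of water (assumption)


def frame_interval_s(frames_per_second):
    """Time between consecutive frames, in seconds."""
    return 1.0 / frames_per_second


def light_travel_mm(interval_s, refractive_index=1.0):
    """Distance light covers in interval_s inside a medium, in millimetres."""
    return C_VACUUM_M_PER_S / refractive_index * interval_s * 1000.0


interval = frame_interval_s(1e12)
print(f"frame interval: {interval * 1e12:.1f} ps = {interval * 1e15:.0f} fs")
print(f"light per frame in vacuum: {light_travel_mm(interval):.3f} mm")
print(f"light per frame in water:  {light_travel_mm(interval, WATER_INDEX):.3f} mm")
```

In other words, each frame is one picosecond (1,000 femtoseconds) apart, and light moves only a fraction of a millimetre between frames.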

The video is something of a lie. We’re not seeing one singular event, but rather a myriad of photographs of discrete events that have been stitched together into a video. But that doesn’t diminish the spectacular ability of the camera to achieve such a minuscule exposure time. In fact, that ability combined with fancy code can do another really amazing thing. It can take a photograph around a corner. A laser pulses light bullets just like the image above, but the beam is bounced off of a surface and the camera captures what light ‘echoes’ back. A computer can assemble this and build a representation of what is beyond the camera’s line of sight.
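The ‘echo’ idea rests on plain time-of-flight geometry: a longer bounce path means a later arrival. The sketch below is only that arithmetic, not the reconstruction code used in the talk; the point coordinates and function names are made up for illustration.

```python
import math

C_MM_PER_PS = 0.299792458  # speed of light in vacuum, millimetres per picosecond


def path_length_mm(*points):
    """Total length of a bounce path through the given 3D points (in mm)."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def arrival_time_ps(*points):
    """Time light needs to travel the whole bounce path, in picoseconds."""
    return path_length_mm(*points) / C_MM_PER_PS


# Hypothetical layout (mm): laser spot on the wall, a hidden object,
# and the patch of wall the camera is watching.
laser_spot = (0.0, 0.0, 0.0)
hidden_object = (300.0, 400.0, 0.0)
watched_patch = (600.0, 0.0, 0.0)

length = path_length_mm(laser_spot, hidden_object, watched_patch)
delay = arrival_time_ps(laser_spot, hidden_object, watched_patch)
print(f"bounce path: {length:.0f} mm, arrival after {delay:.0f} ps")
```

With picosecond timing, a path difference of well under a millimetre shows up as a measurable delay, which is what gives the computer something to work with.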

You’ll find the entire talk embedded after the break.

[ted id=1520]

[Thanks Pim]

“embedded after the break” What fucking break? The link is in line with the text body.

The “Break” is for RSS. They add a break to control what shows up in RSS readers. I use Mac Mail, so in order to see the video I have to follow the RSS address to hackaday.com (this page). It ensures that they don’t lose too much traffic to their website because of RSS.

Funny you should mention “break” and “rss” in the same note: Hackaday’s RSS feed has been broken since 10-11am this morning (eastern time). It broke after it was updated with the Jeri Ellsworth post. Every validator I’ve checked it against says it fails on more than one section…

I saw your email… Looking into it!

Working fine for me in Google Reader on my computer and phone. Can anyone else verify?

Besides RSS, when I visit Hackaday, and an article says ‘after the break’, it always seems to imply there is something further to read/see if I click on the article link rather than reading it on the home page. Personally, I think the term is appropriate.

I saw this a while back, but I don’t recall where. The problem is the ‘camera’ only captures one line of the image per exposure, so dozens or hundreds of repeated pulses must be fired in order to compose a two-dimensional image. Even so, the mere fact that they can capture (even in this manner) light in transit is quite amazing.

Drat, the reply should cancel after posting one reply. :s

Oh My Gosh, this is wonderful! If someone hasn’t already made plans to watch a wave interference pattern develop, I would be surprised. This could easily be responsible for a few string theory breakthroughs too. The end-result implications are mind-boggling. Put one of these in over at CERN.

“watch a wave interference pattern develop”

Uhm, they’ve already done that.

The individual particles initially seem to hit random spots (when only a few particles have been fired), but as they stack on the pattern starts to be visible (lots of particles hitting the peaks, very few particles hitting the nulls).

Wiki has pics but I don’t remember on what article.
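The build-up described above is easy to mimic with a toy simulation. This is a minimal sketch assuming an idealised cos² fringe pattern (no single-slit envelope); the function names, bin count and screen width are all invented for illustration.

```python
import math
import random


def double_slit_intensity(x):
    """Idealised relative intensity at screen position x: peaks at integers."""
    return math.cos(math.pi * x) ** 2


def fire_particles(n, rng, half_width=2.0):
    """Rejection-sample n hit positions distributed like the intensity."""
    hits = []
    while len(hits) < n:
        x = rng.uniform(-half_width, half_width)
        if rng.random() < double_slit_intensity(x):
            hits.append(x)
    return hits


def histogram(hits, bins=16, half_width=2.0):
    """Count hits per screen bin across [-half_width, half_width]."""
    counts = [0] * bins
    for x in hits:
        i = min(int((x + half_width) / (2 * half_width) * bins), bins - 1)
        counts[i] += 1
    return counts


rng = random.Random(0)
for n in (20, 20000):
    counts = histogram(fire_particles(n, rng))
    scale = max(counts) or 1
    print(f"{n} particles")
    for c in counts:
        print("#" * round(30 * c / scale))
```

With 20 particles the bars look like noise; with 20,000 the peaks and nulls are unmistakable, even though every hit was placed one at a time.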

But not in slow motion. They’ve only been able to see it develop one electron or photon at a time; we are talking about a real-time flash slowed down to manageable speeds. MAYBE there is more visible in slow motion that we can’t normally see. Believe me, this is big news.

I don’t see how it can make a difference, the photons that will hit the camera sensor are the same ones that would’ve hit the film.

Controlling the rate at the sensor or at the emitter is algebraically equivalent.

You take no joy in scientific experiments, do you? You just sap the fun out of it.

My science joy-taking formula:

int joy_taken = (result_is_new || result_looks_shiny);

The experiment you propose doesn’t meet the criteria: we already know what the result is going to be, and the pictures (http://bit.ly/MD3sfv) quite frankly look boring.

An experiment I’d take joy in?

Say, we take the camera to where this is set up: http://hackaday.com/2012/08/16/toorcamp-hackerbot-labs-giant-faa-approved-laser/

Hmm…

No, wait, better: Rug it up and take it to this guy’s place: http://hackaday.com/2012/08/16/toorcamp-hackerbot-labs-giant-faa-approved-laser/

Now we’re talking!!

Whoops, the last link was meant to be this one: http://hackaday.com/2012/08/13/copper-vapor-laser-is-diy-awesomesauce/

that is neato

Awesome!

Saw this some time ago, I think on Next Big Future. It’s not real-time capture. Repetitive light pulses are used, each time capturing just a bit of the spatial/temporal information. As long as everything is motionless and the light pulse propagation is repeatable, the final result is accurate. It still was, and is, incredibly impressive; and there’s no telling what further breakthroughs will result.
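The repeated-pulse trick is what oscilloscope makers call equivalent-time sampling. Here is a minimal sketch of the idea, assuming a made-up Gaussian pulse as the repeatable event and one sample per shot; it is not the actual capture pipeline, and every name and number in it is hypothetical.

```python
import math


def light_pulse(t_ps):
    """Hypothetical repeatable event: a Gaussian pulse centred at 50 ps."""
    return math.exp(-((t_ps - 50.0) / 5.0) ** 2)


def equivalent_time_capture(event, n_shots, step_ps):
    """Fire the event n_shots times, taking ONE sample per shot.

    Shot k is sampled k * step_ps after its trigger, so the samples only
    form a valid trace if the event is identical on every shot.
    """
    samples = []
    for shot in range(n_shots):
        delay_ps = shot * step_ps
        samples.append((delay_ps, event(delay_ps)))
    return samples


trace = equivalent_time_capture(light_pulse, n_shots=100, step_ps=1.0)
peak_delay, peak_value = max(trace, key=lambda s: s[1])
print(f"reconstructed peak at {peak_delay:.0f} ps (amplitude {peak_value:.2f})")
```

The sampler never runs faster than one reading per shot, yet the stitched trace has 1 ps resolution. Swap in an event that changes between shots and the trace turns to garbage, which is exactly the limitation being pointed out.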

Wow, just wow. I didn’t think I’d see that in my lifetime.

That’s the same crap that the oscilloscope makers sold people as a 200 MHz digital oscilloscope two decades back: equivalent sampling. They do not have this frame rate, they only combine many, many shots. The process has to be cyclic or it won’t work. By the way, the first nerve impulse was measured in exactly the same way, sometime in 1800-something. I hate this hyped up TED bullshit.

While I think you’ve understated the significance of this, I do agree that TED is usually stupidly hyped up marketing. “Look at us, we are making progress: pay for our research”

There are definitely some interesting things to be gained, but never enough to intrigue me.

Now it is a cool setup, but I wish the press would stop misrepresenting what it really is. This will never be able to take a picture of a speeding bullet, for example, like ChrisC pointed out.

Potentially I could see this as being useful for Hollywood to get a decent BRDF-like dataset for a whole scene (really a Bidirectional Surface Scattering Reflectance Distribution Function dataset). Other than that I don’t see much usefulness.

I wonder how long the “exposure time” is. I mean how many bursts do they need and how long would it take. They could probably also use more cameras to catch more data/light and reduce the time needed to gather the required data.

Why is this called femto-photography and not pico-photography? What does femto refer to here?

Didn’t even read as far as paragraph #1? Tsk.

Didn’t even read as far as my first sentence? Tsk.

Very interesting if true. Story is too new to make an informed decision. Recommend skepticism until other independent researchers are able to reproduce the results. There are HUGE amounts of data used to produce the movie and therefore a huge number of opportunities for error.