With his nerves wracked by presenting his project to an absurdly large crowd at this year’s SIGGRAPH, [James] is finally ready to share his method of capturing mixing fluids via optical tomography with a much larger audience: the readership of Hackaday.

[James]’ project focuses on the problem of modeling mixing liquids from a multi-camera setup. The hardware is fairly basic: just 16 consumer-level video cameras arranged in a semicircle around a glass beaker full of water.

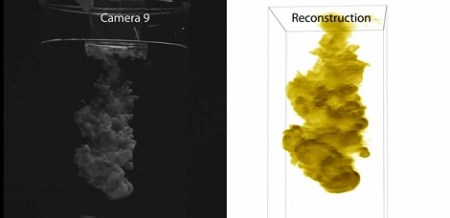

When [James] injects a little dye into the water, the diffusing cloud is captured by the Sony camcorders arranged around the beaker. The images from these camcorders are sent through an algorithm that selects one point in the cloud and performs a random walk to find every other point in the cloud of liquid dye.
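For a rough feel of what "pick a point and random walk from there" can look like, here is a minimal Python sketch. It is not [James]' implementation: the `is_dye` predicate, the 6-connected voxel grid, and the toy ball-shaped cloud are all assumptions made for illustration, standing in for whatever test the real system derives from the camera images.

```python
import random

# 6-connected neighbor offsets in a voxel grid (an assumed discretization).
NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def random_walk_cloud(is_dye, seed, steps, rng=None):
    """Collect the voxels reached by a random walk that never leaves the dye.

    is_dye: hypothetical predicate, voxel (x, y, z) -> bool
    seed:   a voxel already known to be inside the cloud
    steps:  number of attempted moves
    """
    rng = rng or random.Random()
    if not is_dye(seed):
        raise ValueError("seed voxel must lie inside the cloud")
    current = seed
    visited = {seed}
    for _ in range(steps):
        dx, dy, dz = rng.choice(NEIGHBORS)
        candidate = (current[0] + dx, current[1] + dy, current[2] + dz)
        if is_dye(candidate):  # reject moves that would step out of the cloud
            current = candidate
            visited.add(current)
    return visited


# Toy stand-in for the camera-derived test: a ball of radius 3 voxels.
def in_ball(v):
    x, y, z = v
    return x * x + y * y + z * z <= 9


cloud = random_walk_cloud(in_ball, (0, 0, 0), steps=20000, rng=random.Random(0))
print(len(cloud), "voxels found inside the toy cloud")
```

The walk only ever visits voxels connected to the seed, so it traces out one connected blob of dye. How many steps are needed to cover it depends on the cloud's shape, and a real system would need a stopping rule that this sketch leaves out.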

The result of all this computation is a literal volumetric cloud, allowing [James] to render, slice, and cut the cloud of dye any way he chooses. You can see the videos produced from this very cool build after the break.

[youtube=http://www.youtube.com/watch?v=QV7qgAwGp4E&w=470]

[youtube=http://www.youtube.com/watch?v=mV6vh_xM0hI&w=470]

Or 3D print them.

This will eventually lead to a better understanding of aerodynamic surfaces when used in a wind tunnel…

I’d pitch it to Formula 1 teams for a development budget.

I second cknopp. I’ve toyed with RealFlow (realslow!) and always thought some way of sampling real-world fluid dynamics would be a very useful tool, in every industry.

This is really awesome. For our fluid dynamics lab at school, I’ve been talking about something like this with some other students. We, however, thought it couldn’t be done (because we had no idea what a working algorithm might look like).

Anyhow, to theorize this is great, but to actually make it work in a practical “cheap” setup is awesome!

Kudos to [James] and all others who did this!

This is brilliant! Such a simple algorithm applied so effectively with such a spectacular result. I am very impressed.

Inverse Radon?

(filtered) back projection?

Which is it? I can’t be bothered to watch the video.

None of these, but we can’t be bothered to watch the 3 minute video for you.

Interesting, but to get a true internal (CT-style) mapping of the added dye, the dye would need to be less opaque, or you would need some sort of scanner that can penetrate visibly opaque material.

Maybe laser or ultrasound?

Something I do not get: why are they using a random walk algorithm instead of sampling each pixel sequentially?