The 1980s were a heyday for strange computer architectures; instead of the von Neumann architecture you’d find in one of today’s desktop computers or the Harvard architecture of a microcontroller, a lot of companies experimented with unusual parallel designs. While not used much today, these were some of the most powerful computers of their day and served as the main research tools of the AI renaissance of the 1980s.

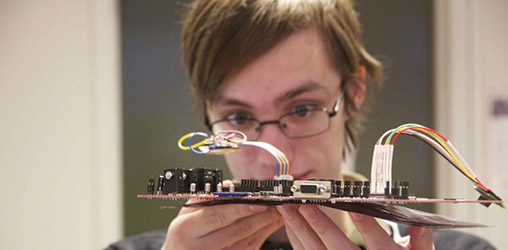

Over at the Norwegian University of Science and Technology a huge group of students (13 members!) designed a modern take on the massively parallel computer. It’s called 256 Shades of Gray, and it processes 320×240 pixel 8-bit grayscale graphics like no microcontroller could.

The idea for the project was to create an array-based parallel image processor with an architecture similar to the Goodyear MPP formerly used by NASA or the Connection Machine found in the control room of Jurassic Park. Unlike these earlier computers, the team implemented their array processor in an FPGA, giving rise to their Lena processor. This processor is in turn controlled by a 32-bit AVR microcontroller with a custom-built VGA output.

The entire machine can process 10 frames per second of 320×240 resolution grayscale video. There’s a presentation video available (in Norwegian), but the highlight might be their demo of Conway’s Game of Life rendered in real time on their computer. An awesome build, and a very cool experience for all the members of the class.
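Life is a natural fit for an array processor because every cell’s next state depends only on its eight neighbors, so the same rule can be applied to every pixel at once. Here is a minimal sketch of one generation in plain Python. It is not the team’s FPGA code: the function name `life_step` and the wrap-around edges are assumptions made for illustration.

```python
def life_step(grid):
    """Return the next Game of Life generation for a 2D list of 0/1 cells.

    Edges wrap around (toroidal); that is an assumption made for brevity,
    not something taken from the project.
    """
    rows, cols = len(grid), len(grid[0])
    nxt = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Count the eight neighbors. An array processor would do this
            # for many cells in parallel instead of looping over them.
            n = sum(
                grid[(r + dr) % rows][(c + dc) % cols]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0)
            )
            # Birth on exactly 3 neighbors, survival on 2 or 3.
            nxt[r][c] = 1 if n == 3 or (grid[r][c] and n == 2) else 0
    return nxt


# Usage: a horizontal "blinker" flips to vertical after one step.
blinker = [[0] * 5 for _ in range(5)]
blinker[2][1] = blinker[2][2] = blinker[2][3] = 1
for row in life_step(blinker):
    print("".join("#" if cell else "." for cell in row))
```

The inner neighbor count is the part that parallel hardware spreads across processing elements; the sequential loops here only stand in for that.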

von Neumann

Von Newmann?!

von Neumann and not von Newmann as incorrectly stated in the article.

NEWMANN!!!!!

Jerry…

50 Shades of Cray would have been a better name!

More like 6624 Blades of Cray: http://www.ncsa.illinois.edu/BlueWaters/

“It is one of the most powerful supercomputers in the world.” … but did I just use 1000 teraflops, or only 999? The question is, punk, do you feel lucky?

Great project, great documentation, completely misrepresented by HAD.

– “strange design” = GPU implemented in an FPGA

– “massively parallel” = 6 pixels (and 14 neighbors) massaged by dedicated cores implementing SIMD instruction set

– “process … like no other micro controller could” I’m pretty sure there are ARM implementations out there that beat 10fps

Remember this was in the 1980’s when the PC was still a baby, and the most commonly used internet connection required a dialtone.

10fps on 320×240 isn’t at all impressive. Over 25 years ago on a Sun 3-50 (Motorola 68020 CPU clocked at 15.7 MHz, 4M RAM, 1152×900 bit-mapped display IIRC) I wrote custom assembler that ran Life on the display at 3fps. Had I been handling a QVGA display it would have run at around 40fps.
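As a rough check of that estimate, assuming throughput scales linearly with pixel count (the helper name `scaled_fps` is made up for this sketch):

```python
def scaled_fps(src_w, src_h, src_fps, dst_w, dst_h):
    """Estimate frame rate at a new resolution, assuming the same number
    of pixels can be processed per second (a simplifying assumption)."""
    pixels_per_second = src_w * src_h * src_fps
    return pixels_per_second / (dst_w * dst_h)


# Sun 3-50 figures from the comment: 1152x900 at 3 fps, rescaled to QVGA.
print(scaled_fps(1152, 900, 3, 320, 240))  # prints 40.5
```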

That said, a grayscale processor isn’t much use for Life as you only need black and white.

First, as everyone else has said, von Neumann.

Secondly, computers are modified Harvard.

What an odd thing to say. Apart from a couple of MCUs, all modern computers follow the von Neumann paradigm. However, on the hardware side, data and code are usually separated in the first levels of the memory hierarchy, and that is why people sometimes talk about Harvard architecture.

I am utterly confused by the documentation on the site, and maybe that is also the reason for the wonky description by HAD. The website needs around 50% fewer photos of the creators and the hardware and about 500% more text and diagrams to describe the actual hardware.

Ok, looks like I was too quick. The full report is here: http://www.256shadesofgray.com/assets/pdf/report.pdf

It is good to see people experimenting with parallelism at this level. They will probably learn faster than the larger engineering community that the whole ‘multi-core crisis’ is false.

It is good that the poster mentioned the 1980s. There were many good and interesting designs that were way ahead of their time from that era. They just didn’t have good economics, so they had to fold.

It is also good that these guys are learning and experimenting with massive parallelism.

They might learn something real about this topic. For example, the engineering community at large believes there is a ‘multi-core crisis’. There is a belief that the existing proposals will scale and that we need software transactional memory, etc. These students will learn the existing shared memory designs are a huge dead end.

I get really excited reading about massive parallel architectures, and really not when it gets implemented in a single FPGA.

I think they [NTNU students] translate the name of the university to English like this: ‘Norwegian University of Technology and Science’. ‘NUTS’.

SIMD = vector processing architecture?

Nice if the coder knows what they’re doing, or you’ve got a clever programming language that can optimise code for those kinds of architectures (quite a few functional programming languages can do that easily, largely due to tricks referential transparency allows).

That’s some spankin’ good work.

Sorry, couldn’t resist. :-)

Mmm… Private Youtube videos ?