[Ben] has written all sorts of code and algorithms to filter, sort, and convolute images, and also a few gadgets that were meant to be photographed. One project that hasn’t added a notch to his soldering iron is a camera. The easiest way to go about resolving this problem would be to find some cardboard and duct tape and build a pinhole camera. [Ben] wanted a digital camera. Not just any digital camera, but a color digital camera, and he didn’t want to deal with pixel arrays or lenses. Impossible, you say? Not when you have a bunch of integral transforms in your tool belt.

[Ben] is only using a single light sensor that outputs RGB values for his camera – no lenses are found anywhere. If, however, you scan a scene multiple times with this sensor, each time blocking a portion of the sensor’s field of view, you can reconstruct a rudimentary, low-resolution image from just a single light sensor. If you scan and rotate this ‘blocking arm’ across the sensor’s field of view, the readings you collect amount to a Radon transform of the scene, and inverting that transform reconstructs the image. It’s something [Ben] has used a few times in his studies.
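To make the scan-and-rotate idea concrete, here is a minimal NumPy sketch of a simulated scan and a crude reconstruction. It is not [Ben]'s code: the function names (`offset_bins`, `simulate_scan`, `backproject`) are made up for illustration, each measurement is assumed to be the total light in one thin strip of the scene (roughly what you would get by subtracting a blocked reading from an unblocked one), and the reconstruction is plain unfiltered backprojection rather than a proper inverse Radon transform.

```python
import numpy as np

def offset_bins(size, angle, n_bins):
    """Map every pixel of a size x size image to a strip index for one arm angle."""
    coords = np.arange(size) - (size - 1) / 2.0
    x, y = np.meshgrid(coords, coords)
    # Signed distance of each pixel centre from the line through the image centre.
    s = x * np.cos(angle) + y * np.sin(angle)
    half = size / np.sqrt(2.0)  # |s| never reaches this value
    idx = np.floor((s + half) / (2.0 * half) * n_bins).astype(int)
    return np.clip(idx, 0, n_bins - 1)

def simulate_scan(image, angles, n_bins):
    """Assumed measurement model: one reading per (angle, strip) = light in that strip."""
    size = image.shape[0]
    sinogram = np.zeros((len(angles), n_bins))
    for i, angle in enumerate(angles):
        idx = offset_bins(size, angle, n_bins)
        sinogram[i] = np.bincount(idx.ravel(), weights=image.ravel(), minlength=n_bins)
    return sinogram

def backproject(sinogram, angles, size):
    """Unfiltered backprojection: smear each strip reading back along its strip."""
    n_bins = sinogram.shape[1]
    recon = np.zeros((size, size))
    for row, angle in zip(sinogram, angles):
        recon += row[offset_bins(size, angle, n_bins)]
    return recon / len(angles)

# A single bright pixel on a dark background stands in for a point light source.
size, n_bins = 32, 46
angles = np.linspace(0.0, np.pi, 60, endpoint=False)
scene = np.zeros((size, size))
scene[10, 20] = 1.0
sinogram = simulate_scan(scene, angles, n_bins)
recon = backproject(sinogram, angles, size)
```

Unfiltered backprojection leaves a soft halo around every feature, so expect blurry output from this sketch; a filtered backprojection would sharpen it.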

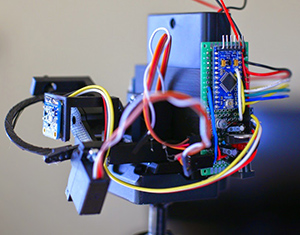

[Ben]’s camera consists of the Adafruit RGB light sensor, an Arduino, a microSD card, a few servos, and a bunch of printed parts. The servos are used to scan and rotate the ‘blocking arm’ across the sensor for each image. The output of the sensor is saved to the SD card and moved over to the computer for post-processing.

After getting all the pixel data to his laptop, [Ben] plotted the raw data. The first few pictures were of a point source of light – a lamp in his workspace. This resulted in exactly what he expected, a wave-like line on an otherwise blank field. The resulting transformation kinda looked like the reference picture, but for better results, [Ben] turned his camera to more natural scenes. Pointing his single pixel camera out the window resulted in an image that looked like it was taken underwater, through a piece of glass smeared with Vaseline. Still, it worked remarkably well for a single pixel camera. Taking his camera to the great outdoors provided an even better reconstructed scene, due in no small part to the great landscapes [Ben] has access to.
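The wave-like line from the lamp is what the math predicts: in a Radon-style scan, a point at (x0, y0) shows up at strip offset x0·cos θ + y0·sin θ for arm angle θ, which is a sinusoid in θ. The short check below assumes the raw data is laid out by arm angle and offset; the coordinates and the helper name `point_source_trace` are illustrative, not taken from [Ben]'s setup.

```python
import numpy as np

def point_source_trace(x0, y0, angles):
    """Strip offset at which a point source at (x0, y0) appears for each arm angle."""
    return x0 * np.cos(angles) + y0 * np.sin(angles)

angles = np.linspace(0.0, np.pi, 180, endpoint=False)
x0, y0 = 3.0, 4.0  # hypothetical lamp position relative to the scan centre
trace = point_source_trace(x0, y0, angles)

# The same curve written as a single sinusoid: amplitude r, phase phi.
r = np.hypot(x0, y0)
phi = np.arctan2(y0, x0)
is_sinusoid = np.allclose(trace, r * np.cos(angles - phi))
```

The amplitude of the wave is the lamp's distance from the scan centre and its phase is the lamp's direction, which is why this kind of raw plot is usually called a sinogram.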

I had a similar idea, but instead of servos I planned to use an LCD screen. Switching on (or off, depending how you look at it) a pixel at a time across a rectangular matrix would hopefully move the ‘pinhole’ around, thus moving the image around the sensor. Was wondering if this would work for thermal imaging. Do LCD displays work in FIR?

That’s a pretty neat idea, and similar to a Tilt-Shift lens, which may introduce a lot of distortion into the image. I think it would produce a very fish-eye like effect. I look forward to seeing how it works out.

You may want to look into something called compressive imaging.

http://dsp.rice.edu/cscamera

Good idea, and that’s more in line with how research-grade single pixel cameras work. They open a few pixels at a time, which produces a strange and blurry image. But if you know which pixels you used, you can do some math to work back to a clear image. No idea if an LCD works in FIR though!

http://dsp.rice.edu/cscamera

That’s actually my research advisor’s work you just linked to, Ben. We actually acquire about 50% of the pixels at a time, compared to a raster scan that has to take data one pixel at a time. So we get a much better signal-to-noise ratio.

Also, infrared imaging is a huge market for this work right now because of the high price of infrared sensors. We typically use DMDs to make the patterns though, so I’m also unsure about LCDs in FIR.
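For anyone wondering what "open about half the pixels and do some math" looks like, here is a toy NumPy sketch. Everything in it is an assumption for illustration: the scene is random numbers, the masks are random on/off patterns, and the recovery is ordinary least squares with more measurements than pixels. Real compressive imaging gets by with fewer measurements than pixels by exploiting sparsity, which this sketch does not attempt.

```python
import numpy as np

def measure(scene, masks):
    """One sensor reading per mask: the summed light from every 'open' pixel."""
    return masks @ scene

def recover(masks, readings):
    """Least-squares solve for the scene given the known masks and readings."""
    solution, *_ = np.linalg.lstsq(masks, readings, rcond=None)
    return solution

rng = np.random.default_rng(0)
n_pixels = 64
scene = rng.random(n_pixels)  # flattened 8x8 toy scene
# Each row is one pattern with roughly half of the pixels open (1) and half closed (0).
masks = rng.integers(0, 2, size=(2 * n_pixels, n_pixels)).astype(float)

readings = measure(scene, masks)
recovered = recover(masks, readings)
```

Each reading here collects light from around half the scene instead of a single pixel, which is the intuition behind the signal-to-noise advantage over a raster scan.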

Oh nice! Small (internet) world. I’m using some similar math methods for my own research, we are moving more towards deconvolutions of highly correlated data to increase signal to noise.

Would a conventional image sensor produce more samples per unit time in this arrangement, or does the distribution of the pixels make the data incoherent?

That’s brilliant! You’d have to do a huge amount of modification to the LCD, but it might be possible to not even need a RGB sensor- just turn on individual R, G, or B pixels. They might filter the light enough to do it.

You’d need a hella sensitive sensor though, I don’t imagine much light would get through.

At least two pieces of glass, a metal element and a liquid / glass/liquid interface, I would be really surprised if you could get any sort of reliable colour/light through that.

Light seems to travel through glass pretty well. A problem I anticipate is not getting a clean edge to the pinhole, leading to distortions, especially if it is square. Also the glass may create a problem due to refraction, although this may be less pronounced in FIR, which is what I was aiming for. Using visible light is a convenient means of testing the concept but isn’t the end goal.

Obviously using servos is easier, and a setup with a thermal sensor was featured on HaD a few months ago. It just seems a little clunky/slow.

Most one pixel cameras use DLP chips. Those chips are used as reflectors.

Now that’s cool.

“and convolute images”. Convolve, not “convolute”.

.. the word you’re looking for is “convolution”

The word I’m looking for is ‘excellent’. Found it !

^ THIS GUY. ;)

Both are in common use.

Many years ago they would fire a modified artillery shell that had a simple light sensor in a hole. A low-powered transmitter in the shell would vary its frequency according to the amount of light hitting the sensor. As the shell spun, it made a line-by-line image of the ground beneath that was received, decoded and printed much like a fax image.

It doesn’t seem like a shell would spin nearly fast enough relative to its forward speed along the ground to make this work.

Do you have any references to this? I’d love to read about it.

This is the first I’ve heard of this too. A little googling and I came across this (PDF warning) http://www.dtic.mil/dtic/tr/fulltext/u2/909437.pdf

It looks like one of the proposed schemes, and the one bobfeg describes, squeezes multiple scans of the ground out of a single rotation with sensors spaced evenly around the shell. The transmitter chooses the sensor that’s currently within the ground FOV and ignores the ones pointing at the sky or horizon.

That uses optics though.

The result is pure art, I’d like one of those on my wall.

Now the question is, can we emulate this on a regular camera? Like write an Instagram filter producing the same effect.

Actually, yes. Before I built this camera, I spent a few days taking normal images, transforming them into simulated scans, messing with them to mimic the problems I was expecting, then seeing how they looked when transformed back. It’s kind of neat.

Nice. So waiting for Instagram or Photoshop filter from you ;)

Try a Nipkow disk! No need to move the camera…

Stop wasting time and so much effort, go look into ‘slow scan tv’, a method for using a single sensor for recording and displaying a moving picture.

Personally, I think ‘wasting time and effort’ are some of the most enjoyable aspects of working on any project. But perhaps that’s just me?

If he didn’t want to waste time and effort he would use a normal camera, or at least use a lens.

I recall reading about a 1950s attempt at making a single pixel battlefield camera. It was a rifled shell fired from a cannon with lots of spin, and it recorded light/dark as it spun and flew. That could then be decoded into scanlines of an image. I think the hardware was not good enough to broadcast at the time, so it never made it past a few wired or retrieval tests.