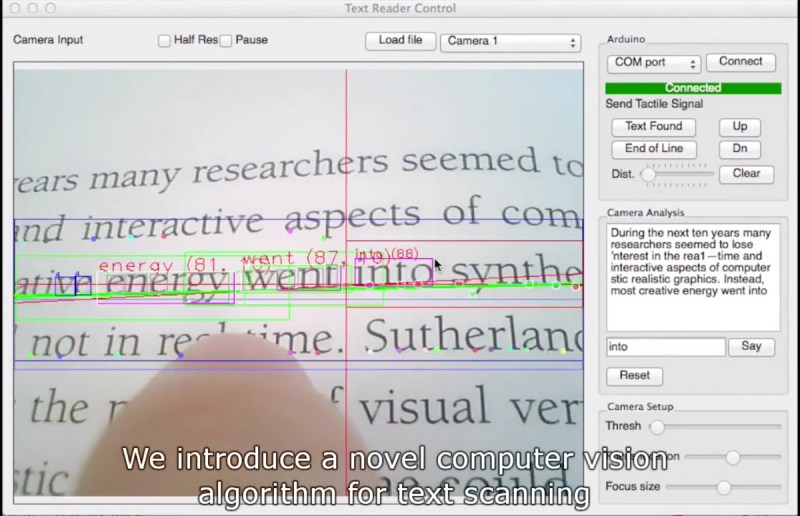

[Roy Shilkrot] and his fellow researchers at the MIT Media Lab have developed the FingerReader, a wearable device that aids in reading text. Worn on the index finger, it takes in printed or on-screen text and outputs spoken words, and it does this on the go. The FingerReader consists of a camera and sensors that detect the text. A series of algorithms the researchers created is used along with character recognition software to create the resulting audio feedback.

There is a lot of haptic feedback built into the FingerReader. It was designed with the visually impaired as the primary user, for times when Braille is not practical or simply unavailable. The FingerReader requires the wearer to touch the tip of their index finger to the printed page or screen and trace the line. As the user does so, the FingerReader is busy calculating where lines of text begin and end, taking pictures of the words being traced, and converting them to text and then to spoken word. When the user reaches the end of a line of text or begins a new line, it vibrates to let them know. If a user’s finger begins to stray, the FingerReader can vibrate from different areas using two motors along with an audible tone to alert them and help them find their place.
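To make the feedback logic described above concrete, here is a minimal Python sketch. It is not the researchers' code: the `TextLine` box, the `feedback_for` function, the cue names, and the `edge_margin` value are all hypothetical, and the idea that one motor signals "too high" and the other "too low" is our assumption about how two motors might be used.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TextLine:
    """Bounding box of one detected line of text, in image pixels (hypothetical)."""
    left: int
    right: int
    top: int
    bottom: int


def feedback_for(finger_x: int, finger_y: int, line: TextLine,
                 edge_margin: int = 10) -> Optional[str]:
    """Return an illustrative feedback cue for the fingertip position, or None."""
    # Image coordinates: y grows downward, so y < top means the finger is above the line.
    if finger_y < line.top:
        return "strayed_up"    # assumption: pulse one motor and play a tone
    if finger_y > line.bottom:
        return "strayed_down"  # assumption: pulse the other motor and play a tone
    # The finger is on the line; check whether it is near either end.
    if finger_x <= line.left + edge_margin:
        return "line_start"
    if finger_x >= line.right - edge_margin:
        return "line_end"
    return None  # mid-line: keep reading aloud, no haptic cue
```

A real device would have to estimate the line's bounding box from the camera image on every frame; the sketch only covers the decision that follows.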

The current prototype needs to be connected to a laptop, but the researchers are hoping to create a version that only needs a smartphone or tablet. The videos below show a demo of the FingerReader. For a proof-of-concept, we are very impressed. The FingerReader reads text of various fonts and sizes without a problem. While the project was designed primarily for the blind or visually impaired, the researchers acknowledge that it could be a great help to people with reading disabilities or as a learning aid for English. It could make a great on-the-go translator, too. We hope that [Roy] and his team continue working on the FingerReader. Along with the Lorm Glove, it has the potential to make a difference in many people’s lives. Considering our own lousy eyesight and family’s medical history, we’ll probably need wearable tech like this in thirty years!

[via Reddit]

WOW!!! Impressive. My only question: How do they find the text if they are blind?

Exactly, how would a blind person know where to start, and whether he’s tracing the line?

This device makes no sense for blind people.

Addendum: Mind you, you could, as the video tries to portray, find the edge of a book and the edge of the paper, but that ignores pages where the text doesn’t run from top to bottom, and it presumes blind people have a bookcase with books.

And don’t e-readers already have text-to-speech for the blind?

But I have an idea: this might also help the deaf learn to read. I hear it’s very hard for deaf people to learn to read, so if you can adapt this somehow as a teaching aid that shows the sign language version of the words they point at, it might make it easier to pick up reading skills.

From TFA:

“If a user’s finger begins to stray, the FingerReader can vibrate from different areas using two motors along with an audible tone to alert them and help them find their place.”

Uhm, blind people have more sense than you think. All they have to do is feel for the edge of the page and start tracing. They already know to read starting from the left and move right. They already have a keen sense of hearing, probably keener than yours. They’re already accustomed to depending on other vision than their own (i.e. cues from their dogs and other people). If I am ever blind, I want this. I’m not concerned at all that I would have trouble finding the text in a book. It might take some practice, to be sure.

Many people are not completely blind, just blind enough they can’t read. For those who have only a small amount of vision, this could be really great.

Fair point, but for them it would have to have a better voice/pronunciation to not be too annoying I expect, but that is something they no doubt planned to tweak anyway.

I could see this being used to help people learn to read, especially stroke victims and the like.

Stroke victims will never read. Most stroke survivors knew how to read prior to their stroke. Those whose stroke affected the right hemisphere of their brain may have difficulty in tracking the lines, but they can read the words just fine. Those whose stroke affected the left hemisphere of their brain may have to deal with aphasia, a communications disorder. This may aid those suffering from aphasia, I don’t know, but I can’t see it helping those whose right hemisphere stroke left them with tracking difficulties. I’m 21 years post-stroke; with luck being relative, I don’t suffer from aphasia.

Alice: “My finger hurts from all this reading.”

Bob: “Which finger?”

Alice: “The sweaty one.”

I don’t get it?

I don’t know, don’t you?

Joke fail.

Commendable goal, cute prototype, but the ultimate effect is a big fat FAIL.

Why bother with finger tracing when you can take one photo of whole object and read it using NLP algorithms to achieve seamless sentences and context aware error correction?

This is what I thought. The only benefit I can see to this is if it were used with really odd layout text, signs, labels etc. but then you’re back to the “how do they know where to point” question. Perhaps it could work to read shelf labels in the supermarket?

But it could be useful to help kids learn to read?

The MIT Media Lab does some pretty damned cool things.

Although, I can imagine a few improvements ;)