Dr. Robert Hecht-Nielsen, inventor of one of the first neurocomputers, defines a neural network as:

“…a computing system made up of a number of simple, highly interconnected processing elements, which process information by their dynamic state response to external inputs.”

These ‘processing elements’ are generally arranged in layers – where you have an input layer, an output layer and a bunch of layers in between. Google has been doing a lot of research with neural networks for image processing. They start with a network 10 to 30 layers thick. One at a time, millions of training images are fed into the network. After a little tweaking, the output layer spits out what they want – an identification of what’s in a picture.

The layers have a hierarchical structure. The first layer will recognize simple line segments. The next layer might recognize basic shapes. The one after that might recognize simple objects, such as a wheel. The final layer will recognize whole structures, like a car for instance. As you climb the hierarchy, you transition from fast-changing low-level patterns to slow-changing high-level patterns. If this sounds familiar, we’ve talked about it before.

Now, none of this is new and exciting. We all know what neural networks are and do. What is going to blow your mind, however, is a simple question Google asked, and the resulting answer. To better understand the process, they wanted to know what was going on in the inner layers. They feed the network a picture of a truck, and out comes the word “truck”. But they didn’t know exactly how the network came to its conclusion. To answer this question, they showed the network an image, and then extracted what the network was seeing at different layers in the hierarchy. Sort of like putting a Serial.print in your code to see what it’s doing.
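
To make the Serial.print comparison concrete, here is a minimal Python sketch. It assumes a tiny, untrained network with random weights (the layer sizes and the `forward_with_taps` helper are made up for illustration and are nothing like Google’s 10 to 30 layer models). It simply keeps a copy of every layer’s output on the way through:

```python
import random

def relu(values):
    # Keep positive responses, zero out the rest.
    return [max(0.0, v) for v in values]

def dense(weights, inputs):
    # Fully connected layer: each output is a weighted sum of every input.
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]

def forward_with_taps(layers, inputs):
    """Run inputs through each layer, recording every layer's output
    (the 'Serial.print between stages' idea)."""
    taps = []
    activations = inputs
    for weights in layers:
        activations = relu(dense(weights, activations))
        taps.append(list(activations))
    return activations, taps

rng = random.Random(0)
sizes = [16, 8, 4, 2]  # hypothetical: 16 "pixels" in, 2 "classes" out
layers = [[[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
          for n_in, n_out in zip(sizes, sizes[1:])]
image = [rng.random() for _ in range(sizes[0])]

output, taps = forward_with_taps(layers, image)
for depth, act in enumerate(taps, start=1):
    print(f"layer {depth}: {[round(a, 2) for a in act]}")
```

In a real image network each tap would be a stack of feature maps rather than a short list, but the idea of peeking between stages is the same.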


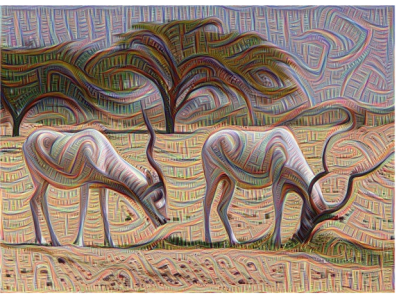

They then took the results and had the network enhance what it thought it detected. Lower levels would enhance low-level features, such as lines and basic shapes. The higher levels would enhance actual structures, such as faces and trees. This technique gives them the level of abstraction for different layers in the hierarchy and reveals the network’s primitive understanding of the image. They call this process inceptionism.
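
The “enhance what it detected” step can be sketched as gradient ascent on the image itself: pick a layer, define a score that grows when that layer fires strongly (here, half the sum of its squared activations), and nudge the pixels uphill while leaving the weights alone. This is a hedged toy version that assumes small fully connected layers with random weights in place of a trained convolutional network; `amplify`, `layer_energy` and the layer sizes are illustrative names, not Google’s code.

```python
import random

def relu(values):
    # Keep positive responses, zero out the rest.
    return [max(0.0, v) for v in values]

def dense(weights, inputs):
    # Fully connected layer: one weighted sum per output unit.
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]

def dense_backward(weights, grad_out):
    # Gradient with respect to a dense layer's input: W transposed times grad_out.
    return [sum(weights[j][i] * grad_out[j] for j in range(len(weights)))
            for i in range(len(weights[0]))]

def layer_energy(layers, image, depth):
    # Objective: half the sum of squared activations of layer `depth` (1-based).
    h = image
    for weights in layers[:depth]:
        h = relu(dense(weights, h))
    return 0.5 * sum(a * a for a in h)

def amplify(layers, image, depth, step=0.01, iterations=20):
    """Nudge the image so that layer `depth` responds more strongly to it."""
    x = list(image)
    for _ in range(iterations):
        # Forward pass, remembering each layer's pre-activation values.
        pre = []
        h = x
        for weights in layers[:depth]:
            z = dense(weights, h)
            pre.append(z)
            h = relu(z)
        grad = list(h)  # d(0.5 * sum(h**2)) / dh is just h
        # Backward pass, layer by layer, down to the pixels.
        for weights, z in zip(reversed(layers[:depth]), reversed(pre)):
            grad = [g if zi > 0 else 0.0 for g, zi in zip(grad, z)]  # ReLU gate
            grad = dense_backward(weights, grad)
        # Gradient ascent: move the pixels uphill on the objective.
        x = [xi + step * gi for xi, gi in zip(x, grad)]
    return x

rng = random.Random(0)
sizes = [16, 8, 4]  # hypothetical widths: 16 "pixels", then 8 and 4 units
layers = [[[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
          for n_in, n_out in zip(sizes, sizes[1:])]
photo = [rng.random() for _ in range(sizes[0])]

low = amplify(layers, photo, depth=1)   # exaggerate what the first layer sees
high = amplify(layers, photo, depth=2)  # exaggerate what the second layer sees
print(layer_energy(layers, photo, 1), "->", layer_energy(layers, low, 1))
print(layer_energy(layers, photo, 2), "->", layer_energy(layers, high, 2))
```

Choosing a shallow `depth` exaggerates whatever the early layer responds to, while a deeper `depth` exaggerates what the later layer responds to, which is the same low-level versus high-level split described above, just at toy scale.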


Be sure to check out the gallery of images produced by the process. Some have called the images dreamlike, hallucinogenic and even disturbing. Does this process reveal the inner workings of our mind? After all, our brains are indeed neural networks. Has Google unlocked the mind’s creative process? Or is this just a neat way to make computer-generated abstract art?

So here comes the big question: Is it the computer choosing these end-product photos, or a Google engineer pawing through thousands (or orders of magnitude more) to find the ones we will all drool over?

Cybernetic apophenia.

Cybernetic psychedelia. Those pics aren’t a million miles away from some of the visuals you get from LSD.

Taking drugs is interesting too; it’s like studying stroke patients to find out how the missing part of their brain affects them, except less drastic. You can catch bits of your brain at work, or the process of a thought growing and blossoming. Sometimes, at least. It’s something you can become aware of, and then notice in everyday life.

And pareidolia – some of the images are generated from originally random (noise) input images, but others are from actual photos of things.

Now if it could just paint them onto a canvas…

It says in the article: statistical heuristics favour results that match our idea of what a picture should look like.

For what? For the pictures the guy chose to show? Not for the process of image recognition itself, though, I’d suppose. And that’s the machine’s day job, so people aren’t interfering with that.

The interesting parts are where they gave it an image of just noise and ran the network backwards, asking it what it would be seeing if they said it was an image of a banana, etc. Then the higher level layers produced those images out of seemingly nothing. That is where the training set becomes more visible in the results, but more interesting is the fact they were able to run a neural network backwards when it’s classically described as a one-way algorithm from input to output. I studied that stuff for a semester and have been reading more about it for years and I still can’t work out how they did that one part. Absolutely astounding!
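
One common reading of “running the network backwards” from noise: the weights stay frozen and only the input is optimized. You start from a noise image, compute the gradient of the chosen class score (say, “banana”) with respect to the pixels, and repeatedly step the pixels in that direction, with a small penalty on pixel size so the values don’t simply blow up. The Python sketch below assumes a toy one-hidden-layer network with random weights and made-up labels; the step-halving loop is just a simple safeguard so every accepted step improves the objective.

```python
import random

def relu(values):
    return [max(0.0, v) for v in values]

def dense(weights, inputs):
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]

def dense_backward(weights, grad_out):
    # W transposed times the upstream gradient.
    return [sum(weights[j][i] * grad_out[j] for j in range(len(weights)))
            for i in range(len(weights[0]))]

def objective(w1, w2, x, target, l2):
    # Score for class `target`, minus an L2 penalty that keeps the pixels
    # from simply growing without bound.
    scores = dense(w2, relu(dense(w1, x)))
    return scores[target] - l2 * sum(xi * xi for xi in x)

def gradient(w1, w2, x, target, l2):
    z = dense(w1, x)
    # Only hidden units that are switched on (z > 0) pass gradient back.
    grad_hidden = [w2[target][j] if z[j] > 0 else 0.0 for j in range(len(z))]
    grad_x = dense_backward(w1, grad_hidden)
    return [g - 2 * l2 * xi for g, xi in zip(grad_x, x)]

def dream_class(w1, w2, x, target, l2=0.05, step=0.1, iterations=50):
    """Gradient ascent on the *input*; the weights w1 and w2 never change."""
    current = objective(w1, w2, x, target, l2)
    for _ in range(iterations):
        g = gradient(w1, w2, x, target, l2)
        trial = step
        while trial > 1e-6:
            candidate = [xi + trial * gi for xi, gi in zip(x, g)]
            value = objective(w1, w2, candidate, target, l2)
            if value >= current:  # accept only moves that do not hurt
                x, current = candidate, value
                break
            trial /= 2  # overshot (e.g. crossed a ReLU kink): try a smaller step
    return x

rng = random.Random(1)
labels = ["truck", "banana", "dog"]  # hypothetical class names
w1 = [[rng.uniform(-1, 1) for _ in range(16)] for _ in range(8)]
w2 = [[rng.uniform(-1, 1) for _ in range(8)] for _ in range(len(labels))]
noise = [rng.uniform(-0.1, 0.1) for _ in range(16)]

target = labels.index("banana")
banana = dream_class(w1, w2, noise, target)
print(objective(w1, w2, noise, target, 0.05), "->",
      objective(w1, w2, banana, target, 0.05))
```

Nothing here runs the network in reverse in the literal sense; the forward pass is used to score the image and ordinary backpropagation supplies the direction to change the pixels.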

Definitely an ANN-barely-understander myself, but I wonder if there’s some mixing of two separate concepts here, both of which I (barely understandingly) consider astounding:

A) backwardness: Is it that they’re actually feeding data backwards through the previous layers, or that they’re feeding the data forwards through a branch (inception?) layer which, essentially, is a reverse of the first layer…? e.g. the number of inputs and outputs on the first layer correspond to the number of outputs and inputs, respectively, on the “branch-layer”, with what I assume would be identical (or maybe inverse?) weights…?

B) in which case, feeding a “noise” image through a normal one-way system, WITH the “banana” training-input set… and “incepting” that normal one-way system’s output, might make sense in the “classical one-way” sense…

OTOH, if such an “incepting”/branch-layer could be created as in (A), then it might make sense that those layers, tied together, could essentially be the “reverse” of the normal one-way system…

This is pure hypothesizing by someone who hasn’t even tried a one-neuron ANN, and has never gotten through an entire article/document on “how they work”/“how they’re implemented” despite trying so many times (maybe that’s how they’re supposed to be learned…? “This is an ANN” training-sets in the multitudes wherein no single “this is an ANN” example is understood…? “This is a banana” “This is a banana” … “This is noise, a banana, now show me the banana”) ;)

I can’t *quite* see how it’d be possible to create an inverse/branch/inception-*layer* if such a thing actually exists (rather than reverse-feed-through as they seem to describe it).
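
On question (A): the “backwards” step that makes this possible is ordinary backpropagation, and for a fully connected layer it does resemble the branch layer you describe. The gradient with respect to the layer’s inputs uses the same weight matrix, transposed, so the input and output counts swap. It is not an inverse, though. A minimal Python check, assuming a single 5-input, 3-output linear layer with random weights (all names are illustrative):

```python
import random

def matvec(matrix, vector):
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def transpose(matrix):
    return [list(column) for column in zip(*matrix)]

rng = random.Random(2)
n_in, n_out = 5, 3
W = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]  # 5 in, 3 out
x = [rng.uniform(-1, 1) for _ in range(n_in)]
upstream = [rng.uniform(-1, 1) for _ in range(n_out)]  # how much each output "matters"

def score(inputs):
    # A scalar that depends on the layer's outputs.
    return sum(u * y for u, y in zip(upstream, matvec(W, inputs)))

# "Backwards" through the layer: same weights, transposed (3 values in, 5 out).
backward = matvec(transpose(W), upstream)

# Brute-force check: wiggle each input slightly and watch the score move.
eps = 1e-6
numeric = []
for i in range(n_in):
    nudged = list(x)
    nudged[i] += eps
    numeric.append((score(nudged) - score(x)) / eps)

print([round(b, 4) for b in backward])
print([round(n, 4) for n in numeric])

# Not an inverse: W^T (W x) does not give x back in general.
roundtrip = matvec(transpose(W), matvec(W, x))
```

So the “branch-layer” intuition about swapped inputs and outputs with the same weights is close to what backprop does; it just answers “which way should each input move?” rather than reconstructing the original input.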

I think “Pig-Snail” is my favorite.

‘Hippo faces. Hippo face everywhere!’

This is truly fascinating! Interestingly, the net tries to apply faces to anything it can, which is EXACTLY what the human mind does. Faces in the clouds, trees, things that go bump in the night. Also notice how the lake pictures all have huts and people, as if imagining the potential of the space.

The Japanese towers on a snowy mountain and blue/green twisty pagodas are worthy of paintings btw.

We have survival imperatives to quickly recognize danger, to seek other humans, and to find food and shelter. These are the things that our meat processors are trained to identify. These neural networks don’t factor their own survival into their training. However they were mostly trained on pictures of things that we tend to photograph or paint: landscapes, buildings, people, and animals. The researchers could have used geometric shapes or objects we find less interesting instead.

Actually, YOU see faces and pagodas and trees. The network simply outputs a bit field based on its training set.

Actually, YOU see lines and shapes which you interpret as faces and pagodas and trees, the network kinda uses the same principles to “see” those things in the images.

Now the NN needs what real brains have: bullshit rejectors. Most of the data we take in is discarded, and these NNs need to do the same so they won’t keep thinking they see faces in the clouds. But I do agree with you, some of these images are pretty artistic, so when NNs start making art, who gets the royalties?

My favorite bit is where we put computers in charge of drones and give them guns and missiles.

Man to Computer: Dude, you blew up a kid competing in a steeplechase!

Computer: I was aiming at the tiny terrorist dog he was sitting on. The kid and horse were just collateral damage.

You’re getting humans and machines mixed up here.

Don’t blame the poor machines for “acceptable losses”, that’s humans all the way.

Haha, I can tell you don’t follow the news because real-life, human-controlled drones blow up weddings, goat herders, etc on a regular basis.

But markedly few office blocks with 1000s of people in them.

I think if you’re keeping score, USA-UK-Israel vs Muslims are way, way ahead in bodycount.

Wow, I’ve seen this story 5 times now, and Hackaday has been the only source to actually tell me how/why it’s an interesting thing. The other stories were just a quick write-up about “wow, look at the crazy shit computers made”; Hackaday was the first to tell me the images were a product of a neural network.

Well, they all usually post the link to the source.

http://googleresearch.blogspot.co.uk/2015/06/inceptionism-going-deeper-into-neural.html

Yeah, they post the source, but that doesn’t earn them any points. I wouldn’t read the source article if the news post makes it sound completely boring and pointless.

Haha, I actually do the opposite – I don’t read the article but go directly to the source. In many cases the article’s “interpretation” is flat-out wrong. If a subject interests me, I’d rather read the original info. So I pretty much use these websites as aggregators of links on a certain area of interest.

I want them to feed the neural net some H. R. Giger and see what comes out.

With a side order of Bosch.

Thanks for crediting me (not) for tipping this in :P

Anyway I knew you would all have enjoyed this at least as much as I did.

Is getting credited for tips a new thing? I’ve submitted things before and later they appeared on the site – and I never expected credit in the first place.

It’s not a new thing. We usually credit people for the tips they’ve sent into the tip line.

In this instance, Michele sent in the tip on June 23, 2015 at 6:51 am (Pacific). Will actually claimed this topic in the writers’ backend a little before this, and even though this post was finished on June 23, 2015 at 8:20 am (Pacific), he started writing it a bit beforehand.

In fact, yesterday I got hit with the same complaint, even though the post was written before a tip was sent in.

If this is a new thing and people want credit for tipping us off, even though the tip had no influence over the post, then that’s a conversation we need to have. The editors and writers don’t have the tools to organize this, so expect some tips to slip through the cracks.

Brian, do you read Hacker News (Y Combinator)? You would have found this last week, as I did. I didn’t send any more tips (from there) because I thought that many of us also read HN.

BTW, back to the subject of NNs and sound creations: https://www.youtube.com/watch?v=0VTI1BBLydE&app=desktop

(again thanks to HN, today)

No prob here Brian, in fact I just thought that the post may have been written just before I sent this in. Also, thanks for coming back at me so swiftly.

Cheers, Mick

Does it answer the question: Why do we have to sleep? Why do we dream?

It’s known that a good NN has to be initialized with random values for good results. Is it possible that parts of the brain have to be reset (while sleeping) with random noise (creating dreams) to become effective again when we wake up?
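
The ANN half of this is well established: random initialization is mostly about breaking symmetry. If two hidden units start with identical weights, they receive identical gradient updates and stay clones forever. A small Python sketch, assuming a 2-2-1 tanh network without biases trained on XOR with plain SGD (all names and numbers are illustrative), shows the effect; it is only about artificial networks, not about sleep.

```python
import math
import random

def train(w_hidden, w_out, data, lr=0.1, epochs=200):
    """Plain SGD on a 2-input, 2-hidden-unit (tanh), 1-output net, no biases."""
    for _ in range(epochs):
        for x, target in data:
            h = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
            y = sum(w * hj for w, hj in zip(w_out, h))
            err = y - target  # derivative of 0.5 * err**2 with respect to y
            grad_out = [err * hj for hj in h]
            grad_hidden = [[err * w_out[j] * (1 - h[j] ** 2) * xi for xi in x]
                           for j in range(len(h))]
            w_out = [w - lr * g for w, g in zip(w_out, grad_out)]
            w_hidden = [[w - lr * g for w, g in zip(row, grow)]
                        for row, grow in zip(w_hidden, grad_hidden)]
    return w_hidden, w_out

xor = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]

# Same starting value everywhere: both hidden units are clones.
same_hidden, _ = train([[0.5, 0.5], [0.5, 0.5]], [0.5, 0.5], xor)

# Random starting values: the units can drift apart and specialize.
rng = random.Random(42)
rand_hidden, _ = train([[rng.uniform(-1, 1) for _ in range(2)] for _ in range(2)],
                       [rng.uniform(-1, 1) for _ in range(2)], xor)

print("constant init:", same_hidden)
print("random init:  ", rand_hidden)
```

With the constant start, the two rows of `same_hidden` come out exactly equal after training, so the second hidden unit is wasted; the random start avoids that.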

Are there some Brain Hackers around here?


I certainly hope not, unless you are referring to neurosurgeons who work to heal.

Ok, no hardware (surgery) brain hacking.

But EEG users, binaural sound experimenters, …

“Are there some Brain Hackers around here?”

Not saying there is or isn’t, but really;

a) Install a firewall already.

b) That’s some disturbing stuff you got in there!

No. Sleep is fundamental to allow our minds to do their garbage collecting. Basically, it is during a night of sleep that your brain will weaken the neural connections corresponding to information that you did not use recently. It will also strengthen the neural connections corresponding to information that you recently learned (or revisited). This is why you tend to memorize things more easily when you sleep afterward.

Without sleeping, our minds wouldn’t be able to “forget”. All the neural pathways corresponding to different pieces of information would just end up garbled, and you would have serious trouble remembering or learning things. Ever wondered why people go insane after weeks of sleep deprivation? Now you know why.

As mentioned below, the 60% shriveling of brain cells is like a necessary chemical washing/cleaning. (I can also see this mechanism as a possible way of reinforcing the neuronal connections.)

But such cleaned brain cells would have “noise” values (due to the re-expansion of the cells), which would trigger dreams in the wake-up phase of sleep. My mistake to ask about sleep when I meant the reason for dreaming.

Regarding why we dream… My theory is that when we go to sleep, we are shutting down our senses and using the full capacity of our brains to sort out short-term and long-term memories. We process EVERYTHING we get throughout the day. This is an enormous amount of data. The reason that we dream is that this data is being passed along parts of the brain we use to hear, to see, etc., and our brain tries to interpret it as we pass into a higher consciousness cycle. This essentially becomes short-term memory, which is why it is usually difficult to remember a dream after a few minutes. Though sometimes things get shoved into long-term memory, and we get a dream confused with a real experience.

Apparently déjà vu is a hiccup in the process of filing long-term and short-term memories. It also tends to happen most often when we are sleep deprived.

I am a bit of a brain hacker, but haven’t seriously messed around with it in about 12 years.

Also notice that there are times when you have only slept an hour, but somehow experienced an entire day in a dream. This is also an effect of messing with long term and short term memory to sense time.

You’re right about the consolidation of memory, but it doesn’t make sense to reshuffle (or reprocess) everything back through the sensory parts of the brain, and I never experienced anything like that in dreams.

I don’t say we aren’t reprocessing all the data, but I doubt that dreams are crucial for this.

The more we sleep (in one day), the more we dream, which is a bit contradictory to the reprocessing idea, and dreaming is the last thing we do while we sleep.

But if the deletion of our short-term memory is done with random noise (the initial state of any NN), then the processing of this noise could explain why we get dreams (which can get amazingly crazy when I manage to control them).

Brain hacker: I find it interesting to listen to white/pink noise and hear voices and even music inside it, to use binaural soundtracks to get into a “trance”-like state, or to watch the darkness/optical noise (where I can see patterns in the neuronal tissue covering/in front of the light receptors inside the eyes).

Exactly, I’m more inclined to believe that dreams are just a consequence of our mind having reduced input stimuli (eyes shut, no noise, low light, constant temperature …). Lower power on stimulus equates to our mind picking up more background noise… With such low SNR, the brain would just pick up noise which is turned into dreams, akin to what the Google guys attempted in one of their examples.

Pretty cool stuff.

It’s obvious we sleep so that our brains and bodies have downtime for things like long term memory reinforcements and muscle repair etc. Most stuff about us you can just ask “why would evolution make this happen” and get the answer.

In a predatory world, sleep doesn’t make much evolutionary sense. Why would we close our eyes, lie down and not move for a third of the day, at the risk of getting eaten?

Recent studies do point out that the brain wants to get rid of waste: every brain cell shrivels up to 60% while we are asleep, kind of washing the brain cells clean of the accumulated neurotransmitter fluids.

But you’re right about the memory consolidation and cell repair.

My understanding was always that dreams are sort of “lazy loading”. There is just too much info to process in real life. So the brain buffers that data and then, during sleep, it starts feeding that data to the network. As associations form, they produce visions (dreams). And since it’s not a simple act of remembering, but rather re-arranging the network on multiple layers to include new patterns, those visions can be quite weird.

So I’d like to see this project modified a little bit to visualize what the network goes through when it is learning. That would be the exact analogy of a dream. What we’re seeing now should probably be called “imagination”.

I had some lucid dreams which kind of contradict the “lazy loading” interpretation; more often they had absolutely no relation to real life, like dreaming about simplistic geometric patterns (lines and squares, so boring!)

The project has probably some relation with these guys: https://www.youtube.com/watch?v=M2IebCN9Ht4

It doesn’t really. Lucid dreaming simply means that something went wrong and your brain decided to start this process while you were awake. It’s an exception which further supports the rule.

“no relation to real life” – it’s your sane interpretation. On some level of the neural network, a house you went by or someone’s eyes could be imprinted as a rectangle or triangle. Dreams always have a connection to real life: either recent experiences or older ones (because recent events may cause previously established associations to update).

But, of course, full disclosure: all of this is just speculation at this point.

They need to run this again, this time _without_ giving the computers any mushrooms!

I am far from an expert on this subject, but doesn’t a neural network rely on feedback to evaluate success? If so, then all the network is really doing is trying to please us. The more we tell it we like a certain thing, the more it will produce that certain thing.

Let’s say we create a fairly simple network that takes short pieces of writing as input, and we want to evaluate whether the network finds them funny or not. If the network reads a short story about someone grieving over the loss of a loved one and then classifies it as funny, do WE determine whether it was successful or not?

It’s the classical limitation in modern efforts in AI, IMO. The intelligence is limited by the creator. It can’t become smarter than us. We could make it smarter than any one person by using feedback from many people. But how then does it ever become smarter than a human?

Then again, our own consciousness is pretty much built the same way, though much more difficult to pin-point. What we enjoy or hate depends heavily on our experiences and social interactions. And what we like or hate changes with time based on those experiences and social interactions.

In your example about a neural network (NN) that evaluates texts as funny or not, the network would first need to be trained. This means that you would feed the NN different texts, one at a time, and for each you would “tell” the NN whether the text is funny or not. After a large number of training iterations, your NN would be able to evaluate whether any text (any text being just a random variation of a text inside the training set) is funny or not.
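
That training loop can be sketched with the simplest possible “network”, a single perceptron over the words in each text. This is an illustrative assumption, not how a serious humour classifier would be built, and the four labelled texts are invented. Each text is shown one at a time, and the weights only move when the prediction disagrees with the human label:

```python
from collections import defaultdict

def words(text):
    return text.lower().split()

def train_perceptron(examples, epochs=10):
    """Show labelled texts one at a time; adjust weights only on mistakes."""
    weights = defaultdict(float)
    bias = 0.0
    for _ in range(epochs):
        for text, label in examples:
            score = bias + sum(weights[w] for w in words(text))
            if (score > 0) != label:
                nudge = 1.0 if label else -1.0
                for w in words(text):
                    weights[w] += nudge
                bias += nudge
    return weights, bias

def predict_funny(weights, bias, text):
    # Unknown words simply contribute nothing.
    return bias + sum(weights.get(w, 0.0) for w in words(text)) > 0

training_set = [  # hypothetical, hand-labelled examples
    ("a duck walks into a bar", True),
    ("why did the chicken cross the road", True),
    ("the quarterly tax report is attached", False),
    ("she grieved the loss of her father", False),
]
weights, bias = train_perceptron(training_set)
print(predict_funny(weights, bias, "a chicken walks into a bar"))
```

Note that every label comes from whoever built the training set, which is exactly the point raised in the parent comment: the network ends up learning our idea of funny.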

There are problems with NNs, like “overtraining”. For example, if you only use classic-literature texts to train your NN and then ask it to evaluate whether an SMS text is funny or not, the NN would most probably fail at the task.

Another problem is overfitting. For example, if you train your NN using texts in English, French, Chinese and German, the NN would not be able to learn much from the training set because your texts are too different. Using the wording in this article, it would mean that the network wouldn’t be able to store much invariant data from your training set.

Nobody is talking about consciousness here, that’s the holy grail of AI. Currently neural networks are just an easy way to do pattern matching in very very complex signals.

Sounds more like an inference engine. Hmm

“But how then does it ever become smarter than a human?”

The positive feedback doesn’t have to be supplied by a human directly.

You could put your neural network in a “virtual environment”, for example, with how well it does in that environment being the factor that strengthens and weakens connections. Humans might be supplying the initial conditions, but there’s no reason to say that caps the AI at human-level intelligence.

Intelligence in this case is just “fitness for the task” – and while that fitness can be directly determined by human-dictated truths (“this book is funny”), it can also come from anything humans can measure even if humans can’t predict or control it themselves (say, the weather). In this way it’s determined, but not limited, by the creator.
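
A rough sketch of that idea, assuming a made-up environment: the controller (just two weights here, standing in for a network) is scored by a simulation, and random tweaks are kept only when that score improves. Nobody supplies the right answer; the environment only reports how well things went. Plain hill climbing is used instead of backpropagation purely to keep it short, and `environment_score` and `hill_climb` are hypothetical names.

```python
import random

def environment_score(params):
    """Hypothetical environment: a point starts at 0 and the controller nudges
    it toward a target every tick. Score = minus the distance left after 20 ticks."""
    gain, bias = params
    position, target = 0.0, 3.0
    for _ in range(20):
        position += gain * (target - position) + bias
    return -abs(target - position)

def hill_climb(score_fn, params, steps=500, scale=0.1, seed=0):
    rng = random.Random(seed)
    best = score_fn(params)
    for _ in range(steps):
        candidate = [p + rng.gauss(0, scale) for p in params]
        s = score_fn(candidate)
        if s > best:  # keep the change only if the environment rewards it
            params, best = candidate, s
    return params, best

start = [0.0, 0.0]
params, best = hill_climb(environment_score, start)
print(environment_score(start), "->", best, "with", params)
```

The “teacher” here is the simulation itself, so the result is shaped by the environment the creator chose rather than by the creator knowing the answer in advance.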

When the only tool you have is a face-detection algorithm, every object starts to look like a face. Or something.

The fact that they fed its own processed images back through itself shows that we have a lot of ANN research left to do. If we arrange an ANN as a torus-shaped ring of layers with spokes coming off to accept input or process output then we might have a lot of interesting feedback loops and a more realistic simulation of how gray matter really works.

A torus-shaped ring with spokes coming out of it. I’ve never thought of that before, and now I want to make one!

Take the front wheel off your bike.

The retina has similar layers of processing, finding lines shapes etc.

LSD creates feedback and cross coupling in the brain, synesthesia.

This explains the visuals oft reported.

I’ve tried LSD twice in my life and was extremely disappointed. Other than seeing smoke coming out of the street once and seeing trails (but only on things like text), it was pretty underwhelming to me.

Next time try some real LSD.

Nah. My experimental days are way behind me now.

Maybe electronic LSD?

Yes, have Google’s ANNs process everything you view and display it on some VR goggles. =D

If your experimental days were not behind you I’d say try datura instead.

PS. Don’t try datura.

I don’t think anyone would appreciate me designing weapon systems while playing around with drugs. :)

Electronic LSD is actually a CES device which supposedly interrupts brain activity and can alter perception.

http://www.elixa.com/estim/CES.htm

Neural net assembled by Tibetans perhaps? I think I see the stages of Van Gogh’s and Wain’s insanity.

Awesome article that Google wrote about this. Funny to see it here after reading it a week ago on Google News. I’ve never seen an article in the news before it was featured on HaD. LOL @ people quarreling over who ‘tipped’ it to HaD first. Irony.

Or, you know, just follow Google’s own research blog directly, which occasionally has interesting things.

“Has Google unlocked the mind’s creative process?”

No, this is a common technique researchers use to study neural networks. It provides insight into how to optimise an ANN.

It also has a Rorschach-test aspect when you look at them, perhaps they can run these things through human brains and average the reaction and see what comes out when it’s reversed like that through a ‘real’ neural network.

Incidentally, based on this and similar social media discussions, I have now reached a point where I feel justified in estimating the number of Americans who use LSD to be about 20% minimum. And yet it’s never discussed in anti-drug discussions or featured in cop shows.

They should show these images and other half-processed ones to people with brain damage or autism and try to understand what they see. I think they are onto something here.

If an AI robot ever tells you it sees a quail in the clouds, just remember, it’s right.

Feed it Pron.

Does this type of neural network require specialized hardware? If not, this could be a lucrative Photoshop plugin.

Yep, I have certainly seen some of this stuff on blotter before…