The Therac-25 was not a device anyone was happy to see. It was a radiation therapy machine. In layman’s terms it was a “cancer zapper”: a linear accelerator with a human as its target. Using X-rays or a beam of electrons, radiation therapy machines kill cancerous tissue, even deep inside the body. These room-sized medical devices would always cause some collateral damage to healthy tissue around the tumors. As with chemotherapy, the hope is that the net effect heals the patient more than it harms them. For six unfortunate patients between 1985 and 1987, the Therac-25 did the unthinkable: it exposed them to massive overdoses of radiation. Four of them died, and the other two were left with lifelong injuries. During the investigation, it was determined that the root cause of the problem was twofold. First, the software controlling the machine contained bugs that proved to be fatal. Second, the design of the machine relied on the controlling computer alone for safety. There were no hardware interlocks or supervisory circuits to ensure that software bugs couldn’t result in catastrophic failures.

The Therac-25 has become one of the best-known cases of a killer software bug in history. Several universities use the case as a cautionary tale of what can go wrong, and of how investigations can be led astray. Much of this is due to the work of [Nancy Leveson], a software safety expert who exhaustively researched the incidents and the resulting lawsuits. Much of the information published about the Therac (including this article) is based on her research and her 1993 paper with [Clark Turner] entitled “An Investigation of the Therac-25 Accidents”. [Nancy] has since published updated information in a second paper, which is also included in her book.

History and development

The Therac-25 was manufactured by Atomic Energy of Canada Limited (AECL). It was the third radiation therapy machine by the company, preceded by the Therac-6 and Therac-20. AECL built the Therac-6 and 20 in partnership with CGR, a French company. When the time came to design the Therac-25, the partnership had dissolved. However, both companies maintained access to the designs and source code of the earlier machines. The Therac-20 codebase was developed from the Therac-6. All three machines used a PDP-11 computer.

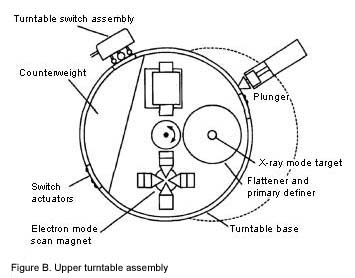

Therac-6 and 20 didn’t need that computer, though. Both were designed to operate as standalone devices. In manual mode, a radiotherapy technician would physically set up various parts of the machine, including the turntable to place one of three devices in the path of the electron beam. In electron mode, scanning magnets would be used to spread the beam out to cover a larger area. In X-ray mode, a target was placed in the electron beam with electrons striking the target to produce X-ray photons directed at the patient. Finally, a mirror could be placed in the beam. The electron beam would never switch on while the mirror was in place. The mirror would reflect a light which would help the radiotherapy technician to precisely aim the machine.

On the Therac-6 and 20, hardware interlocks prevented the operator from doing something dangerous, say selecting a high-power electron beam without the X-ray target in place. Attempting to activate the accelerator in an invalid mode would blow a fuse, bringing everything to a halt. The PDP-11 and associated hardware were added as a convenience. The technician could enter a prescription on a VT-100 terminal, and the computer would use servos to position the turntable and other devices. Hospitals loved the fact that the computer was faster at setup than a human. Less setup time meant more patients per day on a multimillion-dollar machine.
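
The structure those interlocks provided can be sketched in a few lines of Python. This is a toy model under stated assumptions: the `Mode`, `Turntable` and `FuseInterlock` names and the table of safe combinations are hypothetical, and the real Therac-6 and 20 interlocks were electromechanical circuits, not software. What it illustrates is the principle: the interlock judges the physically sensed turntable position independently of the control computer, and once it trips it stays tripped until a person replaces the fuse.

```python
from enum import Enum


class Mode(Enum):
    ELECTRON = "electron"  # beam spread out by the scanning magnets
    XRAY = "xray"          # much higher beam current, must hit the X-ray target


class Turntable(Enum):
    SCAN_MAGNETS = "scan_magnets"
    XRAY_TARGET = "xray_target"
    FIELD_LIGHT = "field_light"  # aiming mirror: the beam must never fire here


# Hypothetical table of the only combinations the interlock will allow.
SAFE_COMBINATIONS = {
    (Mode.ELECTRON, Turntable.SCAN_MAGNETS),
    (Mode.XRAY, Turntable.XRAY_TARGET),
}


class FuseInterlock:
    """Toy model of a hardware interlock that blows a fuse on an unsafe request.

    It looks only at the *sensed* turntable position, never at what the
    control computer believes it commanded, and a blown fuse stays blown
    until someone physically replaces it.
    """

    def __init__(self) -> None:
        self.fuse_blown = False

    def permit_beam(self, mode: Mode, sensed_position: Turntable) -> bool:
        if self.fuse_blown:
            return False  # latched off until the fuse is replaced
        if (mode, sensed_position) not in SAFE_COMBINATIONS:
            self.fuse_blown = True  # unsafe request: trip and stay tripped
            return False
        return True

    def replace_fuse(self) -> None:
        # Stands in for a human opening the cabinet and swapping the fuse.
        self.fuse_blown = False


interlock = FuseInterlock()
print(interlock.permit_beam(Mode.XRAY, Turntable.XRAY_TARGET))   # True
print(interlock.permit_beam(Mode.XRAY, Turntable.SCAN_MAGNETS))  # False: fuse blows
print(interlock.permit_beam(Mode.XRAY, Turntable.XRAY_TARGET))   # False: still latched
```

Because the latch can only be cleared by `replace_fuse()`, a tripped interlock forces a human to look at the machine before it fires again, which is the property the Therac-25 gave up.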

When it came time to design the Therac-25, AECL decided to go with computer control only. Not only did they remove many of the manual controls, they also removed the hardware interlocks. The computer would keep track of the machine setup and shut things down if it detected a dangerous situation.

The accidents

The Therac-25 went into service in 1983. For about two years and thousands of patients there were no problems. On June 3, 1985, a woman was being treated for breast cancer. She had been prescribed 200 rad (radiation absorbed dose) in the form of a 10 MeV electron beam. The patient felt tremendous heat when the machine powered up. It wasn’t known at the time, but she had received somewhere between 10,000 and 20,000 rad. The patient lived, but lost her left breast and the use of her left arm due to the radiation.

On July 26, 1985, a second patient was burned at the Ontario Cancer Foundation in Hamilton, Ontario, Canada. This patient died in November of that year. An autopsy determined that the death was due to a particularly aggressive cervical cancer. Had she lived, however, she would have needed a complete hip replacement to correct the damage caused by the Therac-25.

In December of 1985, a third woman was burned by a Therac-25 installed in Yakima, Washington. She developed a striped burn pattern on her hip which closely matched the beam blocking strips on the Therac-25. This patient lived, but eventually needed skin grafts to close the wounds caused by radiation burns.

On March 21, 1986, a patient in Tyler, Texas, was scheduled to receive his ninth Therac-25 treatment. He was prescribed 180 rad to a small tumor on his back. When the machine turned on, he felt heat and pain, which was unexpected, as radiation therapy is usually a painless process. The Therac-25 itself also started buzzing in an unusual way. The patient had begun to get up off the treatment table when he was hit by a second pulse of radiation. This time he did get up and began banging on the door for help. He had received a massive overdose. He was hospitalized for radiation sickness and died five months later.

On April 11, 1986, a second accident occurred in Tyler, Texas. This time the patient was being treated for skin cancer on his ear. The same operator was running the machine as in the March 21 accident. When therapy started, the patient saw a bright light and heard a sound like eggs frying. He said it felt like his face was on fire. The patient died three weeks later from radiation burns to the right temporal lobe of his brain and to his brain stem.

The final overdose occurred much later, this time at Yakima Valley hospital in January, 1987. This patient later died due to his injuries.

Investigation

After each incident, the local hospital physicist would call AECL and the medical regulatory agency in their country. At first AECL denied that the Therac-25 was capable of delivering an overdose of radiation. The machine had so many safeguards in place that it frequently threw error codes and paused treatment, giving less than the prescribed amount of radiation. After the Ontario incident, it was clear that something was wrong. The only way that kind of overdose could be delivered was if the turntable was in the wrong position. If the scanning magnets or X-ray target were not in position, the patient would be hit with a laser-like beam of radiation.

AECL carefully ran test after test and could not reproduce the error. The only possible cause they could come up with was a temporary failure in the three microswitches which determined the turntable’s position. The microswitch circuit was re-designed such that the failure of any one microswitch could be detected by the computer. This modification was quickly added and was in place for the rest of the accidents.
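
The article does not describe how the redesigned microswitch circuit worked, so the Python sketch below only illustrates one general way to make any single switch failure detectable: give each turntable position a three-switch pattern that differs from every other valid pattern in at least two switches, so that one stuck or broken switch always produces a pattern that matches no position. The patterns, position names and helper functions are hypothetical, not AECL's.

```python
from itertools import combinations

# Hypothetical 3-switch patterns. Each valid pattern has exactly two switches
# closed, so any two valid patterns differ in two switches (Hamming distance 2).
POSITIONS = {
    (0, 1, 1): "electron",
    (1, 0, 1): "xray",
    (1, 1, 0): "field_light",
}


def hamming(a, b):
    """Number of switches that differ between two patterns."""
    return sum(x != y for x, y in zip(a, b))


def decode(switches):
    """Return the turntable position, or None if the pattern is invalid."""
    return POSITIONS.get(tuple(switches))


# Every pair of valid patterns is at least two switches apart...
assert all(hamming(a, b) >= 2 for a, b in combinations(POSITIONS, 2))

# ...so flipping any single switch of a valid pattern yields an invalid one.
for code in POSITIONS:
    for i in range(3):
        corrupted = list(code)
        corrupted[i] ^= 1
        assert decode(corrupted) is None

print("every single-switch failure is detected")
```

Leaving `(0, 0, 0)` unused is a deliberate (assumed) choice so that a disconnected cable, which reads as all switches open, is never mistaken for a position. Two simultaneous switch failures could still turn one valid pattern into another.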

If this story has a hero, it’s [Fritz Hager], the staff physicist at the East Texas Cancer Center in Tyler, Texas. After the second incident at his facility, he was determined to get to the bottom of the problem. In both cases, the Therac-25 had displayed a “Malfunction 54” message. The message was not mentioned in the manuals. AECL explained that Malfunction 54 meant that the Therac-25’s computer could not determine whether it had delivered an underdose or an overdose of radiation.

The same radiotherapy technician had been involved in both incidents, so [Fritz] brought her back into the control room to attempt to recreate the problem. The two “locked the doors” NASA style, working into the night and through the weekend trying to reproduce the problem. With the technician running the machine, the two were able to pinpoint the issue. The VT-100 console used to enter Therac-25 prescriptions let the operator move between fields with the cursor up and down keys. If the user selected X-ray mode, the machine would begin setting itself up for high-powered X-rays. This process took about 8 seconds. If the user switched to electron mode within those 8 seconds, the turntable would not switch over to the correct position, leaving it in an unknown state.
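
The failure described here is a race condition, closely related to the time-of-check to time-of-use pattern: the slow setup routine works from the prescription as it stood when setup began, and an edit made during the roughly 8-second window is never reconciled with the hardware. The Python sketch below is a deliberately simplified, single-threaded model of that pattern, not a reconstruction of AECL's assembly code; the `run_treatment` function, its return strings and the assumption that a late edit triggers a fresh setup are all hypothetical. The optional final comparison shows the kind of check that would catch the mismatch.

```python
from typing import Optional

SETUP_SECONDS = 8  # approximate setup time given in the article


def run_treatment(edit_time: Optional[float], verify_before_firing: bool) -> str:
    """Simplified model of one treatment with an optional mode edit.

    The prescription starts in X-ray mode. If edit_time is not None, the
    operator changes it to electron mode that many seconds after setup began.
    Returns "OK", "MISMATCH" (beam fires while screen and hardware disagree)
    or "REFUSED" (the final comparison caught the mismatch).
    """
    screen_mode = "xray"

    # t = 0: the setup routine copies the mode once and works from that copy.
    setup_target = screen_mode

    if edit_time is not None and edit_time < SETUP_SECONDS:
        # Edit during setup: the screen changes, the in-progress setup does not.
        screen_mode = "electron"

    # t = SETUP_SECONDS: the hardware is configured from the stale copy.
    hardware_mode = setup_target

    if edit_time is not None and edit_time >= SETUP_SECONDS:
        # Hypothetical: an edit after setup finishes triggers a fresh setup.
        screen_mode = "electron"
        hardware_mode = screen_mode

    if hardware_mode != screen_mode:
        # The hardware was set from a value read before the operator's edit.
        return "REFUSED" if verify_before_firing else "MISMATCH"
    return "OK"


print(run_treatment(None, verify_before_firing=False))  # OK
print(run_treatment(3, verify_before_firing=False))     # MISMATCH
print(run_treatment(3, verify_before_firing=True))      # REFUSED
print(run_treatment(12, verify_before_firing=False))    # OK
```

Note that slow, careful data entry (an edit after the window closes) never exposes the bug in this model, which matches the article's point about why ordinary testing missed it.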

It’s important to note that all the testing up to that point had been performed slowly and carefully, as one would expect. Because of the nature of this bug, that sort of testing would never have identified the culprit. It took someone familiar with the machine, someone who worked with the data entry system every day, to find the error. [Fritz] practiced, and was eventually able to produce Malfunction 54 himself at will. Even with this smoking gun, it took several phone calls and faxes of detailed instructions before AECL was able to reproduce the behavior on their lab machine. [Frank Borger], staff physicist for a cancer center in Chicago, proved that the bug also existed in the Therac-20’s software. When he performed [Fritz’s] procedure on his older machine, he received a similar error, and a fuse in the machine would blow. The fuse was part of a hardware interlock that had been removed in the Therac-25.

As the investigations and lawsuits progressed, the software for the Therac-25 was placed under scrutiny. The Therac-25’s PDP-11 was programmed entirely in assembly language, including not only the application but also the underlying executive, which took the place of an operating system. The computer was tasked with handling real-time control of the machine, covering both its normal operation and its safety systems. Today this sort of job could be handled by a microcontroller or two, with a PC running a GUI front end.

AECL never publicly released the source code, but several experts, including [Nancy Leveson], did obtain access for the investigation. What they found was shocking. The software appeared to have been written by a programmer with little experience coding for real-time systems. There were few comments, and no evidence that any timing analysis had been performed. According to AECL, a single programmer had written the software based on the Therac-6 and 20 code. However, this programmer no longer worked for the company and could not be found.

The Aftermath

The FDA declared the Therac-25 “defective”. AECL issued software patches and hardware updates that eventually allowed the machine to return to service. The lawsuits were settled out of court. It seemed like the problems were solved until January 17, 1987, when another patient was overdosed at Yakima, Washington. This problem was a new one: a counter overflow. If the operator sent a command at the exact moment the counter overflowed, the machine would skip setting up some of the beam accessories, including moving the stainless steel aiming mirror. The result was once again an unscanned beam and an overdose. The patient died 3 months later.
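
Leveson and Turner's analysis traces this failure to a one-byte flag that the setup routine incremented on each pass instead of setting it to a fixed nonzero value. A nonzero flag meant a hardware position check had to be run, so every 256th pass the byte wrapped around to zero and the check was silently skipped. The Python sketch below models that pattern only; the function name and loop are hypothetical, and because Python integers never overflow, the 8-bit wraparound is emulated with `& 0xFF`.

```python
def count_skipped_checks(n_passes: int, increment_flag: bool) -> int:
    """Count setup passes on which the position check would be skipped.

    A nonzero flag means "hardware not verified yet, run the check".
    increment_flag=True models the buggy pattern (add 1 to a one-byte flag);
    increment_flag=False models the fix (set the flag to a fixed nonzero value).
    """
    flag = 0
    skipped = 0
    for _ in range(n_passes):
        if increment_flag:
            flag = (flag + 1) & 0xFF  # emulate an 8-bit byte: 255 + 1 wraps to 0
        else:
            flag = 1                  # a constant can never wrap around
        if flag == 0:
            skipped += 1              # check silently bypassed on this pass
    return skipped


print(count_skipped_checks(1000, increment_flag=True))   # 3 (passes 256, 512, 768)
print(count_skipped_checks(1000, increment_flag=False))  # 0
```

The bug only mattered when the operator's command happened to land on one of those skipped passes, which is why it surfaced so rarely.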

It’s important to note that while the software was the linchpin in the Therac-25, it wasn’t the root cause. The entire system design was the real problem. Safety-critical loads were placed on a computer system that was not designed to control them. Timing analysis wasn’t performed. Unit testing never happened. Fault trees for both hardware and software were not created. These tasks are the responsibility not only of the software engineers but also of the systems engineers on the project. The Therac-25 is long gone, but its legacy lives on. This was the watershed event that showed how badly things can go wrong when software for life-critical systems is not properly designed and adequately tested.

The problem is in this sentence: “The lawsuits were settled out of court.”

As long as nobody goes to jail, the shareholders pay for the management failures, and the product itself is not shamed into oblivion, businesses will just keep right on killing and maiming people to save a few dollars.

Why would anyone go to jail? This was a mistake made in the early days of software control. They did not know that there was a problem. The FDA did not find the problem in testing. When they saw the first error they thought it was a hardware problem with the microswitches.

This lust for sending people to jail for honest mistakes needs to stop.

Very true, there was certainly no intention to cause harm and no provable negligence, it was just a mistake. But the mistake would not have been made if real engineers were in charge. PHBs are incompetent and should not be in charge.

Wrong. Even real engineers make mistakes.

Take a look at the removal of the interlocks.

On the old system if the interlocks came into effect it blew a fuse. So to get the system back working you needed to replace the fuse.

They replaced that with a software system that should have caught the error but did not.

Yes even engineers make errors.

No. Real engineers don’t make mistakes in safety interlocks. That is why safety interlocks are such clunky, ‘dumb’ things – you can be very sure that they work as expected (i.e. within the limits of known materials science). Real engineers can make fail-safe equipment.

Disclaimer – I’m not a real engineer, but I know some who are. Of course, if you are a bean counter you push for the lowest price and overrule all objections – it’s a valid argument today.

This is aimed at John Spencer and his disdain for “bean counters”. There just wasn’t a reply button under his comment.

As a “bean counter”, I take issue with your assertion that bean counters are more interested in more beans on the P side of a P&L than in lives and safety. When presented with ONLY cost, bean counters can only make decisions based on the facts presented to them. You want to spend X number of dollars more than the estimated development costs? SUPPORT IT. Don’t just come to me and say you need more money. You’ve gotta say why you need more money. Bean counters have a fiduciary duty to reduce costs and maximize profit. The shareholders demand it (they aren’t to blame either). Bean counters have hearts too. Stop blaming catastrophes on cost cutters anonymous. The decision to remove the interlocks was not made in the accounting department. In fact, it may not have been “decided” at all. It could have been lack of oversight that allowed the conditions that led to the catastrophe. If it was a conscious decision, it was an engineering decision. Accountants do not have the education to make engineering decisions. If someone in accounting, finance, or management ever says that safety is where the costs should be cut, be an ethical engineer: support your budget overrun proposal with safety data, or be a whistleblower and maybe save a few lives. Whatever you do, don’t blame the accountants.

wjp –

I’ve been in meetings with bean counters on this sort of issue and their decision is to threaten to fire the engineer. They’ve already promised a price or a schedule, without asking engineers, and don’t want to be fired for bringing bad news. And remember – if it doesn’t fail, the bean-counters get credit for saving a nickel; if it does, it’s the engineer’s fault.

Clearly that sort of thing happened in the Therac-25 where they did not get a qualified software developer, did not have a test plan, and did not have any code review – but did remove those costly mechanical safety items. It is likely they had an engineer/engineers who quit rather than work on this machine, but the bean counters found unqualified replacements.

They could’ve perhaps designed the interlocks to be a bit less dramatic, simply cutting power to the beam, rather than blowing a fuse. Unless the fuse blowing was somehow deliberate, perhaps to really hammer correct procedure into the operator’s brain.

But this was a stupid decision. They should have kept the physical interlocks anyway, as well as the computer control. So that, no matter what the computer does wrong, it will never fire the beam in a dangerous way. Simple matter of mechanical implementation, and being wise enough not to trust a computer with life and death. Besides software bugs, sometimes computer hardware goes wrong. A simple interlock, to check the beam isn’t firing in deadly-mode, would have solved the problem.

Really I dunno why they removed it, not like the cost of a few microswitches would have made any difference to expensive, low production run machines like that. It’s really inexcusable. Sure, hindsight is 20/20, but not trusting human life to the correct running of a computer, where at all possible, is something everyone should stick to.

There are fewer failure modes in blowing a fuse than in temporarily disabling the beam. The designers realised that safety circuits can fail too, so they designed them carefully.

Glass tube TVs had a safety circuit like this. If the anode voltage went too high, the tube would generate high (and dangerous) levels of X-rays. A very similar problem, but perhaps not as severe. High X-ray levels would easily cause birth defects when pregnant women were exposed.

The solution to the problem in glass tube TVs was called a crowbar circuit. It would drop a dead short across the power rails going into the high-voltage generator. This usually popped (exploded) or burnt out several parts (mostly transistors) in the power supply unit. I liken it to the effect of putting a crowbar through the circuit board. This was an effective safety feature because it meant that even after one minor failure, the set had to go back to someone who knew how to check that the HV output was in spec.

https://en.wikipedia.org/wiki/Crowbar_%28circuit%29

Another example of safety features that override a microcontroller is in almost every kitchen. The microwave generator has safety interlocks on the door so that the microwaves are turned off no matter what when the door is opened. The microcontroller can (and does) turn the beam off, but we don’t trust a microcontroller as much as two simple microswitches.

I see the point, but blowing a fuse is very inconvenient, as well as itself potentially dangerous. Even a 240V mains fuse makes a fair explosion when it goes, can leave black burn marks on the plug and socket. And that’s the over-engineered, super-chunky British plugs and sockets. The sort of power flowing through some big medical particle accelerator is going to be something to worry about!

I see that blowing a fuse is a basic, low-level method of cutting power. But what about just putting a relay in to cut off the power? Or even 2 in series, in case one welds itself shut, or whatever. Or 2 in parallel, or 4 in series-parallel! Even a single relay sounds a lot safer than blowing the fuse on purpose. Whatever circuit blew the fuse could just cut power to the relay(s) instead.
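
The trade-off between series and parallel relays can be put in numbers. In the Python sketch below, each relay independently either welds shut (fails closed, the dangerous case here because power stays on) with probability `P_CLOSED` or fails open with probability `P_OPEN`. Both numbers are made up for illustration, the function names are hypothetical, and real relays also suffer common-cause failures that break the independence assumption.

```python
def series(n, p_closed, p_open):
    """n relays in series: power flows only while every relay is closed."""
    cannot_cut = p_closed ** n             # only if every relay has welded shut
    nuisance_trip = 1 - (1 - p_open) ** n  # any one open failure removes power
    return cannot_cut, nuisance_trip


def parallel(n, p_closed, p_open):
    """n relays in parallel: power flows while any relay is closed."""
    cannot_cut = 1 - (1 - p_closed) ** n   # a single welded relay keeps power on
    nuisance_trip = p_open ** n            # power lost only if all fail open
    return cannot_cut, nuisance_trip


def series_parallel(p_closed, p_open):
    """Two series pairs wired in parallel (four relays in total)."""
    pair_cannot_cut, pair_nuisance = series(2, p_closed, p_open)
    cannot_cut = 1 - (1 - pair_cannot_cut) ** 2  # either fully welded pair keeps power on
    nuisance_trip = pair_nuisance ** 2           # both pairs must have lost power
    return cannot_cut, nuisance_trip


P_CLOSED = 1e-3  # hypothetical chance that a relay welds shut
P_OPEN = 1e-3    # hypothetical chance that a relay fails open

for name, (cannot_cut, nuisance) in [
    ("single", series(1, P_CLOSED, P_OPEN)),
    ("2 in series", series(2, P_CLOSED, P_OPEN)),
    ("2 in parallel", parallel(2, P_CLOSED, P_OPEN)),
    ("2x2 series-parallel", series_parallel(P_CLOSED, P_OPEN)),
]:
    print(f"{name:20s} cannot cut power: {cannot_cut:.2e}  nuisance trip: {nuisance:.2e}")
```

With these made-up numbers, two relays in series lower the chance of being unable to cut power from about 1 in 1,000 to about 1 in 1,000,000 while roughly doubling the nuisance trips; parallel wiring does the reverse, and the four-relay arrangement keeps both failure modes down to a few in a million.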

And yup I’ve heard of a crowbar circuit. Comes from the practice of railway maintenance men. When the power was supposed to be cut off, before they worked on an electric railway system, they’d double-check by dropping a crowbar across the rails. Literal power rails! And a literal crowbar. If the power had been accidentally left on, it wouldn’t be any more.

Sounds like a great source of old war stories actually, I’d love to hear from the guy whose crowbar melted into slag, or something. I know a modern crowbar circuit often uses something like an SCR, they come in lots of high-power varieties, aren’t too expensive, and don’t switch off until the power’s cut.

Re the CRT TVs, it sounds again, a bit drastic, to deliberately blow a load of circuits just cos the high-voltage generator isn’t set properly, or is running out of spec. Sure it’s gonna get the attention of whoever ends up having to replace all the ruined boards. Although these days (or the days a few years ago when people still bought CRTs) it’s almost certainly just going to lead to the TV being thrown away. Needlessly wasteful, and a shame, if they still used that technique. Would’ve been much better, again, to just cut the power, and maybe emit a series of beeps as an explanation.

Your point about the microwave oven is exactly my point. Never mind how clever the computer is. When it comes to accidentally irradiating people, putting a mechanical switch or two in the path should absolutely be done. As a programmer, I understand hardware and software well enough to know not to rely on them if at all possible.

If you use relays as you suggested, and perhaps some beeps, then the customer is likely to find a way to get it back on. Perhaps they keep flicking the switch on and off until the contacts fall off the relay, and then when the safety circuit fails they won’t know there is a problem until there is a birth defect, or perhaps they become suspicious after the sixth birth defect, much like in this article, where the equipment kept being used when it was dangerous.

On the other hand (with the crowbar circuit) no one has any choice. The equipment renders itself ‘unconditionally unserviceable’ and there *has* to be human intervention by a qualified person, who then must inspect and repair the machine, including its safety circuits, and present it again as ‘serviceable’ and safe. If this had happened in the case described in this article, lives would have been saved; instead, people argued that there couldn’t be a problem when there actually was one. There is no confusion with a crowbar circuit: the equipment *must* be inspected and repaired.

I worked repairing domestic electronics in the era of glass tube TVs. I had many, many TVs come in after the crowbar circuit had blown the power supply. It was very educational to see where design engineers had failed in critical circuitry and at the same time were saved by a simple safety circuit.

As mentioned, the model before this one still had the safety interlocks *and* it had the same software bug and not one person was harmed. Compare that to a number of lost lives.

Of course this should send someone to jail! How would you feel if you were supposed to get treated for something but you were actually being poisoned by a deadly machine? Calling it an “honest mistake” is ridiculous; a company that releases a machine that treats patients, especially with radiation, needs to be thorough about safety and go through rigorous testing for software errors. The fact that they did not notice an error, caused by entering information quickly, that led to 25x the deadly dosage of radiation is absolutely unacceptable. These errors could be found in less than an hour of standard testing. It is obvious that AECL was just trying to make some easy cash and did not have any concerns for errors or testing. Furthermore, it is sad and inconceivable that after the first death due to the error AECL still did not manage to test for such errors. If a radiation therapist can find such an error in a short amount of time, how come the company creating it can’t? There is no excuse; software engineers with even the least amount of knowledge know to test for an extensive period of time before release. This is just sad…

So are you the arbiter of who qualifies as a “real” engineer? This sounds like a no true Scotsman fallacy. Engineering textbooks are full of mistakes made by engineers, even ones at NASA. I suppose those were not “real” engineers, however…

In this case, a “real” engineer is pretty simple. A licensed Professional Engineer, P.Eng. is a legally protected designation in Canada (where AECL operates), much like medical doctor, or lawyer, where it is a crime to falsely claim to be one.

How exactly do you think “real engineers” come about? *Everything* we know about design of safe systems comes from studying the instances that things broke and people were injured or died. Therac isn’t unusual, it’s just the case that illustrates this specific failure mode.

Failure Mode: Death!

“Real engineers…”

As if humans cannot make mistakes. We learn very little from success. It’s human error that breeds smarter ideas. Nobody wants a loss of human life, and I wish so much that those who died at the hands of human error had lived. However, they were doomed to die without the machine that killed them, were they not? They didn’t give their lives for nothing. They could have died anyway, but at least they left a mark. They left behind something more valuable: wisdom. By showing mistakes, they helped forge the future, and with it future “real engineers.”

Yeah but there’s such a thing as caution, “belt and braces”. Where you’re working with a beam that might easily, invisibly kill people, you try and think up as many failure modes as you can. They already thought of the interlocks on the older models. I wouldn’t trust computers, software or hardware, with somebody’s life, unless I really couldn’t help it.

In the case of, say, cars, there’s a huge amount of money to be saved from having computers control stuff, it’s a trade-off, since so many cars are sold. But in this, I can’t think why they’d remove safety features deliberately. The more safety, the better. They knew what the beam could do from the earlier models.

Sure we learn from mistakes but we can also imagine problems before they happen, that’s the better way of doing it.

No matter how smart an engineer you may claim to be, you *will* make mistakes.

Take the phrase “why not use a relay”.. well because relays can fail open or closed. You can’t predict which.

Fuses fail open (eventually). However, even a blowing fuse can cause problems, since they don’t fail instantly, can permit large currents while failing, and can cause fire and explosion. A fuse is not always the answer either.

Nothing beats exhaustive testing, except *more* exhaustive testing. There is always going to be a small residual risk involved, even in the best designed machines. The Therac-25, however, was not the best designed of machines.

Blame doesn’t help either. If the engineers that design our internal combustion engined machines go to jail as a result of deaths caused by accidents with those machines, we would all be walking everywhere. We need to take a scientific approach and feed back from the design mistakes of the first generation of any machine, into the design of subsequent ones. We don’t tend to do this however, since we are being continuously pressed as engineers to produce the next generation, faster, cheaper, and with more features than the previous one. As a result machines become more failure prone, not less.

Take for example the humble food mixer in your kitchen. There are some of these machines, built in the 1950s and 1960s, that are still going strong (I have just fixed one from the early 1980s). They are repairable and easily serviceable. They are, however, not going to continuously put money in the pocket of a company that produces food mixers. For that you need a food mixer that is just good enough to make it through the warranty period.

Medical equipment however shouldn’t need to follow the same path, but unfortunately, it tends to.

The next generation of therapy machine X has to push more patients through the machine faster than the previous one, it needs to do so in a manner that is “safe enough”, but not in a manner that is too expensive, and since exhaustive testing costs money, then things tend to be tested “just enough”.

I really dislike the suggestion that anyone is going out of their way to make sure things fail just after the warranty period, just so customers have to go buy a new one. Designing things to fail predictably after x number of hours is almost as difficult as designing things to never fail.

What you see instead is that because of the advent of accelerated lifetime testing, engineers are realizing that they are consistently over-engineering things because there was no real effort put into determining a meaningful lifespan. Claiming old things last longer is just survivorship bias. Airplanes are unambiguously safer than they were 100 years ago (or even 50 years ago), but they feel the exact same cost pressures. Arguably, airplanes see greater pressures to decrease material usage, to decrease weight, and therefore justify smaller engines or provide greater fuel efficiency.

I have some rock climbing equipment that my father purchased ca 1985. And I have some equivalent climbing gear that I purchased ca 2015. The modern stuff is a lot lighter, but no less safe. The manufacturer has gone on the record as saying that they specifically decreased the diameter of the steel cable in the equipment, because they had never received a single report of the cable failing.

Agreed … engineers (who, believe it or not, are human) do make mistakes. Just remember the engineers (& of course the accountants) who picked a cheaper O-ring for the shuttle. And not only have I played an engineer on TV, I am one. (I even have the train set to prove it!)

It wasn’t an ‘honest’ mistake at all, was it? It was (at the very absolute kindest) an ignorant mistake, and a stellar example of the Dunning-Kruger effect. The programmer never once thought about how dangerous any error might be (nor did the supervisors or pretty much anyone else in a decision-making capacity). It never occurred to anyone that this was not consistent with safety culture.

Expecting the FDA to do a good job of testing is a joke. They have neither the skill, the experience, nor the inclination to do an appropriate job. And I suspect you wouldn’t be nearly so willing to absolve people of what is considered criminal negligence (and in fact IS) if it were you operating the machine that caused the harm, or your spouse or child who was harmed, or you who suffered and died.

And even if it was a hardware malfunction, it would have been criminally negligent to release a product that could so easily and undetectably malfunction and cause such a high level of incredibly costly harm. Product liability law is super clear on this. That is EXACTLY why things were settled out of court. People involved and AECL as a company acted in a very wrongful manner, and as fajensen very accurately said, “businesses will just keep right on killing and maiming people to save a few dollars”.

The profit motive, and the obsession with ‘shareholder value’ has killed more people than I’d care to know, and the managers and accountants are just as culpable when they create an emphasis on cost-savings instead of safety at all costs. There is as much blood on the hands of the bean counters as the programmers, designers, and everyone else. I’d say this is particularly true in the modern world where there are often untrained managers supervising projects they know nothing about, and finance and marketing people making decisions (and tying the designer’s and manufacturer’s hands) without knowing anything more than cost data.

This is CLEARLY the sort of place where a man’s reach should NEVER exceed his grasp. When it does, people die needlessly. And when a profit motive comes into play, people willingly close their eyes just so they can get a piece of the pie. It was wrong from a design standpoint, and perhaps even immoral, for them to have ever let this machine get to market.

The FDA does not, in fact, perform any testing. According to their website:

“FDA does not develop or test products before approving them. Instead, FDA experts review the results of laboratory, animal, and human clinical testing done by manufacturers. If FDA grants an approval, it means the agency has determined that the benefits of the product outweigh the known risks for the intended use.”

In other words, the FDA accepted AECL’s false claims or assumptions of safety. It was a situation similar to what doomed the first Ariane 5 rocket, which reused software from the Ariane 4.

The shared mistake was assuming that carrying over parts of a previous design, one that had worked in spite of certain poor decisions (such as leaving a subsystem running after it was no longer needed, to avoid a small inconvenience in case of a late launch hold), would have no effect on a later iteration despite major changes to other functions and features.

In the Ariane 5’s case, that inherited subsystem served no purpose after liftoff, yet data access was allowed between it and the rest of the systems, and it was activated before launch and allowed to keep running just as it had in previous rockets. In essence, they left it in and enabled because they were a bunch of cheapskates unwilling to spend money on an all-up simulated launch test to see what would happen with and without that unused subsystem installed and running. Spending that time and money would’ve resulted in “Oh, crap. We need to leave that out, make sure any issues caused by leaving it out are corrected, then test again.” Instead they pinched pennies and blew many millions of dollars’ worth of satellites to tiny bits.
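A minimal C sketch of what the commenter is arguing for: make a pre-launch-only routine impossible to run once the vehicle is in flight by gating it on the flight phase, instead of leaving it enabled out of habit. The phase enum, `nav_state`, and `run_alignment()` are hypothetical names for illustration, not anything from the actual flight software.

```c
#include <stdbool.h>

/* Hypothetical flight phases; names are illustrative only. */
typedef enum { PHASE_PRE_LAUNCH, PHASE_FLIGHT } flight_phase;

typedef struct {
    flight_phase phase;
    int alignment_runs;   /* how many times the alignment routine actually ran */
} nav_state;

/* Hypothetical stand-in for a computation that is only meaningful before liftoff. */
static void run_alignment(nav_state *s) {
    s->alignment_runs++;
}

/* Called once per control cycle. The alignment routine is gated on the phase,
 * so once the vehicle is in flight it cannot execute at all.
 * Returns true if the alignment routine ran this cycle. */
static bool nav_cycle(nav_state *s) {
    if (s->phase == PHASE_PRE_LAUNCH) {
        run_alignment(s);
        return true;
    }
    return false;   /* skipped: not needed (and not trusted) in flight */
}
```

The point of the design is that "no longer needed" is enforced by the code's structure rather than by everyone remembering that the routine's output should be ignored.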

The owners should be executed by lasers.

Hey, they never found the guilty programmer anyway.

No, people should’ve gone to jail for repeatedly ignoring that those machines were killing people. AECL wasn’t innocent.

Also, “honest mistakes” still land people in jail. If you accidentally run someone over, you go to jail. It doesn’t matter if it was a mistake; you killed someone.

Atomic Energy of Canada Limited (AECL) is a federal Crown corporation, like the national postal service (Canada Post).

These days AECL is “government owned, contractor operated” but that was implemented well after Therac-25 as far as I know (after 2000 I think).

I’d like to be the first to point out that settling out of court did not keep anyone from going to jail. It was a civil case, not a criminal one. There was never a chance of jail time for anyone involved.

This is one of the few well-documented and well-investigated cases of a race condition in embedded software. It should be mandatory reading for every IT student.

There was a very similar overdose incident involving radiotherapy patients at the Oncology Treatment Center in Bialystok, Poland. That one, however, was traced to a lack of proper safety barriers (both technical and procedural), and the root cause of that was traced to a… faulty rectifier diode.

http://www-pub.iaea.org/MTCD/publications/PDF/Pub1180_web.pdf

I might just respectfully point out that IT and Engineering are actually two very different things. IT typically handles computer infrastructure: desktops, laptops, tablets, domain controllers, office-style software apps, etc. Engineering designs and creates systems from the ground up, typically involving the integration of hardware and software design. One MAJOR problem that I’ve seen in these last 35 years is assigning IT ‘experts’ to perform engineering jobs.

Beware that parts of the report are NSFW due to nudity. (And not the enjoyable kind either – think disgusting and disturbing.)

Often relabeled “NSFL” if it’s medical squick.

With both my parents diagnosed with cancer (dad long gone, mom just had a breast removed), I’m glad the machines they use nowadays are a few generations further along :S

I share the sentiment, especially since my mom just had a relapse scare. Thank god for medical and technological breakthroughs. ‘god’ being a stand-in for all the hard-working innovators whose names I don’t know, of course.

“Thank god for medical and technological breakthroughs. ‘god’ being a stand in for all the hard working innovators i don’t know the name of, of course.”

Couldn’t agree more. Also, in completely different fields of medical tech, it’s good to see great strides being made across the board over the past decade or so.

My brother-in-law’s best friend may have been one of the victims of the Therac-25. He had a brain tumor and it was removed.

The surgery was deemed a success, leaving him with ~95% of his capacity. But to reduce the possibility of recurrence, he traveled to a major medical center for radiation treatment, and came back a vegetable.

The deaths reported here are not the most recent.

Interestingly enough, Frank Borger, the staff physicist at the clinic in Chicago, was also one of the leading PDP-11 internals experts at the time. I wonder if his PDP-11 expertise came into play while investigating the issue with the PDP-11 in the Therac?

The software was written entirely in PDP-11 assembly (and was monolithic, including some proprietary OS), so my guess is that it didn’t hurt, to say the least. I’m more interested in why a top-notch computer engineer with expertise in a machine used across many industries would work as a clinic staff physicist. What does a clinic staff physicist even do?

A staff physicist is usually a PhD-level position, held by someone with an extensive physics or nuclear engineering background. It’s a combination of a clinical and a research/theoretical position: while the radiation oncology docs will have training in physics during their fellowship, probably equivalent to a Master’s level, their focus is more on understanding the applicability of and approach to treating different cancers with radiation, managing side effects, coordinating with other treatment teams (medical oncologists, surgical oncologists, etc.) and so forth. The physicist will have a better understanding of where the particles come from, how to make them go where needed, and the finer details of how they interact, with less focus on the specific diseases being treated.

As you’d expect, especially in the 80s when a lot of the hardware was less mature, this requires a deeper understanding of how all the black boxes work than the typical clinician, and it’s not surprising that a really good staff scientist would also be a really good software/hardware jockey.

Thanks for the answer.

“The software was written entirely in PDP assembly (and was monolithic, including some proprietary OS”

Back then all OSs were proprietary, even Unix. One has to understand that your average ARM Cortex-M4 is much more powerful than one of those PDP-11s.

Sure thing, I know UNIX was proprietary for a long time (even though the source for Versions V and VI was included in an academic coursebook at the time). I was more impressed by the “monolithic firmware including their own OS” part, as this isn’t something one sees often today… then again, one doesn’t often hear about someone being killed by a programming bug today, and not having to write your own OS to start with might help a bit with that.

I would expect that the ‘OS’ was much less than you would expect even of something as simple as DOS. The ‘OS’ was probably not much more than some vector tables and common routines, along with some task management.
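To make that concrete, here is a rough C sketch of such a minimal executive: a table of task function pointers (loosely analogous to a vector table) and a round-robin loop that calls each task in turn. It assumes cooperative tasks that always return promptly; the task names and counters are hypothetical, and this is not a reconstruction of the Therac-25’s actual executive.

```c
#include <stddef.h>

/* A task is just a function the loop calls in turn (cooperative multitasking). */
typedef void (*task_fn)(void);

static int counter_a, counter_b;   /* hypothetical per-task state */

static void task_a(void) { counter_a++; }
static void task_b(void) { counter_b++; }

/* Table of tasks, in the spirit of a vector table: an array of function pointers. */
static task_fn task_table[] = { task_a, task_b };
#define NUM_TASKS (sizeof task_table / sizeof task_table[0])

/* Run the round-robin loop for a fixed number of passes.
 * A real executive would loop forever; the bound keeps the sketch testable. */
static void run_scheduler(int passes) {
    for (int p = 0; p < passes; p++) {
        for (size_t t = 0; t < NUM_TASKS; t++) {
            task_table[t]();   /* nothing preempts a task, so each must return quickly */
        }
    }
}
```

The trade-off with no preemption is that a single task that hangs stalls everything, which is exactly where a watchdog and hardware interlocks earn their keep.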

Wow, the same Frank. Great catch! Google even shows him posting in comp.sys.dec under the name “Frank Hardware Hacker Borger”.

Not sure Varian would appreciate the use of a photo of one of their Clinacs in an article talking about the Therac-25. :S

Indeed, a completely different beast from the Therac-25.

I still operate one of those Varians. (they’re getting a bit old though)

Added a caption to clarify that it’s not the same machine

Yeah, Varian has had their own share of problems (so I’ve heard).

My dad wrote the docs for that machine. The Varian.

Another rule of thumb:

If your device can cause serious injury or death if in an unsafe configuration, design hardware interlocks into it and don’t let anyone talk you out of it.

Add software interlocks, preferably two different ones, where either one being triggered brings the device to a screeching halt.

Never assume that an operator won’t be stupid enough to do Y.
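Here is a minimal C sketch of the “either interlock trips, everything stops” rule, assuming two independent software checks. The field names (a turntable-position flag and a dose-limit flag) are hypothetical, and per the rule of thumb, this would sit alongside hardware interlocks, not replace them.

```c
#include <stdbool.h>

/* Hypothetical machine state, each flag assumed to come from its own sensor. */
typedef struct {
    bool turntable_in_position;   /* e.g. from a position switch */
    bool dose_below_limit;        /* e.g. from an independent dose monitor */
} machine_state;

/* Interlock 1: mechanical configuration check. */
static bool interlock_position_ok(const machine_state *m) {
    return m->turntable_in_position;
}

/* Interlock 2: independent dose check. */
static bool interlock_dose_ok(const machine_state *m) {
    return m->dose_below_limit;
}

/* The beam may be enabled only if every interlock passes;
 * any single tripped interlock forces the safe state (beam off). */
static bool beam_permitted(const machine_state *m) {
    return interlock_position_ok(m) && interlock_dose_ok(m);
}
```

Defaulting to “off” unless every check positively passes is the key choice: a missing or failed reading can only ever stop the beam, never enable it.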

Damn right.

Yup. If they’d had Hackaday in 1985 this wouldn’t have happened. Which is pretty terrible.

If anyone ever bothers to read their end-user license agreement, they will notice fine print somewhere in the document stating that the software is not to be used for medical equipment or nuclear power plants. If you want to run stuff like that, you don’t use off-the-shelf software, but buy/build software with the necessary safeguards.

Interesting. I remember hearing about this case at the time. My memory of it was that the bug caused an earlier, lower-power machine to trip a breaker, but that because of its higher power capabilities, the bug resulted in the patients receiving an excess of radiation.

I imagine that patients getting treatments when the fuse blew also received overdoses, just not immediately critical ones.

No – the Therac-20 would blow a fuse when the linear accelerator attempted to power on with the turntable out of position. This would happen fast enough that the patient would not be exposed.

This should be standard reading for IT students. Indeed, it was when I was a student.

I heard about this in class just the other day. Everyone teaches it as an example of a race condition now.

I would modify the last sentence. It’s what happens when life critical hardware is improperly designed. Software should never, on its own, be life critical. There’s too many ways it can go wrong.

Have you mentioned this to Tesla Motors?

Or indeed any major vehicle manufacturer in the last decade? Software with ultimate control over engine output, brakes and steering (in approximate order of increasing severity of failure) is getting quite common. Frightens me so much I won’t drive anything made since 2000, and I write safety-critical software for my day job. (And get to see the caliber of what has been deployed in the field.)

Or indeed any modern “fly-by-wire” commercial aircraft manufacturer.

Since the death rate under human operation is reportedly 10x the death rate under autopilot, Tesla feels morally (and perhaps legally) compelled to provide autopilot software in all of their vehicles.

All modern passenger airliners pass the control inputs first through (a bunch of) computers, THEN to servos which move aerodynamic control surfaces…same goes for pretty much all jet fighters since the end of the 70s with the MiG-29 being the only exception (the computers assist in stability, but there is mechanical linkage between the pilot controls and control surfaces)…

Nowadays anything above a couple tens of tons MTOW is fly-by-wire…AND IT WORKS!

Moral of the day – design life critical SW to fail safely.

@AKA the A:

“AND IT WORKS!” is perhaps a bit of an overconfident statement. Today’s software is so damn complicated that it is untestable. Yes, one can test the “vast” majority of cases, under “most” inputs, but it is not possible to generalize and say that a piece of software is “perfect” and will “always” perform as expected.

All systems designed by man are fallible. The question that should always be asked is: what happens when a system fails? How can failures be minimized and/or mitigated?

Take a look at the following:

http://www.flyingmag.com/technique/accidents/predicament-air-france-447

And there’s lots more, here:

http://www.cse.lehigh.edu/~gtan/bug/softwarebug.html

http://paris.utdallas.edu/IEEE-RS-ATR/document/2009/2009-17.pdf

The list goes on, and on, and on…

I’ve worked with someone who did life-critical programming. I thought he was a reckless lunatic who was certain to get someone killed someday. I didn’t hide my feelings which made me something of a pariah and now I don’t work with him anymore.

To do life or death programming requires a lack of agnosticism about failure modes. The same people who are confident that they know the scope of what they don’t know probably are the ones who are least qualified to do this sort of programming.

Sort of like political power: “those who want it… etc.”

When industrial safety is concerned, you remove the power source before working on a system. No stored energy or energy supply minimizes the ways things can go tits up (batteries, capacitance, gravity, and spring tension can still fuck you). In the embedded world, apparently, because the serial console says everything is OK, it must be all right to stick your nuts in the automated shear… This is because computers are magic?

Maybe I’ve just been playing too much video poker, but my policy is to treat deterministic systems as if they aren’t (as far as safety is concerned).

AFAIK: the program looks something like:

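#include <stdio.h>

int main(void) {
    int i = 1;  /* "safe" for as long as it stays positive, apparently */
    for (; i > 0; i++) { printf("It is now safe to put nuts in shear.\n"); }  /* i++ eventually overflows: undefined behavior */
    for (; i < 0;) { printf("Darwin is laughing at you.\n"); }
    return 0;
}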

Then again: I was taught a gun is always loaded so maybe I'm just a victim of doublethink?

Unless you have checked less than one second ago, a gun is always loaded, even when it is empty.

even then the gun is still loaded….

I’ve been on a shooting range, and there are things you can do to a gun that give you some visual assurance that it is safe. For example, a double-barrel shotgun that is “bent” open, or a revolver with its cylinder swung out, can be assumed to be safe. For other weapons, there were red plastic flags affixed to plastic cartridge simulacra that would stick out of the breech to show that nothing else was in the chamber. The range safety rules at the last range I visited required that all guns be visually safe in some similar manner and not be handled during the cease-fire interval (when you could go forward of the firing line and set targets, etc).

We are just about to enter an era when cars will inevitably drive themselves. Now imagine where all this lawsuit bullshit will go. Sue the programmer? The designer? The chip maker? The possibilities are endless.

The company.

Just wait till they work out that they brain-damaged 1% of the population with antipsychotics… and we just didn’t know.

Source?

Tin-foil?

Or just lack of knowledge of the natural history of psychosis (death by suicide or misadventure)

It always involves a history of antipsychotics. The people who didn’t get medicated did a lot better; there have been studies.

People like you make me angry because you’re helping people get their brains melted against their will!

I think it’s time for your meds.

^^ This is how you lose a rational debate.

~5% of people diagnosed with schizophrenia commit suicide.

Non-compliance with medication increases that risk

Antipsychotic therapy reduces symptoms and relapse rates

Progressive brain volume changes in schizophrenia are thought to be due principally to the disease.

The strongest correlation with grey matter loss in Schizophrenia is duration of follow-up. The size of the effect of antipsychotic use – which is significant at the statistical level – is less than 1/10th that of follow-up duration.

This is complicated by the statistically significant correlation between antipsychotic dose and symptom severity, muddling the line between treatment effects and disease effects.

I’m not saying the correlation doesn’t exist. But the last thing people with psychotic symptoms and their carers need is conspiracy theory about brain damage.

Or more succinctly*:

You have been diagnosed with schizophrenia. In ten years, you will lose 1.80 units of grey matter. If we treat you with antipsychotic medication, you will have fewer life-destroying relapses, they will be briefer, and you are both more likely to keep your family and less likely to kill yourself (baseline risk ~5%); but you will lose (on average) 1.87 units of grey matter, will likely be obese, and risk known infrequent (<5% lifetime risk) severe side effects like tardive dyskinesia, extrapyramidal symptoms or serotonin toxicity.

* This is succinct: it is a brief snapshot of the kind of complexities a treating team will discuss with a patient and their carers over years of treatment.

^^ this is not how you win a rational argument.

My referenced post has been eaten, but here is the brief summary*:

You or your loved one has been diagnosed with schizophrenia, a deadly disease where 40% of the mortality comes from suicide or misadventure. There is a reasonable chance that with treatment they will never have a relapse; there is a reasonable chance they will never be cured.

We know that over time people with schizophrenia have loss of grey matter. We think there may be an added effect of antipsychotic use, but this is also tied to severity of disease.

If we don’t treat, you will lose 1.80 units of grey matter.

If we do treat, you will most likely recover from your acute episode, have fewer symptoms during it, and be less likely to kill yourself or others through misadventure (the latter being very rare), but you are likely to gain weight, have sleepiness or difficulties with concentration and fatigue, and, if you require antipsychotics over a long period of time, lose ~1.87 units of grey matter.

*This is not a brief summary of the kind of conversation that is had between treating teams and patients. It is the briefest summary of that summary, ignoring all possible patient factors that shift the choice and dose of agent.

If you and your carers don’t trust your team, get a second opinion from a professional service with experience in schizophrenia and a team that can support your ongoing care.

Don’t get it from anyone not willing to reference PubMed or publish in a peer reviewed journal, and don’t ignore 30+ years of treatment until the weight of consensus is shifted against it.

Aka most doctors are mostly right about most things; and mostly unsure about most other things; but any doctor who is certain about anything will almost certainly be wrong.

It wasn’t eaten; the spam filter caught it, probably because of the links. It’s up now.

Most of the US school shootings were done by people on strong psychiatric drugs… whether the drugs were the cause or a symptom is yet to be determined by an actual investigation…

Certain politicians and people would rather demonize a type of inanimate object rather than put the blame on the people who used them. No need to investigate why, just wail and whine about the tools.

Good point. But the vast majority of shooting deaths are done by perfectly sane, white men with legally purchased firearms.

More seriously; equating mental health to firearm deaths is WRONG and PROFOUNDLY OFFENSIVE and you should educate yourself then withdraw the comment.

The person most likely to get killed with a gun if you have mental illness is yourself. Next is your family.

The person you are most likely to kill with a gun without mental illness is your family, or yourself. The gun owner most likely to kill your loved ones is you.

Gun laws aren’t about stopping a shooter from getting access to guns. They are about distancing lethal intent from a high-lethality weapon. Sometimes that intent is self-harm and driven by mental health; mostly it is anger, rage or opportunity.

Gun laws don’t affect the rate of suicide attempts or violent crime; but the experience of every developed country other than the USA suggests they do reduce lethality.

That comment does seem a little “out there”; however, the old “chemical handcuffs” were (and are) popular in nursing homes, but recently there has been a move away from using them due to the reduced life expectancy.

How many references do you need?

Straus SM, Bleumink GS, Dieleman JP, et al. Antipsychotics and the risk of sudden cardiac death. Arch Intern Med. 2004;164(12):1293-1297. Erratum in: Arch Intern Med. 2004;164(17):1839.

Reply to AussieLauren:

What proportion of those deaths involve a law enforcement or military shooter?

I remember reading about a similar deadly outcome with watchdogs. When I was learning about WDTs, I came across this article (don’t remember the ads though). It’s a thoughtful read about how WDTs are incorrectly implemented.

http://www.ganssle.com/watchdogs.htm
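One pattern commonly used to avoid the classic mistake of kicking the watchdog unconditionally from a timer interrupt is to have each task check in, and service the watchdog only when all of them have. A minimal C sketch, assuming a hypothetical `hw_watchdog_kick()` in place of the real register write (this is not code from the linked article):

```c
#include <stdbool.h>
#include <stdint.h>

/* Each task sets its own bit once per cycle to prove it is still making progress. */
#define TASK_SENSOR  (1u << 0)
#define TASK_CONTROL (1u << 1)
#define TASK_COMMS   (1u << 2)
#define ALL_TASKS    (TASK_SENSOR | TASK_CONTROL | TASK_COMMS)

static uint32_t checkin_flags;
static int watchdog_kicks;   /* counts kicks so the sketch can be observed */

static void task_checkin(uint32_t task_bit) { checkin_flags |= task_bit; }

/* Hypothetical stand-in for the hardware kick; a real MCU writes a register here. */
static void hw_watchdog_kick(void) { watchdog_kicks++; }

/* Kick the watchdog only if every task has checked in since the last kick,
 * then clear the flags so each task must check in again. If any task hangs,
 * the watchdog is starved, and on real hardware it would reset the system. */
static bool watchdog_service(void) {
    if ((checkin_flags & ALL_TASKS) == ALL_TASKS) {
        hw_watchdog_kick();
        checkin_flags = 0;
        return true;
    }
    return false;
}
```

The watchdog then vouches for the health of every task, not merely for the fact that the timer interrupt is still firing.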

Considering how expensive a machine like that is, it’s hard to understand why they would decide to do away with a few simple interlock switches.

It is easy to understand.

Many if not most corporations are run by sociopaths.

“A safety engineer? Full Time? PSHAW! We don’t even have a full time programmer!” was heard in that company – guarantee it.

An excellent book to read (especially for new programmers) on these types of problems is If I Only Changed the Software, Why Is the Phone on Fire? by Lisa K. Simone.

I myself on the first pass of hardware/software for a coil winding machine had the computer freeze and had the coil winding head hit the end of the winding area and fracture an aluminum support bracket. The next version of the machine used additional end stop microswitches and diodes to cut the power to the servo motor in the end stop direction, but allow it to back off the end stop.
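The same directional logic can be mirrored in firmware as a second layer. A minimal C sketch, assuming hypothetical limit-switch inputs and a signed motor command (positive means toward the max stop). It complements the diode cutoff rather than replacing it, since firmware that has frozen can’t enforce anything.

```c
#include <stdbool.h>

/* Hypothetical limit-switch inputs at each end of travel. */
typedef struct {
    bool at_min_stop;
    bool at_max_stop;
} limit_switches;

/* Clamp a signed motor command: motion into a tripped end stop is blocked,
 * but motion away from it (backing off) is still allowed. */
static int clamp_motor_command(int command, limit_switches sw) {
    if (command > 0 && sw.at_max_stop) return 0;
    if (command < 0 && sw.at_min_stop) return 0;
    return command;
}
```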

Another great one is a book called “Digital Woes: Why We Should Not Depend on Software” (Lauren Ruth Wiener, 1993).

The title is a little wacky in the world of 2015, but the book has a lot of great examples of software or electronics screwing up and causing unusual things to happen. The Therac-25 is in there, of course, and so is the PATRIOT system error that was a HaD post a few days ago. More mundane ones include discussions of a rail control system that would lock up every autumn as wet leaves were ground into a conductive paste on the tracks; a McDonald’s drive-thru that would spontaneously report dozens of orders for cheeseburgers every time a nearby military radar was operating; and a tomato plant that repeatedly called 911 as juice from an overripe tomato dripped into the answering machine below.

Great read.

That tomato story is a good one! The rail thing though I think is the controversy over “leaves on the line”. We had that in Britain, although it might have been after 1993, it’s probably the same problem. Rail services were cancelled or severely delayed because of “leaves on the line”, which people took to be a pathetic, ludicrous excuse.

What actually happened, was the paste of crushed wet leaves was an *insulator*, and meant the contact the train made to the rail was interrupted. This contact has been used, since practically forever, to detect a train on the line. So when the line was insulated by leaf paste, the train “disappeared”, and preventing trains from smashing into each other is a business that errs on the side of caution.

Then again at this point the nation’s railways had been privatised for a few years, so maintenance, including trimming trees back, was something they barely bothered with. Same thing happened when we sold off our national water board, they cut back maintenance and, during droughts, it was found that 50% of the water supply was leaking out through cracks.

I can’t speak to leaves as insulation, but when pounded into a smooth coating on the rails by trains rolling over them, they’re *extremely* slippery. Obviously this is not ideal for trains wishing to accelerate or, somewhat more concerningly, slow down. In the US, this phenomenon is called “slippery rail”. Read more: https://en.wikipedia.org/wiki/Slippery_rail

Similarly, trains may need to slow down in unusually high heat or cold because the rails expand/contract and shift the gauge, which must be fairly precise for safe operation. All of these are good reasons to delay or cancel service, but without understanding why, they tend to sound like weak excuses.

IIRC, the leaf sludge in the story I mentioned had become baked onto the rail on one of the warm, sunny days you sometimes get in autumn.

The high heat can actually do substantially more terrifying things than “shift the gauge” (there’s a bit under a cm of tolerance in that for comfortable rail, and the safety margin is more than that): the actual distance between the rails doesn’t tend to change much, but the path of the rails can shift /feet/ to the side in a concentrated area as buckling focuses the compression forces in the rail on one small region of deformation. Cold isn’t as much of an issue, as tension doesn’t cause buckling. But seriously, do a Google image search for “heat kink rail”; it’s impressive and definitely not something you want to ever hit at speed.

The long-running (30 years) mailing list and digest The Risks Digest, moderated and compiled by Peter G. Neumann and sponsored by the ACM, is freely available and strongly recommended for new (or experienced) programmers, security and IT/CS professionals, and academics.

It’s worth a HaD article in its own right.

http://catless.ncl.ac.uk/Risks/

Ah yep, educational, informative, and entertaining! Keeps you up to date with the latest stuff in computery risks in general, from a very smart and educated viewpoint. Back when Usenet still lived, comp.risks was one group I always kept in my subscribed list.

Wasn’t the temporary “solution” (to the problem of speedy operators being too fast on the keyboard) to issue a field service notice to have the keycaps removed from the cursor keys?

I thought it was QWERTY (urban myth)

Yes – AECL issued instructions that the up arrow keycap was to be removed, or the key rendered inoperable. This was just a stopgap to allow the machine to be used while more permanent fixes were made.

I read this article with interest, having stumbled upon it a couple of years back. After I read about it the first time, a “Therac 25” became my unit of measure for how lethal a routine or theoretical new design may be…

(So far it would appear nothing that came out of my head or workshop has been a Therac 25)

So, running with scissors is about a Therac 4, falling asleep on railway tracks scores a Therac 90. I like it!

In the first chapter of his 1993 book, Steven M. Casey writes about the 1986 incident with the Therac-25 at the East Texas Cancer Center in Tyler, Texas, and the victim, Ray Cox. Casey noted: “Before his death four months later, Ray Cox maintained his good nature and humor, often joking in his east Texan drawl that ‘Captain Kirk forgot to put the machine on stun.'”

The title of that chapter and the book is Set Phasers on Stun.

“Cheapskate business owners kill patients to reduce engineering costs” is the major takeaway I see.

One engineer, and not on staff – creating software to control a particle accelerator that gets pointed at people’s heads?

Call me crazy for expecting some serious engineering oversight, up to and including engineers whose sole job it is to discover failure modes.

There is a flip side to this coin:

Had those extra safety checks been put in place, how many people would have died due to not having the new fancy features of the new treatment machine? How many wouldn’t be able to afford the more expensive machine so would have died?

Compare people saved due to innovation vs. people killed due to innovation.

In my opinion, modern medicine is far too conservative.

The older versions had some of the safety features – read the article.

You think this story is in any way excusable?

This has nothing to do with a cautious medical culture; this is about a corporation’s greed causing deaths. In fact, the owners of the company murdered those people to save on a couple of ‘useless salaries’ for, you know, engineers who make sure the particle accelerator is safe.

“The Therac-25 itself also started buzzing in an unusual way. The patient began to get up off the treatment table when he was hit by a second pulse of radiation. This time he did get up and began banging on the door for help.”

Holy crap, that makes me think of one of the Final Destination movies. That’s terrible on so many levels.

It’s even worse than that – there is an intercom and video camera which allows the operator to monitor their patient. The walls for treatment rooms are thick concrete, so you can’t just yell for help. That day, the camera and intercom were out of service….

You should also consider putting a blanket or something on that poor computer if it is freezing.

This story reminds me of a story I heard from the horse’s mouth.

Kirk McKusick used to (still does?) sell a video / DVD called “Twenty Years of Berkeley Unix.” It’s the history of BSD – much (if not most) of which he personally witnessed. I was in the audience when it was taped.

Near the end there is a Q&A segment. One of the questions was, “What’s the most unusual application of Unix you’ve heard of?”

His answer was an article he said once bore the title of “/dev/kidney” – and was about a Unix controlled dialysis machine.

His reaction to that was, “God, I hope I never need dialysis. I can just envision the machine entering some sort of critical portion of the operation, and then seeing ‘panic: freeing free inode.'” [Kirk McKusick’s most famous contribution to BSD is his work on the Fast File System – FFS]

I know the safety mechanisms could have prevented the incidents, and it’s an interesting case study. But I can’t help thinking about the thousands of lives this machine has saved. I would always go for the safest route possible, but the regulations on medical devices are just no fun. My colleague searched for months to get a medically approved LiPo battery…

I worked for a medical imaging company around that time. I can assure you that software practices changed as a result of those deaths, at least within medical device companies. Requirements were picked over, design documents picked over, Risk Analysis mandated and picked over by many peers, unit and system test design and record keeping, etc. No random check-ins of code with meaningless or empty log messages. In other words, it introduced the concept of engineering processes to software. Something I haven’t seen elsewhere since the late ’90s. IOW, nothing at all like the free-for-all hacking in most of the FOSS world.

I really hate how they used rads then and millisieverts now.
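For anyone converting between the two: the rad is an older unit of absorbed dose, defined as 0.01 gray. The sievert is a radiation-protection quantity (equivalent dose), obtained by multiplying the absorbed dose by a radiation weighting factor that is 1 for X-rays and electrons, so for those beams 1 rad corresponds to 10 mSv. Therapy doses themselves are normally quoted in grays rather than sieverts.

```latex
1\,\text{rad} = 0.01\,\text{Gy} = 10\,\text{mGy}
\qquad
H = w_R\,D,\quad w_R = 1 \text{ (photons, electrons)}
\;\Rightarrow\; 1\,\text{rad} \;\to\; 10\,\text{mSv}
```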

Oh.

I was involved with a software-only interlock, but this one worked. Here’s what it took:

Two PLCs, by two different manufacturers.

Two independent I/O chains, no shared contacts

Two Programmers, using entirely different languages (one ladder logic, one state machine)

A third engineer to write validation procedures (avg. 300+ pages)

The entire group staff performing said validations biannually, or whenever a configuration change occurred

If the validation failed even one step, fix problem and start back at the beginning

This was at a National Laboratory accelerator with a 9 GeV electron beam.
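The comment doesn’t say how the two PLCs’ outputs were combined; one common arrangement is 2-out-of-2 voting, where the beam is permitted only when both channels agree it is safe, and any disagreement is treated as a fault. A minimal C sketch of that voting logic, assuming each channel’s decision arrives as a boolean (the names are hypothetical):

```c
#include <stdbool.h>

typedef enum { VOTE_PERMIT, VOTE_TRIP, VOTE_FAULT } vote_result;

/* 2-out-of-2 voting between two independently implemented channels.
 * Permit only if both channels permit. If they disagree, one of them is
 * wrong and there is no way to tell which, so the result is a trip that
 * is also flagged as a discrepancy fault for investigation. */
static vote_result vote_2oo2(bool channel_a_permits, bool channel_b_permits) {
    if (channel_a_permits && channel_b_permits) return VOTE_PERMIT;
    if (!channel_a_permits && !channel_b_permits) return VOTE_TRIP;
    return VOTE_FAULT;   /* disagreement: beam stays off */
}
```

The value comes from the independence described above (different vendors, languages, and I/O chains), which makes it unlikely that both channels share the same bug and wrongly agree.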

Ah, I’d have just built a big lead wall and stood behind it. Simpler.

Actually, the electron is very small compared to the nucleus of a lead atom. It might fly right through without a problem. You need lighter atoms (such as those in water) to really trap those boogers.

Every software engineer who works on systems like this should read Les Hatton’s “Safer C”.

http://www.amazon.com/McGraw-Hill-International-Series-Software-Engineering/dp/0077076400

The problems with the Therac series only popped up when the operators gained proficiency and were able to enter the settings and bang the button to fire faster than the machine was capable of running its software.

When they slowly and carefully checked and verified each step, the race condition would finish and the machine would be properly set up. When the operator “won the race”, the machine would just go “OK, operator said fire, I FIRE!” The 6 and 20 had the mechanical interlocks and fuses to blow and halt the firing as a band-aid for the crappy software.
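The race described above can be illustrated with a deliberately simplified Python sketch. It is not a model of the actual Therac-25 code; the class `Machine`, its methods, and the version-counter approach are hypothetical, chosen only to show the difference between firing on whatever setup last completed and refusing to fire when that setup no longer matches the operator’s current entries.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Prescription:
    """Operator-entered treatment parameters (hypothetical, heavily simplified)."""
    mode: str          # "xray" or "electron"
    version: int = 0   # incremented on every operator edit


class Machine:
    def __init__(self) -> None:
        self.rx = Prescription(mode="xray")
        # What the (slow) hardware setup was last completed for.
        self.configured_mode: Optional[str] = None
        self.configured_version: Optional[int] = None

    def operator_edit(self, mode: str) -> None:
        # A fast operator can edit the entry after setup has already run.
        self.rx.mode = mode
        self.rx.version += 1

    def run_setup(self) -> None:
        # Stands in for the slow physical setup; records which version of the
        # prescription the hardware now matches.
        self.configured_mode = self.rx.mode
        self.configured_version = self.rx.version

    def naive_fire(self) -> str:
        # "Operator said fire, I FIRE!": uses whatever setup happened to finish last.
        return f"FIRE {self.configured_mode}"

    def checked_fire(self) -> str:
        # Refuses unless the completed setup matches the *current* entries.
        if self.configured_version != self.rx.version:
            return "REFUSED: setup is stale"
        return f"FIRE {self.configured_mode}"
```

Running setup and then editing the mode from `xray` to `electron` makes `naive_fire()` return `"FIRE xray"` even though the screen now says electron, while `checked_fire()` returns `"REFUSED: setup is stale"` until `run_setup()` is called again.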

They took the band-aids off the 25 and plastered on some more software in an ineffectual attempt to replace them, instead of starting over from scratch on the software to ensure that nothing could go worng. (To quote the Westworld tagline.)

It’s the wrong mindset to consider the hardware interlocks as band-aids for crappy software. The correct mindset is to consider them insurance. I do this all the time. I write my software with the idea that no interlocks are there. If the interlocks fail, my software is the safety net. If the software fails, the interlocks are the safety net.

Airbags and seatbelts are usually more effective together than independently.

According to my local ER doc, airbags and safety belts both have to work to get real benefits (each isn’t much good by itself and may actually be worse in rare cases), but your point is well taken.

I see some people blaming the engineers, but I tend to think it’s more management / accounting or whatever from above.

A lot of the time, an engineer will follow instructions even when directly given instructions that are counter to their ‘opinion’.

The engineers got it right in the first place with the safety interlocks so it wasn’t an engineering decision to remove them.

In avionics you have to sign off on something being safe, and if anyone dies because it’s not so safe, then that signature is going to come back to you along with a lot of people asking a lot of questions.

I was once pressured for several years to sign off on a system. In hindsight, they just wanted to have someone to blame rather than spend the money to fix the problem. The problem I fixed was in automated flight control, and it caused the aircraft to randomly drop about 1000 feet. An unrestrained pilot would be on the roof of the cabin saying his Hail Marys. It was a software bug in a poor system. Even though I fixed that one bug, I refused to certify the system as safe: there would have been other bugs, so there was no way I was putting my name to it. Eventually they spent the millions and replaced the system, software and all. If I had signed off on it, they wouldn’t have done this until they lost an aircraft and its crew.

Today we have ‘safety assessments’ that have no regard to the magnitude of possible consequences.

Within the same assessment, the safety ‘risk’ of someone losing a toe is assessed in the same way as a dozen people dying. The way we do safety assessments is all wrong. The methods used look like they come from insurance companies, which tend to place a dollar value on lost life.

As for the rest of this article, well, it’s about ‘fly by wire’, and now we have ‘drive by wire’ as well. There are many lessons to be learnt, and it’s a pity that we don’t turn to the air transport industry, as they have already learnt many of these lessons.

Where computers fit in with safety.

https://www.youtube.com/watch?v=bzD4tIvPHwE

Airbus had another incident where a microburst hit one of their new fly-by-wire airliners at an airshow and the computer would not allow the pilots to have full throttle. Fortunately, only the flight crew was on board for that one. In the incident in the above video, the pilots were to do a low flyby, but the only way the computer would allow such a maneuver was to put it into landing mode. So when they got near the end of the runway and pushed the throttles, the computer went “Nope. Landing.” Finally Airbus agreed that Pilot Knows Best and reprogrammed the system to always allow pilot override on the throttle. Pilots like to take off at full throttle, so if anything goes wonky they’re already at full thrust. Airbus’ idea was to save fuel and reduce noise by having the system calculate the minimum thrust needed to get the plane into the air. But if there’s a sudden downdraft or microburst, the plane would get slammed into the ground.

Another place Airbus likes to pinch pennies is the structural strength of some parts. Remember the one that crashed in November 2001, when the fin broke off, followed shortly by both engines? The pilot was whipping the rudder back and forth, stop to stop. The manual for the plane said Do Not Do That without pausing in the middle. It wasn’t designed to withstand that type of rudder motion. Boeing airliners are designed to take such abuse. A pilot cannot break a Boeing in flight with anything that’s possible to do with the flight controls. (Dunno about the mostly composite 787!)

That’s why I figure the MAV in “The Martian” must have been built by Airbus. They took the highest *observed* Martian wind speed and built it to not fall over in a Martian wind of that speed. Then Mars throws a *faster* wind at it. Oops. Never mind that wind on Mars would have to blow at 666 MPH to equal the 74 MPH minimum Earth wind speed for hurricane force. 666 MPH on Mars is quite a bit faster than the speed of sound in the Martian atmosphere. I very much suspect that it’s impossible for Martian wind to ever blow anywhere near that speed.

Actually, minimum-thrust takeoffs are widely used across the entire industry. It’s called Flex for Airbus and Derated for Boeing (D-TO), and it saves maintenance costs. Fun fact: it costs more fuel and produces a lot more noise, as the aircraft remains at a lower altitude for a longer time.

Actually, derated or ‘reduced thrust’ takeoffs are pretty much industry standard. (Look it up: it’s called FLEX or Derated Takeoff.) It actually increases fuel use and noise, as the airplane remains at a lower altitude for a longer time. It just saves some maintenance costs.

This aged well with the murderously badly designed Boeing 737 Max!

The video is not a landing but a low pass, at high alpha and low speed.

You can find the details of the accident here: https://en.wikipedia.org/wiki/Air_France_Flight_296.

Please forgive my ignorance but why would a machine such as this be given such a high potential output power, many times higher than the required dose?

Because you need a far higher electron beam current to get a therapeutic X-ray dose. Hence the overdoses when the X-ray target was not in place.

I first read about the Therac-25 in a book entitled “Fatal Defect: Chasing Killer Computer Bugs” by Ivars Peterson http://www.amazon.com/Fatal-Defect-Chasing-Killer-Computer/dp/0679740279/

A few variations, as I recall them, between Peterson’s rendition and the one presented in this article:

Peterson never relayed that the earlier machines were entirely manual and that only with the introduction of computer power did they become fatal. Peterson’s machines were always computerized.

Peterson never mentioned the existence of hardware interlocks on the earlier models, removed when the machines became fatal.

Peterson named the earlier models as the Therac-5 and the Therac-15, rather than the -6 and -20.

In Peterson’s telling, the fatal defect was entirely one of the user interface. In the -5, it was a series of text prompts that had to be answered in order, the first being for x-ray or electron therapy. By the time the therapy parameters had all been entered, the machine had had time to configure itself properly. In the -15, it was a curses-style text interface with arrow-key/tab navigation, which first made it possible to enter all the particulars, rush back to change the mode between x-ray and electron therapy, and then rush forward to the commit-and-begin command input, though this still seldom happened. In the -25, it was a full modern(ish) GUI with a mouse pointer, drastically cutting the time between changing the operating mode and committing to machine operation, yielding fatalities.

You don’t learn from your successes, only your mistakes.

When designing health & safety systems, if all potentially dangerous failure modes haven’t been addressed in the design and locked out by secondary systems, you have failed standard FMEA risk analysis and mitigation. The Therac 25 was not engineered to any engineering standard. I think these so-called engineers and their management could have been prosecuted under negligent homicide laws, because standard engineering practices known at the time were not followed. These guys didn’t innocently fail; they were negligent. The Therac 25 should never have been allowed to enter a state where lethal results were possible, no matter the cause. It seems to me that actually engineering the safety interlocks was much simpler than the functional design. How complicated could it have been to have a secondary system check, prior to activating the beam, that critical “settings” were in fact in place, given what seem to be not that many independent fatal failure modes?
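A secondary check of the kind this comment asks for can be sketched as a default-deny lookup: the beam is permitted only for explicitly listed combinations of requested mode and independently sensed hardware state. This minimal Python sketch assumes hypothetical sensor names and values (`turntable`, `beam_current`, `"xray_target"`, and so on) and encodes only the one hazard described earlier in the thread: high beam current is needed for X-ray mode and is dangerous when the X-ray target is not in place.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensedState:
    """Readings from sensors independent of the main control software (hypothetical)."""
    turntable: str      # e.g. "xray_target", "electron_foils", "light_field"
    beam_current: str   # coarse classification: "high" or "low"


# Default deny: only these (requested mode, turntable, current) combinations are safe.
# High current appears only together with the X-ray target in the beam path.
ALLOWED = {
    ("xray", "xray_target", "high"),
    ("electron", "electron_foils", "low"),
}


def secondary_permit(requested_mode: str, sensed: SensedState) -> bool:
    """Return True only if the sensed hardware state is on the allow-list for this mode."""
    return (requested_mode, sensed.turntable, sensed.beam_current) in ALLOWED
```

The design choice is an allow-list rather than a list of known failure modes: anything not explicitly listed as safe, including a mismatch nobody anticipated, is refused. For instance, `secondary_permit("xray", SensedState("electron_foils", "high"))` returns `False`.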

IMHO this was not an engineering failure, it was a failure to engineer.

http://www.nytimes.com/2010/01/24/health/24radiation.html?_r=0

Might be interesting if people want to know about more recent incidents.

The American FDA prevented thalidomide from entering the country, though the drug was a success in continental Europe. It’s the FDA-equivalent testing in Canada that is to blame. These killer machines still operate, and I’m shocked no one went to jail. Much like the Union Carbide disaster in India.

I’m sure this was bypassed because they believed that it would do more good than harm.

I get really angry at the incidents and the operators involved, including stakeholders and shareholders. As human beings we can know better… no amount of should have, could have, and would have helps anything. Either we know we can be healthy, safe, and working for the general welfare and well-being, or we cannot.

Radiation systems, even down to EMF emissions, need testing for adverse effects on sensitive groups… not only chemical and biological testing.

I am amazed that the best radiation devices, the Gamma Knives and X-ray systems that can detect and transmit at the same time at highly focused focal points, are not used as much as the huge machines that cook everyone to death and still cause more health issues to this very day.

A drug very similar to Thalidomide is still used for some cancer treatments.

It carries strict precautions: children or women of childbearing age are not even to touch the pills.

Surely someone with more experience within the programming of the machine should have checked it first? Products should be released YEARS after being tested thoroughly. I understand that the inexperienced programmer wanted to do something good in the world, but that shouldn’t cloud judgement on how safe something is.

Actually, microwave ovens really do have crowbar circuits that blow the fuse if the door interlock switches get into an invalid state.

30+ years ago we had an Amana RadarRange (remember those?) come into the shop that continued to run even when the door was opened. But yes, I have serviced a number of microwave ovens in my life, and that was the only one that did that.

Wow, they even called it the Radar Range. Did the Amana colony build that, or is that the cute front for the sweetest, most innocent-acting felonious Germans?

“Radar Range” was named because Amana’s parent company (Litton or Raytheon, I think) had surplus magnetrons left over from WWII radars, and needed to do something with them in the 1946-1949 timeframe. “Radar Range” was a trademark for many, many years. My aunt had one, and so did my in-laws.

Thanks for sharing and clarifying. I get paranoid easily, especially concerning military-grade equipment that is modified for uses other than general health, safety, and well-being. I worked with IR and NIR sensors, and thankfully those systems aren’t typically dangerous to the public. UV can get strange though.

Well, for better or worse, my mother died of lung cancer in ’79, prior to the introduction of the 25. I know she had radiation treatments that in the end did her no good. Good to know it could have been worse, much worse.