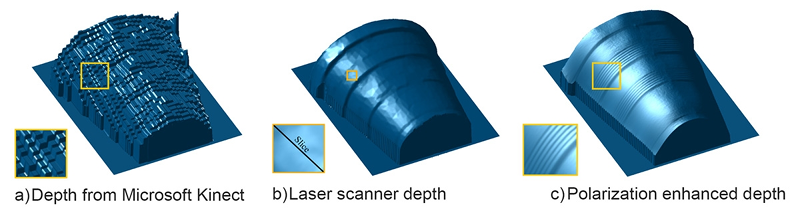

What if you could take a cheap 3D sensor like a Kinect and improve its resolution by three orders of magnitude? The Kinect is great, of course, but its resolution is limited. To get around this, MIT researchers are using polarization measurements to deduce 3D shape.

The Fresnel equations describe how the polarization of reflected light depends on the angle at which the light strikes a surface, so the researchers use the measured polarization to infer the surface orientation, and from that the shape. The polarizing sensor is nothing more than a DSLR camera and a polarizing filter, and the scanning resolution is down to 300 microns.
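To make the Fresnel relationship concrete, here is a minimal Python sketch of how strongly specularly reflected light is polarized as a function of the incidence angle. It assumes an ideal smooth dielectric with a refractive index of 1.5 and unpolarized illumination; the index and the function names are illustrative assumptions, not values or code from the MIT work.

```python
import math


def fresnel_reflectances(theta_i: float, n: float) -> tuple[float, float]:
    """Return (R_s, R_p) for light going from air (index 1) into a dielectric of index n.

    theta_i is the angle of incidence in radians, measured from the surface normal.
    """
    cos_i = math.cos(theta_i)
    sin_t = math.sin(theta_i) / n  # Snell's law gives the refraction angle
    cos_t = math.sqrt(1.0 - sin_t ** 2)
    r_s = (cos_i - n * cos_t) / (cos_i + n * cos_t)  # s-polarized amplitude coefficient
    r_p = (n * cos_i - cos_t) / (n * cos_i + cos_t)  # p-polarized amplitude coefficient
    return r_s ** 2, r_p ** 2


def specular_degree_of_polarization(theta_i: float, n: float = 1.5) -> float:
    """Degree of linear polarization of unpolarized light after specular reflection."""
    refl_s, refl_p = fresnel_reflectances(theta_i, n)
    return (refl_s - refl_p) / (refl_s + refl_p)


# Sweep a few incidence angles (in degrees) and print the resulting polarization.
for deg in (0, 30, 56.31, 75, 89):
    dop = specular_degree_of_polarization(math.radians(deg))
    print(f"{deg:6.2f} deg -> degree of polarization {dop:.3f}")
```

The values rise from 0 at normal incidence to 1 at Brewster's angle (arctan 1.5, about 56.3°) and fall back toward 0 at grazing incidence, so in this simple model one measured value can correspond to two different surface tilts.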

The problem with the Fresnel equations is ambiguity: a single polarization measurement does not uniquely identify the surface orientation, so several candidate shapes fit the same data. The novel part of this work is using depth information from a sensor like the Kinect to choose among the alternatives.
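A minimal sketch of the disambiguation idea, assuming the polarization step has already produced a per-pixel normal azimuth known only modulo π, and that the depth map is registered to the same pixel grid. This is a simplified illustration with hypothetical function names, not the researchers' actual algorithm: for each pixel it keeps whichever of the two candidate azimuths is closer to the azimuth implied by the coarse depth.

```python
import numpy as np


def coarse_azimuth(depth: np.ndarray) -> np.ndarray:
    """Azimuth of the surface normal estimated from a coarse depth map.

    Uses finite differences; the (unnormalized) normal is (-dz/dx, -dz/dy, 1).
    Rows are treated as y and columns as x.
    """
    dz_dy, dz_dx = np.gradient(depth)
    return np.arctan2(-dz_dy, -dz_dx)


def wrap_angle(a: np.ndarray) -> np.ndarray:
    """Wrap angles to the interval [-pi, pi]."""
    return np.angle(np.exp(1j * a))


def disambiguate_azimuth(pol_azimuth: np.ndarray, coarse_az: np.ndarray) -> np.ndarray:
    """Resolve the pi ambiguity of a polarization-derived azimuth.

    pol_azimuth is only known modulo pi, so both pol_azimuth and pol_azimuth + pi
    are candidates; keep whichever is closer to the coarse depth-sensor azimuth.
    """
    flipped = pol_azimuth + np.pi
    dist_keep = np.abs(wrap_angle(pol_azimuth - coarse_az))
    dist_flip = np.abs(wrap_angle(flipped - coarse_az))
    return wrap_angle(np.where(dist_keep <= dist_flip, pol_azimuth, flipped))


# Usage: a plane rising along x has normals tilted toward -x (azimuth pi),
# so a polarization azimuth of 0 (ambiguous with pi) should be flipped.
x = np.arange(10, dtype=float)
depth = np.tile(0.5 * x, (10, 1))
resolved = disambiguate_azimuth(np.zeros_like(depth), coarse_azimuth(depth))
```

The coarse depth only has to be good enough to pick the right half-plane; the fine orientation still comes from the polarization data.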

The processing isn’t real-time yet, although the team thinks they can readily achieve 30 Hz scans. In addition to the obvious 3D printing applications, the team hopes their technique, Polarized 3D, will also have an impact on autonomous cars and robots.

We’ve seen plenty of Kinect scanners over the past few years. However, the polarization technique looks easy to implement and offers the promise of greatly increased resolution.

Now that is what I call a hack! It takes scanning output from voxels to Bézier-like surfaces.

I think it combines them, like reverse Phong shading. The gross measurement gives an average depth at each voxel, and the polarization gives the local slope. Combine and smooth, and it comes pretty close.
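The "coarse depth plus local slope, then combine and smooth" idea described above can be sketched as a small least-squares problem. This 1D Python sketch is a generic illustration of that idea, not the MIT team's algorithm; the weighting scheme, the `weight` value, the function name, and the synthetic sine-wave data are all assumptions.

```python
import numpy as np


def fuse_depth_and_slope(coarse_depth, slopes, dx=1.0, weight=0.01):
    """Least-squares fusion of a coarse depth profile with fine local slopes (1D).

    Minimizes  weight * sum_i (z_i - d_i)^2 + sum_i (z_{i+1} - z_i - slope_i * dx)^2,
    where d has N samples and slopes has N - 1 (one per interval). A small weight
    lets the slopes supply the fine detail while the coarse depth pins down the
    overall offset and low-frequency shape.
    """
    d = np.asarray(coarse_depth, dtype=float)
    g = np.asarray(slopes, dtype=float) * dx
    n = d.size
    # Forward-difference operator: (D @ z)_i = z_{i+1} - z_i
    D = np.zeros((n - 1, n))
    rows = np.arange(n - 1)
    D[rows, rows] = -1.0
    D[rows, rows + 1] = 1.0
    A = np.vstack([np.sqrt(weight) * np.eye(n), D])
    b = np.concatenate([np.sqrt(weight) * d, g])
    z, *_ = np.linalg.lstsq(A, b, rcond=None)
    return z


# Usage with synthetic data: a noisy coarse profile plus ideal local slopes.
x = np.linspace(0.0, 2.0 * np.pi, 200)
true_z = np.sin(x)
rng = np.random.default_rng(0)
coarse = true_z + rng.normal(scale=0.1, size=x.size)  # stand-in for coarse depth
slopes = np.diff(true_z) / np.diff(x)                  # stand-in for polarization slopes
fused = fuse_depth_and_slope(coarse, slopes, dx=x[1] - x[0])
rms_coarse = np.sqrt(np.mean((coarse - true_z) ** 2))
rms_fused = np.sqrt(np.mean((fused - true_z) ** 2))
print(f"RMS error: coarse {rms_coarse:.4f}, fused {rms_fused:.4f}")
```

A real system would work on 2D surfaces and weight each term per pixel; the 1D version only shows why trusting the slopes for detail and the depth for the overall offset reduces the error.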

I am impressed.

300 microns makes it sound great until you realize that is 0.3 mm. Good, but still not great, at least in my opinion.

Not great for bearings and other tight-tolerance parts, but for many, many things it is great.

I read this: “But with the addition of the polarization information, the researchers’ system could resolve features in the range of tens of micrometers, or one-thousandth the size.” That’s better than 300 micrometers. Where did that number come from?

I actually graduated on this exact topic two years ago, so I’d like to take some time to point out some of the issues with how this is presented. My thesis was never published; otherwise this could have been seen as plagiarism (I’m going to write it off as two people having the same idea without ever being in contact).

The 300-micron resolution has nothing to do with the chosen method (shape from polarisation) and everything to do with the chosen hardware (a high-resolution camera). If you had combined the Kinect with a standard structured-light method, such as the standard three-image phase shift, you would get the same resolution, but without any moving parts (which this method requires!).
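For comparison, the three-image phase-shift method mentioned above recovers a per-pixel phase from three projected sinusoidal fringe patterns. A minimal Python sketch, assuming phase shifts of -2π/3, 0 and +2π/3, with a hypothetical function name; it ignores phase unwrapping and the projector-camera calibration needed to turn phase into depth.

```python
import numpy as np


def three_step_phase(i1, i2, i3):
    """Wrapped phase from three fringe images with phase shifts -2pi/3, 0, +2pi/3.

    Model: I_k = A + B * cos(phi + delta_k). The background A cancels in the
    differences and the modulation B cancels in the ratio, leaving phi in
    [-pi, pi] (phase unwrapping is not handled here).
    """
    i1, i2, i3 = (np.asarray(im, dtype=float) for im in (i1, i2, i3))
    return np.arctan2(np.sqrt(3.0) * (i1 - i3), 2.0 * i2 - i1 - i3)


# Usage with synthetic fringes: recover a known phase ramp.
phi = np.linspace(-3.0, 3.0, 50)
background, modulation = 0.5, 0.4
images = [background + modulation * np.cos(phi + d)
          for d in (-2 * np.pi / 3, 0.0, 2 * np.pi / 3)]
recovered = three_step_phase(*images)
```

The projector replaces the rotating filter: only the projected pattern changes between shots, so nothing in the optics has to move.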

It’s a shame that MIT’s marketing department turned a pretty decent paper into “It increased scanning resolution 1000 times!”, which is only true because they compare a Kinect with a much higher-resolution camera.

This idea isn’t nearly as interesting as you might think. The Fuel3D, for instance, uses a similar train of thought. They use shape from shading (SFS) as their main scan method, but just like shape from polarization (SFP) it only gives a surface normal (polarization is actually worse, as it gives two possible solutions). They use the depth from ‘standard’ two-camera stereo as a scaffold and combine it with the more accurate SFS data to improve the results.
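The shading-based approach is described above as shape from shading; a common multi-light variant of that idea is Lambertian photometric stereo, which, like shape from polarization, yields a surface normal rather than a depth. The sketch below assumes a matte (Lambertian) surface, three known light directions, and no shadows, and uses a hypothetical function name; it illustrates the general technique, not Fuel3D's actual pipeline.

```python
import numpy as np


def photometric_stereo_normal(intensities, light_dirs):
    """Recover albedo and unit surface normal at one pixel (Lambertian model).

    Each image k obeys I_k = albedo * dot(l_k, n). Stacking the unit light
    directions l_k as rows of L gives L @ g = I with g = albedo * n.
    """
    L = np.asarray(light_dirs, dtype=float)
    I = np.asarray(intensities, dtype=float)
    g, *_ = np.linalg.lstsq(L, I, rcond=None)
    albedo = np.linalg.norm(g)
    return albedo, g / albedo


# Usage with synthetic data: three lights, a tilted normal, albedo 0.7.
lights = np.array([[0.0, 0.0, 1.0], [0.5, 0.0, 0.866], [0.0, 0.5, 0.866]])
lights /= np.linalg.norm(lights, axis=1, keepdims=True)
true_n = np.array([0.2, -0.1, 1.0])
true_n /= np.linalg.norm(true_n)
measured = 0.7 * lights @ true_n  # no shadows: every dot product is positive
albedo, normal = photometric_stereo_normal(measured, lights)
```

The output is an orientation per pixel, not a depth, which is why such methods need a coarse depth "scaffold" to integrate against.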

How do you graduate without a published thesis? Copying ideas isn’t plagiarism. Stealing the text of your thesis is plagiarism.

Publishing a thesis isn’t a hard requirement at a lot of universities. If you do an internship with a company, you can even have it kept confidential (i.e., it’s never made public). It might not be exactly plagiarism, but if my work had been published, it would remove pretty much all of the academic contribution of this paper.

Would you care to post your paper? The MIT group open-sourced theirs.

[Al Williams] says a DSLR was used, and you say a “much higher resolution camera” was used.

But the source article says nothing was used but a Kinect and a polarizing lens:

“The researchers’ experimental setup consisted of a Microsoft Kinect — which gauges depth using reflection time — with an ordinary polarizing photographic lens placed in front of its camera. In each experiment, the researchers took three photos of an object, rotating the polarizing filter each time, and their algorithms compared the light intensities of the resulting images.”
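The quoted procedure (three photos with the filter rotated between shots, then comparing intensities) amounts to fitting the sinusoid that an ideal linear polarizer produces. A minimal Python sketch, assuming filter angles of 0°, 45° and 90° (the article does not say which angles were used), an ideal polarizer, and a hypothetical function name:

```python
import numpy as np


def linear_polarization_from_three(i0, i45, i90):
    """Estimate linear polarization from images taken behind a polarizer at 0, 45, 90 deg.

    An ideal linear polarizer at angle t transmits
        I(t) = 0.5 * (S0 + S1*cos(2t) + S2*sin(2t)),
    where S0, S1, S2 are the linear Stokes parameters. Three angles are enough
    to solve for all three. Returns (S0, degree of linear polarization, angle in rad).
    """
    i0, i45, i90 = (np.asarray(im, dtype=float) for im in (i0, i45, i90))
    s0 = i0 + i90
    s1 = i0 - i90
    s2 = 2.0 * i45 - s0
    dolp = np.sqrt(s1 ** 2 + s2 ** 2) / s0  # undefined where s0 == 0 (black pixels)
    aolp = 0.5 * np.arctan2(s2, s1)         # only defined modulo pi
    return s0, dolp, aolp


def transmitted(t, s0, s1, s2):
    """Intensity behind an ideal linear polarizer at angle t (radians)."""
    return 0.5 * (s0 + s1 * np.cos(2 * t) + s2 * np.sin(2 * t))


# Usage with synthetic light: intensity 2.0, 30% polarized at an angle of 0.4 rad.
true_s0, true_dolp, true_aolp = 2.0, 0.3, 0.4
true_s1 = true_s0 * true_dolp * np.cos(2 * true_aolp)
true_s2 = true_s0 * true_dolp * np.sin(2 * true_aolp)
shots = [transmitted(t, true_s0, true_s1, true_s2) for t in (0.0, np.pi / 4, np.pi / 2)]
s0, dolp, aolp = linear_polarization_from_three(*shots)
```

The recovered angle is only known modulo π, which is one source of the ambiguity described in the article.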

And you say this method requires moving parts. It’s true the test setup did, but that’s solvable in a commercial product:

“A mechanically rotated polarization filter would probably be impractical in a cellphone camera, but grids of tiny polarization filters that can overlay individual pixels in a light sensor are commercially available. Capturing three pixels’ worth of light for each image pixel would reduce a cellphone camera’s resolution, but no more than the color filters that existing cameras already use.”

So what’s the real story? It sure looks like the only misrepresentation on the part of MIT would be if what [Paul] says below is correct, about only certain materials displaying detectable polarization.

The polarising lens was on the DSLR, not the Kinect; it is in the picture of the setup. Also, this is not a new thing. The linked article is poorly worded in a number of ways.

This is why you never look at the articles but at the actual paper. The article is weirdly worded and full of glaring mistakes.

Furthermore, using 3 pixels with polarizing filters would significantly complicate the algorithm, as the data you’d get would be slightly translated with respect to each other. You would need quite a bit of extra processing to fix this. I find this a very naive statement for computer vision scientists to make; they should know that this is a non-trivial problem.
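The misalignment described above is easy to see with a 2x2 per-pixel polarizer mosaic: each filter orientation is sampled at a different pixel position, so the sub-images are offset from one another. The sketch below assumes a hypothetical [[90°, 45°], [135°, 0°]] layout and uses simple bilinear interpolation to resample every channel to the center of its 2x2 block; the layout and function names are assumptions, and more careful demosaicing would likely be needed in practice.

```python
import numpy as np

# Hypothetical 2x2 layout: (row, col) offset within each block for each filter
# angle in degrees. Real sensors differ; check the sensor datasheet.
LAYOUT = {90: (0, 0), 45: (0, 1), 135: (1, 0), 0: (1, 1)}


def shift_axis(img, s, axis):
    """Resample img at position (index + s) along one axis, for |s| < 1.

    Linear interpolation between each sample and its neighbor, with the
    neighbor index clamped at the image border.
    """
    n = img.shape[axis]
    step = 1 if s >= 0 else -1
    neighbor = np.take(img, np.clip(np.arange(n) + step, 0, n - 1), axis=axis)
    w = abs(s)
    return (1.0 - w) * img + w * neighbor


def aligned_channels(raw):
    """Split a polarization mosaic and resample every channel to block centers.

    In the full-resolution grid, channel (r, c) samples pixel (2i + r, 2j + c),
    while the block center is (2i + 0.5, 2j + 0.5). In the channel's own
    half-resolution grid that is an offset of (0.5 - r) / 2 and (0.5 - c) / 2.
    """
    raw = np.asarray(raw, dtype=float)
    out = {}
    for angle, (r, c) in LAYOUT.items():
        ch = raw[r::2, c::2]
        ch = shift_axis(ch, (0.5 - r) / 2.0, axis=0)
        ch = shift_axis(ch, (0.5 - c) / 2.0, axis=1)
        out[angle] = ch
    return out


# Usage: a horizontal intensity ramp. Without alignment, channels from different
# columns of a block report different values; after alignment they agree
# (away from the clamped borders).
raw = np.tile(np.arange(8, dtype=float), (8, 1))
channels = aligned_channels(raw)
```

Even this simple correction only works for smooth image regions; at sharp edges the interpolated channels can still disagree, which supports the point that this is a non-trivial problem.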

Interesting exploit. So, the technique requires the object to be very cooperative: In order to produce a detectable polarization, the object must be a smooth, polished dielectric material within a certain range of angles from a well-positioned light source. Anything metallic won’t work. Textiles won’t work. Skin won’t work. Carefully-positioned injection-molded plastic tchotchkes, now they’ll work.

More fantastically-spun, academically interesting stuff from the Media Lab. I am reminded yet again of the (sadly somewhat dated) commentary at http://philip.greenspun.com/humor/media-lab

this is fantastic!

If this works, why isn’t there new software for the Kinect by now? I want to use my Kinect setup at higher resolution.