Machines running out of control are one of the staples of comedy. For the classic expression, see Chaplin’s “Modern Times”. So while it starts out merely impressive that [Denver Finn]’s robotic fingers can play an iPad piano video game, it ends up actually hilarious. Check out the linked video to see what we mean.

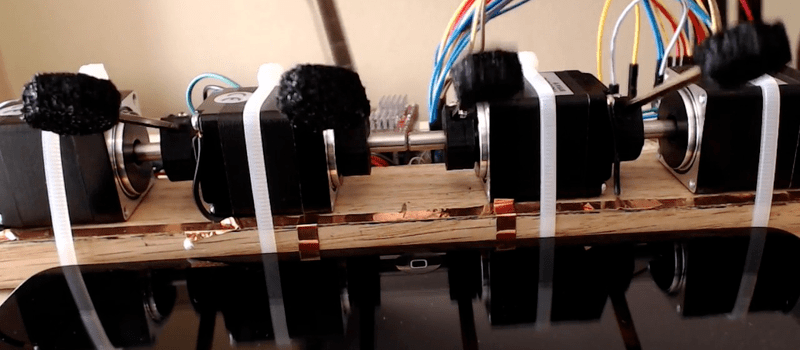

Details on the bot are scarce, but it’s not all that hard to see how it works. Four large servo motors have conductive-foam pads on the end of sticks that trigger hits on the iPad. Motor drivers and some kind of microprocessor (is that a Teensy?) drive them. An overhead iPhone serves as a camera and must be doing some kind of image processing. [Denver Finn], if you’re out there, we could use some more details on that part.

The narrator makes a point of mentioning the phone camera’s speed, one of its chief marketing gimmicks, so we suspect that we’re being virally marketed to. (Or maybe we’ve just become too jaded.) That said, it’s still cool.

We’ve seen robots playing video games before, of course, more than a few times. But this one is the first that we can recall that makes use of a machine’s extraordinary dexterity, and that’s pretty cool.

Thanks [MakerBro] for the tip!

incredible ! :D

I was playing with this idea a month ago. Used foil pieces/coins and cutouts for photo detection using an all-analog “dark? switch to ground” method. Works for multiple presses, no iPhone, no MCU, and will “hold” down the appropriate keys. Just needs a fast enough dark detection method; I had some very responsive photo detectors. This wins for showmanship for sure, since foil and wires on a screen aren’t much to look at. I don’t know what the limit is on this kind of setup. Might want to move the arms closer to the screen. At 120 fps with the iPhone, I wonder how long it will be before the total delay (spotting a tile moving across the screen, sending the signal to the motor, and having the game register the touch) becomes an issue.

Those motors look more like steppers to me, not servos, especially considering the stepper-driver-like PCBs in the background.

Yeah, I’m puzzled at the use of steppers instead of say… solenoids. I would have thought that steppers would not have the response times necessary for this but he got it working. Can’t argue with success– actually, sometimes you can. Nicely done.

Very cool

They’re so totally stepper motors…

Ok, first – it’s not a marketing gimmick.. you’re totally jaded :) I built this after my 7 year old daughter showed me Piano Tiles and I figured I could beat it with a robot.

There’s an iPhone 6+ overhead that does image processing – this runs a custom app using the GPU based on Apple’s RosyWriter sample code so it can handle frames at 120 fps. This allows it to sample faster than the 60 Hz refresh rate of the iPad screen.

It detects the speed of the oncoming tiles and looks ahead based on this to be able to get ahead of the communication delay and fastest swing time of the motors.
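
To make the look-ahead idea concrete, here is a minimal Python sketch. It is not the actual app code: the function names, pixel units, and the example delay values are all assumptions for illustration. It estimates tile speed from two consecutive frames, then works out how far ahead of the hit line a tile has to be spotted to cover the communication delay plus the fastest swing.

```python
def tile_speed(y_prev, y_curr, frame_dt):
    """Tile speed in pixels per second, from its position in two consecutive frames."""
    return (y_curr - y_prev) / frame_dt


def lookahead_distance(speed, comm_delay, swing_time):
    """Distance (pixels) before the hit line at which a tile must be spotted."""
    return speed * (comm_delay + swing_time)


def time_to_hit(y_curr, hit_line_y, speed):
    """Seconds until the tile reaches the hit line, or None if it is not approaching."""
    if speed <= 0:
        return None
    return (hit_line_y - y_curr) / speed


# Hypothetical numbers: a tile moved 10 px between two frames captured at 120 fps.
speed = tile_speed(100, 110, 1 / 120)
# Assumed 10 ms of communication delay and 40 ms for the fastest swing.
margin = lookahead_distance(speed, comm_delay=0.010, swing_time=0.040)
```

As the tiles speed up, the margin grows in proportion, which is why the detection point has to move further up the screen.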

When it detects a tile on a frame change, it sends a MIDI note for that key with the time to hit encoded in the velocity to a Teensy 3.2 (via a Lightning USB camera cable).
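
One way the time-to-hit could be packed into a note-on message is sketched below in Python. The base note, the 2 ms step size, and the function names are assumptions, not details from the build. The only fixed facts are from MIDI itself: a note-on is a status byte `0x90` plus channel, followed by a 7-bit note and a 7-bit velocity, and a note-on with velocity 0 is conventionally treated as a note-off, so the sketch clamps to 1..127.

```python
NOTE_ON = 0x90      # MIDI note-on status byte (channel goes in the low nibble)
BASE_NOTE = 60      # assumption: the leftmost key maps to MIDI note 60
MS_PER_STEP = 2     # assumption: each velocity step represents 2 ms


def encode_hit(key_index, time_to_hit_s, channel=0):
    """Build a 3-byte note-on with the time to hit stored in the velocity byte."""
    steps = round(time_to_hit_s * 1000 / MS_PER_STEP)
    velocity = max(1, min(127, steps))  # velocity 0 would read as a note-off
    return bytes([NOTE_ON | channel, BASE_NOTE + key_index, velocity])


def decode_hit(message):
    """Recover (key_index, time_to_hit_s) on the receiving side."""
    _status, note, velocity = message
    return note - BASE_NOTE, velocity * MS_PER_STEP / 1000
```

With these assumed constants the encodable range is 2 ms to 254 ms, so the step size trades timing resolution against how far ahead a hit can be scheduled.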

The Teensy monitors and queues the MIDI messages, assigning each hit/release a specific deadline minus a static offset for the communication delay. For each command it determines the acceleration needed to reach the goal in that time, and drives steppers accordingly. The steppers have some 3D printed collars on their shafts that hold brass bars with conductive foam pads to hit the iPad. They are all attached to a strip of copper tape that runs under the iPad to increase the capacitive coupling.
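
The deadline and acceleration step can be sketched as follows. This is Python rather than Teensy firmware, and it assumes the simplest motion profile: constant acceleration over the whole move, so that distance = v0·t + ½·a·t². The real firmware may well use a different profile; the names and units here are hypothetical.

```python
def deadline(received_at_s, time_to_hit_s, comm_offset_s):
    """When the pad should land: requested time minus a static communication offset."""
    return received_at_s + time_to_hit_s - comm_offset_s


def required_accel(distance_steps, time_s, v0=0.0):
    """Constant acceleration (steps/s^2) that covers distance_steps in time_s.

    Solves distance = v0*t + 0.5*a*t^2 for a. Assumes one constant-acceleration
    segment, so the arm is still speeding up when it reaches the screen.
    """
    if time_s <= 0:
        raise ValueError("deadline has already passed")
    return 2.0 * (distance_steps - v0 * time_s) / time_s ** 2


# Hypothetical move: 100 steps to the screen, 0.1 s until the deadline, from rest.
accel = required_accel(100, 0.1)
```

Because the required acceleration scales with 1/t², halving the available time quadruples the demand on the motor, which is why looking further ahead pays off.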

Great work, This is amazing!!!

Thanks so much!

Awesome! Thanks for the reply. (Figures you’d be a Hackaday reader…)

I saw the line where you detect the black squares moving forward as it gets faster and faster. That’s a very interesting comment (below) about the touch-sensor’s maximum frequency and the screen’s refresh rate. That makes it likely that there’s actually a maximum score for this sort of game, huh?

Also really like this guy’s build: https://www.youtube.com/watch?v=8hlQ0MiowN8 He does it with photocells and relays that ground/float coins, which is pretty clever. But it’s not half as cool to watch it run. The physical thwacking is brilliant.

Thanks again for stopping by! Don’t be a stranger.

No problem, thanks for the write up! Yeah, admittedly my primary design goal was to make it look cool :) I really liked the idea of making it at first look slow and only just able to hit the tiles but then turn out to be unstoppable. At one point I had the extra fingers move up slightly in a certain shape when they weren’t the ones tapping so the movement looked more human, but it would have complicated it a bit at higher speeds. I was focused on the timing so rather than setting a speed threshold for it I just removed it.

I didn’t use solenoids or LDRs because I wanted it to work more humanly and anticipate the tiles, using minimum acceleration to get there. I tested the steppers to a hit rate of 60 Hz (imagine that!), so that isn’t really the limiting factor.

One of the biggest limitations is the LCD persistence: even sampling the screen at 120 Hz with a sub-1/120 s shutter, everything tends to blur together. The screen also doesn’t update all at once because the iPad GPU does tile-based deferred rendering.

Lastly, the iPad samples touches at 60 Hz. As you approach 30 Hz (the Nyquist frequency), you get increasingly worse aliasing when your touches don’t line up with the sample times of the iPad touch hardware. Basically the same effect as when you record video of an old CRT and see the black bars move slowly across the screen.
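
A toy Python model shows the sampling limit. It assumes ideal point sampling at 60 Hz and a finger that is down for half of each tap period. Real touch controllers filter and debounce, so treat this as an illustration only. Time is counted in integer ticks of 1/3600 s to keep the arithmetic exact.

```python
TICKS_PER_SECOND = 3600                  # integer time base, so 60 Hz divides evenly
SAMPLE_PERIOD = TICKS_PER_SECOND // 60   # assumed 60 Hz touch sampling


def registered_taps(tap_rate_hz, n_taps, phase_ticks=0):
    """Count taps an ideal 60 Hz sampler would see as separate touches.

    tap_rate_hz must divide 3600 evenly. The finger is assumed to be down
    for the first half of every tap period.
    """
    period = TICKS_PER_SECOND // tap_rate_hz
    down_ticks = period // 2
    end = phase_ticks + n_taps * period
    count = 0
    was_down = False
    for t in range(0, end, SAMPLE_PERIOD):
        is_down = t >= phase_ticks and (t - phase_ticks) % period < down_ticks
        if is_down and not was_down:   # a new touch begins on a rising edge
            count += 1
        was_down = is_down
    return count


slow = registered_taps(20, 10)   # below the 30 Hz Nyquist limit
fast = registered_taps(40, 10)   # above it: some taps merge or fall between samples
```

In this model every tap at 20 Hz is seen, while at 40 Hz neighbouring taps start to merge into one touch or slip between samples entirely.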

LDRs are slow, but phototransistors should work. You could place them at the top of the tablet so there is a bit of time to process the signal and send an impulse to one of the steppers. Because the speed of the tiles is increasing it must be measured, maybe one row with 2 phototransistors and some simple math?

I think one issue with phototransistors would be that you couldn’t tell where the tile is at the moment it’s detected. It might have just reached the sensor, or be just leaving it when the speed gets really fast. You also wouldn’t be able to differentiate between blurring due to the LCD ghosting and the blue hold sections. With the camera I can detect each frame change on the iPad and the tile position, and time the hits based on that. The timing requirements of the blue hold sections are also slightly different from those of the regular black tiles, and become a problem at higher speeds.

Mainly, I wanted to make it as separate from the iPad as possible, and make the movement natural. That would exclude wires/detectors across the front.

If I didn’t care about any of that, barring jailbreaking I’d probably use AirPlay to stream the video for analysis, skip the motors, leave the pads on the screen, and switch the capacitance in and out with half bridges (which I did test and found to work).

For detecting the edges of the tiles the sampling must be quite fast. Possibly it wouldn’t work; I didn’t think about the blurring. Oh, and I forgot about the blue hold sections too… (maybe it’s possible to distinguish them from the tiles by reading an analog value from the phototransistors?).

Each frame is 1/60 second, and the LCD persistence causes around 3 frames to blur together.. so at a 60 tile/s rate, that would look like one 3 tile long gray snake jumping forward by one tile every 1/60 second.
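
The arithmetic behind that picture, as a small Python sketch. It assumes each frame’s image lingers at full strength for a whole number of refresh periods (a simplification of real LCD persistence); the function name is made up for illustration.

```python
def smear_length_tiles(tile_rate_hz, refresh_hz=60, persistence_frames=3):
    """Apparent length of one tile, in tile heights, once ghost frames overlap.

    The tile itself is 1 tile long; each extra lingering frame adds the
    distance the tile advanced in one refresh period.
    """
    advance_per_frame = tile_rate_hz / refresh_hz
    return 1 + (persistence_frames - 1) * advance_per_frame


snake = smear_length_tiles(60)   # 3.0: the three-tile-long gray snake
```

At half that rate the same model gives a smear of 2 tiles, so the blur shrinks but never drops below a single tile length.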

Thanks for explaining background and considerations on hardware in this project.

Also cool project! Made me and the wife laugh.

Sure, glad you enjoyed it!

Same here, Koen took the words right out of my mouth (except I have no wife :) ) Thank you for sharing the many small aspects of this work.

Now Rimsky-Korsakov’s Flight of the Bumblebee is too easy, and it would be fairer for your mechanical player to challenge proper mechanical music, and next update of the game should upgrade its playlist with some random Conlon Nancarrow piece :) :

https://www.youtube.com/watch?v=f2gVhBxwRqg&t=34s

Since Piano Tiles are in fact piano rolls for humans :) , this has quite a few similarities with another project previously reported on Hackaday : http://hackaday.com/2013/01/07/reading-piano-rolls-without-a-player-piano/

I like your iPhone used as a “high-performance video analysis MIDI controller” (with built-in monitoring screen and all in one ultra slim form factor!), but based on your experience of the requirements for this project, and software practicality aside, do you think it would be possible to achieve almost the same results with OpenCV on a Raspberry Pi + camera module v2 with a video feed limited to 640×480 at 90 fps, as a low-cost equivalent solution?

What do you think is the upper limit without tomfoolery involving altering the iPad in some way?

Simply and purely genius. Thanks for sharing!