Some tools in a toolbox are versatile. You can use a screwdriver as a pry bar to open a paint can, for example. I’ve even hammered a tack in with a screwdriver handle, even though you probably shouldn’t. But a chainsaw isn’t that versatile. It only cuts. But man, does it cut!

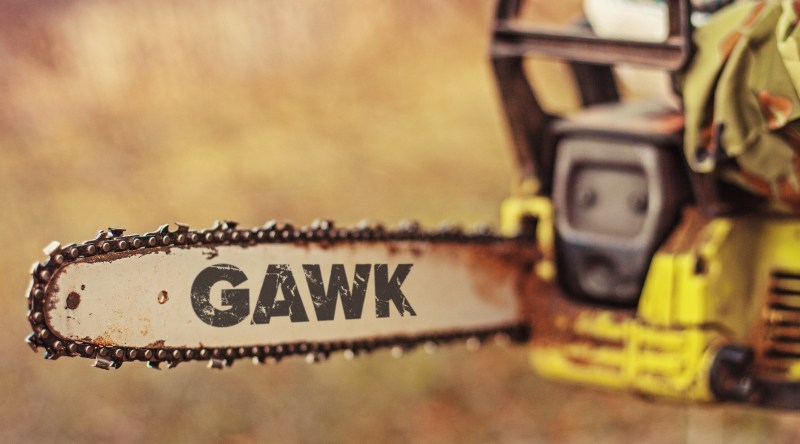

AWK is a chainsaw for processing text files line by line (the GNU version is known as GAWK). That’s a pretty common job, and even more so if you export text files from a spreadsheet or work with other kinds of text files. AWK has some serious limitations, but so do chainsaws, and they are still super useful. AWK sounds like the name of a penguin-like bird (see right), but that’s an auk: same sound, different spelling. AWK is actually an acronym of the original authors’ names (Aho, Weinberger, and Kernighan).

If you know C and you grok regular expressions, then you can learn AWK in about 5 minutes. If you only know C, go read up on regular expressions and come back. Five minutes later you will know AWK. If you are running Linux, you probably already have GAWK installed and can run it using the alias awk. If you are running Windows, you might consider installing Cygwin, although there are pure Windows versions available. If you just want to play in a browser, try webawk.

AWK Processing Loop

Every AWK program has an invisible main() built into it. In quasi-C code it looks like this:

int main(int argc, char *argv[]) {
    process(BEGIN);
    for (i=1;i<argc;i++) {
        FILENAME=argv[i];
        for each line in FILENAME {
            process(line);
        }
    }
    process(END);
}

In other words, AWK reads each file named on its command line and processes each line it reads from them (with no file names, it reads standard input instead). A line is a bunch of characters that ends with whatever is in the RS variable (usually a newline; RS = Record Separator). Before it starts and after it is done, it does processing for BEGIN and END (not exactly lines, as you’ll see in a second).
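If you’d like to watch that loop run, here’s a small sketch for a POSIX shell with any awk on the path. The two sample files and the loop_demo helper are made up for the demo; FILENAME is the same built-in variable as in the quasi-C code.

```shell
# Two tiny sample files; the names and contents are made up for this demo.
dir=$(mktemp -d)
printf 'apple\nbanana\n' > "$dir/one.txt"
printf 'cherry\n' > "$dir/two.txt"

# loop_demo (a made-up helper) runs one awk program over both files in order.
loop_demo() {
  (cd "$dir" && awk '
    BEGIN { print "BEGIN runs before any file is read" }
          { print FILENAME ": " $0 }    # once for every line of every file
    END   { print "END runs after the last line" }
  ' one.txt two.txt)
}

loop_demo
```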

Line Processing

Big deal, right? The trick is in the line processing. Here’s what AWK does:

- It puts the whole line in a special variable called $0.
- It splits the line into fields: $1, $2, $3, etc.
- The variable FS sets the regular expression used for splitting. The default is any run of whitespace, but you can set it to commas, commas and spaces, or any other pattern. (To treat blank lines as the boundary between records instead, set RS to an empty string.)
- The variable NF holds the number of fields in the current line.
- The line number within the current file is in FNR, and the line number overall is in NR.
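Here’s a rough sketch that prints those variables for two tiny comma-separated files (the file names, their contents, and the field_demo helper are all invented for illustration). The -F, option is the usual command-line way to set FS. Notice that FNR starts over at 1 for the second file while NR keeps counting.

```shell
# Sample data is invented; -F, sets FS to a comma for this run.
dir=$(mktemp -d)
printf 'red,green,blue\ncyan,magenta\n' > "$dir/a.csv"
printf 'black\n' > "$dir/b.csv"

# field_demo is a made-up helper name.
field_demo() {
  (cd "$dir" && awk -F, '{
    # $1 is the first field, $NF the last; commas in print insert OFS (a space by default)
    print FILENAME, "FNR=" FNR, "NR=" NR, "NF=" NF, $1, $NF
  }' a.csv b.csv)
}

field_demo
```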

Now the AWK script is what handles the processing. Comments start with the # character and run to the end of the line. Each rule is a pattern (the match condition) followed by code in braces; think of the pattern as an implied if statement. If the expression is true, the code associated with it executes. The BEGIN pattern is true before any input has been read, and END is true after everything else is done. If there is no pattern, the code always executes.

Example:

BEGIN {   # brace has to be on this line!
    count=0
}
{
    count++
}
END {
    print count " " NR
}

You can put semicolons at the ends of statements like you do in C if it makes you feel better. You can use parentheses too (like print(…)). What does the example mean? At the start, it sets count=0. Variables spring into existence as you use them and start out empty, and an empty variable acts as zero when used like a number, so we don’t really need this line. There are no declared types: everything is a string that can act as a number when needed.

The brace block in the middle has no pattern, so it runs for every line and increases count by 1. Then at the end, we print the count variable and NR, which should match. When you write two strings (or string variables) next to each other in AWK, they are concatenated into one string. So that is really just printing one string made up of count, a space, and NR.
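To try the example yourself, you can paste something like this into a shell. The count_demo and types_demo names are made up; the second function is an extra sketch of one value acting as a number in one place and a string in another, and of an unset variable acting as 0 or as an empty string.

```shell
# count_demo (a made-up name) wraps the example above.
count_demo() {
  awk '
    BEGIN { count = 0 }           # optional: unset variables act like 0
          { count++ }             # no pattern, so it runs for every line
    END   { print count " " NR }  # adjacent strings are concatenated
  '
}
printf 'one\ntwo\nthree\n' | count_demo    # prints: 3 3

# types_demo (also made up): "10" used as a number, then as a string.
types_demo() {
  awk 'BEGIN { x = "10"; print x + 5, x 5, y + 0, "[" y "]" }'
}
types_demo                                 # prints: 15 105 0 []
```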

Something Practical

Here’s something more interesting:

BEGIN {   # brace has to be on this line!
    count=0
}
{
    count++   # runs for every line, so count tracks the line number
}
count==10 {
    print "The first word on line 10 is " $1
}

This prints the first word ($1) of the 10th line of input. By the way, if you don’t give print any arguments, it prints $0. Overall, this still isn’t too exciting. A condition like $1=="Title:" might be more useful. But the real power is that you can use regular expressions as conditions. For example:
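Here’s a sketch of both kinds of condition, assuming the seq utility is available to generate numbered input lines. The helper names and sample text are hypothetical. The first function keeps its own counter (NR == 10 would do the same job); the second compares the whole first field against a string.

```shell
# line10_demo (a made-up name): seq 12 supplies twelve numbered lines of input.
line10_demo() {
  seq 12 | awk '
    { count++ }    # count lines ourselves; NR == 10 would work too
    count == 10 { print "The first word on line 10 is " $1 }
  '
}

# title_demo (also made up): compare a whole field against a string.
title_demo() {
  printf 'Title: AWK\nSubtitle: a chainsaw\nTitle: Auks\n' | awk '$1 == "Title:" { print }'
}

line10_demo    # prints: The first word on line 10 is 10
title_demo
```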

/[tT]itle:/ {

This line would match any input line that had title: or Title: in it.

/^[ \t]*[tT]itle:/ {

This matches the same thing, but only at the start of a line, optionally preceded by spaces or tabs.

You can match fields too:

$2 ~ /[mM]icrosoft/    # match Microsoft
$2 !~ /Linux[0-9]/     # must NOT match Linux0 Linux1 Linux2 etc.
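A small sketch of these patterns against invented sample rows (the helper names are made up too). Note that ~ and !~ look for the regular expression anywhere inside the field, while ^ anchors it to the start of the line.

```shell
# Sample rows are invented: an ID, then a name.
rows='1 Microsoft
2 microsoft
3 Linux3
4 Linux'

# Field 2 contains Microsoft or microsoft.
ms_ids() { printf '%s\n' "$rows" | awk '$2 ~ /[mM]icrosoft/ { print $1 }'; }

# Field 2 does NOT contain "Linux" followed by a digit.
not_linuxn() { printf '%s\n' "$rows" | awk '$2 !~ /Linux[0-9]/ { print $1 }'; }

# Lines that start with optional spaces or tabs, then title: or Title:
title_lines() {
  printf '%s\n' 'Title: one' '  title: two' 'Subtitle: three' |
    awk '/^[ \t]*[tT]itle:/ { print }'
}

ms_ids; not_linuxn; title_lines
```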

Functions

AWK also has built-in functions that work with regular expressions, such as sub, gsub, and match (see the manual), and you can define your own functions. For example:

/dog/ {
    gsub(/dog/,"cat")   # works on $0 by default
    print               # print $0 by default
    next
}
{
    print
}

This transforms lines that have dog in them to read cat (including converting dogma to catma). The next keyword makes AWK stop processing the line and go to the next line of the input file. You could make this script shorter by omitting both the first print and the next. Then the last print will do all the printing.
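Here’s a sketch of the shorter version described above, next to a variant that uses a user-defined function. The names dog_short, dog_func, and dog_to_cat are made up. gsub takes an optional third argument naming the string to edit; without it, gsub works on $0.

```shell
# dog_short: substitute, then let one pattern-less rule do all the printing.
dog_short() {
  awk '
    /dog/ { gsub(/dog/, "cat") }   # edit $0 in place
          { print }                # runs for every line, changed or not
  '
}

# dog_func: the same job using a user-defined function (dog_to_cat is made up).
dog_func() {
  awk '
    function dog_to_cat(s) {       # s is a local copy of the argument
      gsub(/dog/, "cat", s)        # third argument: edit s instead of $0
      return s
    }
    { print dog_to_cat($0) }
  '
}

printf 'hotdog stand\ndogma\nno pets\n' | dog_short
printf 'hotdog stand\ndogma\nno pets\n' | dog_func
```

Both print the same three lines: hotcat stand, catma, and no pets.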

Associative Arrays

The other thing that is super useful about AWK is that it has a little database built into it, disguised as an array. These are associative arrays, and they are just like C arrays with string indexes. Here’s a script to count the number of times each word appears in a text:

{
    for (i=1;i<=NF;i++) {
        gsub(/[-,.;:!@#$%^&*()+=]/,"",$i)   # get rid of most punctuation
        word[$i]=word[$i]+1
    }
}
END {
    for (w in word) {
        print w "-" word[w]
    }
}

Don’t forget you can use numbers as indexes too, so foo[4] works as well (behind the scenes, AWK turns the 4 into the string "4"). You can even mix and match, although you probably shouldn’t: foo[4]=10 and foo["Hello"]=9 are both legal, and now the array has two elements, 4 and "Hello"!
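A quick sketch of the mixed-index array (array_demo is a made-up name). It also shows the (key in array) test, which checks whether an element exists without creating it.

```shell
# array_demo is a made-up name; foo is the array from the paragraph above.
array_demo() {
  awk 'BEGIN {
    foo[4] = 10            # the number 4 is stored as the string "4"
    foo["Hello"] = 9
    n = 0
    for (k in foo) n++     # count the elements; "in" order is unspecified
    # foo["4"] finds the same element as foo[4]; (key in foo) tests membership
    print n, foo["4"], ("Hello" in foo), ("nope" in foo)
  }'
}
array_demo    # prints: 2 10 1 0
```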

Another note: $i and i are two different things! In the first pass of the above for loop, i is equal to 1 and $i is the content for the first field (because i is 1). You often see $NF used, for example. This is not the number of fields. It is the contents of the last field. NF is the number of fields.
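A sketch that prints both sides of the distinction for one sample line (nf_demo is a made-up helper name):

```shell
# nf_demo is a made-up name; the input line is just sample text.
nf_demo() {
  echo 'alpha beta gamma' | awk '{
    print "NF =", NF, "and $NF =", $NF    # count vs. contents of the last field
    for (i = 1; i <= NF; i++)
      print "i =", i, "$i =", $i          # index vs. contents of field i
  }'
}
nf_demo
```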

You can run this script with:

awk -f word.awk

Or, if you are on a Unix-like system, you could start the file with the line #!/bin/awk -f instead and make it executable (adjust the path if your awk lives elsewhere, such as /usr/bin/awk).
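Here’s one way to try word.awk end to end, as a sketch: it writes the script to a temporary directory, feeds it a made-up sample file, and sorts the result, since for (w in word) visits the words in no particular order. The word_demo helper is hypothetical.

```shell
# Write the word-count script from above to a temp file.
dir=$(mktemp -d)
cat > "$dir/word.awk" <<'EOF'
{
    for (i = 1; i <= NF; i++) {
        gsub(/[-,.;:!@#$%^&*()+=]/, "", $i)   # get rid of most punctuation
        word[$i] = word[$i] + 1
    }
}
END {
    for (w in word) {
        print w "-" word[w]
    }
}
EOF

# Made-up sample input.
printf 'the cat, the dog.\nThe end!\n' > "$dir/input.txt"

# Sort because "for (w in word)" has no fixed order; LC_ALL=C makes the order repeatable.
word_demo() { awk -f "$dir/word.awk" "$dir/input.txt" | LC_ALL=C sort; }
word_demo
```

Words are case-sensitive here, so The and the are counted separately; applying tolower($i) before counting would merge them.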

Hacker Use

There are lots of other things you can do with AWK. You can read lines from a file, read variables from the command line, use shell programs to filter input and output, change output formats, and more. AWK also has plenty of C-like built-in functions (even printf, along with sprintf and math functions such as sqrt and sin). You can read the entire manual online, and you’ll find examples everywhere.
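Two of those tricks in a quick sketch: -v passes a variable in from the command line, printf formats output much as in C, and print | "command" sends output through a shell program. The variable name unit, the sample readings, and the helper names are invented for the example.

```shell
# unit is a made-up variable passed in with -v; the readings are sample numbers.
report_demo() {
  printf '3.14159\n2.71828\n' |
    awk -v unit=V '{ printf "%-8s %6.2f %s\n", "reading", $1, unit }'
}

# sorted_demo (made up): print into a shell command, here sort, instead of stdout.
sorted_demo() {
  printf 'pear\napple\nfig\n' | awk '{ print | "sort" }'
}

report_demo
sorted_demo
```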

You might wonder why hardware hackers need AWK. Next time, I’ll show you some practical uses that range from processing logged data and reading Intel hex files to even compiling languages. Meanwhile, maybe you’d like to use crosswords to learn regular expressions.

Photo credit: [Michael Haferkamp] CC BY-SA 3.0

I read the title and thought this would be about gleaning celeb porn (are wrestlers celebrities?) from text files….

I either use a combination of (g)awk/sed/(e)grep on the command line for quick things I want to process or, if it’s something more serious then I just write it in Perl (which has constructs very similar to those three tools). When you’re processing text files, they’re very handy tools to have nearby (either directly on *nix or via Cygwin).

I’ve used them many a time to automatically extract signal lists from internal database files and, with a bit of care, can even generate the useful bits for header files (port declarations in Verilog/VHDL or SDC/UCF/XDC).

Exactly! Perl. End of story. A series of piped awk commands actually pays a load-time overhead much, much greater than Perl. Perl has many command-line flags to automate common cases like looping line by line, splitting each line on delimiters into an array, and printing out the results without even writing code. No one should ever use awk.

It depends on what you’re doing. Not every *NIX system will have PERL, but they most certainly will have Awk. If you are writing some scripts that need to run on all kinds of systems, then Awk is a good tool to have. I use many different tools depending on the job at hand: Awk, grep, BASH, Javascript, Perl, Python, sed,

I use a bevy of MS tools to parse huge text files and get them into a standardized order for data mining. I use DOS find, replace, etc., plus MS Excel and MS Word. In a browser environment you can use JScript regular expressions to parse through text too. I still like building MS DOS Batch Files to attack text data too. I found an easy way to do a text input command in DOS I did not know was there. And no, not that convoluted COPY CON: [data] [F6] [enter] command either. I use it at work to type in the date when I batch-copy my camera’s field photos to my hard drive.

“I found an easy way to do a text input command in DOS I did not know was there.”

Well, whatever you do, don’t share it with us…

>I still like building MS DOS Batch Files to attack text data too.

You must be ill. Seriously, you should go see a doctor.

DOS-Batch is a horrible thing!!

You’re correct but I like quick & dirty solutions…

We can agree it’s dirty, and yeah, if you really know it, it might even be quick. Personally I use Perl for text processing and scripting stuff, often mixed with DOS commands. For personal use (and especially run-once-only scripts) it’s OK and quicker than looking up how to use the convenient Perl module…

For example, when I need to process a list of files in a directory, I often use something like

for my $file (split(/\n/, `dir /b [/s] *.fileext`))
{ …. }

some guy: I guess you noticed, as have many other HaDers, that I’m the “retro” guy. It’s kinda hard to shake the old retro ways. They do come in handy sometimes. When I was into IT back in the post-2000s, I used to know PERL. I did use it for text searching/parsing too. I know there are systems out there that are made for handling huge text files fast. I just let it all go fallow when I changed occupations to what I do now. Obviously it’s not IT work (which I wish it was; I loved IT). Now I am too “retro” and the younger guys/gals have the helm. I still enjoy coding as a hobby and general inventing. I’m starting to just braindump some things I’ve been sitting on at hackaday.io. I know they aren’t high-tech or Arduino-ready, but I like to brainstorm stuff. Might as well share it now rather than later when I can’t…

@sonofthunderboanerges

I was absolutely not criticizing you; on the contrary, hats off to you for understanding DOS batch! As long as it helps you get the job done, almost any tool is right. And this batch stuff even has one advantage over Perl etc.: you don’t have to install anything.

Thanks Al, you can find some more goodies and examples here, https://rosettacode.org/wiki/Category:AWK

That site is a great resource for almost any language intro.

(g)awk was in the top drawer of my toolbox, so to speak, during my grad school years. I had a particularly useful script (that I still use from time to time) called mmmss.awk, which calculated the Min, Max, Mean, Standard deviation, and Sample size from an input stream of numbers (with an option to select different columns in the input). I ran it all the time to get a quick peek at data to make sure that things were going sanely before cranking through any real analysis. Dozens of other useful scripts too.
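For anyone curious, here’s a hypothetical sketch of that kind of script; it is not the commenter’s mmmss.awk, just one assumed way to compute the same five numbers in a single pass. The stats name and the col variable are made up, and it reports the sample standard deviation (dividing by n - 1).

```shell
# stats is a made-up stand-in, not the commenter's actual mmmss.awk.
# Usage: stats [column]   (reads numbers on stdin; column defaults to 1)
stats() {
  awk -v col="${1:-1}" '
    {
      x = $col + 0                       # force a numeric value
      if (n == 0 || x < min) min = x
      if (n == 0 || x > max) max = x
      sum += x; sumsq += x * x; n++
    }
    END {
      if (n == 0) { print "no data"; exit }
      mean = sum / n
      # sample variance (divide by n - 1); 0 when there is only one value
      var = (n > 1) ? (sumsq - sum * sum / n) / (n - 1) : 0
      if (var < 0) var = 0               # guard against rounding noise
      printf "min=%g max=%g mean=%g sd=%.3f n=%d\n", min, max, mean, sqrt(var), n
    }'
}

printf '%s\n' 2 4 4 4 5 5 7 9 | stats 1   # prints: min=2 max=9 mean=5 sd=2.138 n=8
```

For very large or nearly identical values, the one-pass sum-of-squares formula can lose precision; a two-pass version or Welford’s method is more robust.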

I don’t know if this will make sense to everyone, but I always looked at (g)awk as the text-processing equivalent of PLCs (think ladder logic).

Great for cleaning up pinouts copy-pasted from chip data sheets and turning them into schematic symbols in the format of choice for your schematic capture package…

Ahemn… a chainsaw is actually quite versatile :-) Check this video on how to open a bottle: https://www.youtube.com/watch?v=yGAZLZ5h_e4 and this one (01:37) shows how to open about 100 bottles http://www.dr.dk/event/allemod1/video-ole-aabner-sodavand-med-en-motorsav-der-er-damer-i-det#!/

Unix Text Processing is an excellent book, and O’Reilly has released it through their open books project.

http://www.oreilly.com/openbook/utp

This code is wrong

BEGIN {   # brace has to be on this line!
    count=0
}
count==10 {
    print "The first word on line 10 is " $1
}

count is not incremented

You could also use FNR to tell you which record you’re on, as in

FNR == 10 { print "The first word on line 10 is " $1 }