A DLP 3D printer works by shining light into a vat of photosensitive polymer using a Digital Light Processing projector, curing one thin layer of the goo at a time until a solid part has been built up. Generally, the resolution of the print is determined by the resolution of the projector and by the composition of the polymer itself. But a technique posted by Autodesk for their Ember DLP 3D Printer could allow you to essentially anti-alias your print, further increasing the effective resolution.

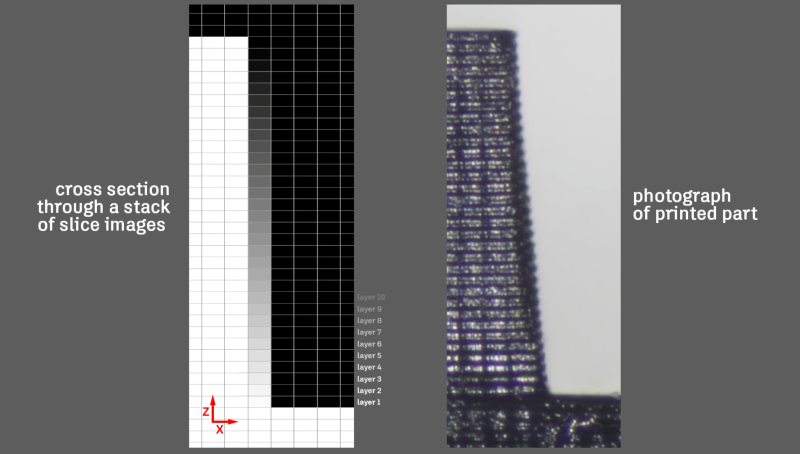

The technique works by using grayscale anti-aliasing on the image projected for each layer. The dimmer gray light results in the polymer taking longer to solidify. Therefore, if it’s used on the pixels along an edge, it can smooth out the print there. It’s similar to how TrueType fonts, and other graphics display techniques, use intermediate shades to visually smooth the edges of fonts and graphics and give the impression of smoother lines.
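To make the idea concrete, here is a minimal sketch of how a grayscale layer image could be generated. It is not Autodesk's slicer code; the function name `antialiased_layer`, the circular test shape, and the 4x4 supersampling grid are all assumptions chosen for illustration. Each pixel's gray level is simply the fraction of its area that falls inside the part's cross-section.

```python
def antialiased_layer(width, height, cx, cy, radius, samples=4):
    """Return a height x width grid of 0-255 gray levels for a filled circle.

    Hypothetical helper: each pixel is split into samples x samples
    sub-samples, and its gray level is the fraction that lands inside
    the circle (classic supersampling anti-aliasing).
    """
    layer = []
    for py in range(height):
        row = []
        for px in range(width):
            inside = 0
            for sy in range(samples):
                for sx in range(samples):
                    # Centre of this sub-sample in pixel coordinates.
                    x = px + (sx + 0.5) / samples
                    y = py + (sy + 0.5) / samples
                    if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2:
                        inside += 1
            row.append(round(255 * inside / (samples * samples)))
        layer.append(row)
    return layer


# An 8x8 pixel layer containing a circle of radius 3 centred at (4, 4).
layer = antialiased_layer(8, 8, 4.0, 4.0, 3.0)
for row in layer:
    print(" ".join(f"{v:3d}" for v in row))
```

Pixels fully inside the circle come out at 255, pixels fully outside at 0, and the ones the edge passes through get an in-between gray. How a given gray level translates into cured resin depends on the printer and the resin, so a real workflow would need calibration on top of this.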

Now, the Autodesk Ember is open source, which is great for the community. And there isn’t any reason this technique couldn’t be used on other DLP 3D printers, even ones you’ve built yourself. As simple as this tweak is, it’s pretty safe to assume it’ll soon become a pretty standard output option.

[via reddit]

Hello Kanon, I must disagree. I work with 3D printers and did not know of this forum. Think of it as synergy.

Cameron, get used to these comments, because this is the price of the previous post, and keep on writing about interesting things.

thanks for this amazing contribution to the internet kanon

If you grayscale pixels on your DLP, won’t the resin there not fully polymerize? My guess is it’d be gooey and need post-processing and UV curing outside of the printer. Which in my opinion would make the subpixel technique a bit redundant.

As far as I know, DLP printers still have the “sticking on the bottom” problem as the major hurdle for speed and accuracy. (That’s why the “¿Carbon?” printer is so fast… according to their marketing team, they seem to have solved that.)

I would imagine the resin closest to the existing solid part starts polymerising first. So greyscaling produces the result you want. Of course you can’t do these sub-pixel thingies just floating in the liquid, there have to be full pixels for it to attach to.

If the resin had 100% absorption of UV light, yes. This is one of those innovations where in retrospect we all get to do a massive facepalm and say “OF COURSE!”

The UV light scatters to a certain degree within the resin. If you look at the edge of their black & white tests you’ll notice that each layer isn’t perfectly vertical – it’s rounded. This is because at the surface the light hits wherever it’s focused. This can be tightly controlled. As you go deeper into the material scattering has a more pronounced effect – some of the light that started traveling a few degrees from vertical is bent toward the outside, partially curing adjacent voxels. Near the bottom of the layer most of the light has already been absorbed reducing this effect.

Trolololol

Carefully conducted experiments to increase your DLP 3D Printer resolution by 50x? Yeah, this belongs here.

this is pretty cool… however the semantics of anything “subpixel” honestly makes my skin crawl. how can there be something smaller than the smallest possible unit?? also good job cameron, dont listen to the trolls….

I guess this is a different usage, but previously, I think subpixel usually meant selective control of the R, G & B elements, such as with Microsoft’s ClearType. But projectors often overlay & mix R, G & B rather than have them side-by-side like they are in a typical display panel.

Pixels aren’t atoms, or Planck lengths (wait for the iPhone 7). They’re divisible into RGB elements, as JRDM mentioned, but also, in this case, into smaller little plastic blobs than the laser can move to, through modulating power. Apparently in this case it works, so, sub-pixel. It’s not actually smaller pixels, since you don’t have full spatial control over where they form.

yeah sorry maybe i need to clarify. in the context of this article it kinda makes sense if you consider the RGB elements, but in general i know a few scientific programs used to measure lengths of digital(ized) objects that claim “subpixel” precision, which doesn’t make sense. you can of course upsample a picture, but still claiming improved precision is just bogus…..

The concept of a pixel is not fixed. A pixel is a unit in a certain physical space. That unit needs to be converted from and to something. For example, a 3D scene needs to be converted to display as physical pixels on a monitor, which then need to be interpreted by eyes. This conversion is where the process of sub-pixels comes in.

Our brain works in much the same way. Our ability to sharply resolve a scene is crap. Our eyes are simply not that good of an instrument. However, because our eyes are subtly moving around, our brain builds up a picture far sharper and more detailed than the individual pixels (our rods and cones) can pick up at any given instant.

This same process is at work here. Take a look at anti-aliasing. Improved precision does not mean sharper. It means more detailed or more accurate or true to the original trying to be produced. What is more “precise”? A pixel sharp picture of a circle rendered as a square due to bad aliasing? Or a less sharp shape of a circle that looks like a circle due to anti-aliasing, something that happens on a “sub pixel” level.

Actually, the Autodesk Ember is open source, with Autodesk releasing the designs for all the major components, including the resin formulas. https://ember.autodesk.com/overview#open_source

This works because there is some horizontal light scattering in the fluid so adding a partial pixel next to a full pixel shifts the boundary position, the threshold point for activation.
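The boundary-shifting effect described above can be illustrated with a toy 1D model. Everything in it is an assumption made for the sake of the sketch: scattering is modelled as a Gaussian blur of width `sigma` (in pixels), the resin is treated as solid wherever the blurred dose is at or above a fixed `threshold`, and the names `dose` and `edge_position` are made up. Real resin chemistry is more complicated than this.

```python
import math


def dose(x, pixels, sigma=0.5):
    """Blurred light dose at position x (in pixel units).

    Each pixel i covers [i, i + 1] with intensity pixels[i] (0.0 to 1.0).
    A box convolved with a Gaussian has a closed form using erf.
    """
    scale = sigma * math.sqrt(2)
    total = 0.0
    for i, level in enumerate(pixels):
        total += level * 0.5 * (math.erf((x - i) / scale)
                                - math.erf((x - (i + 1)) / scale))
    return total


def edge_position(pixels, threshold=0.5, sigma=0.5):
    """Find where the dose falls through the cure threshold (bisection).

    Assumes the row is solid on the left and empty on the right, so the
    dose decreases from lo to hi.
    """
    lo, hi = 2.0, float(len(pixels))
    for _ in range(60):
        mid = (lo + hi) / 2
        if dose(mid, pixels, sigma) >= threshold:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


# Four full pixels, one edge pixel at varying gray level, then darkness.
for gray in (0.0, 0.25, 0.5, 0.75, 1.0):
    row = [1, 1, 1, 1, gray, 0, 0, 0]
    print(f"gray={gray:.2f} -> edge at x={edge_position(row):.3f}")
```

In this model the cured edge slides smoothly from the 4-pixel mark to the 5-pixel mark as the edge pixel goes from black to full white, which is the sub-pixel control the technique relies on. It also shows why an isolated gray pixel with no full pixel next to it would never reach the threshold on its own unless it was bright enough.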

I worked with EnvisionTEC SLA printers a decade ago, which used this sort of technique. They call it “ERM” or “Enhanced Resolution Mode”.