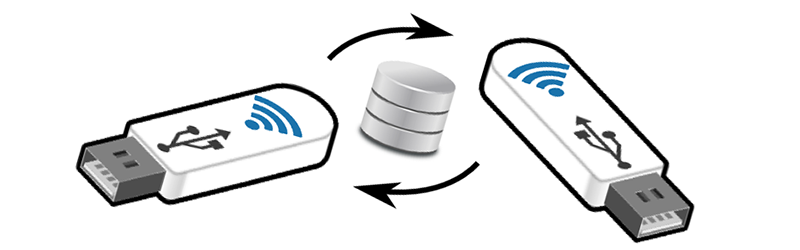

I did not coin the phrase in this article’s headline. It came, I believe, from an asinine press release I read years ago. It was a stupid phrase then, and it’s a stupid phrase now, but the idea behind it does have some merit. A collaborative Dropbox running on hardware you own isn’t a bad idea, and a physical device that does the same is a pretty good idea. That’s the idea behind the USB Borg Drive. It’s two (or more) mirrored USB thumb drives linked together by condescending condensation saying you too can have the cloud in both your pockets.

Like all good technology, the USB Borg Drive began as a joke. [helge] and his colleague were passing USB sticks back and forth to get software running on a machine without Internet. The notion of two USB sticks connected together via WiFi blossomed, and the USB Borg Drive was born.

An idea is one thing, and an implementation another thing entirely. This is where [helge] is stumbling. The basic idea now is to use a Raspberry Pi Zero containing a WiFi adapter, USB set up in peripheral mode, some sort of way to power the devices, and maybe a way to set IDs between pairs of devices.

There’s still a lot of work for [helge] to do, but this is actually, honestly, not a terrible idea. Everything has a USB port on it these days, and USB mass storage is available on every platform imaginable. It’s the cloud, at ground level. A fog, if you will, but not something that sounds that stupid.

Sounds very handy. I could’ve used something like that for in-field software installations (weather stations) where Ethernet ports are not available (as in, they don’t exist on the product’s SBC).

I’m imagining this being very useful in industrial equipment that has no other way to remotely update the files stored on its USB mass storage. If I have a milling machine that loads G-code from a USB drive, I could just plug drive A into the mill and drive B into virtually any computer, and use that computer to send files to the mill, even acting as a server for other machines to gain access.

This is certainly handy. It’s also what I use 95% of usb drives for.

I haven’t seen this done with two identical devices yet, but there are SD cards and USB sticks that can be accessed via WiFi, or that can map network shares into their internal memory.

I’m using a Toshiba Flashair SD card for my 3D printer, and it’s saving me a lot of time.

I’d always heard that poor implementations of these devices ran an extreme risk of corrupting the shared storage by double-mounting it between two hosts (say, the host device and the card’s SoC).

Has anyone done any research on this card? I’d love to know if a not-shite implementation exists :D

The issue is that USB mass storage is a block-level protocol (it’s basically SCSI), and to get widespread support it will need an NTFS or FAT filesystem. Those are not network multi-mount filesystems, and modifying the blocks of a mounted filesystem will not work well.

You can use MTP for this, it’s almost as well supported, but I’ve not seen any working implementation on the Linux gadget interface used by the Pi Zero.

What about enforcing the condition that only one side can be mounted RW?

The idea with this ‘borg’ drive is to have multiple USB drives that are centrally updated with new software, and for that to work on things that support USB mass storage but not necessarily anything else like WiFi or Ethernet.

I suppose what you could do is like Steam does and make a large file on the drive then run your own filesystem inside that large file, so that the OS just thinks the large file remains the same but in reality the contents changes.

But then you’d need a utility to read from that virtual filesystem though. But that utility you could also put on the stick as a normal file executable and run it from there.
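A rough sketch of that container idea, under loud assumptions: the layout (a 4-byte length, a JSON index, then raw data), the sizes, and the function names `create_container`, `put` and `get` are all made up for illustration and have nothing to do with how Steam actually does it. The point is only that the outer file never changes size while its contents do.

```python
import json
import struct

CONTAINER_SIZE = 64 * 1024  # hypothetical fixed size; the outer file never grows
INDEX_SIZE = 4096           # hypothetical region reserved for the index


def _read_index(path):
    """Read the JSON index stored at the start of the container."""
    with open(path, "rb") as f:
        (length,) = struct.unpack("<I", f.read(4))
        return json.loads(f.read(length).decode()) if length else {}


def _write_index(path, index):
    """Overwrite the index in place, without changing the file size."""
    blob = json.dumps(index).encode()
    if len(blob) + 4 > INDEX_SIZE:
        raise ValueError("index region full")
    with open(path, "r+b") as f:
        f.write(struct.pack("<I", len(blob)) + blob)


def create_container(path):
    """Create a zero-filled container with an empty index."""
    with open(path, "wb") as f:
        f.write(b"\x00" * CONTAINER_SIZE)
    _write_index(path, {})


def put(path, name, data):
    """Store data under name; space is allocated append-only."""
    index = _read_index(path)
    offset = max([INDEX_SIZE] + [o + n for o, n in index.values()])
    if offset + len(data) > CONTAINER_SIZE:
        raise ValueError("container full")
    with open(path, "r+b") as f:
        f.seek(offset)
        f.write(data)
    index[name] = [offset, len(data)]
    _write_index(path, index)


def get(path, name):
    """Return the bytes stored under name."""
    offset, length = _read_index(path)[name]
    with open(path, "rb") as f:
        f.seek(offset)
        return f.read(length)
```

This only hides the change from the outer filesystem's metadata. The host can still be holding stale copies of blocks from inside the container, which is the caching problem raised elsewhere in this thread.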

Stupid question: Can’t the USB device force the host to dismount it (as if it were unplugged, or threw an error) and then change its contents before getting the host to re-mount it?

Theoretically you could, I suppose, but it would be a kludge and would be very annoying (many systems make a sound when a drive un-mounts or remounts), and it will probably eventually cause some sort of error, since the OS doesn’t expect such behavior and some counter or something will overrun.

Won’t work either, the ‘read only’ side will still get confused by the blocks changing underneath it. There are caches in the USB gadget mass storage driver and the mounting OS.
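To make the cache problem concrete, here is a toy model, not real driver code. `BackingStore` stands in for the blob the gadget serves and `CachingHost` for an OS that caches blocks it has already read. Both classes are invented for this illustration.

```python
BLOCK_SIZE = 512


class BackingStore:
    """Stand-in for the blob of storage the gadget serves as a block device."""

    def __init__(self, num_blocks):
        self.blocks = [bytes(BLOCK_SIZE) for _ in range(num_blocks)]

    def read(self, n):
        return self.blocks[n]

    def write(self, n, data):
        # Pad to a full block, as a block device would store it.
        self.blocks[n] = data.ljust(BLOCK_SIZE, b"\x00")


class CachingHost:
    """Stand-in for a host OS that keeps blocks it has already read."""

    def __init__(self, store):
        self.store = store
        self.cache = {}

    def read(self, n):
        if n not in self.cache:
            self.cache[n] = self.store.read(n)
        return self.cache[n]

    def remount(self):
        # Unmounting and mounting again drops everything the host remembered.
        self.cache.clear()


store = BackingStore(8)
store.write(0, b"v1")

host = CachingHost(store)
first = host.read(0)       # host caches block 0

store.write(0, b"v2")      # the other stick changes the block underneath
stale = host.read(0)       # served from cache: still the old contents

host.remount()
fresh = host.read(0)       # only now does the host see the change
```

In this model the ‘read only’ host keeps returning the `v1` block until it remounts. With a real FAT volume the stale blocks can include directory entries and allocation tables, which is where the confusion comes from.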

True about the caches, but removable drives usually cause caches to be disabled and you can disable caches in that utility you need anyway (or force a flush). As for noticing changes, that would only happen if you used normal access, then it would change date stamps and such, but you can out-trick that. The functionality is already there in the filesystem if I recall correctly, but just not used a lot.

Also came here to mention this.

One way around it is to automate the process of “ejecting” the USB mass storage from the host, update or replace the backing volume on the Pi, and then remount it on the host. This would force the host to forget whatever it thinks it knows about what is on the file system.
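On the Pi side, this could look roughly like the sketch below. It assumes the Linux mass storage gadget has been set up through configfs with the LUN marked removable, in which case writing an empty path to the LUN's `file` attribute reports the medium as removed and writing a new path loads it again. The gadget name `borg`, the image path and the helper name `swap_backing_file` are hypothetical.

```python
import time
from pathlib import Path

# Hypothetical location; the gadget name ("borg") and function instance name
# depend entirely on how the gadget was configured.
LUN_FILE = "/sys/kernel/config/usb_gadget/borg/functions/mass_storage.usb0/lun.0/file"


def swap_backing_file(lun_file, new_image, settle_seconds=1.0):
    """Simulate 'media removed', then present a different backing image.

    Assumes the LUN is configured as removable; otherwise the kernel
    will not accept a change of backing file while the gadget is active.
    """
    lun_file = Path(lun_file)

    # An empty path (just a newline) tells the gadget driver the medium is gone.
    lun_file.write_text("\n")

    # Give the host a moment to notice the removal (duration is a guess).
    time.sleep(settle_seconds)

    # Point the LUN at the updated image; the host sees new media inserted.
    lun_file.write_text(f"{new_image}\n")
```

A call might look like `swap_backing_file(LUN_FILE, "/home/pi/images/snapshot-new.img")`. If the host has mounted the volume and locked the medium, the eject write can fail with a "device busy" error, so the host still has to cooperate or be tolerant of surprise removal.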

I think (in Linux at least) once you eject the device the only way to re-mount it is to either physically reinsert it, or reboot.

I guess the gadget driver might be able to fake a physical replugging itself after noticing being ejected, but I think it would be less than ideal…

So, don’t eject it. Unmounting and mounting again is sufficient to make it re-evaluate every detail of the file system.

Is it possible to have the USB mass storage device trigger an unmount/mount cycle like that?

I don’t think so. The trick would be to make the machine/whatever unmount the WiFi-equipped device on its own. Done with a file? Not only close the file, but also unmount the file system. Want to see once per day whether an upgrade is available? Mount the file system, look for it, unmount again.

The issue is the gadget/device needs to present a snapshot of the filesystem to the ‘read only’ stick that won’t change under it when the other stick does a write.

The read only end needs to be able to see the latest changes, but I don’t think the gadget/device even knows if it’s been (un)mounted to be able to load a new snapshot.

I suppose there’s no getting around it then. You’d have to have the device simulate being physically removed.

In that case, I’d recommend having the option of controlling when it’s triggered. For example, when a button on the opposite device is pressed, or the device itself, instead of just when a new file is available.

There is a negotiation when you plug it in; that’s how the host knows it’s plugged in, and it is something you can do again, even at the hardware level. You could just use a timer to reattach X seconds after a dismount. Although I don’t think it’s necessary to use that trick.

I keep reading this, but I have to say, many years ago I had an Amiga and a PC.

I had the two of them linked together via SCSI and a shared drive with FAT16 because Ethernet for the Amiga was very expensive.

Two controllers on different IDs plus a drive. I would write files from the Amiga and read from the PC and vice versa.

For example, I would download files from Aminet on the PC via modem and move them over to the Amiga’s native HD via this intermediate. I never had a problem with data corruption.

The drive was typically mounted on both machines at the same time.

I’ve been meaning to do something similar, now, with iSCSI, whereby one device is constantly writing but barely ever reading, and another device would only ever be reading and would mount RO.

I get the impression that I’ll run into problems as it’s still block level, but my prior experience suggests it’s worth trying.

If I had to guess, I’d probably say it worked better in the good old days due to the lack of caches. Maybe the filesystem drivers were even designed with that use case in mind.

Those who have tried this have noticed that even the gadget driver has a cache of the blob of storage it’s serving as a block device. You can’t just start changing bits of that blob.

Perhaps you could turn off all the caching (probably not so easily on Windows and not at all on an embedded device) but then it would probably perform a bit crap and defeat the point of plug and play.

Again, multiple iSCSI connections from a modern device to a filesystem not designed for multiple mounts is a bad idea; there are other network protocols that solve this problem (NFS, CIFS).

Windows has the ability to have two programs open the same file, it’s normally avoided but the functionality is there, the locking is optional.

And if you ever scripted with a runtime language or even old batch scripts you would probably have used it yourself at some point.

Yes but I’m not sure how that’s relevant.

If you write to a file from multiple processes or even threads within the same process you need to have some sort of concurrency control or you’ll corrupt the file. If you’d ever written a multi threaded application that writes to files you’d know this.
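A minimal illustration of that point, using threads and a plain lock. The record format and the function names `write_records` and `run` are invented for the example. Each record is written in two steps, so without the lock another thread's output could land between them.

```python
import threading


def write_records(f, lock, worker_id, count):
    """Write `count` two-part records, holding the lock for each whole record."""
    for n in range(count):
        with lock:
            # Two separate writes form one record; the lock keeps them together.
            f.write(f"worker {worker_id} ")
            f.write(f"record {n}\n")


def run(path, workers=4, count=100):
    """Have several threads append records to one shared file."""
    lock = threading.Lock()
    with open(path, "w") as f:
        threads = [
            threading.Thread(target=write_records, args=(f, lock, w, count))
            for w in range(workers)
        ]
        for t in threads:
            t.start()
        for t in threads:
            t.join()
```

A filesystem mounted by two hosts is in the same position as the file here, except that FAT and NTFS give the two hosts no shared lock to take.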

It’s the same with the filesystem, but none of the major supported filesystems are designed for it.

I would imagine CrossDOS implemented DOS 3.3+ file locking correctly for bridgeboard support.

Where’s the problem? One can easily pair computers and exchange data using an Ethernet cable, and the same can be done using USB WiFi sticks. Using storage devices with WiFi on top is just asking for trouble.

If one insists on storage devices, use only one of them. Shovel the data via WiFi onto the stick plugged into the remote computer, then access that data directly there.

That said, using WiFi at all is only as secure as the logical setup, so it’s probably easier to connect both computers to a nearby router for the time of the operation.

The problem (as stated in the article) is computers lacking network access at the time that you want to move files to them. The creator was getting tired of throwing drives between two computers where one lacked network access at the time. This would allow you to wirelessly send files to a computer that lacks network hardware (many industrial embedded systems) or is missing the drivers at the moment to set up the network hardware (many systems that you are initially setting up). I admit that it is a bit niche, but the article admits that, and it appears that half this project at this point is just a case of why not try it.

Turns out you can’t just ‘shovel’ data onto the drive over WiFi. Devices don’t like it at all when the contents unexpectedly change. The consensus seems to be that the device would have to simulate being physically removed and reinserted.

This coupled with the fact usb/sd memory is cramming so much into so little space these days could be a fairly viable replacement for the internet.

If the memory were segmented out: home, local proximity, shared trusted and global. Not very secure though. If someone decided to create custom hardware that could view anything, no amount of hashing and handshaking would stop someone spying on your data. I guess you could encrypt it locally with something nonstandard before it leaves your hardware. I was working on a 3D bitmap based algorithm a while back that would do the job, but sadly transcribing it from paper to code didn’t prove as straightforward as I’d have liked it to be.

Why not combine this hardware with an opensource solution like Syncthing? That will do all the work for the actual transferring and syncing of data. In UPNP (god forbid) environments it can sync even over the internet. On local networks it works perfectly.

I was going to suggest the same software. Works like a charm for me.