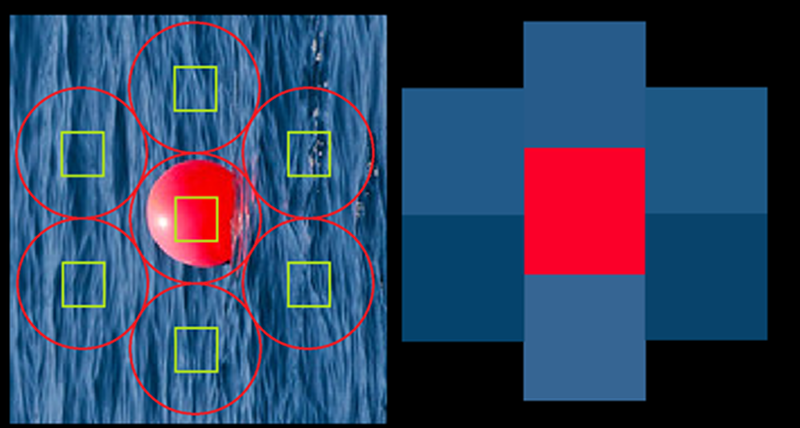

Video resolution is always on the rise. The days of 640×480 video have given way to 720, 1080, and even 4K resolutions. There’s no end in sight. However, you need a lot of horsepower to process that many pixels. What if you have a small robot powered by a microcontroller (perhaps an Arduino) and you want it to have vision? You can’t realistically process HD video, or even low-grade video, with a small processor. CORTEX systems has an open source solution: a 7-pixel camera with an I2C interface.

The files for SNAIL Vision include a bill of materials and the PCB layout. There’s software for the Vishay sensors used, and provisions for gluing a lens holder to the PCB. The design is fairly simple. In addition to the array of sensors, there’s an I2C multiplexer (which also acts as a level shifter), plus a handful of resistors and connectors.

Is seven pixels enough to be useful? We don’t know, but we’d love to see some examples of using the SNAIL Vision board, or other low-resolution optical sensors with low-end microcontrollers. This seems like a cheaper mechanism than Pixy. If seven pixels are too much, you could always try one.

Thanks [Paul] for the tip.

My mouse has a better camera for tracking!

https://en.wikipedia.org/wiki/Optical_mouse

An optical mouse only has a very short focus, but that has advantages for some things. I was thinking of a fixed-gantry CNC something (stacked axes) using DC motors, with an inverted mouse under the work envelope for feedback.

The focus comes from the optics, not the sensor.

True, but for me, if the optics are already there then I will never get it to work.

https://youtu.be/zMpEeag7kkM?t=205

It’s amazing what you can do with no computing power at all. This thing could optically recognize power outlets by shape, size, color, etc. No onboard computer, only analog transistor circuits, photocells, lenses and optical masks.

Jump to about 3 min in.

HAHAA, the pointless calf-stabbing robot.

Black cargos with square pockets…

Looks like computing to me. Analog and mechanical computing perhaps, but computing just the same.

If you call “to determine by mathematical methods” computing, then yes. But this thing has no instruction set, no RAM, no commands. It’s not a computer in the modern sense. If this counts as a computer then so does any circuit with inputs and outputs.

The analogue computers of the past were programmable, even if that meant moving parts around. They were versatile, like modern computers. This isn’t that; as far as I know, it has specific dedicated circuits for its tasks. So there’s no useful way of calling it a computer. It’s no more a computer than a thermostat is, or a 1970s television.

Preliminary design work on the first Dalek?

Ahh, I thought I recognised a younger Davros.

“Have you guessed the name of Billy’s planet? It was Earth! DON’T DATE ROBOTS!!!”

https://www.youtube.com/watch?v=wzowKw2oPVg

Quote: “Robot arm uses sg90 servos and the camera is an ADNS-2610 mouse sensor with a lens from an old dash cam, all controlled by an Arduino Nano. Robot searches for marbles then reaches down when it ‘sees’ one. Optical mouse sensor gives 16×16 image at about 3 frames per second”

Just moving my mouse, it seems that it updates about 10 times a second.

I might go play with some mice.

Standard polling rate for a mouse is 125Hz. Gaming mice can easily do 500-1000Hz polling rates with proprietary drivers.

When polling for movement data, a mouse chip will usually run its optical flow algorithms internally and spit out only the movement data. This is faster than reading the whole image. Reading the raw optical image takes longer, and it is not the normal operation for a mouse sensor; it’s more like a debug mode.
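To make the "optical flow inside the chip" idea concrete, here is a minimal sketch of one textbook approach, exhaustive block matching, applied to two small frames like the 16×16 images mentioned above. It is an assumption for illustration only: the actual algorithm inside an ADNS-2610 or any other mouse sensor is not described here, and `estimate_shift` and `max_shift` are hypothetical names.

```python
def estimate_shift(prev, curr, max_shift=2):
    """Return the (dx, dy) that best explains curr as prev moved by (dx, dy).

    Exhaustive block matching: for every candidate shift, compare the
    overlapping pixels and keep the shift with the lowest mean absolute
    difference (a normalised sum of absolute differences).
    """
    h, w = len(prev), len(prev[0])
    best = None
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            total = count = 0
            for y in range(h):
                for x in range(w):
                    # Where this pixel would have been in the previous frame.
                    sx, sy = x - dx, y - dy
                    if 0 <= sx < w and 0 <= sy < h:
                        total += abs(curr[y][x] - prev[sy][sx])
                        count += 1
            score = total / count
            if best is None or score < best[0]:
                best = (score, dx, dy)
    return best[1], best[2]
```

A 5×5 search over a 16×16 frame is only a few thousand comparisons, but doing it on every frame is still why a sensor that reports motion deltas directly is so much faster than dumping raw frames and computing this on the host.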

This is interesting. I was wondering today what the minimum cost/complexity solution would be for using an ESP8266 as some form of motion-detecting security camera. The 7-pixel camera probably isn’t it, but those sensors are interesting.
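With only a handful of pixels, the simplest motion detector is frame differencing against a slowly adapting baseline. The sketch below assumes one brightness value per sensor per update (for example, seven readings from a board like SNAIL Vision); the class name, the threshold, the pixel count and the smoothing factor are all hypothetical and would need tuning on real hardware.

```python
class MotionDetector:
    """Flag motion when enough pixels deviate from a slowly drifting baseline."""

    def __init__(self, threshold=50, min_pixels=2, alpha=0.1):
        self.threshold = threshold    # per-pixel change counted as "moved" (raw counts)
        self.min_pixels = min_pixels  # how many changed pixels trigger an alert
        self.alpha = alpha            # baseline smoothing factor (0..1)
        self.baseline = None

    def update(self, readings):
        """Feed one set of readings; return True if motion is detected."""
        if self.baseline is None:
            self.baseline = [float(r) for r in readings]
            return False
        changed = sum(
            1 for b, r in zip(self.baseline, readings) if abs(r - b) > self.threshold
        )
        # Let the baseline drift toward the current readings so slow lighting
        # changes (clouds, dusk) are absorbed instead of triggering alerts.
        self.baseline = [b + self.alpha * (r - b) for b, r in zip(self.baseline, readings)]
        return changed >= self.min_pixels
```

Requiring more than one changed pixel is a cheap way to ignore single-sensor noise; the trade-off is that a small object crossing only one pixel's field of view goes unnoticed.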

I’ve noticed there are a few replacement CCD modules for old Sony 5 megapixel cameras on ebay, going for around $5 too.

Also, could you take the backlight off an old Nokia LCD and use it as a scanning pinhole aperture in front of the 7-pixel camera’s sensors to get more resolution that way? The LCD is all black except for one pixel, which is your pinhole. I’m not sure; the maths to reconstruct the image could be a bit much for an Arduino, but perhaps an ESP8266 would be up to the task. Obviously you can open more than one pixel, so you can control the aperture size as well as its location. Perhaps this lets you scan quickly at low resolution until you detect motion or a feature of interest, then slow-scan at full resolution. You don’t really need to decode the image on the MCU if you just pipe the sensor data off it and decode the high-resolution image on a more powerful system; even your smartphone would probably suffice.
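In its simplest form, the scanning-aperture idea needs no reconstruction maths at all: if only one square block of the LCD is transparent at a time, each sensor reading is directly one output pixel. The sketch below assumes an idealized model (reading proportional to the light through the open block, no diffraction, perfect LCD contrast). `open_aperture` and `read_sensor` are hypothetical callbacks standing in for the LCD driver and the I2C read, and `step` sets the aperture size, giving the fast coarse scan and slow fine scan described above.

```python
def scan(open_aperture, read_sensor, width, height, step=1):
    """Raster-scan a step x step transparent block across a width x height LCD.

    Returns a (height // step) x (width // step) grid of sensor readings.
    step=1 is the full-resolution "pinhole" scan; larger steps trade
    resolution for fewer reads (fewer, bigger, brighter apertures).
    """
    image = []
    for y in range(0, height - step + 1, step):
        row = []
        for x in range(0, width - step + 1, step):
            open_aperture(x, y, step)  # hypothetical: this block clear, rest black
            row.append(read_sensor())  # hypothetical: one reading from one sensor
        image.append(row)
    return image
```

Fancier schemes that open many pixels at once (patterned masks) collect more light per reading, and that is where the reconstruction maths the comment worries about would come in.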

This is not a camera on i2c; but it is a matrix of rgb colour sensors on an i2c multiplexer, which nonetheless can be used for machine vision applications.

Sure, this may be functionally similar but it’s not like the device will continuously stream pixel data for the whole matrix.

Interesting possible uses:

– repurposing the schematic to make a multi-lane Skittles sorter

– utilising the white channel’s response, which extends into the near infrared, as an IR sensor

>This is not a camera on i2c; but it is a matrix of rgb colour sensors on an i2c multiplexer

What’s the difference, exactly? Is a camera not an array of color sensors?

Don’t most cameras separate out their color components on a grid using Bayer filters, then use interpolation software to blend them? I think he’s saying that each of the 7 sensors can see all three colors.

Internally, they might not be different, and it’s just a super-low-res CMOS or CCD with some specialized firmware to generate the 7-pixel image.

That’s just my guess. Not up to speed on computer vision. I cheated and used a spare Android in my last imaging project.

What about building a real-world colour scanner: scan the colour of something and return the hex value? I know these exist as smartphone apps, but surely with 7 pixels it could be done easily enough.
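The last step of such a colour scanner, turning raw channel counts into a `#RRGGBB` string, can be sketched as follows. The sketch assumes raw integer counts per channel (the Vishay part on SNAIL Vision also has a white channel, ignored here) and uses a naive normalisation that maps the brightest channel to 255. That discards overall brightness and skips calibration against a white reference, so treat the result as a rough hue, not a colorimetric measurement. `to_hex` is a hypothetical helper name.

```python
def to_hex(r, g, b):
    """Scale raw counts so the brightest channel becomes 255; format as #RRGGBB."""
    peak = max(r, g, b)
    if peak == 0:
        return "#000000"  # no light at all: report black
    # Integer maths with round-half-up, so results never exceed 255.
    scaled = [(c * 255 + peak // 2) // peak for c in (r, g, b)]
    return "#{:02X}{:02X}{:02X}".format(*scaled)
```

For example, `to_hex(200, 100, 0)` gives `#FF8000`, an orange.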

Or even a skin tone tester to help find the correct cosmetics for your skin tone.

Here are a few attempts:

http://hackaday.com/2012/11/05/beginner-project-color-sensing-with-rgb-leds-and-a-photocell/

http://hackaday.com/2012/02/07/color-sensor-gives-the-rgb-values-of-anything/

https://hackaday.com/2014/01/16/fail-of-the-week-color-meter-for-adjusting-leds/

https://hackaday.io/project/3549-color-reader

https://hackaday.io/project/6426-wifi-color-sensor

Reading these in the past probably gave me the idea of using it for that purpose subconsciously, but hey, cool collection of links.

4K you say? This project recently popped up on HaD.io

https://hackaday.io/project/19354-zyncmv

“after a bit more research I decided it was more capable than I originally realized so I decided change the image sensor to a higher resolution model and swap the memory to DDR3L since its more available” ….

That could make a good fail of the week:

Machine vision never has too much digital computing power,

4K is an insane resolution for machine vision,

and DDR3 is very sensitive to trace length and PCB routing!

Besides that, failing like this is a wonderful opportunity to learn about those topics…

Nothing has been built yet? So IDK if it’s that much of a fail. But I see your point.

It seems that this Vishay colour sensor device is not designed to be used in an array. The I2C slave address is fixed at 0x10 (unsurprisingly), hence the need for the I2C mux chip. If only there were a “backwards” version of the NeoPixel that samples colours and outputs a shift-register-like stream of RGBW values on a single pin. Someone should invent that.
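Here is how a mux works around that fixed address, sketched under assumptions. The article doesn’t name the multiplexer, so this assumes a TCA9548A-style 8-channel part at 0x70 (address pins tied low). On such a part, writing a single byte with one bit set connects that one downstream channel, so every sensor can keep answering at 0x10. `i2c_write` and `i2c_read_sensor` are hypothetical callbacks standing in for whatever I2C library the host uses.

```python
SENSOR_ADDR = 0x10  # fixed sensor address, as noted in the comment above
MUX_ADDR = 0x70     # assumption: TCA9548A-style mux with A0..A2 tied low


def mux_select_byte(channel):
    """Control byte that enables exactly one downstream channel (0-7)."""
    if not 0 <= channel <= 7:
        raise ValueError("channel must be 0-7")
    return 1 << channel


def read_all(i2c_write, i2c_read_sensor, channels=range(7)):
    """Read each sensor in turn by switching the mux before every read."""
    readings = []
    for ch in channels:
        # Route the bus to one sensor only...
        i2c_write(MUX_ADDR, bytes([mux_select_byte(ch)]))
        # ...so the one shared address reaches a different physical chip each time.
        readings.append(i2c_read_sensor(SENSOR_ADDR))
    return readings
```

The cost is one extra bus transaction per sensor per frame, which is part of why a 7-pixel "frame" is still not a continuous pixel stream.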

Interesting article, but stupidly misleading headline.

Thanks for the informative post!