The last year has been great for Nvidia hardware. Nvidia released a graphics card using the Pascal architecture, 1080s are heating up server rooms the world over, and now Nvidia is making yet another move at high-performance, low-power computing. Today, Nvidia announced the Jetson TX2, a credit-card sized module that brings deep learning to the embedded world.

The Jetson TX2 is the follow up to the Jetson TX1. We took a look at it when it was released at the end of 2015, and the feelings were positive with a few caveats. The TX1 is still a very fast, very capable, very low power ARM device that runs Linux. It’s low power, too. The case Nvidia was trying to make for the TX1 wasn’t well communicated, though. This is ultimately a device you attach several cameras to and run OpenCV. This is a machine learning module. Now it appears Nvidia has the sales pitch for their embedded platform down.

Embedded Deep Learning

The marketing pitch for the Jetson TX2 is, “deep learning at the edge”. While this absolutely sounds like an alphabet soup of dorknobabble, it does parse rather well.

The new hotness every new CS grad wants to get into is deep learning. It’s easy to see why — deep learning is found in everything from drones to self-driving cars. These ‘cool’ applications of deep learning have a problem: they all need a lot of processing power, but these are applications that are on a power budget. Building a selfie drone that follows you around wouldn’t be a problem if you could plug it into the wall, but that’s not what selfie drones are for.

The TX2 is designed as a local deep learning and AI platform. The training for this AI will still happen in racks of servers loaded up with GPUs. However, the inference process for this AI must happen close to the camera. This is where the Jetson comes in. By using the new Nvidia Jetpack SDK, the Jetson TX2 will be able to run TensorRT, cuDNN, VisionWorks, OpenCV, Vulkan, OpenGL, and other machine vision, machine learning, and GPU-accelerated applications.

Specs

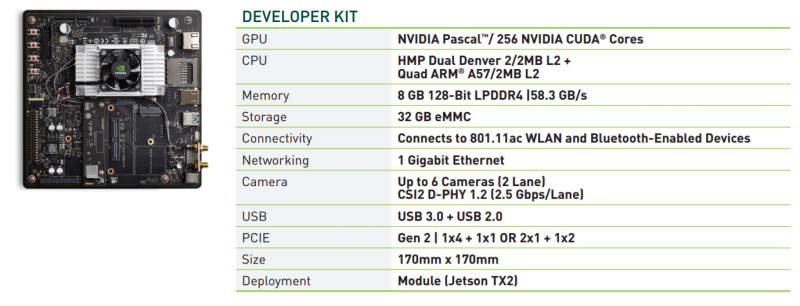

Like the Nvidia TX1 before it, the Jetson TX2 is a credit card-sized module bolted onto a big heatsink. The specs are a significant upgrade from the TX1:

- Graphics: Nvidia Pascal GPU, 256 CUDA cores

- CPU: Dual-core Denver + quad-core ARM A57

- RAM: 8GB 128-bit LPDDR4

- Storage: 32GB EMMC, SDIO, SATA

- Video: 4k x 2x 60Hz Encode and Decode

- Display: HDMI 2.0, eDP 1.4, 2x DSI, 2x DP 1.2

- Ports and IO: USB 3.0, USB 2.0 (host mode), HDMI, M.2 Key E, PCI-E x4, Gigabit Ethernet, SATA data and power, GPIOs, I2C, I2S, SPI, CAN

The Jetson development kit is the TX2 module and a breakout board that is effectively a MiniITX motherboard. This is great for a development platform, but not for production. In the year and a half since the release of the Jetson TX1, at least one company has released carrier boards that break out the most commonly used peripherals and ports. The hardware interface of the TX2 is backward compatible with the TX1, so these breakout boards may be used with the newer TX2.

The TX2 module will be available in 2Q17, with pricing at $399 in 1k quantities. The development kit will cost a bit more. If you’d like to develop your own breakout for the TX2, the physical connector is sourceable, and the manufacturer is extremely liberal with sample requests.

Nvidia Can’t I can’t trust this..

My experience of NVidia failure modes with green-lines/Text-block paterned screens:

The AI after-death would be like entering the Matrix!

That heat sink makes the power budget look like it depends on being plugged into a wall.

When you’re running it full boar it sucks lots. The idea is to sleep it lots :)

“running full boar” — I love the image

The heatsink on TX1 is deffinately overkill. You can run it without it at about full load and nothing bad happens. It gets hot, but not to the point that you cant hold your finger on it.

(I am taking about this massive heatsink with fins, not the alluminum block covering the chip).

sure, it just throttles to crap and crashes

At that price point its only viable in expensive products like autonomous cars and industrial robots. MAYBE a drone but definitely not for a broad consumer market.

Maybe a DIY laptop? Would be interesting to compare it to similarly priced x86 solutions. The X1 was on par with far more expensive Core m CPUs.

Would never pass automotive qual. Autonomous cars need way more horsepower. Maybe for ADAS apps or 360 view cameras on a car. Not for processing and shipping data via 5G.

As far as I could figure out, it’s only got the CUDA oomph of a $160-ish consumer card at 3 or 4 times the price…

Ummmm if I’m desperate enough I can dig PCIe out of a shitty little netbook and hook it to one of the much faster ones and be able to box it up as small as 5 of those…

Nvidia has a bad reputation at our facility.

1. the RMA support line tells you to F-off, and turns around to spam you

2. the cards can fail hard without warning, sometimes taking out the motherboard power supply

3. caused more than one fire due to bad design

4. For our software problems we only saw a 30% improvement in time, but at twice the node cost.

We now prefer pure Intel based stuff to handle the heavy lifting, and I’ve personally banned Nvidia’s stuff from all mission critical systems.

This white elephant will meet a similar fate as the Tegra…

well built software… on proprietary hardware… with a cost point that misses the consumer market completely…

proprietary Ncheatia never.

Do you do GPU-accelerated vision on the intel hardware?

I am building a solution that requires running a CNN 24/7/365, Nvidia solutions which are the only who support CUDA seem attractive because most libraries support it out of the box.

I usually ask people for C++ wrappers that can switch schemes with a linker flag to test if using CUDA will make a difference at all, or for systems that must also handle coordination tasks.

A general rule: the GPU speeds up things like iterative enforcement learning algorithms, but can slow down for problems that use many memory copy calls.

Early problem separation into stages is easier, as these can be paralleled with just about any reliable queuing lib. However, OpenMP and gcc compiled objects are not going to save your design pattern given they both add overhead.

Popular projects like OpenCV support both CUDA and Intel based GPUs, but your operating system version may not have compatible build environments. Some real advice, save yourself some real pain later and make sure to use a single GPU card per cluster node, and dedicate an interface for local SAN access..

If you are planning on 100% uptime, than reality is going to be a hard lesson.

It is an embedded solution so it is a single GPU in a single node anyway :)

And we do use CNN which benefit from GPGPU approaches.

I’m taking notes…

Intel hardware is capable, but you have to find the right stuff, and know how to use the entire processor. (but thats true of anything really). GPU’s will still be faster for parallel

Cuda is proprietary nVidia, so of course thats all that supports it. Look at what literally everyone else is using, OpenCL. That runs on all hardware, and has libraries.

Cuda is a hack. SIMT is nothing more than restrictive SIMD. It actually reduces parallelism in some problems but it makes it “easy” to program for….easy if all you’re used to is scalar processors. Trouble is, if you’re looking for ease, in not understanding your hardware, you’re likely doing all sorts of “bad” things for the hardware…like branching…its fine on GP processors, but horrible on massively parallel processors. You’re probably not considering register pressure, because you know, that huge GP Cache that usually is where the stack goes is totally present on GPU’s as well. (nope!) the SIMT model is easy to emulate in SIMD if thats the only way to get your problem parallel. SIMD just lets you bounce between the two depending on the problem, or which stage of the problem you’re in.

I’m not on the bashing bandwagon either. But I have no experience with any of their previous boards. I do have a lot of experience in GPGPU though, and can say that although cuda wasn’t the worst, it certainly did a good job of masking your errors/inefficiencies and not telling you about them. I will say I agree with the price point issue, and the claims of power usage. I don’t see this thing sleeping too much in a selfie drone.

You say that everyone else is using OpenCL but most DNN libraries are optimized for either CPU (x86, ARM) or CUDA-based GPUs (i.e. Nvidia only), the OpenCL implementations were added afterwards and their support is not in par with CUDA support.

Am I wrong here?

Again, cuda is “easy” (to get wrong) so of course versions came out for it first. OpenCL is more difficult, but puts you in the right frame of mind to make fast code.

I can’t speak to your particular application because though I’d love to do more with CV, I have a huge backlog of other projects, and a finite amount of time. I can speak in general, and to optimization, and to that, I say cuda appears simple so those implementations often come first, and often need tweaking to make run reasonably fast. First to market however often convinces people it has great support, and that everything else is an afterthought. I’m not saying your libraries aren’t that way, they could still be afterthoughts. However since intel, amd, and many other smaller companies support OpenCL, its a pretty dumb thing to be cuda only and have OpenCL be an afterthought. If that’s how your library authors are working, I’d find some other library, because I can almost guarantee that their cuda implementation is awful because they’ve obviously not tried to learn parallel programming, and the associated gotchas.

Try Apollo Lake. Doesn’t have 256 CUDA cores, but it’s got some real horsepower and is used in lots of apps. Great SOC. Low power. Reliable Intel product.

The “cards” being TX1s?

Nope, the server vGPU BS they sold the MBA division head.

They won’t get a another chance to burn-down a server rack any time soon.

That explains what happened on my 1070 card the other day: CPU Power seemed to take a dive, then the Graphics card wouldn’t reboot properly until I removed and re-installed the card… wierd, but tells me to watch for failure. Thanks LOL

4. For our software problems we only saw a 30% improvement in time, but at twice the node cost.

Are you new to performance computing, the oldschool cost/performance used to be like 4x for 10% :-D

Great that they offer a Dev-Kit. But why it’s only available in America? So I have to look somewhere else.

That heatsink fan would be a giant step backwards– if they didn’t already go with the same basic crap idea on the TX1. So they didn’t pay attention, we wanted fanless and if the HS wasn’t already big enough then the low power claims are suspicious. At the very least it’s a waste of $5 because the fan is coming off and landing in a shoebox. I don’t know whether to be surprised. I kinda want to get one in spite of this and use it for turning MIDI into PCM because deep learning and IoT are the opposite of interesting. If it runs too hot that’s a bummer, and 80mm fans are much quieter and live longer.

The “low power” claims are in comparison to an x86 + discrete GPU configuration.

Or in comparison to any other approach for running a DNN.

Everything is relative. It isn’t low power compared to a mobile device (even a tablet) but compared to any platform you might run a neural network on or heavy computer vision processing – it’s low power.

I’m 95% certain this is the same IC that is in the DRIVE PX2 platforms.

Derp, I meant TK1 not TX1 and sorry, tbh I was probably just channelling the unholy spirit of phoronix forums, as that’s the site where I initially read about that earlier platform. Still would like to have a compact compute kit no matter what silicon. still tbh I tend to think around using nV before other GPUs mainly because they seem to care whether their Linux drivers work even though their OpenCL support needs so much love.

Connect Tech has a passive lower profile heat sink

http://www.connecttech.com/sub/Products/TX1_Based_Accessories.asp

this isnt for “us”. inference is CHEAP, even couple of year old cellphone SoCs have no problem doing it real time. This is a platform Nvidia will try to sell Tesla/GM/Uber on so they can train on the go while killing people in the process.

lol

Can anyone explain the real life use scenario for these modules?

Lets say its used in Autonomous cars. Are these modules used directly in the products as it is? Or are these used for speeding up the development process and then you custom build the hardware including the processor and dev board hardware and adapt to your custom product PCB?

And are these affordable at $399 for the use cases they serve? Isnt it expensive or am I too naive?

PS: I don’t have much experience in high speed/processing hardware. Newbie in that domain.

It’s a mini-ITX sized dev board with module attached. the module simplifies much of the design work for an OEM, and they can focus on creating their PCB with custom peripherals and a footprint that holds the module. Software that runs on the dev board should also run as-is on a custom board.

Given the size and cost of the SoC and module, it’s not for low margin consumer devices. If you want to make a phone or settop box, you would need to create a single PCB from scratch and ditch the module.

If you build an industrial automation product, you might only need a dozen of these boards for an entire operation, and their cost would be a tiny fraction of your entire system cost. Billing a customer for a widget sorted for maybe $500k, and you only had to used a single $400 board to drive six cameras seems like a bargain.

Nvidia will have this on the market for a few years. When you are ready to develop something using the board, you are out of luck. This is not for production. The other option is to design a chip-down board yourself. I’d rather jump off Half Dome without a parachute. Intel has over 200 board suppliers that smoke the pants off Nvidia for most applications. Nvidia is great for high perf. graphics. that’s about it.

Even the TX1 beat an i7-6700K in several key benchmarks, and it’s cheaper and a fraction of the power. I think Intel’s dominance has more to do with supply chain and availability than with deep learning performance.