I never had the musical talent in me. Every now and then I would try to pick up a guitar or try and learn the piano, romanticising a glamorous career out of it at some point. Arpeggio – the Piano SuperDroid (YouTube, embedded below) sure makes me glad I chose a different career path. This remarkable machine is the brainchild of [Nick Morris], who spent two years building it.

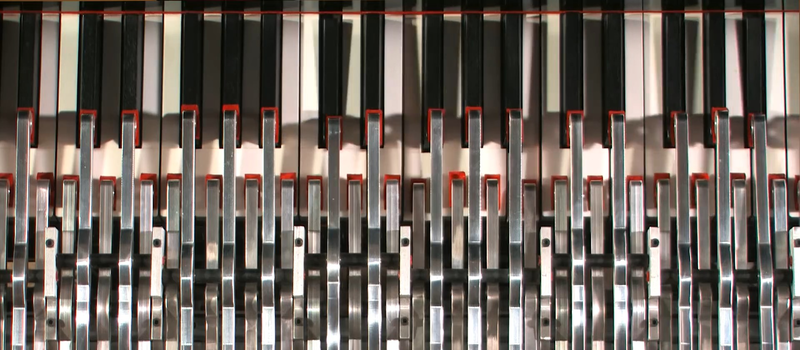

Although there are no detailed technical descriptions yet, at its heart this handsome robot consists of a set of machined ‘fingers’ connected to a set of actuators, most likely solenoids. The solenoids are controlled by proprietary software that combines traditional musical data with additional parameters to accurately mimic performances by your favourite pianists, right in your living room. Professional pianists, who were otherwise assuming excellent job security under Skynet, clearly have to reconsider now.

Along with incredible musical talent, Arpeggio is equipped with a set of omniwheels, allowing it to navigate around quite efficiently. It is not completely autonomous (yet). I can’t wait to see the havoc this robot would cause if it were to go rogue.

[GIF credit: Gizmodo]

Story and Clark has been doing player pianos since 1900, and developed a portable adapter in 1989. http://www.qrsmusic.com/Playola.asp

Nice. Quite an elegant solution. But I guess a Metal Rover has its own charm ha!

I listen to a lot of piano music, and this is really more than just MIDI playback. When I hear this I get the feeling that a person is playing. Not very descriptive, I know, but this is something special. I wonder if there is a blur function that puts that delicate, varying touch in the strokes?

The creator of this Robot does mention that whilst it can play MIDI, his special software imitates the very “mood” of the original recording.

Do solenoids have the fidelity to vary stroke and speed as required to play like that? The sound is astounding!

Not by themselves, they don’t, but they are very well characterized, so it’s relatively simple in software to drive them in a way that produces any force curve you want, using pulsed power.
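To make the "pulsed power" idea a bit more concrete, here is a minimal sketch of how a characterized solenoid could be driven to follow a force curve. Everything in it is an assumption for illustration: the `CHARACTERIZATION` table holds made-up numbers, the function names are hypothetical, and nothing here is known to reflect how Arpeggio actually does it. The idea is simply to invert a measured duty-cycle-to-force table by linear interpolation.

```python
from bisect import bisect_left

# Hypothetical characterization data: (PWM duty cycle, force in newtons).
# Real values would come from measuring the actual solenoid; these are invented.
CHARACTERIZATION = [(0.0, 0.0), (0.25, 0.5), (0.5, 1.5), (0.75, 3.0), (1.0, 5.0)]


def duty_for_force(force, table=CHARACTERIZATION):
    """Return the duty cycle that should yield `force`, by linear interpolation.

    Forces outside the measured range are clamped to the table's end points.
    """
    forces = [f for _, f in table]
    if force <= forces[0]:
        return table[0][0]
    if force >= forces[-1]:
        return table[-1][0]
    i = bisect_left(forces, force)  # first entry with force >= target
    (d0, f0), (d1, f1) = table[i - 1], table[i]
    return d0 + (d1 - d0) * (force - f0) / (f1 - f0)


def duty_schedule(force_curve):
    """Map a sampled force curve (one value per PWM period) to duty cycles."""
    return [duty_for_force(f) for f in force_curve]
```

Calling `duty_schedule([0.5, 1.5, 3.0, 1.0])` would give one duty value per PWM period. A real driver would also have to account for plunger position, coil heating, and supply voltage, which this sketch ignores.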

Outstanding. Hackaday, y’all need to get this guy on for an interview.

Very neat. But can it play these 1+ million note songs?

Does it even need 1+ million notes?

https://www.youtube.com/watch?v=zqan54VUSq0

It doesn’t do Jazz, if you disagree you just don’t get what Jazz really is. There is more to live music than what can be captured in the sense that once it is recorded it is no longer live and the next note in the sequence is predictable.

What if you combined the ability to do subtle variations akin to live performances with the efficiency of direct machine control? It would be inherently unpredictable and yet still, if done right, sound really amazing. Not really the same as just playing back a prerecorded set of notes, although that is basically what a live human performance is when you break it down to a fundamental level anyway.
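A rough sketch of what "subtle variations" on top of machine control might look like: take a fixed note list and add small random offsets to each note's timing and velocity. The `Note` type, the `humanize` function, and the default jitter sizes are all hypothetical choices made for illustration, and this is far simpler than anything a real performance model would do.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Note:
    pitch: int        # MIDI note number
    onset_ms: float   # start time in milliseconds
    velocity: int     # MIDI velocity, 1..127


def humanize(notes, timing_sd_ms=8.0, velocity_sd=5.0, seed=None):
    """Return a copy of `notes` with Gaussian jitter on onset and velocity.

    The standard deviations are guesses, not measured from any pianist.
    Passing a seed makes the result repeatable.
    """
    rng = random.Random(seed)
    varied = []
    for n in notes:
        onset = max(0.0, n.onset_ms + rng.gauss(0.0, timing_sd_ms))
        velocity = min(127, max(1, round(n.velocity + rng.gauss(0.0, velocity_sd))))
        varied.append(Note(n.pitch, onset, velocity))
    # Jitter can reorder closely spaced notes, so sort by the new onsets.
    return sorted(varied, key=lambda n: n.onset_ms)
```

Without a seed, every call gives a slightly different rendition of the same score, which is unpredictable in the narrow sense described above, though it is obviously not improvisation.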

The interaction between jazz players in a live performance borders on the psychic/telepathic, and I say that as a person who is not inclined at all toward magic thinking.

I’m not sure we even understand the process well enough to be able to emulate it.

Neural Nets can really be used here. They are amazingly well suited to learning things we can’t quite formulate. Have a look: http://www.hexahedria.com/2015/08/03/composing-music-with-recurrent-neural-networks/

I see what you mean but that is off in a direction that doesn’t fully relate to the point I made about what makes jazz special. A NN still needs its inputs defined, but we can’t define those inputs when it comes to the interaction between human jazz performers. If we could, an AI could play along with them and even take the lead without the piece going off the rails. I think we will get there in the end because it is ultimately a problem with defining the inputs and the complexity of the process, but we are still a long way away from that level of sophistication.

I think what Dan is defining here is General Artificial Intelligence. So in that sense, no, a NN is not there yet, but to most people a NN can sound a lot like jazz.

“It doesn’t do Jazz, if you disagree you just don’t get what Jazz really is.”

In other words, if you get to choose the definition of ‘Jazz’, then you can make it so by definition. Your anthropocentrism will one day be obsolete.

But maybe I’m going too far – you’re not saying it’s not Jazz unless there is a human involved, exactly. But if the “live” element is essential, this must mean that there is feedback required, and therefore it is a closed-loop process rather than the open-loop process that this robot appears to use. But that’s just a matter of adding sensors and improving the software.

Unless you can define what consciousness is, you can’t really say that a machine can’t have it.

Eventually…

The real question is why would we want a robot that can play jazz? Surely only other robots would want to go hear/see another robot playing robot jazz? This just seems more like a scientific and technological experiment at this point, though I can see how the technology (especially if it were based on neural networks) could be used for something actually useful or helpful for people or help us study and understand how the human brain works when improvising for example.

I’m sure soon enough someone will make a neural network so it can play authentic live jazz. Probably a couple years out though.

Sure eventually, but it is one of the pinnacles of human achievement and something every African American should be proud of.

Should all black people be proud of what a few did in New Orleans? Even if they don’t like jazz? Should all white people or all gay people be proud of Liberace?

How about all Americans be proud of the music created by Americans. That is a lot less divisive.

No, Liberace is something to feel deep shame about.

Yeah, jazz is the notes you don’t hear, lmao. Nope, Dan, it probably can. Just have it strike every 8th note it plays wrong, have it go off on wild tangential parts that are not in the original key, and maybe a volt starve to mimic heroin use. I used to just layer piano and drum voices on top of each other to generate instant jazz. You would be surprised at how well it works.

The very fact that one can define jazz means there are elements that are standard and therefore predictable ;)

LOL, nasty snark is nasty, but seriously, aren’t you at risk of judging their ability against your own ignorance of what they are really doing?

https://www.youtube.com/watch?v=ARvagv1FPoE

King cool.

Check out the last 15 min or so, they go out on a huge high.

The turn of the last century vorsetzers were in a much more elegant case. This is just ugliness next to a Steinway.

This, like all player-piano technology, is cheating. It’s playing the piano with 88 fingers. That’s not how humans play. If you really want to claim that you’ve made a robot piano player that plays “like a human,” then you need to make one with the same limitations – 10 fingers in groups of 5 with maximum separations between adjacent fingers and between the groups.
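As a rough illustration of that constraint, here is a minimal sketch that checks whether a set of simultaneous notes could be covered by two hands of five fingers each. The function name, the 14-semitone span limit (about a ninth), and the assumption that the hands never cross are all mine, and it ignores the separation limits between adjacent fingers entirely.

```python
def playable_by_two_hands(pitches, max_fingers=5, max_span=14):
    """Check whether simultaneous MIDI pitches fit two non-crossing hands.

    Each hand may hold at most `max_fingers` notes spanning at most
    `max_span` semitones. Both limits are illustrative assumptions.
    """
    notes = sorted(set(pitches))

    def fits(hand):
        # An empty hand always fits; otherwise check note count and reach.
        return len(hand) <= max_fingers and (not hand or hand[-1] - hand[0] <= max_span)

    # Try every split point: low notes to the left hand, the rest to the right.
    return any(fits(notes[:k]) and fits(notes[k:]) for k in range(len(notes) + 1))
```

A player-piano arrangement could be run through a check like this chord by chord to flag passages that no single pianist could play.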

Player piano rolls have traditionally been “programmed” with super-human playing abilities – too many simultaneous notes for a human player – in some cases even too many for “four hands” playing (which is a thing – Pictures at an Exhibition has been arranged for piano-four-hands. It may have even been originally composed that way. I don’t remember). It’s nice and all, but it’s no better than composing for MIDI.

If you can play every note at once, you also have the ability to play like a human as well. That’s not really cheating, that’s just how it works.

In practice, though, many if not most player piano rolls back in the day didn’t limit themselves to merely reproducing human performances. They were edited and augmented in ways that made them impossible to manually reproduce.

There were probably people complaining that playing piano with four hands was also cheating. But if that’s acceptable, then three people sitting at each of three piano keyboards gives you 90 note capacity, with humans. You can do it like a bell choir, with each person playing only their ten notes, so hand spans become a non-issue as well.

I’ve been in a group that had 3 people on a small, electronic piano/synth and 5 people on a 2-layer organ.

Checkmate?

Player piano rolls ARE programmed. They’re programmed by a super-human who makes certain embellishments — his name is Rudy Martin.

Rudy uses an Apple II+, BTW.

It sounds like you guys are saying there is ONE piano roll producer left in the world.

More like half a dozen arrangers. Doug Henderson comes to mind. Making piano rolls is, I would say, a cottage industry, with some of the duplicating equipment being located in the homes of people like the Malones of Turlock, California.

I don’t mean to dis the guy’s work, it’s a beautiful machine, but it’s not that hard if your source material includes all the data necessary to reproduce the keystrokes. Call me when you can hand it a sheet of music and the output is indistinguishable from a human player.

On the other hand, it opens the doors to piano music that can have more than 10 (finger) events per beat

That basically isn’t how sheet music works….

It would also have to listen to recordings of the piece, decide how to play it, then use the sheets to create a meaningful version of the song.

“It’s not that hard” – LOL!

Sheet music isn’t MIDI.

Disklavier system playing some incredibly fast music transcribed from a performance by Jordan Rudess of Dream Theater:

https://www.youtube.com/watch?v=z85Y-ch-enA

And contrary to the impressive Disklavier performance of Rudess shown, Disklavier can play with dynamics as well.

Our local Yamaha dealer showed up as a surprise at my father’s funeral with an upright Disklavier playing a recorded performance of my dad. Not a dry eye in the house.

Yeah this is just a transcription, not a recording. I don’t think they’d be able to accurately transcribe the individual velocities of every note for an accurate Rudess reproduction.

Good for you for transcribing that!

https://youtu.be/xgZhiYff7nM

I can understand this being a useful tool for studio _recording_ of live piano in the absence of a human pianist. But honestly at this point I hope only robots would be keen to go see a robot actually perform live for the musical/performance rather than technological value. That being said it doesn’t even perform yet – it just spits out a human’s previous performance so I’m just trying to understand which niche this fits into currently – apart from being a nice piece of engineering!