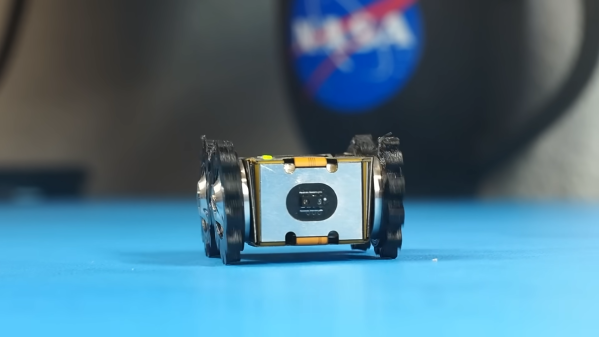

Not every robot has to be big. Sometimes, you can build something fun that’s better sized for exploring your tabletop rather than the wastelands of Mars. To that end, [philosiraptor] built the diminutive PITANK rover.

As you might guess from the name, the rover is based on the Raspberry Pi Zero 2. It uses the GPIO pins to output PWM signals, commanding a pair of servos that drive the tracks on either side of the ’bot. The drivetrain and chassis are made from 3D-printed components. Control is handled via a web interface, which [philosiraptor] coded in C# to be as responsive as possible. The ’bot also carries a camera that provides a live video feed, so you can see where you’re driving.
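If you’re curious how a two-servo tracked drive like this typically turns driving commands into PWM, here is a minimal Python sketch of the usual mixing step. It is not [philosiraptor]’s code, which is written in C#. Everything in it is an assumption for illustration: the function names are hypothetical, the servos are assumed to be continuous-rotation types with a 1500 µs neutral pulse and a ±500 µs range, and the right-hand servo is assumed to be mounted mirrored so its direction must be flipped.

```python
# Illustrative sketch only: names and pulse values are assumptions,
# not taken from the PITANK project.

def clamp(value, low=-1.0, high=1.0):
    """Limit value to the range [low, high]."""
    return max(low, min(high, value))


def mix_tracks(throttle, steer):
    """Turn throttle/steer commands (-1..1) into left/right track speeds (-1..1)."""
    left = clamp(throttle + steer)
    right = clamp(throttle - steer)
    return left, right


def speed_to_pulse_us(speed, neutral_us=1500, span_us=500, reverse=False):
    """Map a track speed (-1..1) to a servo pulse width in microseconds.

    Assumes a continuous-rotation servo: neutral_us stops it, and
    neutral_us +/- span_us is full speed in either direction.
    """
    if reverse:
        speed = -speed  # mirrored servo spins the opposite way
    return int(round(neutral_us + clamp(speed) * span_us))


def drive_pulses(throttle, steer):
    """Return (left_pulse_us, right_pulse_us) for the two track servos."""
    left, right = mix_tracks(throttle, steer)
    return speed_to_pulse_us(left), speed_to_pulse_us(right, reverse=True)


if __name__ == "__main__":
    for command in [(0.0, 0.0), (1.0, 0.0), (0.5, 0.0), (0.0, 1.0)]:
        print(command, drive_pulses(*command))
```

On real hardware, the two pulse widths would then be handed to whatever PWM library drives the GPIO pins, usually at the standard 50 Hz servo frame rate. Keeping the mixing as pure functions means it can be tested on a desktop without a Pi attached.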

Given its low ground clearance and small size, you’re not going to go on big outdoor adventures with PITANK. However, if you wish to explore a nice flat indoor environment, its simple tracked drivetrain should do nicely. We’ve featured a great many rovers over the years; if you’ve got a particularly special one, don’t hesitate to notify the tipsline!