The review embargo is finally over, and we can share what we found testing the Nvidia Jetson TX2. It’s fast. It’s very fast. While the intended use for the TX2 may be a bit niche for someone building one-off prototypes, there’s a lot of promise here for some very interesting applications.

Last week, Nvidia announced the Jetson TX2, a high-performance single board computer designed to be the brains of self-driving cars, selfie-snapping drones, Alexa-like bots for the privacy-minded, and other applications that require a lot of processing on a tight power budget.

This is the follow-up to the Nvidia Jetson TX1. Since the release of the TX1, Nvidia has made some great strides. Now we have Pascal GPUs, and there’s never been a better time to buy a graphics card. Deep learning is a hot topic that every new CS grad wants to get into, and that means racks filled with GPUs and CUDA cores. The Jetson TX1 and TX2 are Nvidia’s push into embedded deep learning: devices that need a lot of processing power without sucking batteries dry.

Wading Into High-End Single Board Computers

Before diving into this review, it’s a good time to place Nvidia’s embedded offerings in a historical context. The Jetson TK1 was the first offering, launched in April of 2014. While this is still a capable single board computer, there are cheaper options now that are almost as good. If you don’t need the Kepler GPU found in the TK1, just grab a Pi or BeagleBone.

The Nvidia TX1 launched in November 2015. This board was a marked departure from the TK1. The TX1 is a credit card-sized module strapped to a heatsink. At the time, the TX1 was the best high-performance embedded Linux device you could buy. With a powerful quad-core ARM Cortex-A57 CPU coupled with a Maxwell GPU, the performance was great. Even today, the Nvidia TX1 has acceptable performance compared to its competition.

Shortly after the introduction of the TX1, Pine64 — the “world’s first 64-bit single board computer” — launched on Kickstarter. The release was a disaster and I can’t recommend a Pine64. A few months after the Pine64, the Raspberry Pi 3B was released, sporting a quad-core ARM Cortex A53. The Pi 3B is the first Pi that feels like a proper desktop computer. It’s fast enough for general computing and good enough for (light) heavy lifting.

In March 2016, the Odroid C2 came on the scene. Like the Pi 3B, it sported a quad A53. Again, it’s a passable desktop computer that is fast enough for general computing. Late last year, Orange Pi released its cattywampus PC2, another quad-core A53 single board computer. All of these are acceptable single board computers whose performance would have astonished people in the year 2000.

For about 18 months, the world saw the release of dozens of ARM-based single board computers. Now we’ve pretty much reached the limit of what a small, low-power ARM Linux board can do. In a rare interview discussing the future of the Raspberry Pi, [Eben Upton] says we’re stuck at 40nm chips for a while. Until newer, faster chips with new architectures are available (and cheap), these are the fastest ARM/Linux single board computers you can buy.

These single board computers are great if all you need is a computer capable enough to handle a few scripts, serve up a few web pages, or play a few YouTube videos. If your use case involves video games, rendering video, or machine learning, you’ll need something more powerful. This is why the Nvidia Jetson TX1 and TX2 exist. Is the TX2 as fast as a desktop loaded up with an i7 and a GTX 1080? No, but that’s not the point: a desktop built around an i7 6700K and a GTX 1080 will draw at least 300 Watts, whereas the Jetson TX2 draws only fifteen at full bore.
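To put the 300 W versus 15 W gap in battery terms, here is a minimal Python sketch. The 100 Wh pack is a hypothetical figure, not something from the review, and the sketch assumes a constant draw with no converter losses; since runtime is just capacity divided by power, the 20x ratio holds for any pack size.

```python
# Battery-runtime comparison for the power figures quoted above.
# ASSUMPTIONS: a hypothetical 100 Wh pack, constant draw, no converter losses.

def runtime_hours(battery_wh: float, draw_w: float) -> float:
    """Hours a battery of `battery_wh` watt-hours lasts at a constant `draw_w` watts."""
    if draw_w <= 0:
        raise ValueError("draw_w must be positive")
    return battery_wh / draw_w


BATTERY_WH = 100.0  # hypothetical pack size, not from the article

for name, watts in [("Jetson TX2 at full load", 15.0), ("i7 6700K + GTX 1080 desktop", 300.0)]:
    hours = runtime_hours(BATTERY_WH, watts)
    print(f"{name}: {hours:.2f} h ({hours * 60:.0f} min)")
```

Under these assumptions the TX2 runs for roughly 6.7 hours and the desktop for about 20 minutes.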

The Jetson TX2

The Hardware

The TX2 is a tiny board bolted to a credit-card sized heat sink. That’s the heart of the TX2, but I suspect very few people will ever work with a bare TX2 module. I don’t even know if you can buy the TX2 module in one unit quantities. Instead of starting off with the module itself and the benchmarks therein, I’ll begin with the TX2 Developer Kit.

The Jetson TX2 Developer Kit is basically a Mini-ITX motherboard. That’s a great form factor for a dev kit, and follows in the footsteps of the Jetson TX1. Very little has changed between the TX1 and TX2 Developer Kits.

For anyone who is already using the Jetson TX1, the TX2 will be a drop-in replacement. Additionally, Nvidia will continue to support the TX1: they’re not EOL’ing it, and its price will come down. Depending on how big that price cut is, I would highly recommend the TX1 for anyone who needs a fast, low-power Linux system. Nvidia appears committed to the Jetson ecosystem, and if you ever need something faster, the ‘drop-in replacement’ promise of the TX2 awaits.

Here’s what you get on the carrier board:

- Storage
  - Full-size SD card, SATA connector
- USB
  - USB 3.0 Type A, USB 2.0 Micro AB
- Network / Connectivity
  - Gigabit Ethernet
  - 802.11ac WiFi 2×2 MIMO
  - Bluetooth 4.1
- Headers
  - PCIe x4
  - DSI (2×4 lanes), eDP x4 lanes
  - 6 CSI connectors
  - M.2 Key E connector
  - PCIe x1, SDIO, USB 2.0
  - I2C, I2S, SPI, UART, D-MIC
  - JTAG

Not much, if anything, has changed on the carrier board since the Jetson TX1. Since this is a Mini-ITX motherboard, I would have appreciated something other than a barrel connector and a brick power supply. A real 20 or 24-pin ATX power connector would have been overkill, but 6 or 8-pin PCIe connectors are small enough, and there’s space somewhere on the board for one. Maybe in a few years.

Even though this is a Mini-ITX-sized board, it’s still huge for any application where the Jetson makes sense. You can’t fit this board behind the head unit in a car, and it’s too big for a drone. Since the Jetson TX1 was released, at least one company has come out with a suite of carrier boards for this module. Connect Tech’s Jetsons-themed boards break out the most important bits for an embedded solution, although I have yet to see them in the wild.

On the bottom of the TX2 is a huge, confusing, and actually sourceable connector. If you want to build your own breakout board for the TX1 or TX2, all you need to do is go over to Samtec and give them the part number SEAM-50-02.0-S-08-2-A-K-TR. This part shouldn’t cost more than $5.50 in quantity one. You’ll need a four-layer board to use it, but you can hand solder it. I eagerly await a Pi-top adapter for the Nvidia Jetson.

The Module & Software

That’s the Developer Kit, but what about the actual Jetson TX2?

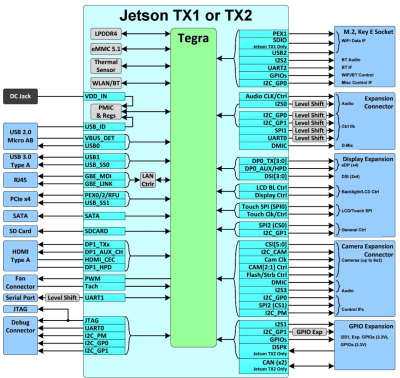

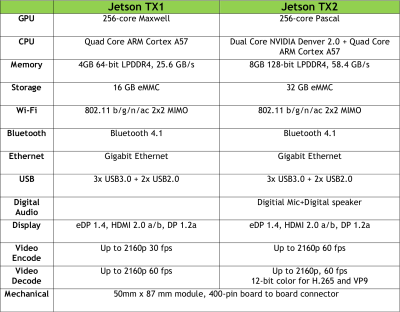

Compared to the Jetson TX1, the TX2 boasts twice as much RAM with more bandwidth, twice as much eMMC Flash, and can encode 2k video twice as fast. The CPU is a dual-core Nvidia Denver 2.0 and a quad-core ARM Cortex A57.

The Jetson TX1’s chip had a quad-core ARM Cortex A57 cluster and a quad-core A53 cluster on the die, but the A53 cores were not enabled on the Jetson. The TX2, on the other hand, puts both of its CPU clusters to work: a quad-core A57 that is reportedly good for multithreaded applications, and a dual-core Denver 2 that is meant for high-performance single-threaded applications.

In the last year, Nvidia released their latest line of GPUs. We should not be surprised the TX2 is built around the Pascal architecture. This is great — if you want to build a GPU cluster or play Counter Strike at eight thousand frames per second, the best bang for the buck is a Pascal-based GPU.

The Jetson TX2 has two power modes. The ‘Max Q’ setting maximizes energy efficiency; measured with a meter, it draws about 7.5 Watts. The ‘Max P’ setting is for maximum performance and draws around 15 Watts. In Max P mode, the performance is reportedly double that of the Jetson TX1. I was able to switch between these modes with a single command in the terminal.
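The review doesn’t name the command, but on L4T the power modes are managed by Nvidia’s `nvpmodel` tool (`nvpmodel -m <id>` selects a mode, `nvpmodel -q` reports the current one). The Python sketch below wraps it. The mode-ID table is an assumption based on the TX2 entries in L4T’s documentation, so check `/etc/nvpmodel.conf` on your own board before trusting the numbers. Building the command is kept separate from running it, so the mapping can be checked on any machine.

```python
import subprocess

# ASSUMPTION: TX2 mode IDs as listed in L4T's nvpmodel documentation.
# Verify against /etc/nvpmodel.conf on your board; IDs differ between Jetsons.
TX2_MODES = {
    "max-n": 0,  # all cores at their highest clocks
    "max-q": 1,  # efficiency mode (the ~7.5 W setting in the review)
    "max-p": 2,  # assumed Max-P variant with all CPU cores enabled
}


def nvpmodel_command(mode_name: str) -> list[str]:
    """Build, but do not run, the command that selects a named power mode."""
    key = mode_name.lower()
    if key not in TX2_MODES:
        raise ValueError(f"unknown mode {mode_name!r}; expected one of {sorted(TX2_MODES)}")
    return ["sudo", "nvpmodel", "-m", str(TX2_MODES[key])]


def set_power_mode(mode_name: str) -> None:
    """Switch modes, then print the active one; only works on a Jetson with nvpmodel."""
    subprocess.run(nvpmodel_command(mode_name), check=True)
    subprocess.run(["nvpmodel", "-q"], check=True)  # query the active mode
```

For example, `nvpmodel_command("Max-Q")` returns `['sudo', 'nvpmodel', '-m', '1']`.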

A word about the gigantic heatsink on the TX2 module: When running benchmarks, the fan never turned on. The heatsink was only ever barely warm to the touch. I assume the TX2 is designed to be in the engine bay of a car, in Florida, in August.

Performance

Finally, the part you’ve all been waiting for. How fast is the TX2 compared to the competition? It’s very fast.

The CPU on the TX2 is a dual-core Nvidia Denver 2.0 coupled with a quad-core ARM Cortex A57. As stated above, the Denver cores are intended for fast single-core performance, whereas the A57 cluster is meant for parallel processes, though not so parallel that a GPU would be a better solution. That’s what the Pascal GPU with 256 CUDA cores is for. Compared to the TX1, memory size and bandwidth are doubled.
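One practical consequence of the split CPU is that you can steer work to the cluster that suits it. Below is a minimal Python sketch using Linux CPU affinity (`os.sched_setaffinity`). The core numbering is an assumption: on TX2 boards the Denver 2 cores are commonly reported as logical CPUs 1 and 2 and the A57s as 0 and 3 to 5, but check `lscpu` or `/proc/cpuinfo` on your own board, since some power modes take the Denver cores offline.

```python
import os

# ASSUMPTION: TX2 logical CPU numbering; verify with `lscpu` on your board.
DENVER_CPUS = {1, 2}       # dual-core Denver 2: single-threaded work
A57_CPUS = {0, 3, 4, 5}    # quad-core Cortex-A57: multithreaded work


def pick_cpus(workload: str, online: set[int]) -> set[int]:
    """Prefer Denver for "single" workloads and the A57s for anything else.

    Only CPUs in `online` are returned; if none of the preferred cluster is
    available (e.g. Denver disabled by the power mode), fall back to all of them.
    """
    preferred = DENVER_CPUS if workload == "single" else A57_CPUS
    return (preferred & online) or set(online)


if __name__ == "__main__" and hasattr(os, "sched_setaffinity"):  # Linux only
    allowed = os.sched_getaffinity(0)        # CPUs this process may use now
    chosen = pick_cpus("single", allowed)
    os.sched_setaffinity(0, chosen)          # pin the current process
    print("pinned to CPUs", sorted(chosen))
```

From a shell, `taskset -c 1,2 ./your_program` does the same pinning for a whole program.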

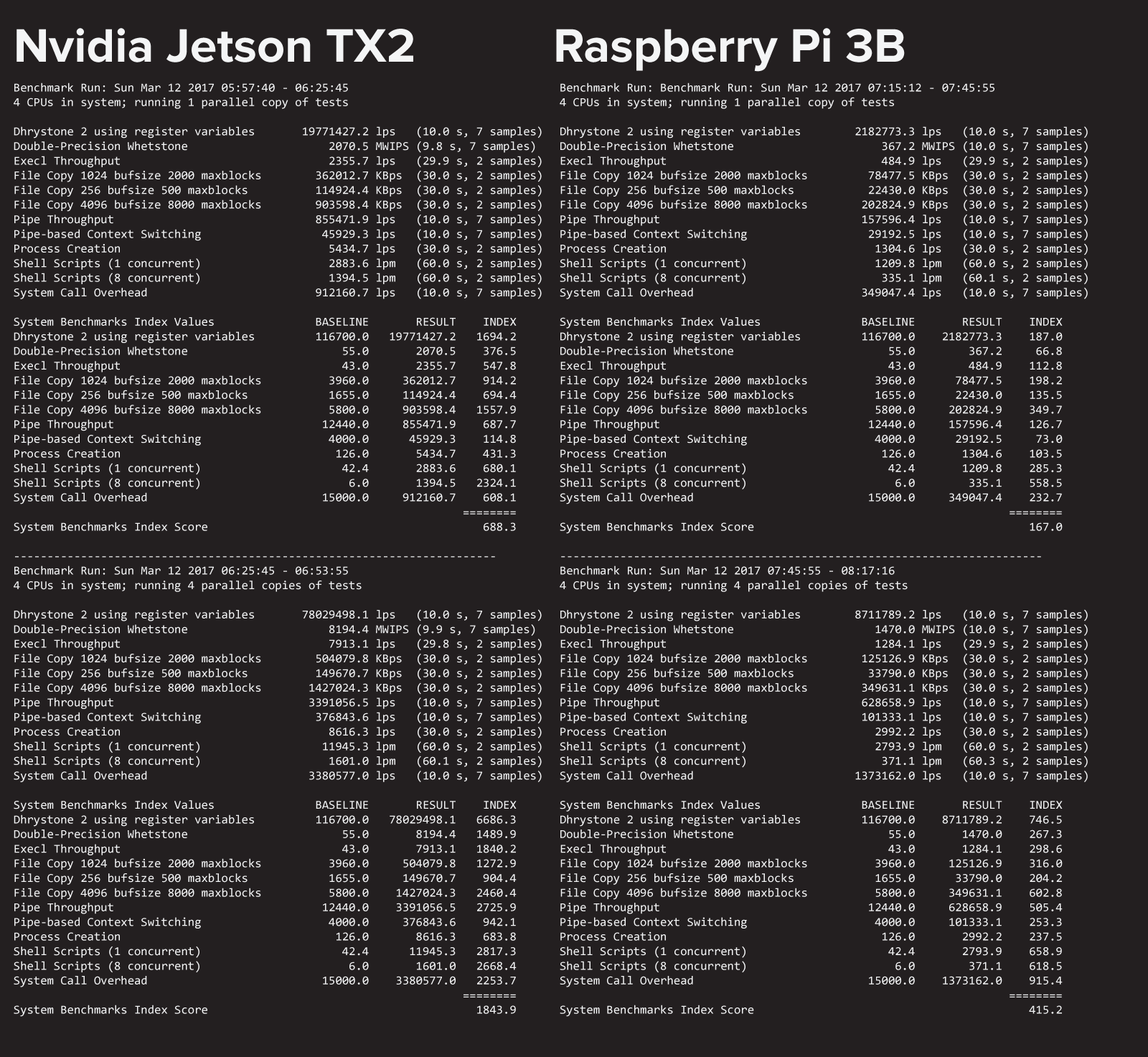

I used Unixbench to characterize the CPU on the TX2 and the Raspberry Pi 3 Model B. The results are below:

What’s the takeaway on this? In synthetic benchmarks testing the CPU, the Nvidia Jetson TX2 is about four times as fast as the Raspberry Pi 3. It’s fast as hell. I sincerely can’t wait for someone to 3D print a GameCube enclosure for this thing.

Comparing the performance of the TX2 to other single board computers is a bit harder. I wouldn’t trust a self-driving car controlled by a Raspberry Pi; the performance simply isn’t there. Testing a self-driving car powered by the Jetson TX2 is also out of the question.

Giving you an idea of the performance of the TX2 when performing image-heavy tasks is actually pretty hard. Luckily, Nvidia included a few VisionWorks examples in the review package.

With VisionWorks, the Jetson was able to identify features relevant to driving across the Golden Gate Bridge. It was able to use parallax to build a point cloud of a parking lot. The Jetson TX2 was stabilizing video in real time. A laptop could do this, but a Pi couldn’t.
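The review doesn’t describe how VisionWorks builds its point cloud, but parallax-based depth rests on a simple relation: for two viewpoints a baseline B apart, a feature that shifts by d pixels between the images lies at depth Z = f·B/d, where f is the focal length in pixels. The Python sketch below applies that textbook pinhole-stereo formula to single matched features. The camera numbers are hypothetical, and a real pipeline also has to detect, match, and calibrate, which this skips.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Pinhole stereo relation Z = f * B / d, returning depth in metres.

    focal_px:     focal length expressed in pixels
    baseline_m:   distance between the two viewpoints, in metres
    disparity_px: horizontal shift of the same feature between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero means the point is at infinity")
    return focal_px * baseline_m / disparity_px


# Hypothetical camera: 700 px focal length, viewpoints 0.12 m apart.
for d in (84.0, 21.0, 4.2):
    print(f"disparity {d:5.1f} px -> depth {depth_from_disparity(700.0, 0.12, d):5.1f} m")
```

With these numbers a 1 px matching error barely matters for the point at 1 m but moves the 20 m estimate by several metres, which is why parallax depth gets noisy with distance.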

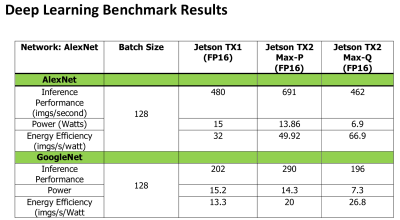

But not all Deep Learning is playing with a camera; in the benchmarks released by Nvidia, the TX2 is almost twice as fast as the TX1 at GoogleNet inference. For AlexNet inference, the TX2 performs better and uses less power.
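Those two claims are different kinds of win: more throughput, and better results for less power. One metric that captures both is images per joule, meaning throughput divided by average power. The Python sketch below is a generic way to compare two boards on that basis; the inputs in the usage lines are hypothetical round numbers, not Nvidia’s published figures.

```python
from dataclasses import dataclass


@dataclass
class InferenceRun:
    name: str
    images_per_sec: float  # measured inference throughput
    watts: float           # average board power during the same run

    @property
    def images_per_joule(self) -> float:
        # (images/s) / (J/s) = images per joule of energy
        return self.images_per_sec / self.watts


def efficiency_ratio(new: InferenceRun, old: InferenceRun) -> float:
    """How many times more images per joule `new` delivers than `old`."""
    return new.images_per_joule / old.images_per_joule


# Hypothetical numbers: same throughput at half the power is a 2x efficiency gain.
board_a = InferenceRun("board A", images_per_sec=100.0, watts=10.0)
board_b = InferenceRun("board B", images_per_sec=100.0, watts=5.0)
print(efficiency_ratio(board_b, board_a))  # 2.0
```

Doubling throughput at the same power gives the same 2.0 ratio, which is why images per joule is a fairer comparison than raw speed for battery-powered designs.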

AI At The Edge

Nvidia’s marketing wank for the Jetson TX2 is ‘Deep Learning At The Edge’. What does that mean? The future will be full of robots running OpenCV, cars avoiding people automatically, and Alexa-like voice AIs that do all their natural language processing locally. These applications are collectively referred to as Deep Learning. ‘The Edge’, in this metaphor, means environments where network latency and bandwidth are issues. For a self-driving car, there may not even be a network to send data back to a server for processing. If you don’t want your Alexa bot sending audio recordings back to a server for privacy reasons, you need to do processing locally.
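A quick way to see why latency matters at ‘the edge’ is to ask how far a car travels while it waits for an answer. The Python sketch below does that arithmetic. The 100 ms round trip to a remote server and the 20 ms on-board processing time are hypothetical illustrations, not measurements from the review.

```python
def metres_travelled(speed_kmh: float, latency_ms: float) -> float:
    """Distance covered at a constant `speed_kmh` while waiting `latency_ms`."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0
    return speed_m_per_s * latency_ms / 1000.0


SPEED_KMH = 100.0  # highway speed
for label, latency_ms in [("remote server round trip (hypothetical)", 100.0),
                          ("on-board processing (hypothetical)", 20.0)]:
    print(f"{label}: {metres_travelled(SPEED_KMH, latency_ms):.2f} m travelled")
```

At 100 km/h that works out to roughly 2.8 m versus 0.6 m, and the remote figure assumes a network is available at all.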

The Jetson is designed to put a lot of processing power at ‘the edge’, in applications that have a power budget. This is embedded deep learning. Is a desktop CPU faster than a Jetson at deep learning tasks? Of course, but a desktop CPU is going to draw 60 Watts — the Jetson TX2 only draws fifteen. If your project or product revolves around having a laptop tucked away somewhere, you now have a replacement that’s smaller, potentially faster, and draws less power.

The Takeaway

If you want to build a GameCube emulator, the TX2 is not for you. If your idea of innovation is 3D printing a RetroPie enclosure, the TX2 is not for you. This is not a toy. This is an engineering tool. This is a module that will power a self-driving car, or a selfie-capturing quadcopter. These are hard engineering problems that demand fast processing with a low power budget.

There’s a reason the TX2 Developer Kit is expensive. The market for a device like this is tiny compared to the bushel of Pi Zeros at Microcenter. However, there is no other tool like this. If you need a fast CPU that only draws fifteen Watts, I’m not aware of a better option.

“[Eben Upton] says we’re stuck at 40nm chips for a while”. Does he indeed… https://www.qualcomm.com/products/snapdragon/processors/835

I can’t tell if that chip has even been released yet. And when it is, it will be a top-of-the-line smartphone chip. The whole point of boards like the RPi is that they can do stuff for a relatively low price.

I thought the BCM chip on the Pi was a (sometime back) top-end smartphone chip too…

The original Pi? It was never a high end chip, and smart phones back then didn’t cost what they do now. I don’t know about the more recent models, but considering their low price they obviously don’t use bleeding edge chips.

It was a media chip originally targeted at media players and PND’s.

How much does it cost?

“In a rare interview discussing the future of the Raspberry Pi, [Eben Upton] says we’re stuck at 40nm chips for a while. Until newer, faster chips with new architectures are available (and cheap), these are the fastest ARM/Linux single board computers you can buy.”

According to the Google the Jetson TX2 dev kit costs ~600USD + postage. I saw Jetson TX1s for ~200USD so for those of us who don’t need the most bang per watt or bang per cubic inch or bang per gram it would seem that a TX1 would be an economical way to get the hang of programming the thing in OpenCL and then upgrade later once you had a target app in mind.

I don’t know about you folks, but I take issue with the “this is not a toy, it is a serious engineering tool” quip. I have always found that serious engineering tools make the most satisfying toys (especially when put to silly uses of the “for the hell of it / because I can” variety). Plus, since my day job is very niche (writing device drivers for custom hardware and system-level profiling and optimization at the software/hardware boundary) at an enterprise networking solution vendor, if I want to keep my skills current and well rounded, the best thing I can do is buy things like this as toys and then see how far I can push them or what whimsical application I can find to put their strengths to use.

Of all publications to make such a snooty assertion I would have expected hackaday to be the last. I am certain I am far from alone among hackaday readers in regarding the dividing line between toys and serious engineering tools nonexistent (or very fuzzy at best).

You are certainly not the only one, however you are more of an exception than the norm.

Some people have $600 toys, but usually they aren’t bought on a whim, whereas a $35 RPi can be bought on a whim as a toy.

If you think having a tool for learning and experimenting is fun, then this is probably a very fun toy.

Some people do buy $100,000 cars as a toy. The number of people who do that is fairly limited though but I can assure you that some people absolutely can and do so. Even Bill Gates has talked about periods of time where he bought all sorts of neat stuff just for the fun of it. Cars, computer screens, rather unique sets of items for his home.

The desire for neat toys is fairly universal. The ability to actually do so is not, which is why by numbers, most people buy the $35 RPi units. There is no one best way of doing this though.

First off, take a chill pill. If a snooty comment annoys you, you should leave the internet. Now.

Second, it’s not a snooty comment. It implies that it is a very powerful device, much more powerful than the applications mentioned previously require. Just because something is not a toy doesn’t mean it can’t be used as one… I have a sports car, not to get from point A to point B, but to go out and have fun. I treat it like a ‘toy’. It is not a toy. It is a 320hp automobile stuffed with enough computers to control thousands of explosions per second, analyse real-time telemetry to individually control the speed of all four tires should I do something stupid, and make sure the cabin is a comfortable 20 degrees so I am comfortable as it tries not to let me kill myself.

You are welcome to spend the $600 and have it sort M&Ms or whatever else you’d like to do; however, the point is, it’s extremely powerful and can be used for very intensive applications.

The difference between Men and Boys is the price of their toys.

I saw some of the modules coming out that are $200 per unit and have similar specs in even smaller frame. http://linuxgizmos.com/tiny-quad-a17-module-has-4gb-ram-and-hdmi-2-0/

The Raspberry Pi may well be stuck at 40nm, since they’re reliant on custom-designed Broadcom chips made specifically for them which probably don’t sell enough to justify the cost of smaller process nodes, but it’s certainly not true of small, low-power ARM Linux boards in general. For example, the now 18-month-old Orange Pi PC uses a 28nm SoC.

Was also going to comment this, I’ve got a banana pi M1 using the Allwinner A20 released in 2012 that uses 40nm, but the H3 in more modern boards is 28nm. Think we’ll see the 14nm jump around the same time as cortex A73s become common in cheaper boards.

The first two versions of RPi did not use custom-designed chips; they were processors already in use in the cellphone market. The RPi3 is a custom spin, but I’d wager it’s not far from other offerings Broadcom has for a wider market (either set-top box or smartphone but I suspect the latter).

The issue with smaller fab processes is rising cost (harder to manufacture, lower yield, etc.). It makes sense to push those boundaries for high margin products but STBs are generally designed to an extremely strict price point.

Um, that’s a Qualcomm chip. Not relevant given the tight coupling between the Pi Foundation and Broadcom.

I guess Broadcom is stuck at 40nm? Qcom has been well ahead of 40nm for quite a few years.

Hmmmm, a 1050ti with three times the cores is $140, without a “real” power figure given, just listed as the PCIe standard of 75W. NVidia is shy of letting you find out the max sustained clock speed of the TX2, because yeah, you can probably tune down a 1050ti for equal power/efficiency. At standard clock it’s probably getting on for 1.5-2x as fast at least. Packaged as a consumer GPU, it’s about as big as the GPU portion of that board; all you need is your own small Mini-ITX or similar board (hey, you can do this in x86) with PCIe.

Fairly sure power is scaling about the same, this will be 7.5W at idle for 3x the cores running, then hitting an average of 70W full load from hardware review sites, when you’ve got at least 1.5*3*15W=67.5W for the TX2 equivalence.

..except your calculations for equivalence appear to only compare TX2 to a GPU – the TX2 contains more than just that (there are several ARM cores, a chunk of memory, an assortment of peripherals, etc.) – you really should be comparing the TX2 to a GPU, motherboard and CPU combo. The closest equivalent in the desktop world would be AMD’s APU line (integrated CPU+GPU into the same die) if you were to also consider the motherboard along with it.

I’m regarding that as phone class hardware that costs you a watt or two….

What I keep hearing about these TXs is that they will be used several at a time, 5 units, 7 units etc… and I’m not sure you really need more than one CPU controlling the whole, it’s just there to parcel out work to the GPUs and do some i/o, so if you need the oomph of 5 TX2s, you could replace them with 2 1050tis, both running off the same host, and they wouldn’t need to run full tilt, landing them in a good power efficiency region, so you’d probably end up with the same or less overall consumption. Unless it’s all about redundancy, but still, if you’re talking of many units, then two or three 1050ti-based units give you some too.

I see it more of a very high-end tablet rather than phone, given the feature list, and if the CPUs were only expected for basic data management then they could get away with something a lot smaller such as the ARM A53 (while the die space of an A57 isn’t that significant compared to the GPU, when there’s 4 of them, plus the Denver unit, it soon adds up and die-cost is king so you don’t normally fit things unless you really need them).

Now, that’s not to say that all applications will make full use of it, such as what you’re suggesting with multiple units (where they could get away with little more than a DMA engine rather than a core), but it strikes me that Nvidia has made a more general purpose module (that would typically be used on its own as it contains “everything”) and in a small package (which the desktop world is not so constrained by).

But it’s not *that* small a package, is part of my point: a 1050ti doesn’t have to be in a desktop case, it’s a module smaller than the ITX-size TX2 dev board and can be stripped of its bracket etc., then hooked up to a sub-$100 Hummingboard that’s 80x100mm ish….. now the minimal power you could run that combo at might be 20W, but at that you’re probably getting more GFLOPS than the TX2 already…. low-end power scaling is meh, but as soon as you need more than a single TX2, win, and it’s a win at being less than half the price to screw around with if you can deal with it being a bit thirstier.

you’re forgetting form factor and heat tolerances.

tx2 is size of credit card x 2″ height. the breakout board is mini itx, not the tx2 itself. this is designed to sit in a car or robot or quadcopter etc…

the 1050ti is designed to sit in a motherboard and on its own can’t do anything. it requires a cpu paired to it to achieve anything.

and let’s pretend for a second that nvidia makes both the tx2 and the 1050ti ;)

don’t you think they would be able to engineer around their pascal architecture to fit a design goal?

they did do the hard part of engineering the gpu itself, and now you’re trying to out-think the same people on what would work best for a small low power device to put it in and how many cores would be optimal power-wise?

The design goal of this might be several hundred percent markup. My point will probably become much more obvious when the 1020GTX basic desktop low low end unit appears in best buy at $39.99 or something (Whatever they call what will go below 1050) which may have close to TX2 number of cores,

1050ti does not have to be in a PC motherboard, you can as I stated hook it up to any small board with PCIe like a hummingboard. The standard size cards with this aren’t super long full size cards, they’re 5×7 postcard size and you can strip them down, or buy the low profile version for half that size… http://www.thumbsticks.com/gtx-1050-ti-low-profile-announced-23112016/

Even cooling could be less of a problem for use in low production, because you’ll be able to get all kinds of active, passive and heatpipe aftermarket ones that bolt on the 1050ti, and mod them as needed. TX2 it’s custom from scratch everything.

Maybe cubesats with facial recognition to drop a penny on a target from orbit will be so limited in space and power this board makes sense, I’m trying to say it’s just way cheaper for hacker screwing around to do it this way and there’s a bajillion applications where double the watts and double the space won’t matter, especially at such a huge discount. Also when you need more gflops than the TX2 offers, this is the upgrade for less cash.

Did you read the article? It repeatedly states that the TX2 draws 15 watts maximum.

It also states:

“Even though this is a Mini-ITX-sized board, it’s still huge for any application where the Jetson makes sense. You can’t fit this board behind the head unit in a car, and it’s too big for a drone.” – referring to the dev kit board.

Aerial drone makes sense, but I keep hearing multiple units for land systems. Whereupon, all those 15W add up to same as cheaper consumer GPU.

But me not make sense, heh, meant your drone argument made sense, but…

The most common use case thus far has been in cars, where there is 1 TX2. (Tesla’s new autopilot runs on the TX2) Cars aren’t really “low power” but they definitely would notice 300 watts of a desktop compared to 15….

Don’t need a whole desktop: scaling of a 1050ti plus a board to run it would be something like 20W at less than half the price for the exact same GFLOPS as the TX2, through to 4 or 5 times the performance at a consumption potentially 10% lower when multiple TX2s might otherwise be wanted, in pretty much the same amount of space.

rw you assume scaling would net you 20 watts. i would bet nowhere near that. the pascal architecture is designed to be power efficient, which means it’s designed to save power at higher workloads by shutting things off internally that don’t need to be running and ramping down clock speed whenever possible. if it’s 75 watts at full load you aren’t going to see scaling down to 20 while using it by simply dropping clock speed. the tx2 gets 15 watts and the gpu side is probably about 10-12 watts of that with 1/3 the core count and a lower clock speed than the 1050ti. lower the clocks on the 1050ti to match and you’ll have around 30-35 watts of draw just for the gpu alone. IT HAS 3 TIMES AS MANY CORES. it will have 3 times as much power draw. they are the same gpu cores and use different ram so it is going to be a bit higher even from ram power draw

You say I’m wrong, then go to explain how I’m right, the architecture shuts shit off when you need to save power. The cost of idling 2/3 of the cores appears to be 5W given 5W higher idle consumption.

Also, power consumption of GPU and CPU isn’t really linear: as it gains noise you have to raise volts to drown it out. Faster = more power per GFLOP, slower = less power per GFLOP, and there’s a direct correlation between clock speed and GFLOPS. Hence it is possible for 3 cores running at 250MHz to use less power than 1 core running at 750MHz, because they might only need 0.9 V whereas the one at 750 needs 1.05 V.
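The voltage argument above follows from the standard first-order model of CMOS dynamic power, P ≈ C·V²·f per core. The Python sketch below plugs in the commenter’s own example numbers (3 cores at 250 MHz and 0.90 V versus 1 core at 750 MHz and 1.05 V). It assumes identical switched capacitance per core and ignores static leakage, which grows with core count and is part of what the idle-power numbers in this thread reflect.

```python
def relative_dynamic_power(cores: int, volts: float, mhz: float) -> float:
    """First-order CMOS dynamic power, P ~ cores * C * V^2 * f.

    Switched capacitance C and activity factor are assumed identical per core
    and dropped, so the result is only meaningful as a ratio. Static leakage
    is ignored.
    """
    return cores * volts ** 2 * mhz


# The commenter's example: equal total throughput (3 x 250 MHz == 1 x 750 MHz).
wide_slow = relative_dynamic_power(3, 0.90, 250.0)
narrow_fast = relative_dynamic_power(1, 1.05, 750.0)
print(f"3 slow cores use {wide_slow / narrow_fast:.0%} of the single fast core's dynamic power")
```

Under this model the three slow cores need about 73% of the dynamic power for the same aggregate clock, entirely because of the V² term.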

The exciting thing to me is the PCIe Gen2x4 port off of which you could hang any number of data acquisition boards or an FPGA for any preprocessing you may want to do on your dataset prior to feeding it to the GPU (there are some tasks best suited to GPU solutions and some best suited to FPGA solutions, and many real-world problems contain sub-tasks of both varieties). This board having a respectable GPU and decently fast CPU plus the expandability to shovel ~2GB/s full duplex to and from a custom I/O or I/O + preprocessing FPGA board sounds powerfully flexible.
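The ~2 GB/s figure checks out for a PCIe Gen2 x4 link. Gen2 signals at 5 GT/s per lane and uses 8b/10b encoding, so 80% of the raw bits are payload. The Python sketch below does that arithmetic per direction. It ignores packet and protocol overhead, so real sustained throughput will be somewhat lower.

```python
def pcie_gen2_bandwidth_gbytes(lanes: int) -> float:
    """Per-direction PCIe Gen2 payload bandwidth in GB/s (10^9 bytes/s).

    5 GT/s per lane with 8b/10b encoding -> 4 Gbit/s of data -> 0.5 GB/s per lane.
    TLP headers, flow control and other protocol overhead are ignored.
    """
    transfers_per_s = 5e9          # Gen2 raw signalling rate per lane
    encoding_efficiency = 8 / 10   # 8b/10b: 8 data bits per 10 line bits
    bits_per_s = transfers_per_s * encoding_efficiency * lanes
    return bits_per_s / 8 / 1e9


print(pcie_gen2_bandwidth_gbytes(4), "GB/s per direction")
```

Because PCIe links are full duplex, that figure applies in each direction at once.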

I’ve been looking to get one of these, stick a network card into the PCIe slot and start doing some encryption/decryption acceleration and maybe some crypt-analysis. I’ve been curious about the concept of using OpenCL for crypto versus the accelerator cards I’m using now.

My ultimate goal is to build a portable FemtoCell that my cell phones would associate with, the device would encrypt the data, then forward the data onto a real cell tower.

That’s a really cool idea. Have you gotten any closer at achieving this feat?

Yah, if you are finally locked in to one algorithm then FPGA implementation will be faster, but for learning, general purpose crunching, and refining algorithms continuously then GPU is more appropriate.

In cryptocurrency mining, it went something like FPGA was 4x faster for half the power of higher end GPUs running openCL, then ASIC development banged that up by a factor of 10 or more. Not sure if you’d want to do everything in ASIC because FPGA still allows you to update periodically.

Too big for a drone?

Why not make the PCB the frame top/bottom and make an over-sized drone.

Might do that with scrapped EPIA-M10000 PCBs from old XN kit.

Will need to re-read, seems interesting so far…

Also you’d want to have heatpipes out to fins that just sat in lift rotor air instead of having fan.

ConnectTech and others already make carrier boards for the TX1 and TX2 modules. They are about the size of a credit card.

http://www.connecttech.com/sub/Products/graphics-processing-solutions.asp?l1=GPU

You could easily mount the module with one of these carrier boards on a drone, people do!

Just a note, that 2160p is 4k, not 2k.

(My opinion…not making a generalized statement about or for any group)

I wonder if Shenzhen is going to make copies of this. $600 for a dev board is not useful for anything I can think of. For $600 I could buy around 12 Raspberry Pi 3’s and play around with parallel processing which is something I’ve never done before.

Let me rephrase: What do I care about this? I’m guessing there will be no community support for makers. The cost is ridiculous. I could buy a very small ITX mainboard, Intel, and Nvidia GPU for that and have some serious processing power because I don’t care about the energy budget.

Energy budget seems to be the thing. And it’s the one thing I don’t care about.

>Energy budget seems to be the thing. And it’s the one thing I don’t care about.

Then you are not the target market.

Oh HaD gods (and Brian; “he’s not the messiah…”), does anyone have any plans for any future articles exploring a bit more of an introduction to getting your hands dirty with TX2/OpenCV?

I know there have been a few projects where OpenCV has been used, but it would be interesting to have a ‘beginners guide’/introduction to it; as I fall outside of the “recent-CS-grad” Venn chart, the whole subject appears as quite a large, impenetrable wall where I don’t really have much idea of where to begin or even what I need to be searching for to get me going.

Actually, Nvidia has made some good tutorials on OpenCV (and Nvidia’s accelerations of the same). You can view them on their YouTube channel or at https://developer.nvidia.com/embedded/learn/tutorials and, while they’ve not made it trivially easy, anyone who really wants to play around with OpenCV can learn how to do so there.

And several decent CUDA programming books out there. And no reason to make it TX2 specific. High level of inter-compatibility provided your desktop has a CUDA based GPU. You very likely have all the hardware needed to get started in front of you.

Were you running arm64 on the rpi3 for benchmarking?

no, 6502.

“Even though this is a Mini-ITX-sized board, it’s still huge for any application where the Jetson makes sense.”

OK, maybe– referring to my earlier comment about turning MIDI into PCM– for a pseudo-random comparison, have you ever seen an M1R? It ain’t ITX, more like a 2U server… this board would use… (checks the M1R badge, 13W) a smidgen more power and would deliver absurdly much more in the way of possible expression. However the BT, Wifi, etc are all but useless so probably it’s just too much tool for the job anyway. Still, the module by itself on a custom board with a proper PCI-E audio interface seems like a nice daydream.

this is overkill for audio production work. even doing physical modeling you’ll achieve 8 or more poly note count on an rpi3.

if you want to make something like a synth just do what korg did after the m1r and marry a standard cpu/motherboard to a touch screen and run softsynths. call it the m3 or oasys or khronos etc…

There’s the OP-1:

https://www.teenageengineering.com/products/op-1

https://www.youtube.com/watch?v=YPCxE6Gy4Cs

And that is just a tiny BGA SHARC DSP running the show…

Softsynth is the whole entire point. It’s not overkill, I promise… and the synth in question isn’t some poorly written, terrifically sloppy CPU waster either. It’s hungry. Standard CPU/motherboard is what everyone already does, or a laptop, though people are also using it on their RPi with predictably limited effectiveness. My case isn’t audio production anyway, it’s about making an instrument. I’d intended to take a thing with me, together with whatever MIDI controller, instead of relying on a laptop and having to put it somewhere and having to keep its air vents unobstructed and… please, no touchscreens kthx.

Sorry, I realized too late the difference between audio production (generation) and music production (with sessions), which ITYM the first time, but you probably didn’t. Obviously my would-be instrument is a case of audio generation, no DAW in sight; that’s all I wanted. Then I had to wait for my comment to show up, or else this explanation would seem insane.

If you just wanna dink around with audio, an older generation with a few CUDA cores is probably enough. Like, pick up a GT 420 at a yard sale or off eBay for $10. 48 cores.

I’d have thunk this would be better on a Parallella board or something like that, but…

Oh wait, potential audiophile thing.

No, you want the Titan X… actually 5 of them, in a rack.

Haha, not that far gone yet… the problem with older hardware is its hard limits on CUDA compute capability / OpenCL version. I would need C11-style atomics, but IIRC those need at least OpenCL 2.0, which is also the version where kernels can launch kernels. The CUDA equivalent is called ‘dynamic parallelism’, and that requires Kepler (compute capability 3.5) or better, so for that, their OpenCL library will never work for me: I only have a Fermi atm. And it doesn’t matter anyway, because Nvidia loves CUDA and wants you to love CUDA, and their OpenCL lib is languishing on 1.2 no matter which hardware. The weird thing is, the first time I heard OpenCL 2.1 was a thing, it was their own guy referring to it, while giving a big talk about Vulkan and friends.

I was looking at Parallella boards just a bit ago. They are interesting, even more so if one can get low-latency audio from them. I2S would be way nicer than the micro HDMI; I’ll have to see…

Yah, the GT 4x0 series is CUDA 2.1… I think even the 9 series (9x00) had 2.0. I have a 32-bit PCI 9400 GT in one system just for a smidgen of CUDA, 16 cores’ worth, and it’s still 10x as fast as the CPU. During some memory-jogging wikisurfing, I see PhysX wasn’t meant to work on fewer than 32 cores, but it did. I had to run a specific driver, though, so it didn’t argue with the main Radeon GPUs.

Argh, fat-fingered the report button. Was just trying to say it’s kinda surprising but neat that it worked at all. And I realize it’s kinda silly that I basically said “this thing is new and fast enough that I would like to use one, but I would necessarily end up using CUDA and I don’t want to, so maybe $(someday) when their CL lib has caught up, even though they have an explicit motivation not to do that, namely vendor lock-in.”

Hmmm, is there any other (cheaper ;)) ARM board that supports >4GB of RAM?

Most boards I have seen have 4GB or less, so they work well as a media center etc., but might get annoying rather quickly as a desktop replacement…

> If you want to build a Game Cube emulator, the TX2 is not for you.

Hey, that’s not fair. I want to do so…

In fact, I already did for the Nvidia Android TV, so I want to see the performance of the Denver cores. And I don’t want to deal with Android anymore ;) Could you do me a favor and run a few games, just to check the performance?

Umm, these statements seem to directly conflict: “Testing a self-driving car powered by the Jetson TX2 is also out of the question.” ; “This is a module that will power a self-driving car”

Yay! (for confusing readers???)

Yeah, that’s weird. The PX 2, which runs Tesla’s Autopilot, uses the same SoC as the TX2. So the second statement is more accurate, as it’s already true. The first, I think, was originally a contrast with the Raspberry Pi (you wouldn’t run a self-driving car on a Pi, but you could with a TX2), but that got edited into confusion.

Probably because the PX2 is automotive qualified and the TX2 is not.

The market for the TX line is not small; it’s actually huge, as Nvidia is currently the only major house targeting automotive AI/AR/VR. Not so long ago, Volvo and Nvidia published a cooperation agreement targeting driverless cars.

Makerspaces are not the market this kit is targeted at, but as stated in the article, this is a tool for system designers and ODM/OEM folks targeting automotive/aerospace/industrial control.

Quite an impressive chip, and a nice carrier, though.

Soooooo, where are the CUDA benchmarks?

Uh … where can you get the Samtec connector for $5.50?

Cheapest I see is $24.72: https://octopart.com/search?q=SEAM-50-02.0-S-08-2-A-K-TR

Even Samtec themselves say that the 1ku price is $14.51 …

Typical Hackaday commenter who doesn’t do his research…

That’s from the Nvidia Jetson TX1/TX2 supported component list. The price, “Should not exceed 5.545 USD… in quantity one.”

Actually, he DID do the research. He checked directly with the manufacturer and with distributors. Unfortunately, Nvidia is wrong in that document, it’s simply NOT available at those prices.

oy. Now it would be nice to see what one cost whenever that document was drafted, before all of this perceived demand.

Because I can’t post emoji here: rolls-eyes-emoji… I literally posted an Octopart link AND checked with the manufacturer.

If you find anyone selling the connector for $5.545, I will shit in my hat and wear it. And if (when) you don’t, I’ll be shitting in your hat.

Also… maybe you want to check that forum link where you got the part number again, and check the name of the commenter who was asking about it…

Tip: you forgot to activate the Denver cores. The benchmark you ran used the A57 cores only. I had the same problem originally.

Check here:

https://devtalk.nvidia.com/default/topic/1000345/two-cores-disabled-/?offset=4
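Before rerunning a benchmark, one quick sanity check is to read the kernel’s list of online CPUs. The sketch below parses the Linux sysfs CPU-list format (e.g. `0,3-5`) from `/sys/devices/system/cpu/online`. Treating CPUs 1 and 2 as the TX2’s Denver pair is an assumption based on the “two cores disabled” symptom in the thread above, so verify it against `/proc/cpuinfo` on your own board. Actually turning the cores on is done with Nvidia’s `nvpmodel` power-mode tool; see the linked thread for the details rather than this sketch.

```python
from __future__ import annotations

# Assumption: on the TX2, CPUs 1 and 2 are the Denver cores (0 and 3-5 are A57s).
DENVER_CPUS = {1, 2}


def parse_cpu_list(text: str) -> set[int]:
    """Parse a Linux sysfs CPU list such as '0,3-5' into a set of CPU indices."""
    cpus: set[int] = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            lo, hi = part.split("-")
            cpus.update(range(int(lo), int(hi) + 1))
        else:
            cpus.add(int(part))
    return cpus


def online_cpus(path: str = "/sys/devices/system/cpu/online") -> set[int]:
    """Read the set of CPUs the kernel currently reports as online."""
    with open(path) as f:
        return parse_cpu_list(f.read())


def denver_cores_online(cpus: set[int]) -> bool:
    """True if every (assumed) Denver CPU index is in the online set."""
    return DENVER_CPUS <= cpus


if __name__ == "__main__":
    try:
        cpus = online_cpus()
    except OSError:
        print("No sysfs CPU list found (not running on Linux?)")
    else:
        print("online CPUs:", sorted(cpus))
        print("Denver cores online:", denver_cores_online(cpus))
```

If this prints `online CPUs: [0, 3, 4, 5]`, the benchmark only ever saw the four A57 cores.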

It was basically a media chip used in media players.

I’m curious about the idea of making a ‘deep learning router’. Could this learn what ‘normal’ network activity looks like for a given device? Could it learn which devices on a network are IoT? Could it identify IoT devices and put them on a separate VLAN, as well as limit the internet communications of these devices to the bare minimum? Could the device be a self-configuring firewall? Could it be used to provide some of the benefits of having a network engineer in places that would probably never hire one?

It’s too expensive for consumer-level gear, but maybe it will get cheaper, and a design focused on the bare minimum needed for a router could get it closer.

This is spitballing for the most part, but I like the idea of a device that could understand what normal internet traffic looks like for me, as well as what’s abnormal, so I could investigate it and see if a device has been compromised.
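The “learn what normal looks like, flag what isn’t” part of this idea can be illustrated without any deep learning at all. The sketch below is a hypothetical, deliberately simple stand-in: it keeps a per-device running mean and standard deviation of traffic volume (Welford’s algorithm) and flags a sample that sits more than `threshold` standard deviations from that device’s baseline. The names `TrafficMonitor` and `bytes_per_min`, the 3-sigma threshold, and the warm-up length are all made up for illustration; a real TX2-based router would need actual packet capture and much richer features.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Baseline:
    """Running mean/variance of one device's traffic volume (Welford's algorithm)."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def std(self) -> float:
        # Sample standard deviation; zero until we have at least two samples.
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0


class TrafficMonitor:
    """Hypothetical per-device anomaly flagger using a z-score threshold."""

    def __init__(self, threshold: float = 3.0, warmup: int = 10):
        self.threshold = threshold  # how many standard deviations counts as abnormal
        self.warmup = warmup        # samples to learn from before flagging anything
        self.baselines: dict[str, Baseline] = {}

    def observe(self, device: str, bytes_per_min: float) -> bool:
        """Return True if this sample looks abnormal for this device."""
        b = self.baselines.setdefault(device, Baseline())
        abnormal = False
        if b.n >= self.warmup:
            sd = b.std()
            # With zero variance there is no scale to judge against, so don't flag.
            if sd > 0 and abs(bytes_per_min - b.mean) / sd > self.threshold:
                abnormal = True
        if not abnormal:
            b.update(bytes_per_min)  # only learn from samples that look normal
        return abnormal
```

Skipping the update for flagged samples keeps a compromised device from slowly teaching the monitor that its new behavior is “normal”, at the cost of never adapting to a legitimate step change.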

“go over to Samtec and give them the part number SEAM-50-02.0-S-08-2-A-K-TR. This part shouldn’t cost more than $5.50 in quantity one.”

Uugh… This aged like milk after the pandemic hit.

This thing is $48 Canadian in quantity one.