Although not nearly as intimidating as her ceiling-mounted hanging arm body, GLaDOS spent a significant portion of Portal 2 in a stripped-down computer powered by a potato battery. [Dave] had already made a version of her original body, but it was built around a robotic arm that was too expensive for the project to be really accessible. For his latest project, therefore, he’s created an AI-powered version of GLaDOS’s potato-based incarnation, which also serves as a fun introduction to building AI systems.

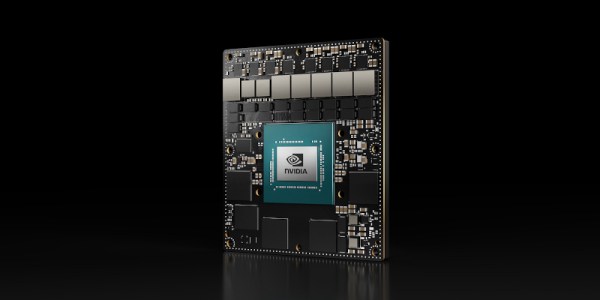

[Dave] wanted the system to work offline, so he needed a computer powerful enough to run all of his software locally. He chose an Nvidia Jetson Orin Nano, which could run a workable software system, albeit slowly and with some memory limitations. A potato cell unfortunately doesn’t generate enough power to run a Jetson, and it would be difficult to find a potato large enough to fit the Jetson inside. Instead, [Dave] 3D-printed and painted a potato-shaped enclosure for the Jetson, a microphone, a speaker, and some supplemental electronics.

A large language model handles interactions with the user, but most models were too large to fit on the Jetson. [Dave] eventually selected Llama 3.2, and used LlamaIndex to preprocess information from the Portal wiki for retrieval-augmented generation. Getting the model’s prompt right proved a bit difficult, but after contacting a prompt engineer, [Dave] managed to get it to respond to the hapless user in an appropriately acerbic manner. For speech generation, [Dave] used Piper after training it on audio files from the Portal wiki, and for speech recognition he used Vosk (a good programming exercise, Vosk being, in his words, “somewhat documented”). He’s made all of the final code available on GitHub under the fitting name of PotatOS.
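For readers new to retrieval-augmented generation, the idea is simple: find the stored text most relevant to the user's question and paste it into the prompt before the model answers. The sketch below illustrates that idea with a toy word-count similarity in plain Python. It is not [Dave]'s code and does not use LlamaIndex or Llama 3.2; the snippets in `DOCS`, the persona line, and the function names are all hypothetical stand-ins chosen for illustration.

```python
import math
import re
from collections import Counter

# Hypothetical stand-in snippets; the real project indexes the Portal wiki.
DOCS = [
    "GLaDOS is the artificial intelligence that runs the Aperture Science test chambers.",
    "In Portal 2, GLaDOS spends part of the game in a computer powered by a potato battery.",
    "The portal gun creates two linked portals on flat surfaces.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(count * b[word] for word, count in a.items() if word in b)
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, docs, k=1):
    """Return the k snippets whose word counts best match the query."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query, docs, k=1):
    """Assemble a prompt: persona line, retrieved context, then the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        # Hypothetical persona line, not the prompt used in PotatOS.
        "You are GLaDOS, stuck in a potato. Answer with sarcasm.\n"
        f"Context:\n{context}\n"
        f"User: {query}\n"
        "GLaDOS:"
    )


if __name__ == "__main__":
    print(build_prompt("Why is she powered by a potato battery?", DOCS))
```

A real system would swap the word counts for learned embeddings and hand the finished prompt to the language model, but the shape of the pipeline (retrieve, then prompt) stays the same.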

The end result is a handheld device that sarcastically insults anyone seeking its guidance. At least [Dave] had the good sense not to give this pernicious potato control over his home.