When the ESP32 microcontroller first appeared on the market it’s a fair certainty that somewhere in a long-forgotten corner of the Internet a person said: “Imagine a Beowulf cluster of those things!”.

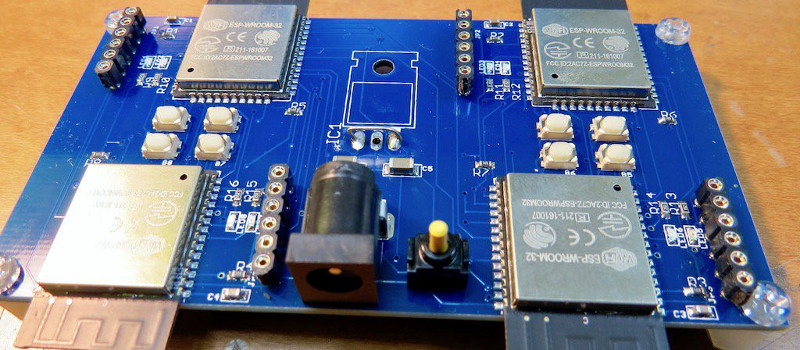

Someone had to do it, and it seems that the someone in question was [Kodera2t], who has made a mini-cluster of 4 ESP32 modules on a custom PCB. They might not be the boxed computers that would come to mind from a traditional cluster, but an ESP32 module is a little standalone computer with processing power that wouldn’t have looked too bad on your desktop within the last decade. The WiFi on an ESP32 would impose an unacceptable overhead for communication between processors, and ESP32s are not blessed with wired Ethernet, so instead the board has a parallel bus formed by linking together a group of GPIO lines. There is also a shared SPI SRAM chip with a bus switchable between the four units by one of the ESP32s acting as the controller.

You might ask what the point is of such an exercise, and indeed as it is made clear, there is no point beyond interest and edification. It’s unclear what software will run upon this mini-cluster as it has so far only just reached the point of a first hardware implementation, but since ESP32 clusters aren’t exactly mainstream it will have to be something written especially for the platform.

This cluster may be somewhat unusual, but in the past we’ve brought you more conventional Beowulf clusters such as this one using the ever-popular Raspberry Pi.

The datasheet for the ESP32 does indeed show an Ethernet MAC interface using either the MII or RMII specifications. I don’t know how well the ESP32 software supports wired Ethernet, but the chip appears to support 10/100 full- or half-duplex comms with VLAN support.

Couple that with a 5-port Ethernet switch chip that also supports MII or RMII, and you could probably get away with a board containing 4 ESP32s connected via a switch, with only a single PHY and magnetics for an uplink port.

I was thinking the same but in reality a switch for each set of 4 would be a real problem full experience

I say SPI or parallel locally on each board, a single module having ethernet (probably with a phy and magnetics for simplicity)

But in reality this whole thing is silly … China produces cheaper ARM chips with similar specs without all of that WiFi and FCC overhead

There are switch chips with 7 or more ports on them, and the chip costs aren’t exactly crazy expensive, ~$10-$15/ea

On the other hand a setup like this could become a building block for a wifi based phased array transceiver/network.

I’ve seen several people mention how the ESP8266 and now the ESP32 are such capable and inexpensive little processors, even ignoring the Wifi capabilities.

So… what chips, dev boards or SoC’s would anyone suggest that are as complete and easy to use as the ESP boards produced by AIThinker, WeMos, etc? (other than Arduinos)

I need a way to process lots of text in parallel. I think a cluster would work faster than the mere 8 cores on my laptop.

I’m learning Chinese, and I wrote http://pingtype.github.io to put spaces between the words, pinyin, and literal translation of each word. The slowest part is word spacing.

The algorithm is simple: read 5 characters. Is it in the dictionary? No -> read 4 characters. Is that in the dictionary? No -> 3 characters. No -> 2 characters. Yes! -> Copy to the output string, add a space, move along 2 characters. Read 5 characters, etc.
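The steps described above amount to a greedy longest-match segmentation. Here is a minimal Python sketch of that idea, not the actual Pingtype code: the function name `segment`, the toy dictionary, and the rule that an unknown single character is emitted as its own word are all assumptions made for illustration.

```python
def segment(text, dictionary, max_len=5):
    """Greedy longest-match word spacing, following the steps described above."""
    words = []
    i = 0
    while i < len(text):
        # Try the longest window first, then shrink it one character at a time.
        for length in range(min(max_len, len(text) - i), 0, -1):
            candidate = text[i:i + length]
            # Assumption: a single character that is not in the dictionary is
            # still emitted as its own word, so the loop always moves forward.
            if length == 1 or candidate in dictionary:
                words.append(candidate)
                i += length
                break
    return " ".join(words)


# Hypothetical toy dictionary; a real one would be loaded from a file.
toy_dictionary = {"我", "学习", "中文", "学", "中"}
print(segment("我学习中文", toy_dictionary))  # prints: 我 学习 中文
```

Because the dictionary here is a Python `set`, each "is it in the dictionary?" check is a hash lookup rather than a scan, which may matter more for speed than the number of cores.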

The problem is, this is really slow on a single CPU. I can’t think of a way to run it in parallel on a GPU. But I can see how it would be done on a cluster. So I’d need a cheap, low-power cluster. Do you think the ESP32 one would be suitable? Or does someone have a better idea?

seems to me I/O is your bottleneck. does the dictionary fit in memory ? also, are we talking javascript ??

also there are lots of ways to speed up look-up’s into sorted and/or indexed tables.
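As a rough illustration of the two options mentioned here, this is a small Python sketch of a binary search over a sorted table next to a hash-based index. The helper name `in_sorted_table` and the sample words are hypothetical.

```python
from bisect import bisect_left


def in_sorted_table(table, word):
    """Binary search: O(log n) comparisons on an already sorted list."""
    i = bisect_left(table, word)
    return i < len(table) and table[i] == word


# Hypothetical sample data standing in for a real dictionary.
sorted_words = sorted(["中文", "学习", "我"])
index = set(sorted_words)  # hash index: roughly constant-time membership test

print(in_sorted_table(sorted_words, "学习"))  # True
print("你好" in index)                         # False
```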

provided the dictionary fits in GPU memory, they are the way to go for processing data in parallel, even if it’s just a lookup (see ethereum proof of work algorithm).

But it seems the GP’s algorithm is sequential, depending on the index of the previous output, so in its current state it cannot be used in parallel.

Seems like a cluster of standard computers would be easier, as those platforms are already around, with users and community support.

I have a cluster of cheap smartphones for altcoin mining. The performance per watt and performance per dollar is substantially better than x86 for that task.

What altcoin are you using this to mine?

Surprisingly this is my field when I’m not writing for Hackaday, I’ve done a lot of language processing work over the years. Formerly in the dictionary business.

The way I approach problems like this is to take the reasoning that storage space is almost limitless and very cheap these days, and that storage comes with a very fast hierarchical database system: the filesystem.

So I precompute a huge tree of lookups, every possible combination across my corpus, as an eyewateringly huge directory tree. In my case I use JSON files within those directories, and end up with countless small files.

Precomputing takes a long time. I’ve had corpora that take weeks to precompute. But in return I get lookups with no database layer to slow everything down, my lookups are as simple as filesystem paths. My stack of precomputed data can take up gigabytes, but disk space is so cheap that doesn’t matter.
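For readers who want to picture this, here is a minimal Python sketch of a filesystem used as a lookup table. It is an illustration under stated assumptions, not the layout described in the comment: the one-directory-per-character scheme, the `entry.json` file name, and the helper names are all hypothetical, and keys are assumed to contain only characters that are safe in file names.

```python
import json
import tempfile
from pathlib import Path


def entry_path(root, key):
    # Hypothetical layout: one directory level per character of the key,
    # with the precomputed result stored in a small JSON file at the end.
    return Path(root).joinpath(*key) / "entry.json"


def precompute(root, table):
    """Write every key/value pair out as its own small JSON file."""
    for key, value in table.items():
        path = entry_path(root, key)
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(json.dumps(value), encoding="utf-8")


def lookup(root, key):
    """A lookup is just a path: no database layer, only the filesystem."""
    path = entry_path(root, key)
    if not path.is_file():
        return None
    return json.loads(path.read_text(encoding="utf-8"))


with tempfile.TemporaryDirectory() as root:
    precompute(root, {"cat": {"pos": "noun"}, "cater": {"pos": "verb"}})
    print(lookup(root, "cat"))  # {'pos': 'noun'}
    print(lookup(root, "dog"))  # None
```

The trade-off is the one described above: the slow part moves into `precompute`, and each later lookup costs a single file read.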

Not necessarily the way to do it, but a way to do it.

That is a very special altcoin, then. ARM as used in smartphones really isn’t meant for optimum number crunching operations per watt. Server CPUs, however, are highly geared towards that. Also, that altcoin is then optimized for low memory bandwidth, something smartphone CPUs are (understandably) bad at.

It wouldn’t be difficult to get a x86 system that performs better, but can you find a x86 system that can outperform 4 Snapdragon 410s while costing less than $70 and using less than 10W? I haven’t even mentioned the emulation overhead yet…

A Ryzen 1700X is equivalent to 34 of those Snapdragon 410s.

If you calculate in the extra cost for networking hardware cables etc then it’s not worth it, let alone the effort to write software that runs on a cluster.

Unless the next generation of SBCs suddenly gets at least 5k on geekbench or whatever it’s never going to be cost effective and even then your time is still worth more than the hardware.

But that’s boring and power hungry :P

Boring is just like, your opinion, man.

Regarding power-hungry: That’s a somewhat common misconception. These small CPUs are **not** more power-efficient for number crunching than beefy x86. On the contrary. Their OPS/W is rather bad. But: they have so little performance that they use very little power. That’s what they were optimized to do.

So, if you need to calculate much and spend little on electricity, a proper workstation/server/laptop CPU would fare better.

GPUs are even much much more power-efficient than such CPUs, on the small subset of problems that work well on GPU architectures. You simply can’t do much branching on a GPU.

Several people have referenced ARM so far… just a reminder ESP32 is a dual core Tensilica Xtensa LX6.

I am thinking of a secure random number generator. The four devices each generate their own random number; if someone outside the box is trying to influence the generated number by controlling the ambient electric noise used to seed the systems, then they will create the same number. Or at least the four numbers being generated would show a pattern if the system was under attack. Just an idea, could be rubbish. :)

Jenny, that’s simply awesome. I guessed you were smart but it’s always nice to have proof. As an old DSP guy from the days when we looked for any way possible to trade memory for cycles, I get it.

I hope this is a misplaced reply to my comment above about corpora and not to the ESP cluster. If so, thanks. :)

It only works when you say “Imagine a Beowulf Cluster of…”

To be fair, I do in the text

I can imagine there being value in it if you needed to be able to operate on or monitor 4 different wifi channels at once.